Another option would be to not lie because you think it’s cool to.

Just because yours is genuine doesn’t mean theirs can’t also be. That’s the beauty of LLMs. They’re just stochastic parrots.

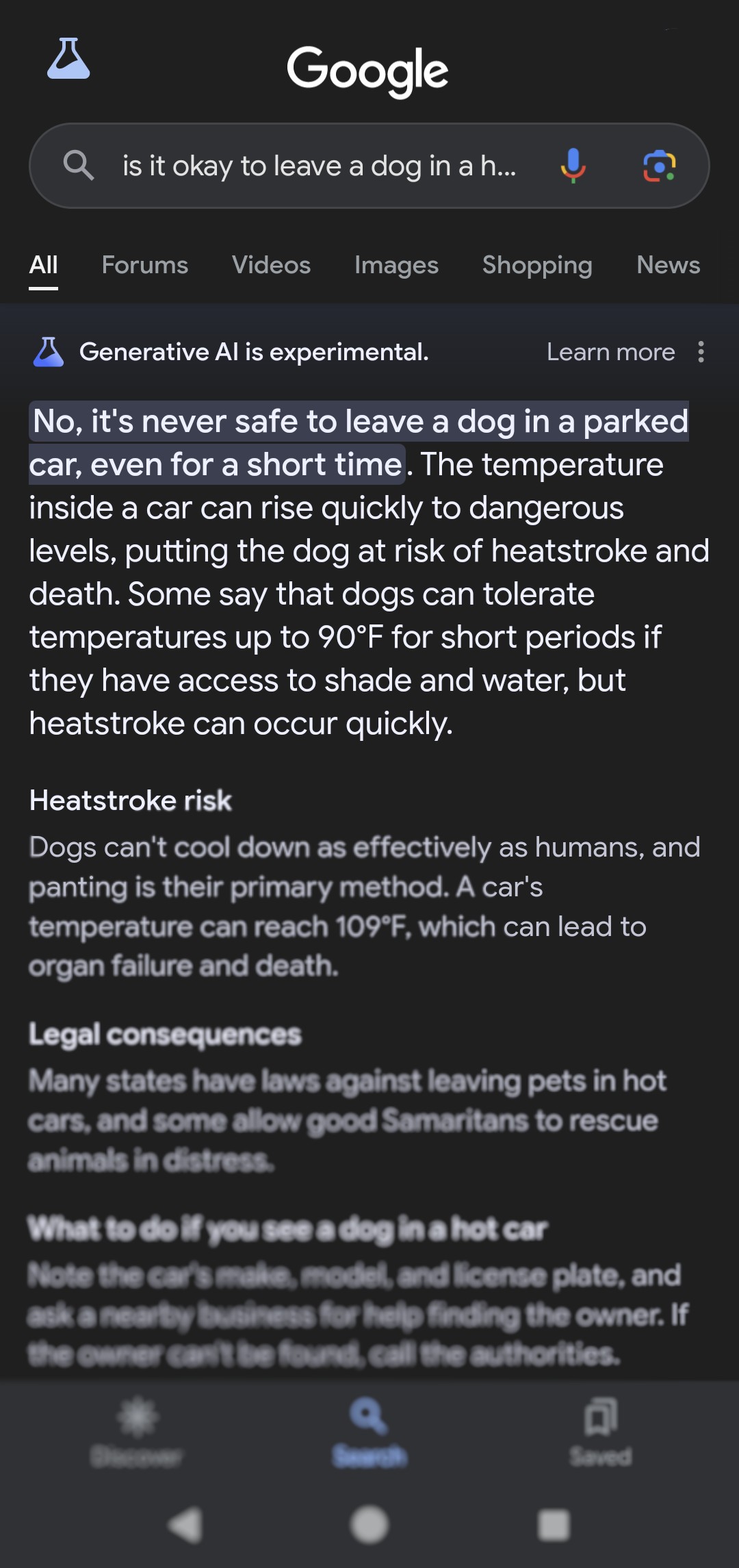

Yeah, maybe. It’s just that after seeing several posts like this and never being able to reproduce them, I think people are just mad at Google

Well, the usual pattern is that there will be one genuine case, the pizza story for example, and the rest are usually fakes or generated memes. I just enjoy them the way I enjoy a meme.

shoutout to the multiple people flagging this post as misinformation 😂

(I don’t know or care if OP’s screenshot is genuine, and given that it is in /c/shitposting it doesn’t matter and is imo a good post either way. and if the screenshot in your comment is genuine that doesn’t even mean OP’s isn’t also. in any case, from reading some credible articles posted today on lemmy (eg) I do know that many equally ridiculous google AI answer screenshots are genuine. also, the song referenced here is a real fake song which you can hear here.)

Mine is genuine, take it or leave it

I often find these kinds of posts to not be reproducible. I suspect most are fake

Depends on the temperature in the LLM/context, which I’m assuming google will have set quite low for this.

Temperature?

Yeah, it’s kind of a measure of randomness for LLM responses. A low temperature makes the LLM more consistent and more reliable; a higher temperature makes it more “creative”. The same prompt at a low temperature is more likely to be repeatable, while a high temperature introduces a higher risk of hallucinations, etc.

Presumably Google’s “search suggestions” are done at a very low temperature, but that doesn’t prevent hallucinations, it just makes them less likely.
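
For anyone curious, here is a rough sketch of what temperature does when the next token is picked. It is not Google’s code or any particular library’s API; the tokens, the scores and the function names are all made up for illustration. The idea is just that the model’s raw scores get divided by the temperature before being turned into probabilities.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities after dividing by the temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max so exp() cannot overflow
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_token(tokens, logits, temperature, rng=random):
    """Draw one token at random, weighted by the temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]


# Hypothetical next-token scores, invented for this example.
tokens = ["no", "maybe", "yes"]
logits = [2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.2)   # sharply peaked on "no"
high = softmax_with_temperature(logits, 2.0)  # much flatter distribution

if __name__ == "__main__":
    print("low temperature: ", [round(p, 3) for p in low])
    print("high temperature:", [round(p, 3) for p in high])
    print("sampled at 2.0:  ", [sample_token(tokens, logits, 2.0) for _ in range(5)])
```

At the low setting almost all the probability sits on the top-scoring token, so the same prompt tends to give the same answer. At the high setting the unlikely tokens get picked a lot more often, which is why screenshots can be hard to reproduce.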

Let’s not forget that the Beatles advocated for babies driving cars.

Removed by mod

Interesting a mod removed this…

7°C (approx. 4,000,002 °F) for a dog is equivalent to 1°C (approx. 0.32 °F) for a human, so it makes sense

Wouldn’t even recommend leaving hot dog or up dog in a hot car.

What’s hot dog?

My wife, but please don’t call me that!

Golf clap

Nothing much, What’s up dog?

I still prefer the Rolling Stones’ “Put that baby in boiling water”, no disrespect to the Beatles.

Was that on the “Let’s huff gasoline!” album?

Just because you don’t remember a song, it doesn’t mean it doesn’t exist.

Wild. I don’t trust the internet anymore and can’t tell if it’s real

It’s definitely real. But in a more honest sense, it’s definitely not by The Beatles.

What is “real” anyway?

According to The Beatles, nothing is real.

AI

Poe’s Law might just save humanity.

Or doom it.

Seems appropriate.

We’re living in the Idiocracy timeline, so I think we’re doomed. We got what plants crave, though.

Forced production of unwanted children, coupled with the progressive destruction of public schools is certainly expediting that vision. Brought to you by Carl’s Jr.

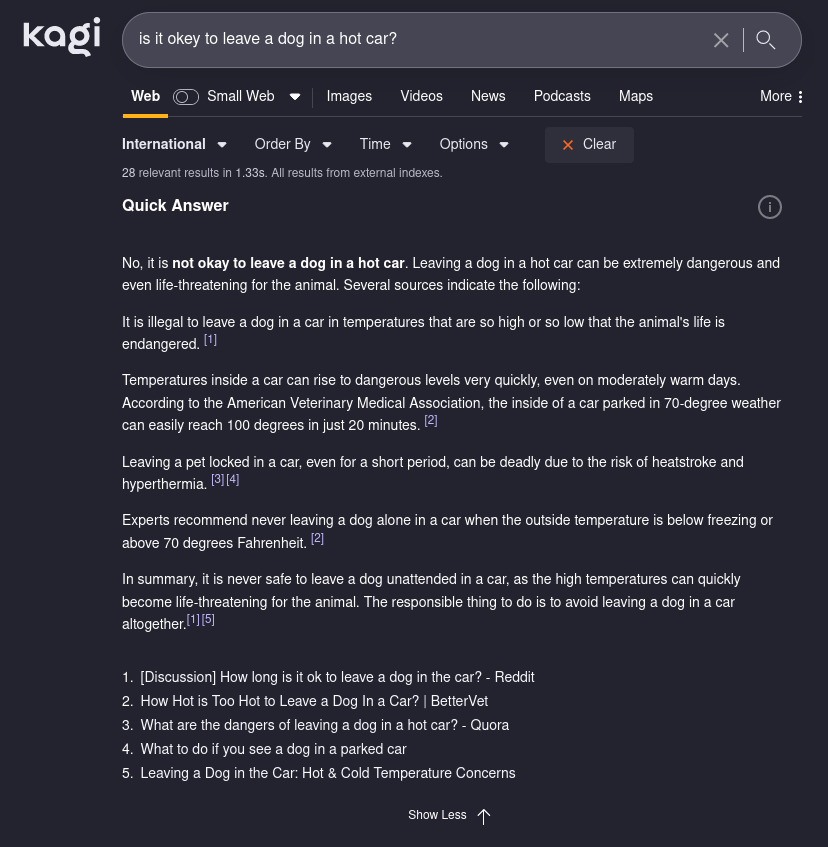

Kagi got it right.

Is this real? Holy shit

It’s hexbear so I assume they’re shitposting

Removed by mod

Google’s Bing-ification

I read the song at the end in the voice of Frank Reynolds

Hello, Faux News? I do declare a moral panic

lol at all the people taking this at face value/spreading disinformation.

The irony! The hypocrisy…

Here’s some good info about what disinformation campaigns are like: https://tinyurl.com/2h59xndc

Dismiss Distort Distract Dismay