- cross-posted to:

- politics

- [email protected]

Summary

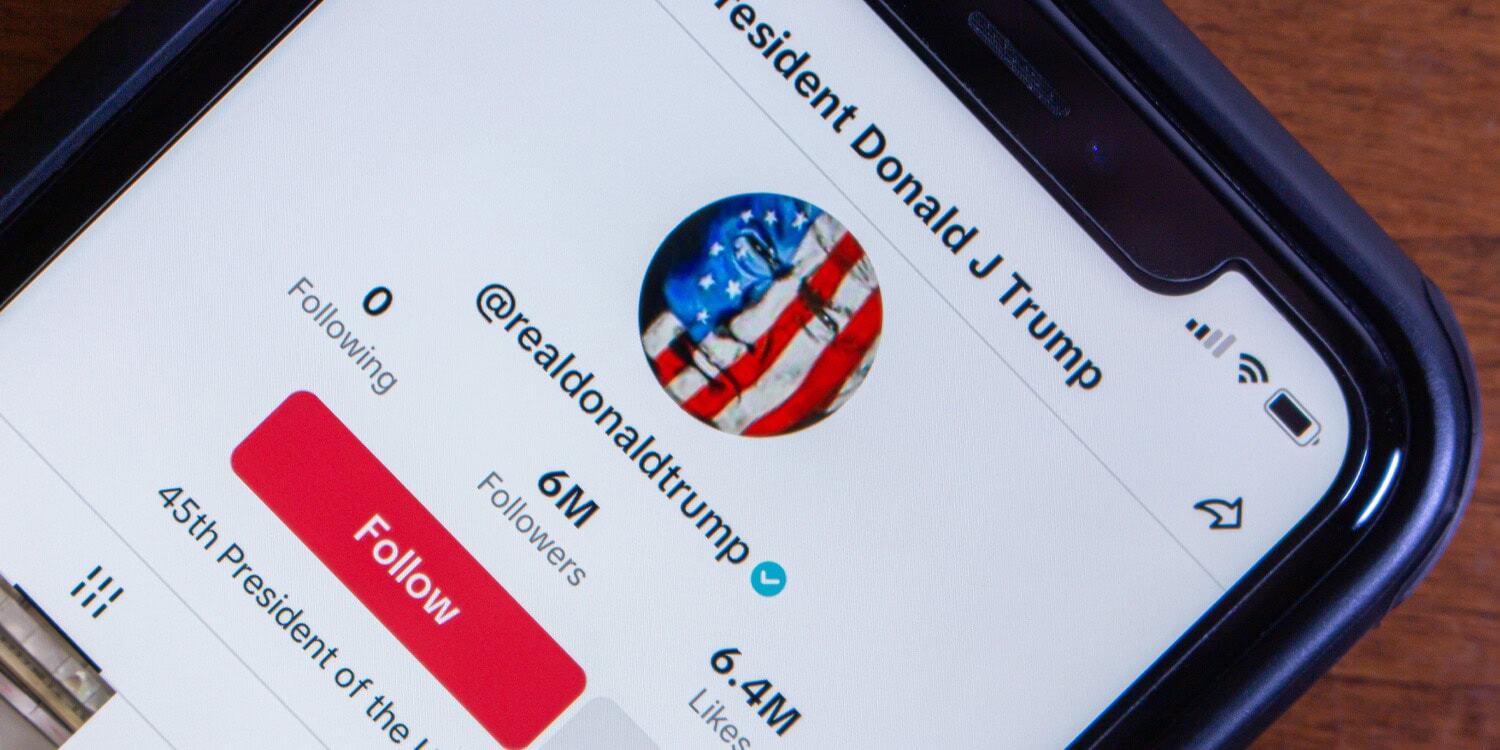

A study found that TikTok’s recommendation algorithm favored Republican-leaning content during the 2024 U.S. presidential race.

TikTok, with over a billion active users worldwide, has become a key source of news, particularly for younger audiences.

Using 323 simulated accounts, researchers discovered that Republican-leaning users received 11.8% more aligned content than Democratic-leaning users, who were exposed to more opposing viewpoints.

The bias was largely driven by negative partisanship, with more anti-Democratic content recommended.

The intent on, e.g., YouTube is to optimise for views. Radicalisation is an emergent outcome, a result of more combative, controversial, and flashy content being more captivating in the medium term. This is documented to some extent in Johann Hari’s book Stolen Focus, where he interviews a couple of insiders.

So no, the stated intent is not the bias (at least initially). The bias is a pathological outcome of optimising for ads.

But looking at some of Meta’s intentional actions more recently, it seems like maybe it can become an intentional outcome after the fact?