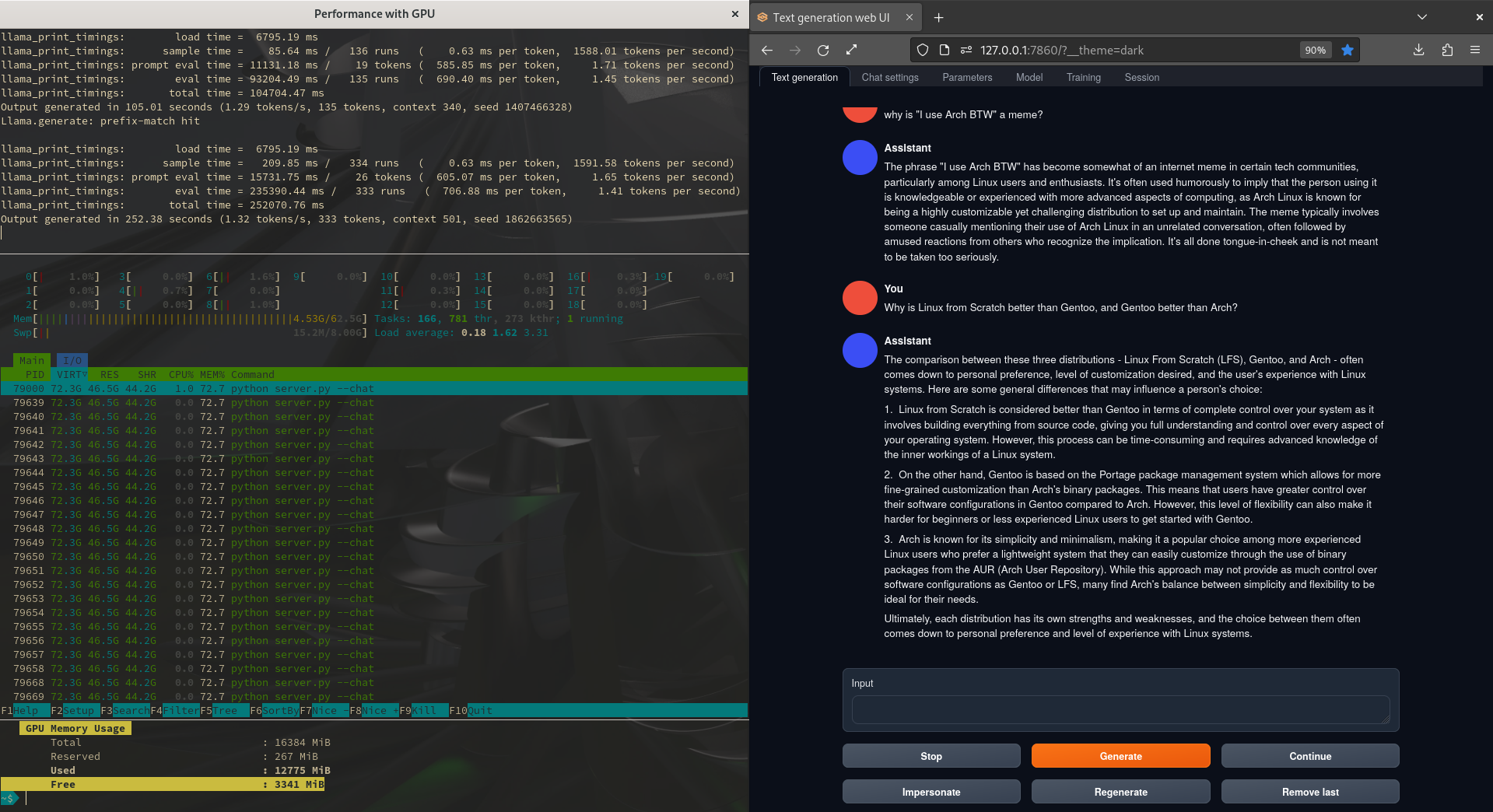

Good news: I got it running on the GPU after reloading the CUDA libraries. The bad news is that the GPU layers are HEAVY (for lack of a better term). I could only offload 16 layers to the GPU; if I add any more, the model crashes as soon as I enter a prompt, even with an empty context history. As the numbers pictured show, even with a 16 GB VRAM 3080 Ti, this still requires more than 32 GB of system memory.
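As a rough back-of-the-envelope sketch of why offloading only some layers still leaves most of the model in system RAM: assuming every layer is the same size, and ignoring the KV cache, context buffers, and CUDA runtime overhead (all of which add real memory on top), the weights split linearly between GPU and CPU. The function name and the example sizes below are hypothetical, not measured from this setup.

```python
def split_model_memory(model_size_gb, n_layers, gpu_layers):
    """Estimate (vram_gb, ram_gb) for the weights only, assuming equal-size layers.

    Hypothetical helper: ignores KV cache, context buffers, and runtime overhead.
    """
    if n_layers <= 0:
        raise ValueError("n_layers must be positive")
    if not 0 <= gpu_layers <= n_layers:
        raise ValueError("gpu_layers must be between 0 and n_layers")
    per_layer_gb = model_size_gb / n_layers
    vram_gb = per_layer_gb * gpu_layers
    return vram_gb, model_size_gb - vram_gb


# Hypothetical example: a 40 GB quantized file with 80 layers, 16 of them offloaded.
vram, ram = split_model_memory(40.0, 80, 16)
print(f"GPU: {vram:.1f} GB, system RAM: {ram:.1f} GB")  # prints "GPU: 8.0 GB, system RAM: 32.0 GB"
```

Real usage runs higher than these weight-only figures, since the context and runtime buffers also need VRAM, which may be part of why the offloadable layer count tops out sooner than the raw VRAM size would suggest.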

In practice the text output doesn’t feel significantly different with the GPU.

Last note: I prompted this model to do prefix (+ 3 3) and postfix (3 3 +) notation, aka Polish and Reverse Polish Notation ("normal" is infix notation, 3 + 3). This is the first model I've tried that gets it right every time, which should give you an idea of its flexibility and what to expect.
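For anyone who wants to score a model's answers to the same kind of prompt automatically, a small stack-based evaluator is enough. This is a minimal sketch assuming space-separated tokens, integer operands, and only the four basic binary operators; the function names are illustrative.

```python
import operator

# Supported binary operators (assumption: only these four appear in the prompts).
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def _tokens(expr):
    """Split on whitespace after turning parentheses into separators, so '(+ 3 3)' works."""
    return expr.replace("(", " ").replace(")", " ").split()


def eval_postfix(expr):
    """Evaluate Reverse Polish (postfix) notation, e.g. '3 3 +', scanning left to right."""
    stack = []
    for tok in _tokens(expr):
        if tok in OPS:
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[tok](left, right))
        else:
            stack.append(int(tok))
    if len(stack) != 1:
        raise ValueError(f"malformed postfix expression: {expr!r}")
    return stack[0]


def eval_prefix(expr):
    """Evaluate Polish (prefix) notation, e.g. '(+ 3 3)', by scanning right to left."""
    stack = []
    for tok in reversed(_tokens(expr)):
        if tok in OPS:
            left = stack.pop()
            right = stack.pop()
            stack.append(OPS[tok](left, right))
        else:
            stack.append(int(tok))
    if len(stack) != 1:
        raise ValueError(f"malformed prefix expression: {expr!r}")
    return stack[0]


print(eval_prefix("(+ 3 3)"), eval_postfix("3 3 +"))  # prints "6 6"
```

Nested Lisp-style input such as `(+ (* 2 3) 4)` also works, because with fixed two-argument operators the parentheses carry no extra information; a malformed expression raises `IndexError` or `ValueError`.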

As I mentioned in a comment on yesterday's post about running this model CPU-only, this is a 12th-gen i7 with 20 logical cores and 64 GB of system memory, plus the RTX 3080 Ti. Specifically, it's a Gigabyte Aorus YE5. Not the best Linux machine due to some proprietary nonsense, but it is manageable.

Do you have a guide you followed?

I just followed the README.md. There is also some extra documentation in the doc folder of the webui's git repository. I'm exploring much deeper into the code base than most users ever will, and I'm too far down the rabbit hole to give explicit directions on how I got here. You are not likely to hit the same issues I have: I'm trying to run many things with overlapping dependencies in parallel and containerize all of them, and it's my first time using containers at this scale. I'm not naturally talented at anything in life, including this effort. I barely have it working, and I failed to get several 70B models working before this one.

The main key for the installation is to be sure you are in the correct conda environment when you run `pip install -r requirements.txt`. If for any reason conda fails to switch into `textgen` and falls back to the base system, or issues any warnings about 'reverting to the base system', you need to sort that out and get the `textgen` environment active before installing.
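A cheap guard against installing into the wrong place is to confirm the active environment first. This sketch assumes the environment is named `textgen` (as above) and relies on the `CONDA_DEFAULT_ENV` and `CONDA_PREFIX` variables that `conda activate` sets; it installs through `sys.executable -m pip` so packages land in the same interpreter that ran the check. The helper names are hypothetical.

```python
import os
import subprocess
import sys

# Assumption: the environment name used in the post.
EXPECTED_ENV = "textgen"


def check_env(env, expected, interpreter_prefix):
    """Return a list of problems; an empty list means the environment looks right."""
    problems = []
    active = env.get("CONDA_DEFAULT_ENV")
    if active != expected:
        problems.append(f"active conda env is {active!r}, expected {expected!r}")
    conda_prefix = env.get("CONDA_PREFIX")
    # The running Python should live inside the activated env, not the base/host install.
    if conda_prefix and os.path.realpath(conda_prefix) != os.path.realpath(interpreter_prefix):
        problems.append(f"python at {interpreter_prefix!r} is not inside {conda_prefix!r}")
    return problems


def install_requirements(path="requirements.txt", expected=EXPECTED_ENV):
    """Run `pip install -r <path>` with this interpreter, but only inside the expected env."""
    problems = check_env(os.environ, expected, sys.prefix)
    if problems:
        raise SystemExit("Not installing:\n  " + "\n  ".join(problems))
    subprocess.run([sys.executable, "-m", "pip", "install", "-r", path], check=True)
```

Call `install_requirements()` after `conda activate textgen`; if conda silently left you in base, it stops with a message instead of installing into the wrong interpreter.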

The way the conda/Python requirements are set up seems (IMO) to assume you have some tools that are present in most Linux distros. In a default distrobox container, the distro image is much smaller, so I've had to manually add things like GCC support for C and C++ to most of my containers for AI stuff. If you just run everything in a conda environment on the host/base system, you won't have the same problems I've had. I am planning ahead for situations where I may want to modify the software and may need additional layers of dependencies outside of both conda and the base/host.
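In a slim container image, a quick pre-flight check for the build tools pip may need when a package has to compile from source can save a failed install. This is a sketch with an illustrative tool list; which tools a given requirements file actually needs varies.

```python
import shutil

# Illustrative list: C and C++ compilers plus make, commonly needed when pip builds native extensions.
REQUIRED_TOOLS = ("gcc", "g++", "make")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools from `tools` that are not found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


missing = missing_tools()
if missing:
    print("Missing build tools:", ", ".join(missing))
else:
    print("Build toolchain looks present.")
```

On Debian/Ubuntu-based images, `build-essential` provides all three; on Fedora-based images, the `gcc`, `gcc-c++`, and `make` packages do.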

All that said, the notes for each model posted on Hugging Face, combined with the number of downloads, stars, and comments, indicate what is likely to work in practice.