I’ve linked the original Reddit post, but its instructions didn’t work for me as written. I had to take a bunch of extra steps, so I’ve written a tutorial. The original instructions are quoted below for reference, so you don’t have to visit Reddit. My revised tutorial with all the instructions follows this in the replies. Please post questions as a new post in this community; I’ve locked this thread so that the tutorial remains easily accessible.

Zyin (original comment, 2 months ago):

Instructions on how to get this set up if you’ve never used Jupyter before, like me. I’m not an expert at this, so don’t respond asking for technical help.

If you’ve never done anything that needs Python before, you’ll need to install Python (which comes with pip) and Git. Google for the download links. If you already have Automatic1111 installed, you already have pip and Git.
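If you’re not sure whether pip and Git are already set up, a quick check like the one below can tell you. This is my own minimal sketch, not part of the original instructions: it only checks that the pip module is importable by the Python you run it with, and that a git executable is on your PATH.

```python
import importlib.util
import shutil
import sys


def check_prerequisites() -> dict:
    """Report the Python version and whether pip and Git are available."""
    return {
        "python": sys.version.split()[0],  # e.g. "3.9.16"
        "pip": importlib.util.find_spec("pip") is not None,  # pip module importable?
        "git": shutil.which("git") is not None,  # git executable on PATH?
    }


if __name__ == "__main__":
    for name, status in check_prerequisites().items():
        print(f"{name}: {status}")
```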

Clone the repo. It will be cloned into the folder where you open the cmd window:

git clone https://github.com/serp-ai/bark-with-voice-clone

Open a new cmd window in the newly downloaded repo’s folder (or cd into it) and install it:

pip install .

Install Jupyter Notebook. It’s basically Google Colab, but run locally:

pip install jupyterlab (this one may not be needed, but I installed it anyway)

pip install notebook

If you are on Windows, you’ll need these to work with audio in Python:

pip install soundfile

pip install ipywidgets

You need to have PyTorch 2 installed. You can install it with this command (it will take a while to download and install):

pip3 install numpy --pre torch torchvision torchaudio --force-reinstall --index-url https://download.pytorch.org/whl/nightly/cu118

To check your current Torch version, open a new cmd window and type these in one at a time:

python

import torch

print(torch.__version__)  # mine says 2.1.0.dev20230421+cu118
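The version check can also be scripted. This is a sketch of my own; the is_torch2_or_newer helper is hypothetical and only parses the version string, so the helper itself works whether or not PyTorch is installed.

```python
import re


def is_torch2_or_newer(version: str) -> bool:
    """True if a version string like '2.1.0.dev20230421+cu118' has major version >= 2."""
    match = re.match(r"(\d+)\.", version)  # leading major version number
    return match is not None and int(match.group(1)) >= 2


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        print(torch.__version__, "OK" if is_torch2_or_newer(torch.__version__) else "too old")
        print("CUDA available:", torch.cuda.is_available())
```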

Now everything is installed. Create a folder called “output” in the bark folder, which will be needed later to prevent a permissions error.
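If you’d rather create the folder from Python (for example in a notebook cell), pathlib can do it. This is a minimal sketch: Jupyter runs a notebook with its working directory set to the folder containing it, so a relative name like "output" should land in the bark folder as long as the notebook sits there.

```python
from pathlib import Path


def ensure_folder(name: str = "output") -> Path:
    """Create a folder (relative to the working directory) if it doesn't exist yet."""
    folder = Path(name)
    folder.mkdir(parents=True, exist_ok=True)  # no error if it already exists
    return folder.resolve()


if __name__ == "__main__":
    print("Output folder:", ensure_folder("output"))
```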

Run Jupyter Notebook while in the bark folder:

jupyter notebook

This will open a new browser tab with the Jupyter interface. Navigate to /notebooks/generate.ipynb

This is very similar to Google Colab, where you run blocks of code. Click on the first block of code and click Run. If the code block has “[*]” next to it, it is still processing; just give it a minute to finish.

This will take a while and download a bunch of stuff.

If it manages to finish without errors, run blocks 2 and 3. In block 3, change the line to filepath = "output/audio.wav" (that is, remove the leading "/") to prevent a permissions-related error.
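Why the leading "/" matters: a path starting with "/" is absolute, so the file would be written at the top of the filesystem (or the top of the current drive on Windows) rather than inside the bark folder, which is likely where the permissions error comes from. Without it, the path is resolved relative to the working directory. A small illustration with pathlib:

```python
from pathlib import Path, PurePosixPath

# A leading "/" makes the path absolute: it points at the top of the filesystem.
print(PurePosixPath("/output/audio.wav").is_absolute())  # True
# Without it, the path is relative and is resolved against the working directory.
print(PurePosixPath("output/audio.wav").is_absolute())  # False
print(Path.cwd() / "output" / "audio.wav")  # where the file would actually be written
```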

You can get different voices by changing the voice_name variable in block 1. Voices are installed at: bark\assets\prompts
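To see which voices you have, you can list the .npz files in that folder. This is a sketch that assumes the value for voice_name is the file name without its extension (for example en_speaker_0), with a v2/ prefix for files in the v2 subfolder; the list_voices helper is hypothetical.

```python
from pathlib import Path


def list_voices(prompts_dir: str) -> list:
    """Return voice names (relative .npz paths without the extension) under prompts_dir."""
    root = Path(prompts_dir)
    return sorted(
        p.relative_to(root).with_suffix("").as_posix()  # e.g. "v2/en_speaker_0"
        for p in root.rglob("*.npz")
    )


if __name__ == "__main__":
    # Folder from the tutorial; adjust it to wherever your bark folder is.
    for name in list_voices("bark/assets/prompts"):
        print(name)
```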

For reference, on my RTX 3060 12GB it took 90 seconds to generate 13 seconds of audio. The voice models that come out of the box produce a robotic-sounding voice, not even close to the quality of ElevenLabs. The voice I created from my own voice using /notebooks/clone_voice.ipynb turned out terrible and was completely unusable; maybe I did something wrong there, I’m not sure.
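To put those numbers in perspective: 90 seconds of compute for 13 seconds of audio is roughly 7 times slower than real time on that card. The sketch below turns that into a rough estimate for longer clips, assuming generation time scales about linearly with audio length (an assumption, not something measured here).

```python
def realtime_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Seconds of compute needed per second of generated audio."""
    return generation_seconds / audio_seconds


def estimate_generation_time(audio_seconds: float, factor: float) -> float:
    """Rough estimate, assuming generation time grows linearly with audio length."""
    return audio_seconds * factor


factor = realtime_factor(90, 13)  # numbers from the RTX 3060 12GB run above
print(f"{factor:.1f}x slower than real time")  # 6.9x slower than real time
print(f"~{estimate_generation_time(60, factor):.0f} s for 60 s of audio")
```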

If you want to test voice cloning with your own voice and you record a sample with the Windows Voice Recorder, you can convert the .m4a file to .wav with ffmpeg (separate download):

ffmpeg -i "C:\Users\USER\Documents\Sound recordings\Recording.m4a" "C:\path\to\bark-with-voice-clone\
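If you have several recordings to convert, you could call ffmpeg from Python instead. This is a minimal sketch: it assumes ffmpeg is on your PATH, and the file names in the example comment are placeholders, not paths from the original post. ffmpeg picks the output format from the .wav extension.

```python
import shutil
import subprocess
from pathlib import Path


def build_ffmpeg_command(src: Path, dst: Path) -> list:
    """Build an ffmpeg command converting src (e.g. .m4a) to dst; format follows dst's extension."""
    return ["ffmpeg", "-y", "-i", str(src), str(dst)]  # -y: overwrite dst if it exists


def convert_to_wav(src: Path, dst: Path) -> None:
    """Run the conversion, raising if ffmpeg is missing or fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    subprocess.run(build_ffmpeg_command(src, dst), check=True)


# Example with placeholder paths:
# convert_to_wav(Path("Recording.m4a"), Path("input/audio.wav"))
```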

___

Disclaimer: I’m using Linux, but the same general steps should apply to Windows as well.

First, create a venv so we keep everything isolated:

python3.9 -m venv bark-with-voice-clone/

Yes, this requires **Python 3.9**; it will not work with Python 3.11.
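Once you’ve activated the venv (the source bin/activate step), you can confirm that you’re actually inside it and on Python 3.9 by running a few lines with python. This is my own sketch, not from the original post; it relies on the documented behaviour that sys.prefix differs from sys.base_prefix inside a venv.

```python
import sys


def in_virtualenv() -> bool:
    """True when running inside a venv created with `python -m venv`."""
    return sys.prefix != sys.base_prefix


def is_python39() -> bool:
    """This tutorial assumes Python 3.9 (it reportedly does not work on 3.11)."""
    return sys.version_info[:2] == (3, 9)


if __name__ == "__main__":
    print("venv active:", in_virtualenv())
    print("Python", sys.version.split()[0], "OK" if is_python39() else "(expected 3.9)")
```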

cd bark-with-voice-clone

source bin/activate

pip install .

When this completes:

pip install jupyterlab

pip install notebook

I needed to install more than what was listed in the original post, as each time I ran the notebook it would report another missing package. This is because the original poster already had these components installed, but since we are using a virtual environment, these extras are required:

pip install the following:

soundfile

ipywidgets

fairseq

audiolm_pytorch

tensorboardX
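Since each missing package only showed up as an error when the notebook reached it, you can check for all of them at once. This is a sketch using importlib.util.find_spec; it assumes the import names match the package names above (note that tensorboardX is case-sensitive).

```python
import importlib.util

# Import names of the extra packages listed above.
REQUIRED = ["soundfile", "ipywidgets", "fairseq", "audiolm_pytorch", "tensorboardX"]


def missing_modules(names: list) -> list:
    """Return the names the current Python interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED)
    if missing:
        print("pip install " + " ".join(missing))
    else:
        print("All extra packages are installed.")
```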

Now all the components should be installed. Note that I did not need to install numpy or torch as described in the original post.

You can test that it works by running

jupyter notebook

And you should see the Jupyter interface pop up in your default browser.

Now for a tricky part:

When I tried to run through voice cloning, I had this error:

--> 153 def auto_train(data_path, save_path='model.pth', load_model: str | None = None, save_epochs=1):

154 data_x, data_y = [], []

156 if load_model and os.path.isfile(load_model):

TypeError: unsupported operand type(s) for |: 'type' and 'NoneType'

This comes from the file customtokenizer.py in the directory bark-with-voice-clone/hubert/. To solve it, I plugged the error into ChatGPT and made some slight modifications to the code.

At the top I added the import for Union underneath the other imports:

from typing import Union

And at line 154 (153 before adding the import above), I modified it as instructed:

def auto_train(data_path, save_path='model.pth', load_model: Union[str, None] = None, save_epochs=1):

Compare to the original line:

def auto_train(data_path, save_path='model.pth', load_model: str | None = None, save_epochs=1):

That solved the issue, so we should be ready to go!
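Some background on why this happens: the str | None syntax for type hints (PEP 604) was only added in Python 3.10, and this tutorial uses Python 3.9, so evaluating that annotation raises the TypeError above. Union[str, None] is the older spelling of the same type, and so is Optional[str]. A small check you can run in the venv (the helper name is my own):

```python
import sys
from typing import Optional, Union


def pep604_unions_supported() -> bool:
    """True if `str | None` works at runtime (Python 3.10 and newer)."""
    try:
        str | None  # raises TypeError on Python 3.9 and older
    except TypeError:
        return False
    return True


print("Python", sys.version.split()[0], "supports str | None:", pep604_unions_supported())
# The fix used above is the same type as Optional[str], so either spelling works.
print(Union[str, None] == Optional[str])  # True
```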

You now need a wav file under 10 seconds long to train from. Apparently as little as 4 seconds works too. I won’t cover how to record or cut one in this tutorial.
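To check that a clip is under the 10-second guideline before training, the standard-library wave module can read the length of a .wav file. This is a sketch: wave only reads uncompressed PCM .wav files (which is what ffmpeg produces for .wav by default), and the example path is the input/audio.wav used later in this tutorial.

```python
import wave


def wav_duration_seconds(path: str) -> float:
    """Length of a PCM .wav file in seconds (frames / sample rate)."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def check_clip(path: str, max_seconds: float = 10.0) -> bool:
    """Print the clip length and return True if it is shorter than max_seconds."""
    duration = wav_duration_seconds(path)
    print(f"{path}: {duration:.1f} s")
    return duration < max_seconds


# Example: check_clip("input/audio.wav")
```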

For mine, I cut some audio from a clip of a woman speaking with very little background noise. You can use https://www.lalal.ai/ to separate a voice from background noise, but I didn’t need to in this case. I did when using a clip of Megabyte from ReBoot talking, which worked… mostly well.

I created an input folder to put my training wav file in:

bark-with-voice-clone/input

Now we can go through this next section of the tutorial:

Run Jupyter Notebook while in the bark folder:

jupyter notebook

This will open a new browser tab with the Jupyter interface. Click on clone_voice.ipynb

This is very similar to Google Colab, where you run blocks of code. Click on the first block of code and click Run. If the code block has “[*]” next to it, it is still processing; just give it a minute to finish.

This will take a while and download a bunch of stuff.

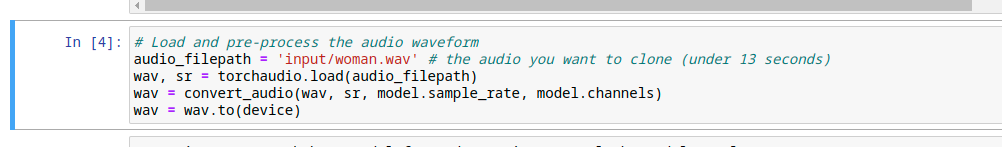

If it manages to finish without errors, run blocks 2 and 3. In block 4, change the line to: filepath = "input/audio.wav"

Make sure this line points to a valid file path and your audio file’s name (remove the leading "/" to prevent a permissions-related error).

Outputs will be found in:

bark\assets\prompts

Now you can move your voice over to the right bark folder in ooba to play with. You can also test the voice in the notebook by continuing through the code blocks; I’m sure you’ll be able to figure that part out by yourself.

To be able to select the voice, I actually had to overwrite one of the existing English voices, because my voice didn’t appear in the list.

Overwrite en_speaker_0.npz (make a backup of the original if you want) in one of these folders:

oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/v2

oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/

Then select the voice (or the v2/ voice) from the list in bark in ooba.
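If you’d rather script the backup-and-overwrite step, something like the following would do it. This is a sketch: the replace_voice helper, my_voice.npz and the destination folder in the example are placeholders (point prompts_dir at one of the folders above), and it keeps a one-time .bak copy of the original en_speaker_0.npz before copying your file over it.

```python
import shutil
from pathlib import Path


def replace_voice(my_voice: Path, prompts_dir: Path, target: str = "en_speaker_0.npz") -> Path:
    """Copy my_voice over prompts_dir/target, keeping a one-time backup of the original."""
    dest = prompts_dir / target
    backup = dest.with_name(dest.name + ".bak")  # e.g. en_speaker_0.npz.bak
    if dest.exists() and not backup.exists():
        shutil.copy2(dest, backup)  # only back up the original once
    shutil.copy2(my_voice, dest)
    return dest


# Example with placeholder paths:
# replace_voice(Path("bark/assets/prompts/my_voice.npz"), Path("<ooba bark prompts folder>/v2"))
```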

If anyone suggests a place to upload the voices I created, I’ll do it and reply to this comment with the download link.

Actually, since comments would end up pushing the tutorial off the page, just create a new post in this community for questions!