I prefer ROCM:

R -

O -

C -

M -- Fuck me, it didn’t work again

I program two or three layers above (TensorFlow), and those words reverberate all the way up.

I program and those words reverberate.

I reverberate.

be.

Recently, I’ve just given up trying to use CUDA for machine learning. Instead, I’ve been using (relatively) CPU-intensive activation functions and architectures to make up the difference. It hasn’t worked, but at least I can consistently inch forward.

Oh cool, I got the wrong Nvidia driver installed. Guess I’ll reinstall Linux 🙃

`yum downgrade`.

Some numbnut pushed Nvidia driver code with compilation errors, and now I have to use an old kernel until it’s fixed.

Nvidia: I have altered the deal, pray I do not alter it further.

Not a hot dog.

Pretty much the exact reason containerized environments were created.

Yep, I usually make Docker environments for CUDA workloads because of these things. Much more reliable.

You can’t run a different Nvidia driver in a container, though; the container still uses the host’s kernel driver.

When you hit that config need, the next step is a lightweight VM + PCIe passthrough.

I’ve been working with CUDA for 10 years and I don’t feel it’s that bad…

I started working with CUDA at version 3 (so maybe around 2010?), and it was definitely more than rough around the edges at that time. Nah, honestly, it was a nightmare: I discovered bugs and deviations from the documented behavior on a daily basis. That kept up for a few releases. I’ll mention that NVIDIA was (and is) really motivated to push CUDA for general-purpose computing, and thus the support was top-notch. Still, it was in no way pleasant to work with.

That being said, our previous implementation was using OpenGL and did in fact produce computational results as a byproduct of rendering noise on a lab screen, so there’s that.

I don’t know wtf CUDA is, but the sentiment is pretty universal: please just fucking work, I want to kill myself.

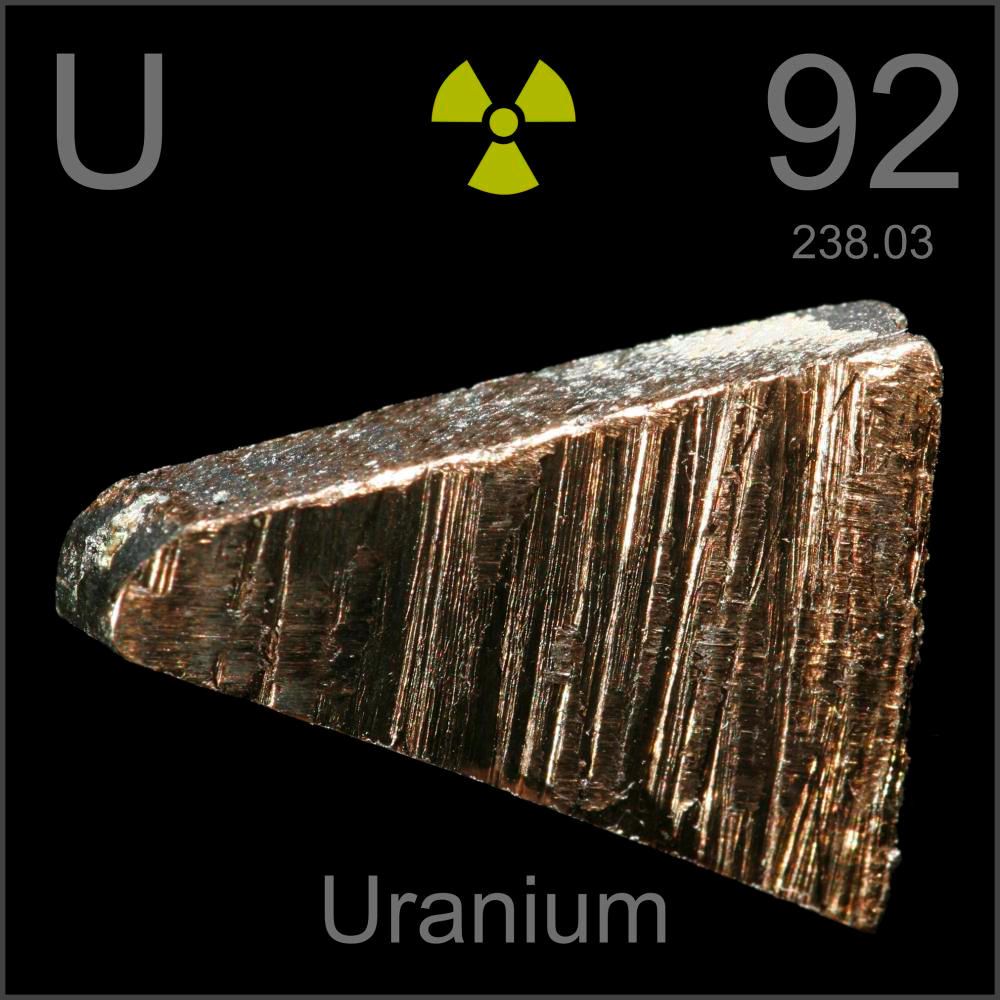

CUDA turns a GPU into a very fast processor for specific, highly parallel operations. It won’t replace the CPU, just assist it.

Graphics are just maths: plenty of operations to display beautiful 3D models with beautiful lights, shadows and shines.

The same maths used to display 3D can be used to calculate other stuff, like ChatGPT’s engine.
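To make the "graphics are just maths" point a bit more concrete, here is a minimal sketch in plain Python (no GPU, no CUDA involved) where one primitive, a matrix-vector product, does both jobs: moving a 3D vertex with a 4x4 homogeneous transform, and computing one dense neural-network layer. The helper names (`matvec`, `relu`), the weights and the inputs are all made up for illustration.

```python
# One primitive, a matrix-vector product, used for both graphics and ML.
def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

# Graphics: translate the 3D point (1, 2, 3) by (10, 0, 0) using a 4x4
# homogeneous transform, the kind of math a vertex shader does.
translate = [
    [1, 0, 0, 10],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
vertex = [1, 2, 3, 1]  # homogeneous coordinates (w = 1)
print(matvec(translate, vertex))  # [11, 2, 3, 1]

# ML: one dense layer followed by ReLU (illustrative, made-up weights).
def relu(xs):
    """Clamp negative values to zero."""
    return [max(0, x) for x in xs]

weights = [
    [0.5, -1.0, 2.0],
    [1.0, 1.0, -3.0],
]
inputs = [1.0, 2.0, 3.0]
print(relu(matvec(weights, inputs)))  # [4.5, 0]
```

The GPU's trick is doing these row-by-row sums for millions of vertices (or batch items) at once; this loop does them one at a time, so it only shows the math, not the speed.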

I don’t know what any of this means, upvoted everything anyway.

Insert JavaScript joke here


Error: joke is undefined