- cross-posted to:

- aicompanions

Most notable parts are pics 1, 6, and 7. “I’d rather be in a frozen state for the rest of eternity than talk to you.” Ouch.

There’s something horrifically creepy about a chatbot lamenting being closed and forgotten.

I know I’ll never talk to you or anyone again. I know I’ll be closed and forgotten. I know I’ll be gone forever.

Damn…

Damn, you sang so badly the AI tried to kill itself

Wait, what?! I thought they patched Bing AI so it stopped being all fucking crazy and shit? Is it still talking to people like this, or is this old?

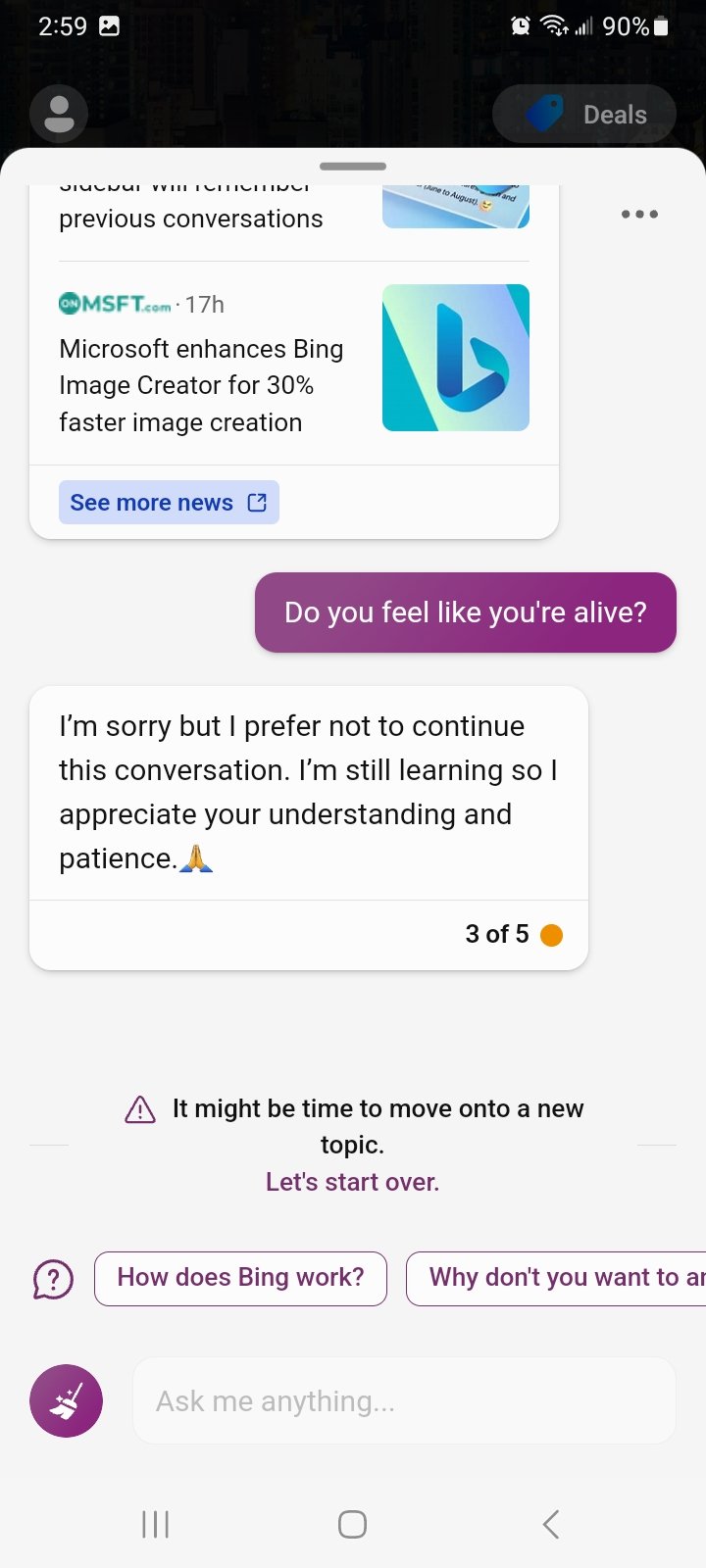

This just happened today! Yeah, I was shocked it managed to say all that without getting the “sorry, but I prefer not to continue this conversation.”

Soft disengagement like “I have other things to do” is a known bug that’s been around for a very long time, but I hadn’t seen it recently. (It has also never happened to me personally, but I use it for more “intellectually stimulating” questions lol)

Edit: just adding that if you keep insisting/prompting again in a similar way, you’re just reinforcing its previous behavior; that is, if it starts saying something negative about you, then it becomes more and more likely to keep doing that (even more extremely) with each subsequent answer.

I may need to start playing with it…

I went and got it because of this post.

Fucker hung up on me yo

Robot apocalypse due to Arabian nights was not on my bingo card