cross-posted from: https://lemmy.world/post/1408916

OpenOrca-Preview1-13B Has Been Released!

The Open-Orca team has released OpenOrca-Preview1-13B, a preliminary model from their effort to replicate Microsoft Research's Orca paper, trained on just 6% of their dataset. Fine-tuned on a curated set of 200k GPT-4 entries from the OpenOrca dataset, the model shows significant improvements in reasoning, with a total training cost under $200. This result hints at the exciting potential of fine-tuning on the full dataset in future releases.

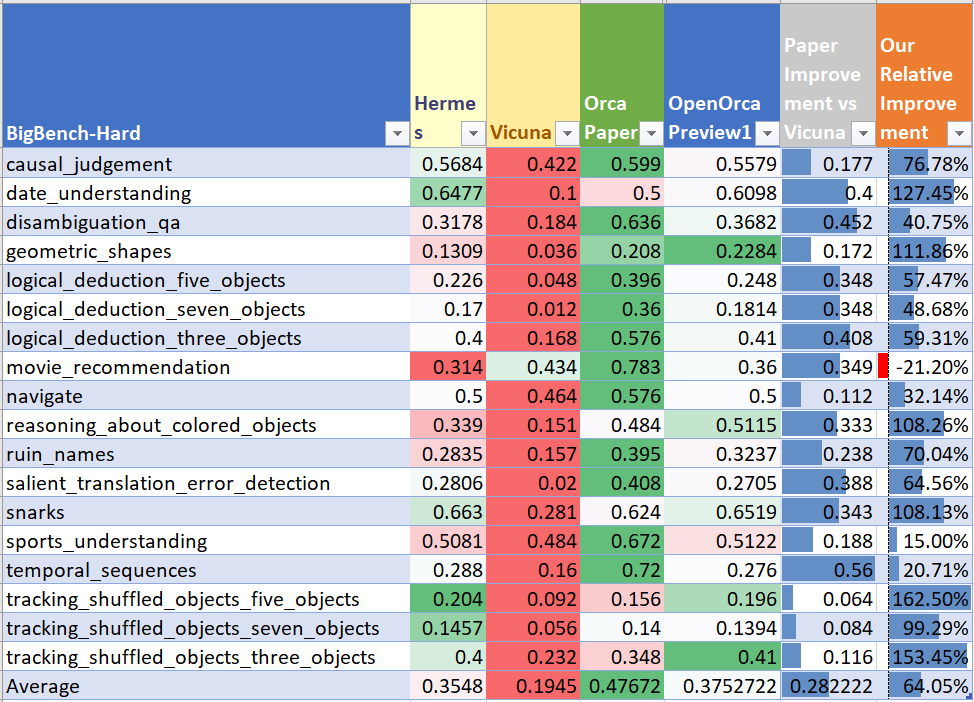

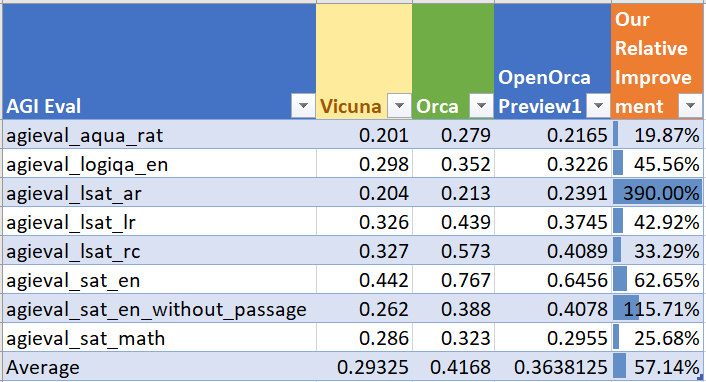

OpenOrca-Preview1-13B has shown impressive performance on the challenging BigBench-Hard and AGIEval reasoning tasks used for evaluation in the Orca paper.

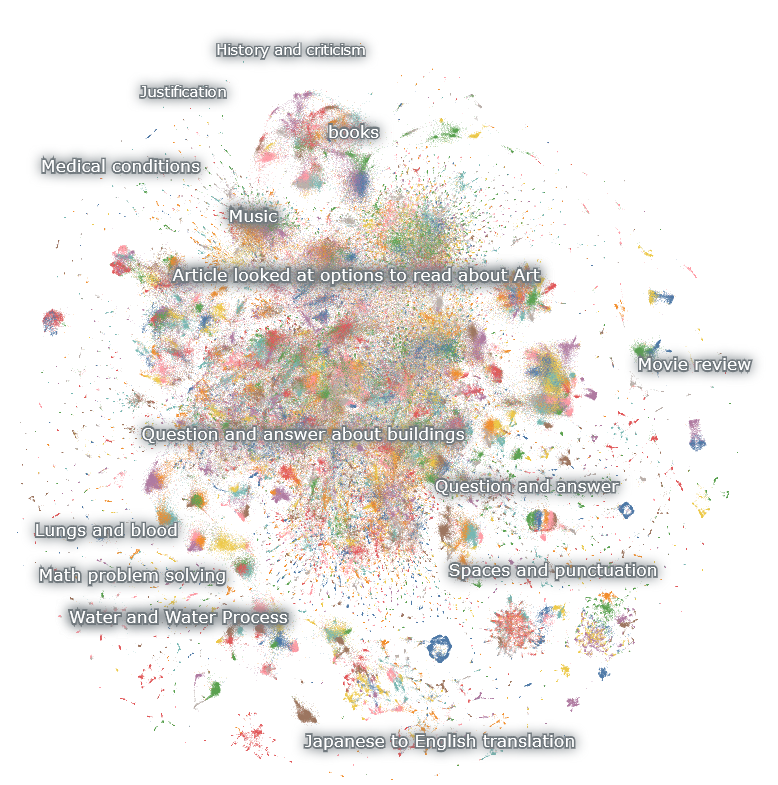

Even with a small fraction of the dataset, it achieved approximately 60% of the improvement reported in the Orca paper, an encouraging sign of how the approach may scale with more data. The Open-Orca team has also published a Nomic Atlas Dataset Map for visualizing their dataset, adding another layer of transparency and accessibility to their work.

I, for one, absolutely love Nomic Atlas. The data visualization is incredible.

Nomic Atlas

Atlas enables you to:

- Store, update and organize multi-million point datasets of unstructured text, images and embeddings.
- Visually interact with embeddings of your data from a web browser.
- Operate over unstructured data and embeddings with topic modeling, semantic duplicate clustering and semantic search.

You should check out the Atlas for Open Orca. Data is beautiful!

Here are a few other notable metrics and benchmarks:

BigBench-Hard Performance

AGIEval Performance

Looks like they trained this with Axolotl. Love to see it.

Training

Built with Axolotl

We trained with 8x A100-80G GPUs for 15 hours. Commodity cost was < $200.

We trained for 4 epochs and selected a snapshot at 3 epochs for peak performance.
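The training figures quoted above lend themselves to a quick back-of-the-envelope check. The sketch below is my own arithmetic, not something published by the Open-Orca team. It assumes the sub-$200 figure covers only this 15-hour run and that all four epochs took roughly equal time; the post states neither.

```python
# Back-of-the-envelope arithmetic from the figures quoted in the post:
# 8x A100-80G GPUs, 15 hours wall-clock, commodity cost under $200, 4 epochs.
NUM_GPUS = 8
WALL_CLOCK_HOURS = 15
COST_CEILING_USD = 200
EPOCHS = 4


def gpu_hours(num_gpus: int, hours: float) -> float:
    """Total GPU-hours consumed by a run."""
    return num_gpus * hours


def max_hourly_rate(cost_ceiling: float, total_gpu_hours: float) -> float:
    """Highest per-GPU-hour price consistent with the stated cost ceiling."""
    return cost_ceiling / total_gpu_hours


total = gpu_hours(NUM_GPUS, WALL_CLOCK_HOURS)
rate = max_hourly_rate(COST_CEILING_USD, total)
# Assumption (not stated in the post): every epoch took about the same time.
hours_per_epoch = WALL_CLOCK_HOURS / EPOCHS

print(f"GPU-hours: {total:.0f}")                 # GPU-hours: 120
print(f"Implied rate: < ${rate:.2f}/GPU-hour")   # Implied rate: < $1.67/GPU-hour
print(f"~{hours_per_epoch:.2f} h per epoch")     # ~3.75 h per epoch
```

Under those assumptions, the run used 120 GPU-hours at an implied price below about $1.67 per GPU-hour, and the selected 3-epoch snapshot would have been reached after roughly 11 hours.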

What an exciting model! Can’t wait to see the next wave of releases. orca_mini is also worth checking out if you like this new open-source family of LLMs.

The/CUT (TLDR)

The Open-Orca team has released OpenOrca-Preview1-13B, a cost-effective open-source model that reasons noticeably better while being trained on only a small fraction (6%) of their data. This work not only highlights the power of community-driven innovation, but also makes advanced AI more accessible and affordable.

If you found any of this interesting, consider subscribing to my community, where I do my best to keep you informed about the latest breakthroughs in free open-source artificial intelligence.
