cross-posted from: https://lemmy.world/post/1535820

I’d like to share with you Petals: decentralized inference and finetuning of large language models

- https://petals.ml/

- https://research.yandex.com/blog/petals-decentralized-inference-and-finetuning-of-large-language-models

Run large language models at home, BitTorrent‑style

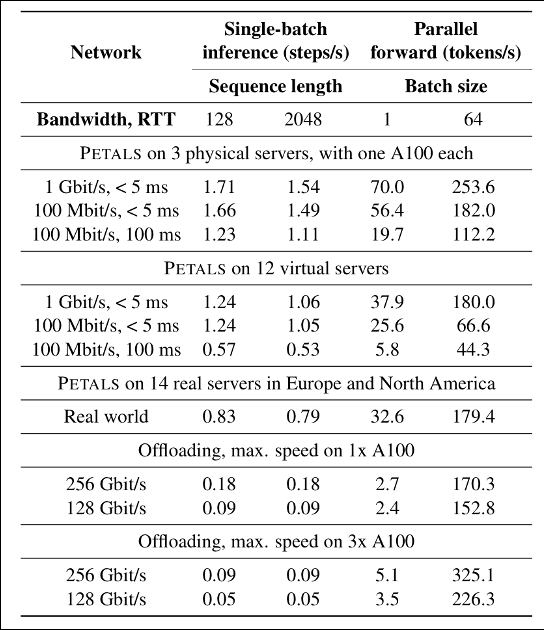

Run large language models like LLaMA-65B, BLOOM-176B, or BLOOMZ-176B collaboratively — you load a small part of the model, then team up with people serving the other parts to run inference or fine-tuning. Single-batch inference runs at 5-6 steps/sec for LLaMA-65B and ≈ 1 step/sec for BLOOM — up to 10x faster than offloading, enough for chatbots and other interactive apps. Parallel inference reaches hundreds of tokens/sec. Beyond classic language model APIs — you can employ any fine-tuning and sampling methods, execute custom paths through the model, or see its hidden states. You get the comforts of an API with the flexibility of PyTorch.
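For a rough sense of what those single-batch rates mean in a chat setting, here is a back-of-the-envelope sketch. It assumes one generated token per step and ignores prompt processing and network jitter. `reply_seconds` is a hypothetical helper for illustration, not part of Petals, and the 100-token reply length is an arbitrary example.

```python
def reply_seconds(num_tokens: int, steps_per_sec: float) -> float:
    """Approximate time to generate num_tokens, assuming one token per step."""
    if steps_per_sec <= 0:
        raise ValueError("steps_per_sec must be positive")
    return num_tokens / steps_per_sec


# Step rates are the ones quoted above; the reply length is an assumption.
quoted_rates = [
    ("LLaMA-65B (low end)", 5.0),
    ("LLaMA-65B (high end)", 6.0),
    ("BLOOM-176B", 1.0),
]
for name, rate in quoted_rates:
    print(f"{name}: ~{reply_seconds(100, rate):.0f} s for a 100-token reply")
```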

Overview of the Approach

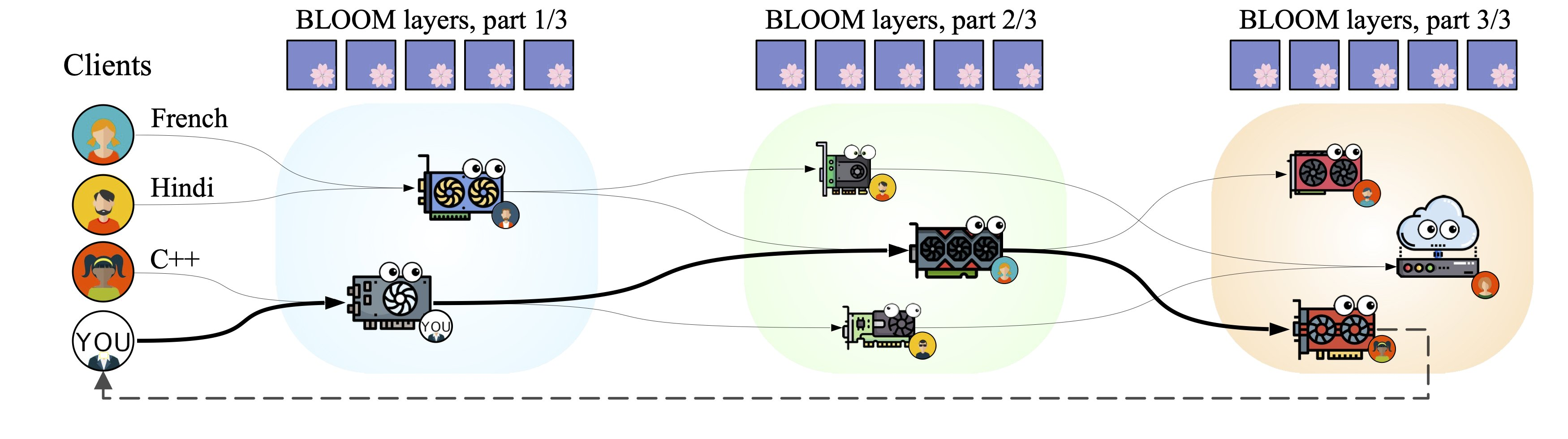

On a surface level, Petals works as a decentralized pipeline designed for fast inference of neural networks. It splits any given model into several blocks (or layers) that are hosted on different servers. These servers can be spread out across continents, and anybody can connect their own GPU! In turn, users can connect to this network as a client and apply the model to their data. When a client sends a request to the network, it is routed through a chain of servers that is built to minimize the total forward pass time. Upon joining the system, each server selects the optimal set of blocks based on the current bottlenecks within the pipeline. The illustration above shows Petals with several servers and clients running different inputs for the model.
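The chain-building idea can be sketched as a small shortest-path problem. This is a toy model, not Petals' actual routing code: it assumes each server hosts one contiguous range of blocks, that reaching a server costs a fixed hop latency, and that every block on a given server takes the same time. `Server` and `find_chain` are hypothetical names, and all numbers are made up.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Server:
    name: str
    start: int          # first hosted block (inclusive)
    end: int            # last hosted block (exclusive)
    hop_latency: float  # seconds to reach this server (assumed constant)
    block_time: float   # seconds to run one block on this server


def find_chain(
    servers: List[Server], num_blocks: int
) -> Optional[Tuple[float, List[Tuple[str, int, int]]]]:
    """Return (total_time, [(server, from_block, to_block), ...]),
    or None if some block cannot be reached."""
    inf = float("inf")
    best = [inf] * (num_blocks + 1)   # best[i]: cheapest time to finish blocks [0, i)
    prev = [None] * (num_blocks + 1)  # back-pointer: (previous block index, server)
    best[0] = 0.0
    for i in range(num_blocks):
        if best[i] == inf:
            continue  # no chain reaches block i
        for s in servers:
            if s.start <= i < s.end:
                # This server may run any prefix of its remaining blocks.
                for j in range(i + 1, min(s.end, num_blocks) + 1):
                    cost = best[i] + s.hop_latency + (j - i) * s.block_time
                    if cost < best[j]:
                        best[j] = cost
                        prev[j] = (i, s)
    if best[num_blocks] == inf:
        return None
    chain = []
    j = num_blocks
    while j > 0:  # walk the back-pointers from the last block to the first
        i, s = prev[j]
        chain.append((s.name, i, j))
        j = i
    chain.reverse()
    return best[num_blocks], chain


# Made-up swarm: three servers with overlapping block ranges for an 8-block model.
swarm = [
    Server("A", 0, 4, hop_latency=0.05, block_time=0.01),
    Server("B", 2, 8, hop_latency=0.02, block_time=0.02),
    Server("C", 4, 8, hop_latency=0.10, block_time=0.005),
]
total, chain = find_chain(swarm, num_blocks=8)
print(f"{total:.2f} s via {chain}")
```

In this made-up swarm the fast but distant server C loses to B, because its hop latency outweighs its quicker blocks. That is the kind of trade-off a latency-minimizing chain has to make.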

Benchmarks

We compare the performance of Petals with offloading, as it is the most popular method for using 100B+ models on local hardware. We test both single-batch inference as an interactive setting and parallel forward pass throughput for a batch processing scenario. Our experiments are run on BLOOM-176B and cover various network conditions, from a few high-speed nodes to real-world Internet links. As you can see from the table below, Petals is predictably slower than offloading in terms of throughput but 3–25x faster in terms of latency when compared in a realistic setup. This means that inference (and sometimes even finetuning) is much faster with Petals, despite the fact that we are using a distributed model instead of a local one.

Conclusion

Our work on Petals continues the line of research towards making the latest advances in deep learning more accessible for everybody. With this work, we demonstrate that it is feasible not only to train large models with volunteer computing, but to run their inference in such a setup as well. The development of Petals is an ongoing effort: it is fully open-source (hosted at https://github.com/bigscience-workshop/petals), and we would be happy to receive any feedback or contributions regarding this project!

I can’t honestly tell if any of this really has a future, but it should be super interesting.

We will watch your career with great interest

Has anyone tried it?

I just did via http://chat.petals.ml/ - was interesting enough to transcribe and post the results, although it did crash once I got deeper into the analysis due to rate limiting. It definitely has potential.

Thanks. The main problem I see with p2p is that it needs to gain a bit of traction and have an active community behind it. Let’s see if it gets the traction it needs.

Isn’t this what the koboldai horde already does?

Well, that’s a new project for me to look into, thanks. I have only seen Petals as a distributed inference engine, so seeing more in the space would be promising.

The KoboldAI Horde was the first thing that came to my mind when I heard about this too. After some research, it appears Petals and the AI-Horde are similar in concept, but different in strategy and execution.

The Kobold AI-Horde utilizes a ‘kudos-based economy’ to prioritize render/processing queues.

Petals seems to utilize a different routing/queue mechanism that prioritizes optimization over participation.

So you’re not wrong. The AI-Horde accomplishes crowd compute through a similar high-level approach. The biggest difference (at a glance) seems to be how I/O is handled and prioritized on each platform. That’s a bit of an oversimplification, but it communicates the idea.
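To make the "kudos-based" prioritization concrete, here is a toy sketch of a queue that serves requests from higher-kudos users first. This is only an illustration of the general idea as described above. It is an assumption about the concept, not the AI-Horde's actual implementation, and `KudosQueue` is a hypothetical name.

```python
import heapq
import itertools
from typing import List, Tuple


class KudosQueue:
    """Toy request queue: higher kudos is served first, ties go in arrival order."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._arrival = itertools.count()  # tie-breaker that preserves arrival order

    def submit(self, user: str, kudos: int) -> None:
        # heapq is a min-heap, so negate kudos to pop the largest balance first.
        heapq.heappush(self._heap, (-kudos, next(self._arrival), user))

    def next_request(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = KudosQueue()
queue.submit("alice", kudos=10)
queue.submit("bob", kudos=250)
queue.submit("carol", kudos=10)
order = [queue.next_request() for _ in range(len(queue))]
print(order)
```

A latency-minimizing router, by contrast, would not look at who is asking at all, only at which servers give the fastest path through the model.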

I really like the concept of crowd-compute, but I’m not sure it’ll get as popular as it needs to rival emerging (corporate) exaflops of compute. I hope Petals & AI-Horde benefit from the mutual competition. It would be really cool to see a future where George Hotz & tinycorp actually commoditize the petaflop for consumers. Maybe then crowd compute can begin to rival some of these big tech entities that otherwise dwarf available silicon.

Looks pretty neat!