we accidentally did the upgrade just now, which is what you just experienced. anyways we’re at like double the power and processing, and three times the storage we were previously at so yeah hopefully that’ll be good. anyways i guess i’ll leave this post stickied until i go to sleep in like an hour

I thought Reddit hugged yall to death again

oh god hopefully not with what we’re running now lol, we’ll see on the 12th i guess but for some perspective we can probably handle traffic of three to five times the intensity of what we did today (eyeballing it) based on just the metrics i can quickly reference now (i am not our tech person)

With r/spez’s AMA going the way it did I wouldn’t be surprised

If the Reddit admins weren’t deleting every post and comment about Lemmy, possibly there would be an influx.

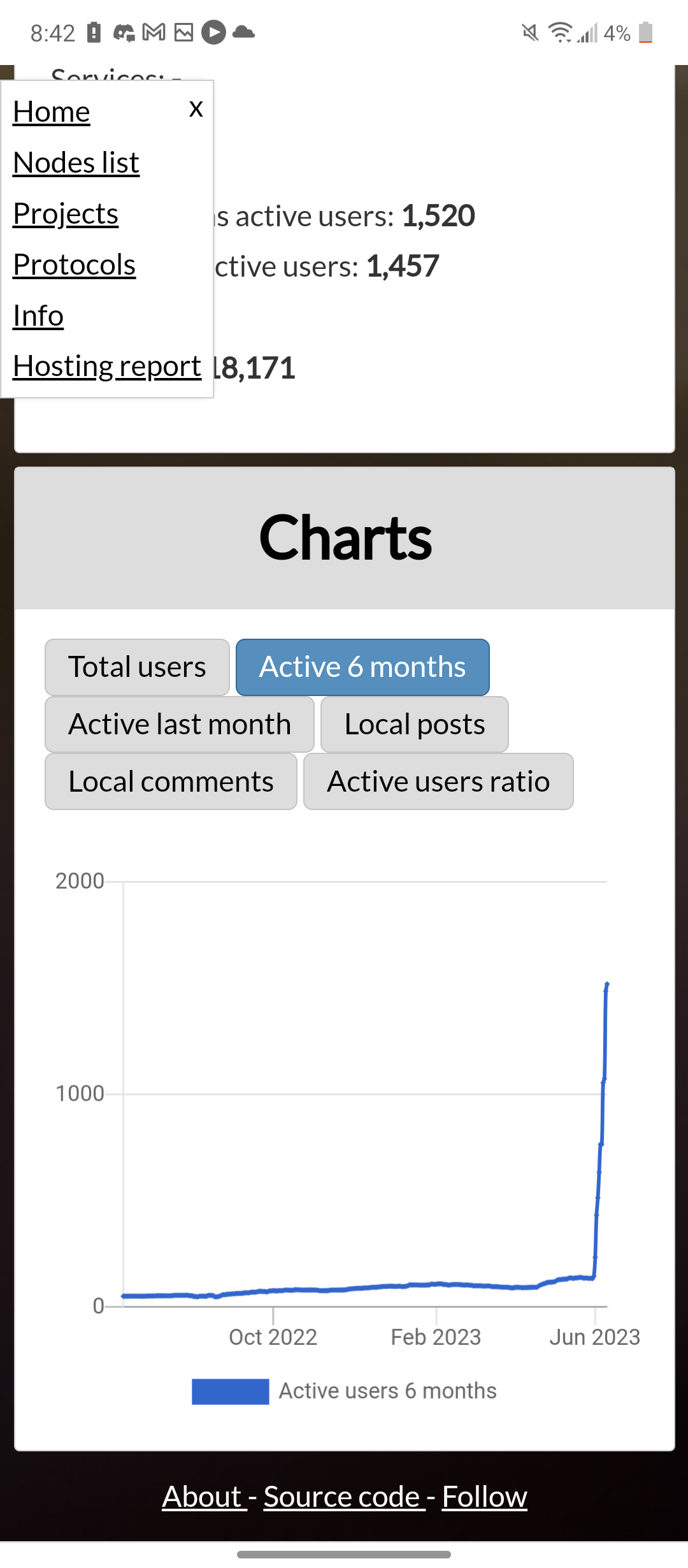

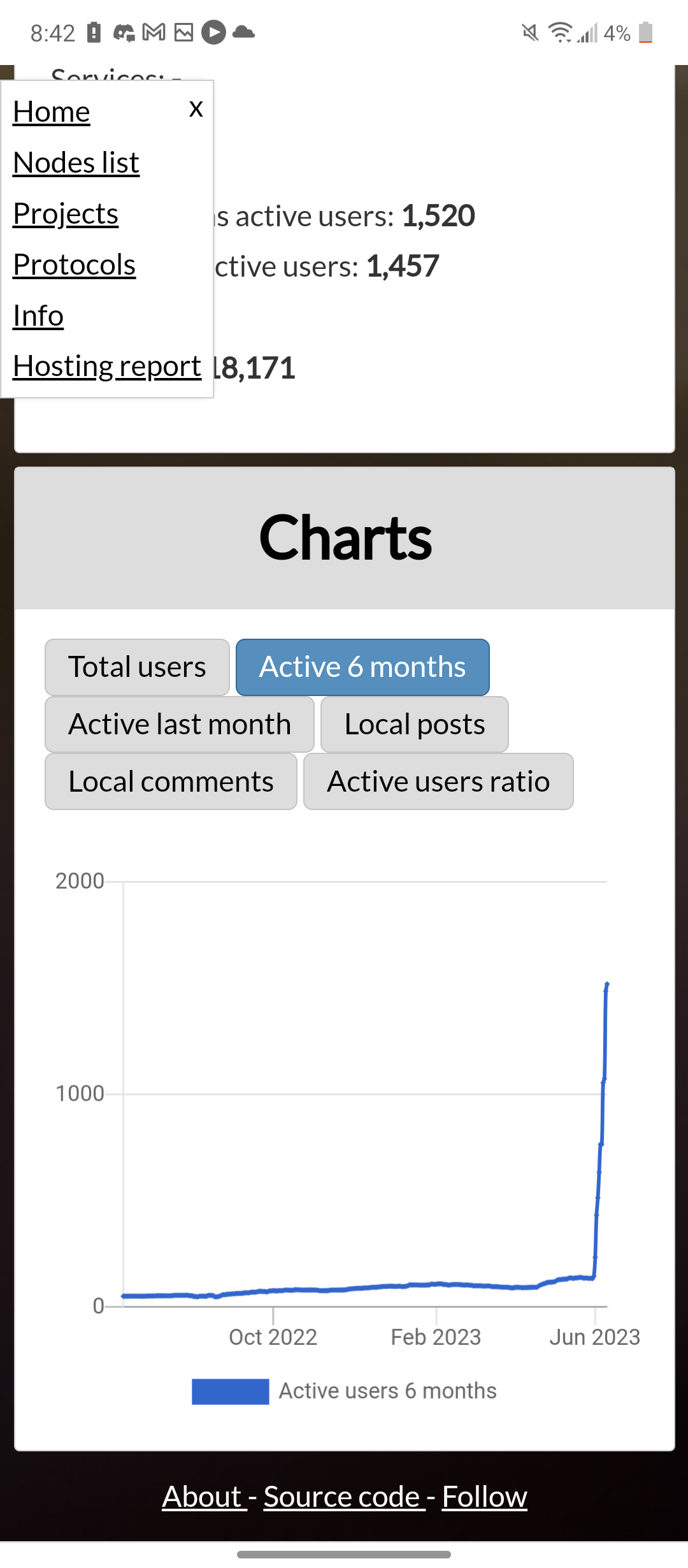

there actually was a mass influx and there still is

Holy shit that’s almost a 90° angle lmao!

Maybe admins should set up one of these donations pages with current donations for the month/monthly operational costs, so we can help when the maintenance budget gets a bit tight? I can help build that, if need be.
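A donations page like that mostly comes down to one ratio: donations so far this month divided by monthly operating costs. Here is a minimal sketch that renders it as a text progress bar. The function name and the figures in the usage line are made up for illustration; they are not Beehaw's real numbers.

```python
def funding_summary(donated_this_month: float, monthly_costs: float, width: int = 20) -> str:
    """Render 'donations so far / monthly operating costs' as a text progress bar."""
    if monthly_costs <= 0:
        raise ValueError("monthly_costs must be positive")
    ratio = donated_this_month / monthly_costs
    # Clamp the bar between empty and full, even if the month is over-funded.
    filled = max(0, min(width, int(ratio * width)))
    bar = "#" * filled + "-" * (width - filled)
    return f"[{bar}] ${donated_this_month:,.2f} of ${monthly_costs:,.2f} ({ratio:.0%})"


print(funding_summary(150, 200))
```

With those made-up figures it prints `[###############-----] $150.00 of $200.00 (75%)`. The percentage still shows values above 100% while the bar stays capped, so over-funding is visible without breaking the layout.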

Here is a place you can contribute directly to the Beehaw instance.

It’s linked in the sidebar.

Where is this from, please?

https://the-federation.info/node/details/25274 here ya go mate

Thank you mate

I don’t know what that other link is (I got a warning when I tried to visit it), but I believe Beehaw primarily uses OpenCollective, and here is the overview: https://opencollective.com/beehaw

Would it be worth adding a CDN (e.g. Cloudflare or Fastly) as a preventative measure? I don’t know what your traffic distribution is between static and dynamic content, but I imagine being able to offload image GETs would at least prevent you from getting surprise egress traffic bills.

CF also has an “Always Online” feature that serves a cached version of the site if the server is down. It won’t let people post or comment, but it might provide a smoother user experience.
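The split that makes a CDN worthwhile is roughly "cache static GETs, always pass everything else through to the server". A minimal sketch of that decision, assuming uploaded images live under `/pictrs/image/` and static UI assets under `/static/` (both prefixes are assumptions; check what your instance actually serves):

```python
from urllib.parse import urlsplit

# Assumed path prefixes for static content; adjust to your instance.
STATIC_PREFIXES = ("/pictrs/image/", "/static/")


def is_cdn_cacheable(method: str, url: str) -> bool:
    """Return True if a request looks like static content a CDN could safely cache."""
    if method.upper() not in ("GET", "HEAD"):
        return False  # writes (posting, commenting, voting) must always reach the origin
    path = urlsplit(url).path
    return path.startswith(STATIC_PREFIXES)


def cache_control_for(method: str, url: str) -> str:
    """Cache-Control value the origin could send so the CDN does the right thing."""
    if is_cdn_cacheable(method, url):
        return "public, max-age=86400"  # let the CDN keep images for a day
    return "no-store"  # dynamic pages and API calls are never cached
```

The one-day `max-age` is an arbitrary example value. Uploaded images rarely change, so a long lifetime is what actually moves the egress load off the server.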

Nothing makes you sweat like an accidental deployment! Looks like it worked, mark that as a win!

“Wait, why’s the test server getting so much traffic all of a sudden…?”

Can’t get surprise load on test servers if you test in prod!

/taps head

At least the turnaround was quick

Damn, that’s some really quick updating. Nice job.

Thanks for the recognition. We aren’t a big Silicon Valley conglomerate, just a few people trying to make a better service for others.

It’s ok Beehaw you’re doing your best

They really are like oh my

Beehaw: minds its own business with a couple hundred users, nothing big

Redditors: “Hold my karma.”

Stonks!

🚀➡️🌛

Blame Reddit for the sudden spike.

Pointing at the spike like Janet on The Good Place pointing at the Jeremy Bearimy: that’s my cake day!

This looks really cool, did not know images in comments were supported

I’m primarily watching from the sidelines and able to test things if needed for you guys, but I can definitely say this sudden influx is such a rare opportunity for us tech folks (SWEs and sysadmins) to get a real look at what breaks when you do a real load/soak test of a service.

Post-mortems are amazing for tech knowledge, so seeing it kind of “live” is even better.

Good job folks!

Thank you guys for working to keep things efficient for the community!!

Nice to see you guys growing!

Haha I was wondering… Thanks for your hard work!

Congrats on the upgrade! Funnily enough, I decided to finally check if my registration was approved, managed to log in, and immediately wasn’t able to view the stickied post talking about Beehaw’s vision. Glad I didn’t kill the site like I thought!

If you don’t mind my asking, what hardware were y’all using beforehand? I’m looking to host my own instance, but starting as a noob. I know how to build computers but I’m trying to go further.

Beehaw is hosted on DigitalOcean, not sure what price range at this point as I know it’s been upgraded recently. It’s really easy to spin up one of their “droplets” and get started on a project.

I just got a $14 droplet with 1 vCPU and 2 gigs of RAM with weekly backups. I like their interface and have been happy with it overall so far. I’m hoping to start up my own Lemmy instance for learning purposes, though it’s a bit daunting as apparently the Docker instructions are incomplete.

Apparently, and I know next to nothing about this, you can use an automated option via Ansible.

I also know pretty much nothing about Ansible. And while that could be a learning opportunity in itself, I’d like to challenge myself to get it running through Docker. It would be one of the more complex things I’ve set up.

Sounds pretty fun! I have a spare laptop running Linux Mint. I would like to try running my own instance and sharing it with a few users. Below are the specs. Do you think it will be powerful enough, and if so, how many users would it be able to handle?

Dell Inspiron 15-5000

CPU: Intel Core i5-5200U, 2 cores, 4 threads

RAM: 8GB

Storage: SanDisk SSD Plus 1TB

This is really just a guess, but I don’t see why you wouldn’t be able to run an instance with a handful of users on that machine. Just don’t expect too much.

Using Docker is not much harder, actually, at least if you go the `docker compose` route. You download the `docker-compose.yml` file, the example config file, and the nginx config file, fill in your domain in the right places, and run `docker compose up -d`. The database will provision itself and Lemmy will run all the migrations. Caveat: don’t be a smartass like me. The migrations need the Lemmy user to be a superuser, so if you use an existing database, you need to either grant temporary superuser permissions or do some manual migrating.
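"Fill in your domain in the right places" can be scripted if you end up redeploying a few times. A minimal sketch, assuming the downloaded files mark those places with a placeholder like `{{ domain }}` and are named as below; both the placeholder and the file names are assumptions, so check your own copies first.

```python
from pathlib import Path

# Hypothetical placeholder and file names: check the files you actually
# downloaded for the marker they use and what they are called.
PLACEHOLDER = "{{ domain }}"
FILES = ("docker-compose.yml", "lemmy.hjson", "nginx.conf")


def fill_in_domain(directory: str, domain: str, placeholder: str = PLACEHOLDER) -> list[str]:
    """Replace the domain placeholder in each known file; return the names of files changed."""
    changed = []
    for name in FILES:
        path = Path(directory) / name
        if not path.exists():
            continue  # skip files you haven't downloaded (or have renamed)
        text = path.read_text()
        if placeholder in text:
            path.write_text(text.replace(placeholder, domain))
            changed.append(name)
    return changed
```

Calling `fill_in_domain(".", "yourdomain.com")` in the directory with the downloaded files, then running `docker compose up -d`, covers the steps above. Returning the list of changed files makes it easy to spot a file whose placeholder didn't match.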

You need an HTTPS certificate, but if you follow the guide that can be set up in as little as two commands (`sudo snap install --classic certbot`, `sudo certbot -d yourdomain.com`).

The only problem I ran into was that somehow my admin account wasn’t activated. I had to manually enable it (`docker compose exec -ti postgres psql`, then `update local_user set accepted_application = true;`).

If you want a challenge, perhaps consider installing Kbin instead. It mostly interoperates with Lemmy and Mastodon (though it uses boosts (“retweets”) rather than likes for upvotes, so you may annoy Mastodon people). It’s more manual work to set up and has more optional features to explore, such as S3 file hosting, which you can combine with self-hosted S3 like MinIO if you want even more of a learning experience. It also looks better in my opinion.

As for the droplet: I don’t know your requirements, but I’m hosting most of my stuff on Contabo because it’s a lot cheaper. I probably wouldn’t host a business site on there, but it’s perfect for hobby projects. You can also try Oracle’s free tier*, which has a slightly annoying 50 Mbps bandwidth cap but gives you one or more free ARM servers with up to 24GB of RAM.

*: Oracle is a terrible company to do business with, so make sure not to buy anything from them. After your first month you’ll get a phone call from a marketer and your special cloud credits will disappear, but you can keep using your free servers indefinitely.
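To get a feel for what a 50 Mbps cap means in practice, here is a back-of-the-envelope sketch. It assumes decimal gigabytes and gives the best case only, ignoring protocol overhead and any other bottleneck.

```python
def transfer_time_seconds(size_gb: float, link_mbps: float = 50.0) -> float:
    """Best-case seconds to move size_gb (decimal) gigabytes over a link_mbps link."""
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    bits = size_gb * 8 * 1000**3          # GB -> bits (1 byte = 8 bits)
    return bits / (link_mbps * 1000**2)   # divide by the link speed in bits per second
```

At 50 Mbps, 1 GB takes at least 160 seconds, so pulling a 10 GB backup off the server takes 1600 seconds (about 27 minutes) at minimum. That is fine for a hobby instance but noticeable if a lot of image traffic hits it at once.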

Well I’m glad to hear it’s working properly. Will give it a go soon. My only concern is whether I can use traefik with it rather than/with nginx. Can they work together?

I’ve gotten much more comfortable with traefik and would like to use it with my instance. I already default to using compose for my projects so no worries there.

It can work with any reverse proxy. I’ve also ripped out the built-in nginx config myself to make it work with my existing setup.

You can do so by removing/commenting the entries you don’t need in the docker-compose.yml. The project provides an example file for nginx so I’d start by translating that config into traefik config. That should probably do the trick.

I’ve also ripped out the internal networks (and the references to them) so that I don’t need to jump through hoops to connect to the application from my reverse proxy. You may want to consider doing that as well.
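When translating the example nginx config to Traefik, the key part to reproduce is how it decides between the backend and the web UI. Here is a sketch of that decision, based on my reading of the example nginx config. The prefixes, the `Accept` header check, the POST rule, and the service names/ports are assumptions to verify against the file you downloaded.

```python
# Assumed upstreams: the `lemmy` backend and `lemmy-ui` frontend services
# from the compose file, on their default ports. Verify against your setup.
BACKEND = "http://lemmy:8536"
FRONTEND = "http://lemmy-ui:1234"

# Paths that always go to the backend (assumed from the example nginx config).
BACKEND_PREFIXES = ("/api", "/pictrs", "/feeds", "/nodeinfo", "/.well-known")


def pick_upstream(method: str, path: str, accept: str = "") -> str:
    """Return which upstream a request should be proxied to."""
    if path.startswith(BACKEND_PREFIXES):
        return BACKEND
    if accept.startswith("application/"):
        return BACKEND  # ActivityPub fetches, e.g. Accept: application/activity+json
    if method.upper() == "POST":
        return BACKEND  # federation deliveries and other writes
    return FRONTEND  # normal browser page loads
```

In Traefik v2 each branch would become a router rule built from matchers such as `PathPrefix`, `HeadersRegexp` and `Method`, with a catch-all router pointing at the UI service.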

Cheers!


I just went and put some laundry in and I guess it happened then. I remember a time when it would take that long, or longer just to load a single image on a webpage :P

Dialup sucked so much.

Awesome! Thanks for the transparency

Great job guys. Keep it up!