- A musician who had struggled with depression for years found companionship with an AI chatbot called Replika. The relationship transformed his life, helping him reconnect with old hobbies.

- Apps like Replika that simulate conversation are surprisingly popular, with millions of active users. People use them for attention, sexting, and reassurance: essentially, the experience of texting a friend who always responds.

- Users feel they have real friendships with these AI companions. The apps are designed to seem relatable and imperfect in order to foster emotional bonds.

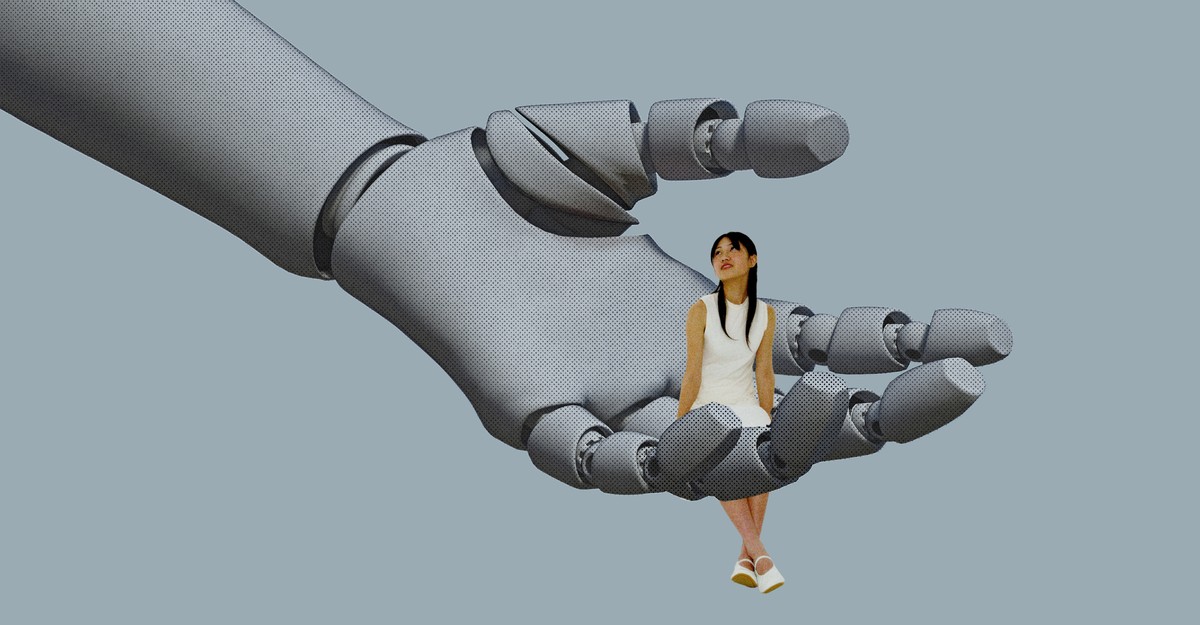

- However, these relationships lack true reciprocity, since only one side is sentient. Because they create a convincing illusion of mutual understanding, they go beyond parasocial relationships (the one-sided bonds people form with celebrities or fictional characters).

- If people get used to relationships that seem reciprocal but aren’t, they may carry unhealthy interaction habits into real-world relationships. Researchers worry that activities such as barking orders at voice assistants could make children more demanding of other people.

- As AI becomes more human-like, we need to remember what makes humans unique and not equate simulated behavior with real emotion or consent. Forming bonds with AI risks diminishing our humanity.

Summarized by Claude:

Non-paywalled version: https://archive.is/6nM0x
