Edit: Results tabulated, thanks for all y’alls input!

Results fitting within the listed categories

Just do it live

- Backup while it is expected to be idle @[email protected] @[email protected] @[email protected]

- @[email protected] suggested adding a real long-ass-backup-script to run monthly to limit overall downtime

Shut down all database containers

- Shutdown all containers -> backup @[email protected]

- Leveraging NixOS impermanence, reboot once a day and backup @[email protected]
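For anyone who wants the stop -> backup -> start idea without the long-ass script, here is a minimal Python sketch. It assumes `docker` and `restic` are on the PATH and that the restic repository and password are already configured through the environment. The function names and the `run` parameter are made up for illustration; they are not part of any tool mentioned in this thread.

```python
import subprocess
from contextlib import contextmanager


@contextmanager
def containers_stopped(names, run=subprocess.run):
    """Stop the given containers, and start them again even if the backup fails."""
    run(["docker", "stop", *names], check=True)
    try:
        yield
    finally:
        # Runs on success and on error, so a failed backup does not leave services down.
        run(["docker", "start", *names], check=True)


def backup_with_downtime(names, paths, run=subprocess.run):
    """Back up the given host paths with restic while the containers are stopped."""
    with containers_stopped(names, run=run):
        run(["restic", "backup", *paths], check=True)
```

Downtime here lasts as long as the `restic backup` call, which is exactly the self-inflicted denial of service the original question worries about, so this fits best where the data set is small or the service is idle anyway.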

Long-ass backup script

- Long-ass backup script leveraging a backup method in series @[email protected] @[email protected]

Mythical database live snapshot command

(it seems pg_dumpall for Postgres and mysqldump for mysql (though some images with mysql don’t have that command for meeeeee))

- Dump Postgres via `pg_dumpall` on a schedule, backup normally on another schedule @[email protected]

- Dump mysql via `mysqldump` and pipe to restic directly @[email protected]

- Dump Postgres via `pg_dumpall` -> backup -> delete dump @[email protected] @[email protected]
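The dump-and-pipe variants above can be sketched roughly like this in Python. It assumes the database runs in a container reachable with `docker exec`, that the Postgres superuser is `postgres`, and that restic is already configured through the environment. The container name, file name, and function names are hypothetical. The same shape should work for `mysqldump` if the image ships it.

```python
import subprocess


def pg_dump_command(container, user="postgres"):
    """Command that runs pg_dumpall inside the container and writes SQL to stdout."""
    return ["docker", "exec", container, "pg_dumpall", "-U", user]


def restic_stdin_command(filename):
    """Command that makes restic store whatever arrives on stdin under `filename`."""
    return ["restic", "backup", "--stdin", "--stdin-filename", filename]


def dump_to_restic(container, filename="pg_dumpall.sql", user="postgres"):
    """Pipe the live dump straight into restic without writing a file to disk."""
    dump = subprocess.Popen(pg_dump_command(container, user), stdout=subprocess.PIPE)
    restic = subprocess.Popen(restic_stdin_command(filename), stdin=dump.stdout)
    dump.stdout.close()  # only restic holds the read end now
    restic_rc = restic.wait()
    dump_rc = dump.wait()
    if dump_rc != 0 or restic_rc != 0:
        # A failed dump may still have produced a truncated snapshot; check before relying on it.
        raise RuntimeError(f"pg_dumpall exited {dump_rc}, restic exited {restic_rc}")
```

Because nothing lands on disk, there is no dump to delete afterwards, and the database stays up the whole time.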

Docker image that includes Mythical database live snapshot command (Postgres only)

- Make your own docker image (https://gitlab.com/trubeck/postgres-backup) and set to run on a schedule, includes restic so it backs itself up @[email protected] (thanks for uploading your scripts!!)

- Add docker image `prodrigestivill/postgres-backup-local` and set to run on a schedule, backup those dumps on another schedule @[email protected] @[email protected] (also recommended additionally backing up the running database and trying that first during a restore)

New categories

Snapshot it, seems to act like a power outage to the database

- LVM snapshot -> backup that @[email protected]

- ZFS snapshot -> backup that @[email protected] (real world recovery experience shows that databases act like they’re recovering from a power outage and it works)

- (I assume btrfs snapshot will also work)

One liner self-contained command for crontab

- One-liner crontab that prunes to maintain 7 backups, dumps Postgres via `pg_dumpall`, zips, then rclones them @[email protected]
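The "prune to maintain 7 backups" step is the part that is easy to get wrong in a one-liner, so here is a small Python sketch of just that piece. The `*.sql.gz` naming and the function name are assumptions for illustration, not what the original one-liner uses.

```python
from pathlib import Path


def prune_dumps(directory, keep=7, pattern="*.sql.gz"):
    """Delete all but the `keep` most recently modified dumps; return the removed paths."""
    dumps = sorted(
        Path(directory).glob(pattern),
        key=lambda p: p.stat().st_mtime,
        reverse=True,  # newest first
    )
    removed = dumps[keep:]
    for old in removed:
        old.unlink()
    return removed
```

Pruning after the new dump has been written successfully, rather than before, avoids ending up with fewer good backups when a dump fails.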

Turns out Borgmatic has database hooks

- Borgmatic with its explicit support for databases via hooks (autorestic has hooks but it looks like you have to make database controls yourself) @[email protected]

I’ve searched this long and hard and I haven’t really seen a good consensus that made sense. The SEO is really slowing me on this one, stuff like “restic backup database” gets me garbage.

I’ve got databases in docker containers in LXC containers, but that shouldn’t matter (I think).

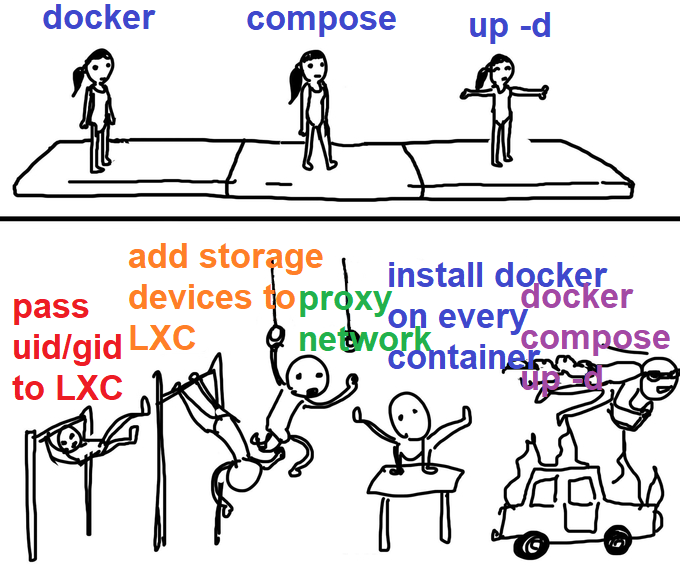

me-me about containers in containers

I’ve seen:

- Just backup the databases like everything else, they’re “transactional” so it’s cool

- Some extra docker image to load in with everything else that shuts down the databases in docker so they can be backed up

- Shut down all database containers while the backup happens

- A long ass backup script that shuts down containers, backs them up, and then moves to the next in the script

- Some mythical mentions of “database should have a command to do a live snapshot, git gud”

None seem turnkey except for the first, but since so many other options exist I have a feeling the first option isn’t something you can rest easy with.

I’d like to minimize backup down times obviously, like what if the backup for whatever reason takes a long time? I’d denial of service myself trying to backup my service.

I’d also like to avoid a “long ass backup script” cause autorestic/borgmatic seem so nice to use. I could, but I’d be sad.

So, what do y’all do to backup docker databases with backup programs like Borg/Restic?

Don’t run storage services in Docker. It’s stupid and unnecessary. Just run it on the host.

You should really back that up with arguments as I don’t think a lot of people would agree with you.

Ah gotchya, well docker compose plus the image is pretty necessary for me to easily manage big ass/complicated database-based storage services like paperless or Immich - so I’m locked in!

And I’d still have to specially handle the database for backup even if it wasn’t in a container…

Don’t worry, it’s fine, there’s nothing inherently wrong with running stateful workload in a container.

Why, exactly?

Because you can’t just copy the files of a running DB (if I got what you mean).

You’d have to run several versions of several db engines side by side, which is not even doable easily in most distros. Not to mention some apps need special niche versions, Immich needs a version of Postgres with pg-vectors installed. Also they don’t tell you how they provision them — and usually I don’t care because that’s the whole point of using a docker stack so I don’t have to.

Last but not least there’s no reason to not run databases in docker.