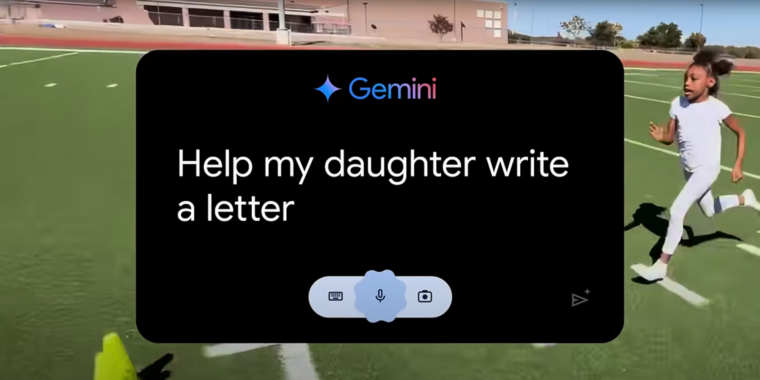

If you’ve watched any Olympics coverage this week, you’ve likely been confronted with an ad for Google’s Gemini AI called “Dear Sydney.” In it, a proud father seeks help writing a letter on behalf of his daughter, who is an aspiring runner and superfan of world-record-holding hurdler Sydney McLaughlin-Levrone.

“I’m pretty good with words, but this has to be just right,” the father intones before asking Gemini to “Help my daughter write a letter telling Sydney how inspiring she is…” Gemini dutifully responds with a draft letter in which the LLM tells the runner, on behalf of the daughter, that she wants to be “just like you.”

I think the most offensive thing about the ad is what it implies about the kinds of human tasks Google sees AI replacing. Rather than using LLMs to automate tedious busywork or difficult research questions, “Dear Sydney” presents a world where Gemini can help us offload a heartwarming shared moment of connection with our children.

Inserting Gemini into a child’s heartfelt request for parental help makes it seem like the parent in question is offloading their responsibilities to a computer in the coldest, most sterile way possible. More than that, it comes across as an attempt to avoid an opportunity to bond with a child over a shared interest in a creative way.

That would be bizarre, lol

Let’s say one person writes three pages with some key points. Another person extracts those points, already altered by added LLM garbage, then sends them on as a two-page essay to someone else, who again extracts altered points. The original message is long gone and communication has failed, but the bots keep talking to each other, so to speak, producing even more garbage.

In the end we are drowning in a humongous pile of generated garbage, and no one can communicate effectively anymore.

The funny thing is that this is mostly true even without LLMs or other bots. People and institutions can’t communicate because of leviathan amounts of legalese, say-literally-nothing-but-hide-it-in-a-mountain-of-bullshitese, barely-a-correlation-but-inflate-it-to-be-groundbreaking-ese, and literally-lie-but-it’s-too-complicatedly-phrased-for-anyone-to-call-it-false-advertising-ese.

What about using an LLM to extract actual EULA key points?

I wouldn’t rely on an LLM to read anything that matters for you. Maybe it will do OK nine out of ten times, but when it fails you won’t even know until it is too late.

What if the EULA itself was generated by ChatGPT from another ChatGPT-generated output, which came from yet another, and so on… madness. Such a EULA would suddenly be pure garbage, and with everyone cutting costs and relying on AI so much, no one would even notice until it’s all FUBAR.

So sure, it will initially seem like a helpful tool: “make key points from this text,” where that text was generated by someone from other key points extracted by GPT. But the mistakes will multiply with each iteration.