Via @[email protected]

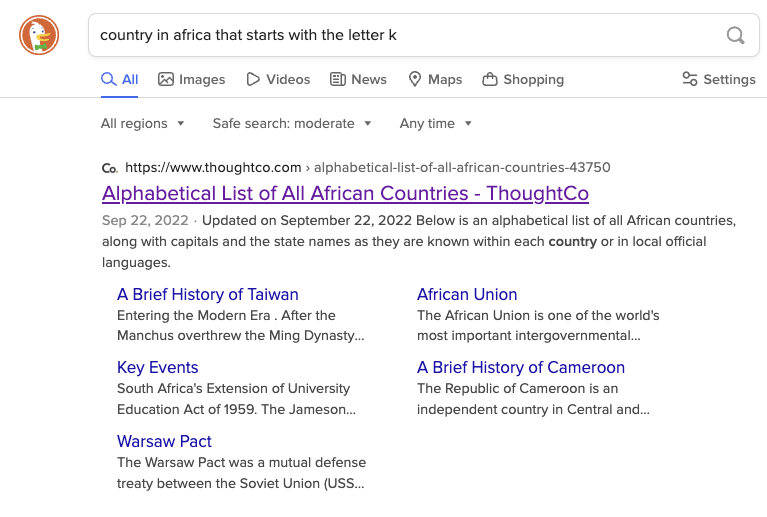

Right now if you search for “country in Africa that starts with the letter K”:

- DuckDuckGo will link to an alphabetical list of countries in Africa which includes Kenya.
- Google, as the first hit, links to a ChatGPT transcript where it claims that there are none, and summarizes to say the same.

This is because ChatGPT at some point ingested this popular joke:

“There are no countries in Africa that start with K.” “What about Kenya?” “Kenya suck deez nuts?”

I’m pretty sure a lot of people said something like “Hmm, maybe the automobile won’t replace horses” after reading about the first car accidents.

Finding sources will always be relevant, and so will finding links to multiple sources (search results). Until some technological breakthrough lets us fact-check LLMs, they are not a replacement for objective information, and you have no idea where a model is getting its information. Figuring out how to calculate objective truth with math is going to be a tough one.

It is still early in the LLM revolution. It seems likely that soon enough we’ll have multi-model systems, with differently trained models working in tandem: one that generates results, and another, trained to fact-check, that takes that output and vets it before it is sent to the user.
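
To make the generate-then-check idea concrete, here is a rough toy sketch of how such a pipeline might be wired up. Everything in it is an assumption for illustration: `generate`, `fact_check`, `answer`, and the tiny `REFERENCE_COUNTRIES` list are hypothetical stand-ins, not real models or any actual product's API. The "checker" here is just a lookup against a partial list, which is obviously far easier than real fact checking.

```python
from dataclasses import dataclass

# Assumption: a tiny, deliberately partial reference list standing in
# for whatever trusted source a real checker would consult.
REFERENCE_COUNTRIES = ["Egypt", "Ghana", "Kenya", "Nigeria"]


@dataclass
class Reply:
    text: str
    contradicted: bool


def generate(letter: str) -> list[str]:
    """Hypothetical generator stub: behaves like a model that absorbed the joke."""
    return []  # i.e. "there are none", regardless of the letter


def fact_check(letter: str, claimed: list[str]) -> Reply:
    """Hypothetical checker stub: flags answers that omit a known reference entry."""
    missing = [
        country
        for country in REFERENCE_COUNTRIES
        if country.startswith(letter) and country not in claimed
    ]
    if missing:
        return Reply(f"Generator missed: {', '.join(missing)}", contradicted=True)
    return Reply(f"Not contradicted by the reference list: {claimed}", contradicted=False)


def answer(letter: str) -> Reply:
    # The checker sees the generator's output before the user does.
    return fact_check(letter, generate(letter))


print(answer("K").text)
```

Note that the checker can only say "contradicted" or "not contradicted by what I have"; it never proves an answer true, which is the hard part mentioned above.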