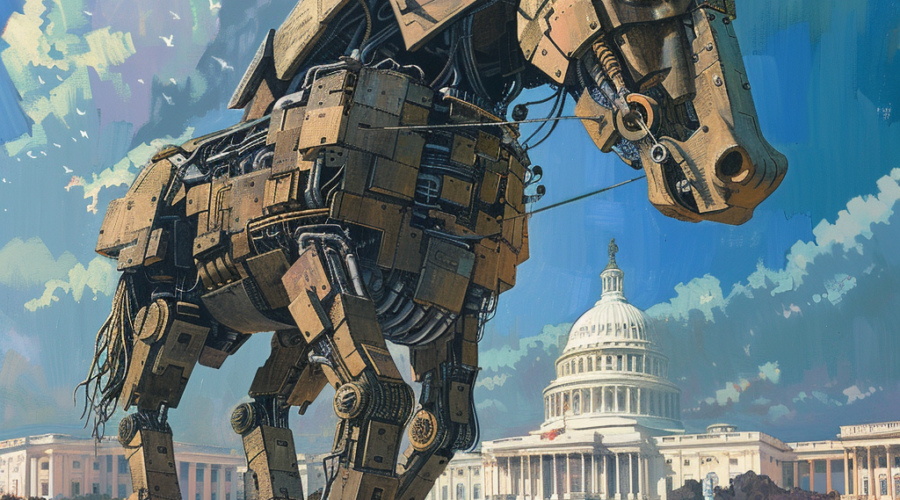

‘Kids Online Safety Act’ is a Trojan Horse For Digital Censorship.::Washington, D.C. - This week, a bipartisan cohort of US Senators unveiled a new version of the Kids Online Safety Act, a bill that aims to impose various restrictions and requirements on tech

The bill is garbage, but it cracks me up that they think this part is a bad thing:

Every item on that list has been abused by web/app developers in ways that exploit and/or negatively affect the brains of developing children.

It’s for all users, not just minors. If it weren’t, we’d need a super complex document-based AI authentication system.

Not at all. We just need to provide tools to enable parents to effectively manage their children’s experiences. One component of that would be requiring web and app developers to adhere to a higher set of standards if their website or app is available to children.

Since parents are the ones making devices available to their children, they would be empowered to do one of the following:

Then the parents would be able to manage which apps are installed on the device and which sites are navigable. This could include both apps/sites that are explicitly targeted at children and those that have a child-targeted experience, which, if accessed from a child’s account, would be opted into automatically. Those apps and sites would be held to the higher standards and would be prohibited from employing predatory patterns, etc…

A parent should be able to feel safe allowing their child to install any app or access any site they want that adheres to these standards.

It would even be feasible to have apps identify the standards they adhere to, such that a parent could opt to only search for / only allow installation of apps / experiences that meet specific criteria. For example, Lexi’s parents might be fine with cartoony face filters but not with in-app purchases, Simon’s parents might not be okay with either, and Sam’s parents might be cool with her installing literally anything that isn’t pornographic.
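To make that concrete, here’s a rough sketch of how that kind of filtering could work. Everything in it is made up for illustration: the feature labels, the app names, and the `App` / `ParentPolicy` types aren’t from any real app store or standard, and I’m assuming every parent in the example blocks pornography.

```python
from dataclasses import dataclass

# Hypothetical feature labels an app might declare; not from any real standard.
FACE_FILTERS = "face_filters"
IN_APP_PURCHASES = "in_app_purchases"
PORNOGRAPHY = "pornography"


@dataclass(frozen=True)
class App:
    name: str
    features: frozenset  # features the app declares it contains


@dataclass(frozen=True)
class ParentPolicy:
    child: str
    blocked: frozenset  # features this child's parents do not allow


def is_allowed(app, policy):
    """An app is allowed when it declares no feature the parents have blocked."""
    return app.features.isdisjoint(policy.blocked)


def allowed_apps(policy, catalog):
    """Return the names of catalog apps that pass the given policy, in order."""
    return [app.name for app in catalog if is_allowed(app, policy)]


# Made-up catalog for illustration only.
apps = [
    App("FilterFun", frozenset({FACE_FILTERS})),
    App("GemShop", frozenset({FACE_FILTERS, IN_APP_PURCHASES})),
    App("PlainReader", frozenset()),
]

# Policies mirroring the examples above (assumption: all of them block porn).
policies = [
    ParentPolicy("Lexi", frozenset({IN_APP_PURCHASES, PORNOGRAPHY})),
    ParentPolicy("Simon", frozenset({FACE_FILTERS, IN_APP_PURCHASES, PORNOGRAPHY})),
    ParentPolicy("Sam", frozenset({PORNOGRAPHY})),
]

for policy in policies:
    print(policy.child, allowed_apps(policy, apps))
```

The point is that the check itself is trivial once apps declare what they contain. The hard part is getting developers to declare it honestly, which is where the standards would come in.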

If a device/account isn’t set up as a child’s device then none of those restrictions would be relevant. This would mean that if a mother handed her son her unlocked iPad to watch a video on YouTube and then left the room, she might come back to him watching something else. An “easy” way to fix that is to require devices to support a “child” user / experience, which could be managed similarly to what I described above (or at least by allow-listing specific apps that are permitted) even if set up as an adult device, rather than only supporting single-user experiences.

That is a bad thing. It makes the law very vague and leaves a ton of room for arbitrary or otherwise selective enforcement.

Who wrote the sentence I just quoted?