So after one of my recent comments about whether Linux is ready for gaming, I decided to pick up a new Intel-based Wi-Fi adapter (my old one was Broadcom, and its drivers on Fedora sucked and would drop the connection every few minutes).

So far everything is great! Performance-wise, I can usually run every game about one tier higher graphically (med -> high) with the same or better performance than on Windows. This is on an RX 5700 with an ultrawide monitor.

Bazzite is running great as always. I'm still getting used to the immutability of the system, since I usually use Arch btw, but there are obviously workarounds for that.

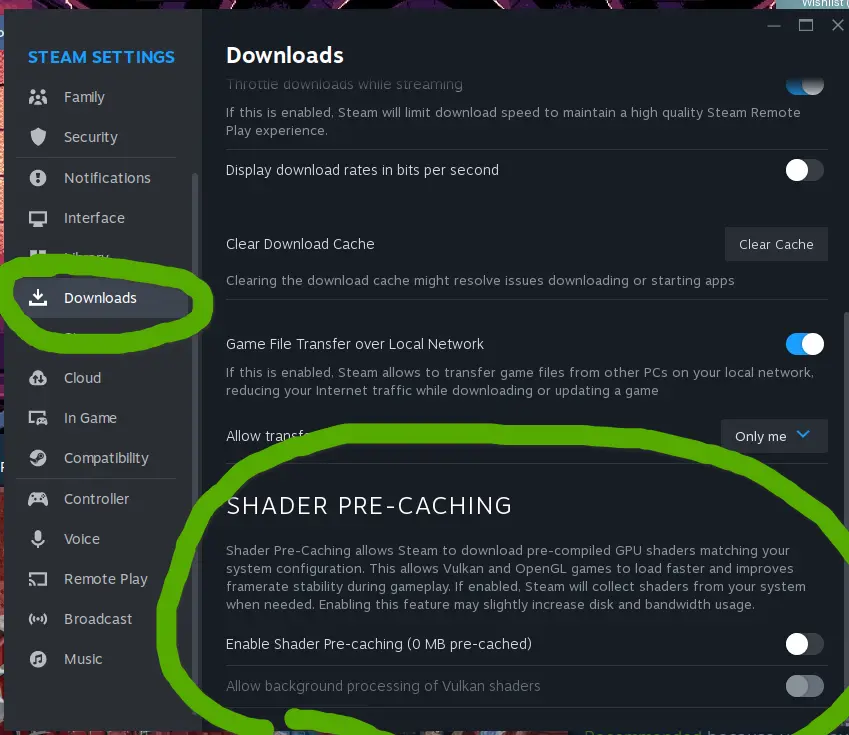

Overall, I'm still getting used to Steam's "Processing Vulkan shaders" step pretty much every time a game updates, but it's worth it for the extra performance. Now I'm 100% Linux for my gaming between my Steam Deck and PC.

That can be turned off, though. I haven't noticed much of a difference after doing so (though I am a filthy Nvidia user). It also saves quite a bit of disk space.

Yeah, I saw that when I was previously running into this, but I wasn't sure if it would leave performance on the table. I'm also curious whether you could run it once, then turn it off so you don't process them again, and whether that would benefit subsequent runs of the game even after updates (I assume not, since the cached shaders would probably be invalidated).

Turning it off will wipe the cached shaders. That cleaned up ~40 GB (IIRC) for me, without any noticeable difference in performance, stability or smoothness. Though my set of games at the time wasn't all that big: Path of Exile, Subnautica: Below Zero, Portal 2 and some random smaller games.

Turning it off just pushes the shader caching to runtime. You might get micro stutters, but they're temporary, since shaders get cached as you play.
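To make the runtime-caching tradeoff concrete, here is a toy model in Python. It is a minimal sketch, not how Steam's pre-caching, DXVK or any GPU driver actually stores shaders: `ShaderCache`, its fields and the fake "compiled" strings are all hypothetical. The first lookup of a shader is a miss that triggers a compile, which is where a frame can stutter. Later lookups are hits. Because the sketch keys entries on driver version and game build, an update to either forces recompilation, which matches the invalidation people describe in this thread.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ShaderCache:
    """Toy runtime shader cache (hypothetical; for illustration only)."""

    driver_version: str
    game_build: str
    compiles: int = field(default=0, init=False)
    _entries: dict = field(default_factory=dict, init=False)

    def _key(self, shader_source: str) -> tuple:
        # Including the driver version and game build in the key means an
        # update to either yields new keys, so old entries are never reused.
        digest = hashlib.sha256(shader_source.encode()).hexdigest()
        return (self.driver_version, self.game_build, digest)

    def get(self, shader_source: str) -> str:
        key = self._key(shader_source)
        if key not in self._entries:
            # Cache miss: a real driver would compile here, which is where a
            # frame can hitch (the "micro stutter").
            self.compiles += 1
            self._entries[key] = f"compiled:{shader_source}"
        # Hit (or freshly filled entry): no further compile needed.
        return self._entries[key]


cache = ShaderCache(driver_version="1.0", game_build="100")
cache.get("water.frag")        # first use: miss, compiles
cache.get("water.frag")        # later use: hit, no compile
print(cache.compiles)          # 1
cache.driver_version = "1.1"   # simulate a driver update
cache.get("water.frag")        # old entry no longer matches: recompiles
print(cache.compiles)          # 2
```

Pre-caching amounts to doing all the misses up front at launch. Disabling it spreads them across gameplay instead.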

Yep, I'm aware. I just haven't observed* any compilation stutters, so in that sense I'd rather keep it off and save the few minutes (give or take) on launch.

*Now, I'm sure either the stutters are there and I just haven't noticed them, or the games I've recently played on Linux haven't been susceptible to them, but the tradeoff is worth it for me either way.

If you're using AMD, you're probably using ACO for shader compilation, which tends to be much faster than LLVM.

Personally, I use pre-compilation + ACO + DXVK Async, so shaders compile and cache super quickly.

AMD CPU, but Nvidia GPU, so as far as I understand, I'm not using ACO then?

Correct. Your GPU is probably just really fast.

NVK is going to be killer on your system.

Aah okay that makes sense. I wouldn’t mind the extra space.

If that’s turned off, do you know if the game generates and caches shaders as you play? If so, does that also apply to games run outside of Steam?

Currently I’m just playing games like Hunt Showdown and Helldivers.

For Hunt Showdown specifically, I have tried skipping pre-caching before and the load into a level took so long that I got disconnected from the match. I recommend keeping it enabled for multiplayer games for that reason.

It's usually disabled outside of Steam. However, you can use environment variables:

`DXVK_ASYNC=1 DXVK_STATE_CACHE=true DXVK_SHADER_CACHE=true DXVK_STATE_PATH= DXVK_STATE_CACHE_PATH=`

Well, I do have this one game I've tried to play, Enshrouded, that does the shader compilation on its own, in-game. The compiled shaders seem to persist between launches, reboots, etc., but not across driver or game updates. So it stands to reason they are cached somewhere. As for where, not a clue.
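The environment-variable approach suggested earlier in the thread can be sketched in Python as a small launcher that copies the current environment, adds the variables, and runs the game with that environment. The variable names and values are copied verbatim from the comment. Whether each one is honored depends on the DXVK build you have (for example, `DXVK_ASYNC` is only read by async-patched builds). `build_env`, `launch` and `cache_dir` are hypothetical names. Because the thread leaves the two path values blank, the sketch simply points both at a directory the caller chooses.

```python
import os
import subprocess
from typing import Mapping, Optional

# Copied verbatim from the comment above. Whether each variable is honored
# depends on the DXVK build in use; treat this list as an assumption.
DXVK_VARS = {
    "DXVK_ASYNC": "1",          # only read by async-patched DXVK builds
    "DXVK_STATE_CACHE": "true",
    "DXVK_SHADER_CACHE": "true",
}


def build_env(cache_dir: str, base: Optional[Mapping[str, str]] = None) -> dict:
    """Return a copy of `base` (default: os.environ) with the DXVK variables set.

    `cache_dir` is a hypothetical placeholder: the thread leaves the
    DXVK_STATE_PATH and DXVK_STATE_CACHE_PATH values blank.
    """
    env = dict(os.environ if base is None else base)
    env.update(DXVK_VARS)
    env["DXVK_STATE_PATH"] = cache_dir
    env["DXVK_STATE_CACHE_PATH"] = cache_dir
    return env


def launch(command: list, cache_dir: str) -> int:
    """Run a (hypothetical) game command with the DXVK variables applied."""
    os.makedirs(cache_dir, exist_ok=True)
    # Equivalent to `VAR=value ... command` in a shell: the variables apply
    # only to the child process, not to the launcher itself.
    return subprocess.run(command, env=build_env(cache_dir)).returncode
```

Inside Steam, you get the same effect by putting the variables in a game's launch options, followed by `%command%`.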

And if it's the game doing the compilation, I would assume non-Steam games can do it too. Why wouldn't they?

But, ultimately, I don’t know - just saying these are my observations and assumptions based on those. :P