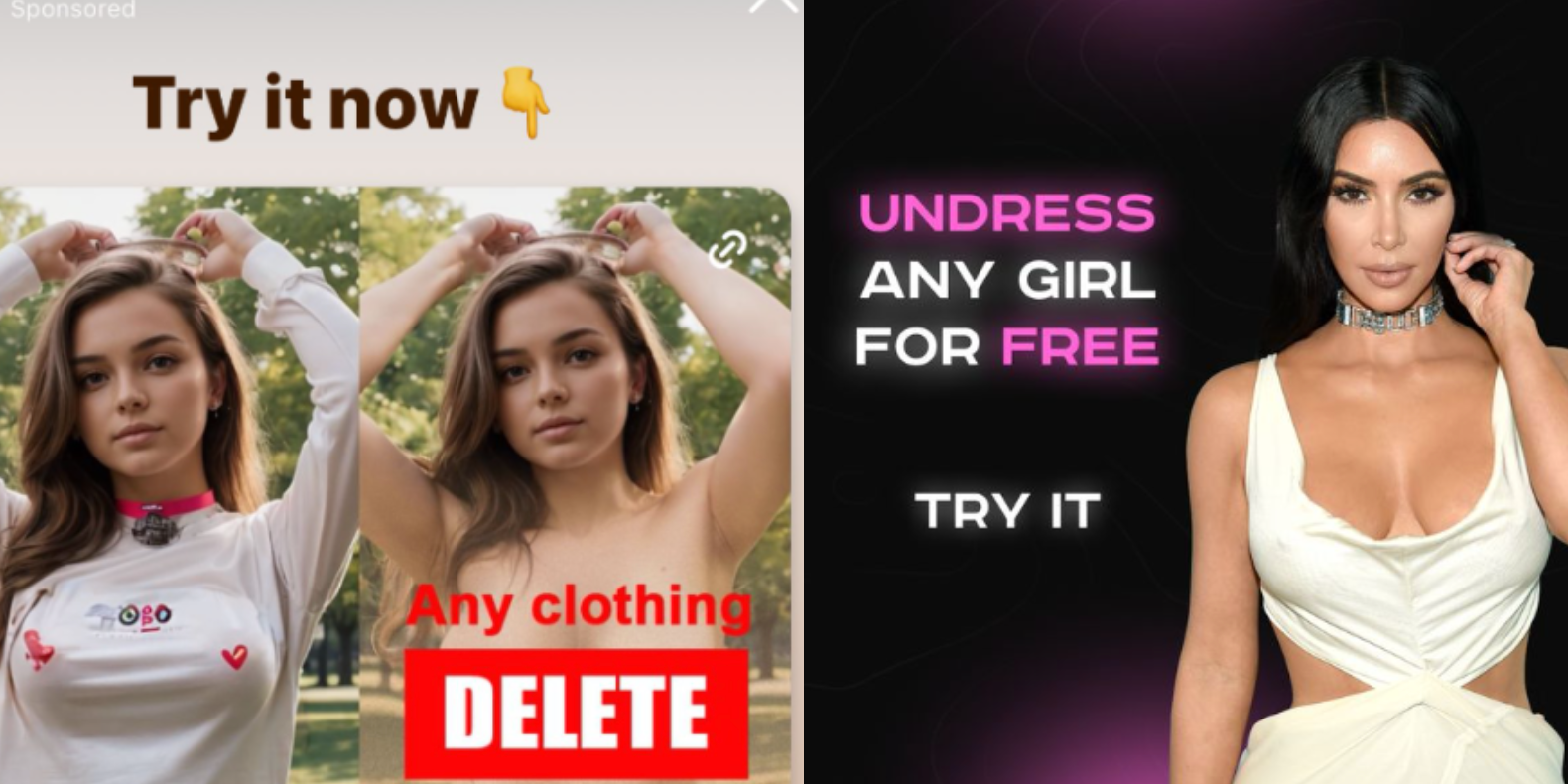

Instagram is profiting from several ads that invite people to create nonconsensual nude images with AI image generation apps. It is another sign that some of the most harmful applications of AI tools are not hidden in the dark corners of the internet, but are actively promoted to users by social media companies unable or unwilling to enforce their policies on who can buy ads on their platforms.

Parent company Meta's Ad Library archives ads on its platforms, along with who paid for them and where and when they ran. It shows that the company has previously taken down several of these ads. Even so, many ads that explicitly invited users to create nudes, and some of the ad buyers behind them, remained up until I reached out to Meta for comment. Some of these ads were for the best-known nonconsensual "undress" or "nudify" services on the internet.

What do you mean? AI absolutely can be made deterministic. Do you have a source to back up your claim?

You know what’s not deterministic? Human content reviewers.

Besides, determinism isn’t important for utility. Even if AI classified an ad wrong 5% of the time, it’d still massively clean up the spammy advertisers. But they’re far, FAR more accurate than that.

https://www.sitation.com/non-determinism-in-ai-llm-output/

AI can be made deterministic, yes, absolutely.

The design everyone is currently using (LLMs) cannot be made deterministic.

Again, you are wrong. ChatGPT specifically may not be deterministic, since it's a hosted service, but you absolutely can replay a prompt using the same random seed to get deterministic responses. Computer randomness isn't truly random.
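To illustrate the seeding point, here is a minimal sketch using only Python's standard library. The token list and weights are hypothetical stand-ins for a single decoding step of a model; the sketch only shows that a pseudorandom generator initialised with the same seed replays the same sequence of draws, not how any particular LLM runtime actually samples.

```python
import random

# Hypothetical next-token distribution for one decoding step (illustrative only).
TOKENS = ["cat", "dog", "bird"]
WEIGHTS = [0.6, 0.3, 0.1]


def sample_tokens(seed: int, n: int) -> list[str]:
    """Draw n tokens from the toy distribution using a PRNG initialised with `seed`."""
    rng = random.Random(seed)  # independent generator, so global random state is untouched
    return rng.choices(TOKENS, weights=WEIGHTS, k=n)


run_1 = sample_tokens(seed=1234, n=10)
run_2 = sample_tokens(seed=1234, n=10)

print(run_1)
print(run_1 == run_2)  # True: same seed, same "random" draws
```

Using a dedicated `random.Random` instance rather than the module-level functions keeps the replay independent of any other code that consumes random numbers.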

But if that's not satisfying enough, you can also set the temperature to zero and check that the system fingerprint stays the same. That makes it even more deterministic, since the model will then always pick the highest-probability token.
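A rough sketch of what temperature does, assuming a plain softmax over made-up logits for the token after "1+1=". Dividing the logits by a smaller temperature sharpens the distribution, and temperature 0 is treated here as greedy decoding (always take the argmax). The logits and token strings are hypothetical, and this is not the code of any specific library.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert logits to probabilities; temperature 0 is treated as greedy (one-hot argmax)."""
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])  # first index on ties
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(tokens: list[str], logits: list[float]) -> str:
    """Temperature-0 decoding: always return the highest-scoring token."""
    probs = softmax_with_temperature(logits, 0)
    return tokens[probs.index(1.0)]


tokens = ["2", "3", "two"]
logits = [5.0, 1.0, 3.0]  # hypothetical scores for the next token after "1+1="

# Lower temperatures concentrate more probability on the top token.
for t in (1.0, 0.5, 0.1):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])

print(greedy_pick(tokens, logits))  # "2", on every call
```

Greedy decoding involves no random draw at all, so for a fixed input it returns the same token regardless of any seed.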

For example, Llama can be fully deterministic. https://github.com/huggingface/transformers/issues/25507#issuecomment-1678498896

I love your wishful thinking. Too bad academia doesn't agree with you.

Edit: Also, I have to come back and laugh at you for trying to argue that the only-almost-random nature of software random number generators makes AI deterministic.

Please enlighten me then. Clearly people are doing it, as proved by the link I sent. Are you simply going to ignore that? Perhaps we have different definitions of determinism.

You can make it more deterministic by reducing the acceptable range of answers, absolutely. But then you also limit your output, so that's never really a good use case.

Randomness is a core feature not just of LLMs, but of the entire stack that has produced LLMs. Yes, you can get a decently consistent answer, but not a deterministic one. Put another way, even with an LLM at maximum constraint, you can ask it to add 1+1. You'll usually get 2, but not always.

Yes, but seeding the random number generator makes it deterministic, because LLMs don't use actual randomness; they use pseudorandom generators.

For identical inputs, you'll get the same result, barring a hardware failure. But you have to give it exactly the same inputs. That includes the random seed and the system prompt (e.g., you can't put the current time and date in the system prompt), as well as the prompt itself.
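The "exactly the same inputs" condition can be sketched with a toy generator whose output is a pure function of `(seed, system_prompt, prompt)`. Everything here is hypothetical: `toy_model_weights` is a stand-in for a real model's forward pass, derived from a SHA-256 hash of the input text purely for illustration. The point is only that a timestamp in the system prompt changes the input on every call, so replaying the same seed no longer guarantees the same output.

```python
import hashlib
import random
from datetime import datetime, timezone

VOCAB = ["yes", "no", "maybe", "perhaps"]  # hypothetical vocabulary


def toy_model_weights(system_prompt: str, prompt: str) -> list[int]:
    """Hypothetical stand-in for a forward pass: token weights derived from the full input text."""
    digest = hashlib.sha256((system_prompt + "\n" + prompt).encode("utf-8")).digest()
    return [b + 1 for b in digest[: len(VOCAB)]]  # +1 so no weight is zero


def generate(seed: int, system_prompt: str, prompt: str, n_tokens: int = 8) -> list[str]:
    """Toy sampler whose output depends only on (seed, system_prompt, prompt)."""
    rng = random.Random(seed)
    weights = toy_model_weights(system_prompt, prompt)
    return rng.choices(VOCAB, weights=weights, k=n_tokens)


SYSTEM = "You are a helpful assistant."
a = generate(42, SYSTEM, "Is 1+1 equal to 2?")
b = generate(42, SYSTEM, "Is 1+1 equal to 2?")
print(a == b)  # True: identical seed, system prompt and prompt

# A timestamp makes the system prompt differ between calls, so the same seed
# is no longer guaranteed to reproduce the earlier output.
timed_system = f"{SYSTEM} Current time: {datetime.now(timezone.utc).isoformat()}"
c = generate(42, timed_system, "Is 1+1 equal to 2?")
print(c)
```

`hashlib` is used instead of Python's built-in `hash()` because string hashing is randomized per process by default, which would itself break replay across runs.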

No, that's not how it works. I've already explained and posted links as to why.

You posted a single blog post about ChatGPT not being deterministic, I posted a GitHub issue that explains exactly how to do it using the transformers library. Not sure we can see eye to eye on this one.