- cross-posted to:

- [email protected]

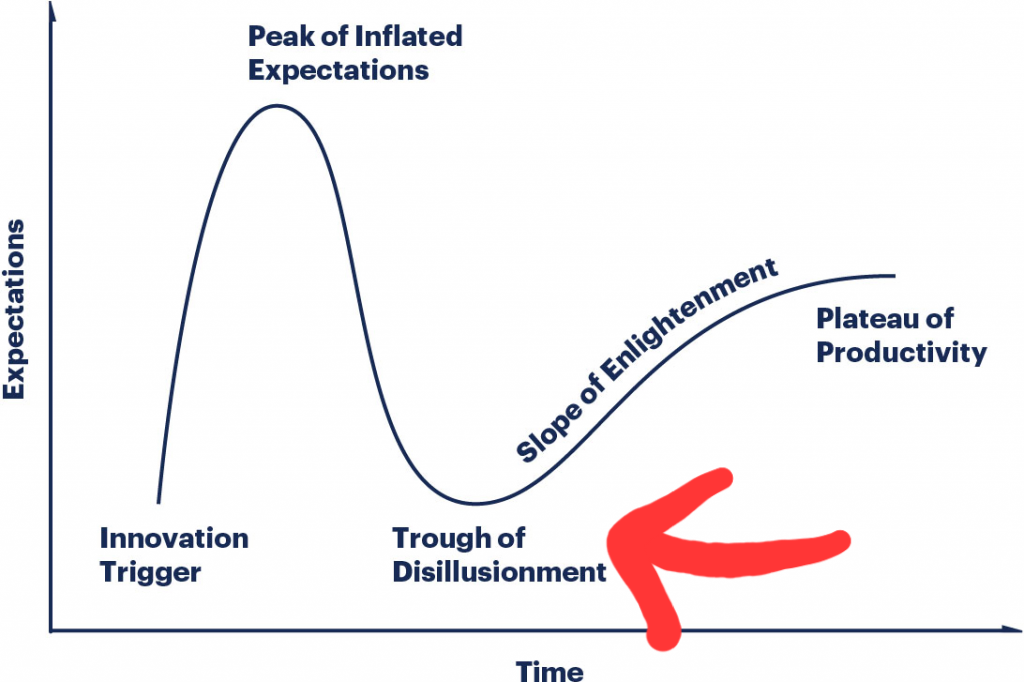

Grumbles about generative AI’s shortcomings are coalescing into a “trough of disillusionment” after a year and a half of hype about ChatGPT and other bots.

Why it matters: AI is still changing the world — but improving and integrating the technology is raising harder and more complex questions than first envisioned, and no chatbot has the magic answers.

Driving the news: The hurdles are everything from embarrassing errors, such as extra fingers or Black founding fathers in generated images, to significant concerns about intellectual property infringement, cost, environmental impact and other issues.

It’s only a matter of time 'til the “AI” bubble really pops and all those tech companies that fired too much of their workforce have to start hiring back like crazy.

There are some bubbles that need popping, especially in boardrooms, but I work for a large tech company that has not fired anyone because of AI. Rather the opposite: we have been expanding our AI team for the last 5+ years and have delivered successful AI products. There is a lot more to AI than ChatGPT, which, while impressive as a proof of concept, is not actually useful to business.

I’m so skeptical that AI will deliver large scale economic value.

The current boom is essentially fueled by free money. VCs pump billions into start-ups, and more established companies get billions in subsidies or get their customers to pay outrageous amounts on promises. Still, I have yet to see a single AI product that is worth the hassle. The results are either not that good or way too expensive, and if you couldn’t rely on open models paid for by VCs, you wouldn’t be able to get anything off the ground.

It’s the same at my employer, which has wasted untold thousands on subscriptions to ChatGPT and Copilot, and all we’ve gotten out of it so far is a script that takes in transaction data and spits out “customer loyalty recommendations”… as if we don’t already have a marketing department for that. XD

I think most people don’t understand one fundamental thing about AI: ChatGPT, DALL-E and whatnot are just products produced by machine learning, not AI themselves. Machine learning is already doing a lot of work for science, and it’s utterly unthinkable not to use it in fields like chemistry, for example. We only read about media-producing LLMs because we consume so damn much media. Maybe that’s something we should think about.

Nobody fired workers because of AI, that’s just the narrative so they don’t have to say “we’re running out of money”.

It’s also downsizing from when tech companies went on a hiring spree during the early years of the pandemic.

A lot of top-level big brains thought they could fire people and replace them with AI, because to them AI is like the robots they see in movies.

Not every new technology or shift in the economy is a “bubble” that’s inevitably going to “pop” someday.

But this one definitely is.

It’s like watching the Blockchain saga in fast-forward.

> But this one definitely is.

Such confidence. Why do you think so?

Many of the shifts that have happened in the economy are a result of capabilities that existing AI models actually demonstrably have right now, rather than anticipation of future developments. Even if no further developments happen those existing capabilities aren’t going to just “go away” again somehow.

Also worth noting, blockchains are still around and are doing just fine.

Blockchain is over 10 years old and still not used for its primary purpose: a currency for legal transactions. It’s way too volatile and very few institutions accept it.

AI can’t reach its promised capability of doing everything for us automatically because it isn’t actually AI. It’s just advanced Clippy and autocomplete. It can’t replace anyone senior. It’s just a crappy intern.

Why do you think that’s its primary purpose? It has lots of uses. The point is that it’s doing fine, it hasn’t “gone away.” And if you need a non-volatile cryptocurrency for some purpose there are a variety of stablecoins designed to meet that need.

> AI can’t reach its promised capability of doing everything for us automatically

Your criterion for a “bubble popping” is that the technology doesn’t grow to completely dominate the world and do everything that anyone has ever imagined it could do? That’s a pretty extreme bar to hold it to; I don’t know of any technology that’s passed it.

> It’s just advanced Clippy and autocomplete. It can’t replace anyone senior.

So it can replace people lower than “senior?” That’s still quite revolutionary.

When spreadsheet and word processing programs became commonplace whole classes of jobs ceased to exist. Low-level accountants, secretarial pools, and so forth. Those jobs never came back because the spreadsheet and word processing programs are still working fine in that role to this day. AI’s going to do the same to another swath of jobs. Dismissing it as “just advanced Clippy” isn’t going to stop it from taking those jobs, it’s only going to make the people who were replaced by it feel a little worse about what they previously did for a living.

> Why do you think that’s its primary purpose? It has lots of uses. The point is that it’s doing fine, it hasn’t “gone away.”

Sure, that’s why I only ever hear about unregulated securities when a scam makes the news. XD

> Your criterion for a “bubble popping” is that the technology doesn’t grow to completely dominate the world and do everything that anyone has ever imagined it could do? That’s a pretty extreme bar to hold it to; I don’t know of any technology that’s passed it.

My criterion for a bubble pop is the sudden and intense disinvestment that occurs once the irrational exuberance wears out and the bean counters start writing off unprofitable debt.

Given that so-called “AI” is falling into the trough of disillusionment, I’d expect it to begin in earnest within a few months.

> So it can replace people lower than “senior?” That’s still quite revolutionary.

No, it can’t, because it isn’t and cannot be made trustworthy. If you need a human to review the output for hallucinations then you might as well save yourself the licensing costs and let the human do the work in the first place.

You’ve switched from saying that a cryptocurrency’s “primary purpose” is as a currency for transactions to saying that they’re securities; those are not remotely similar things.

Anyway. Are you aware that, assuming the Gartner hype cycle actually does apply here (it’s not universal) and AI is really in the “trough of disillusionment”, beyond that phase lies the “slope of enlightenment” wherein the technology settles into long-term usage? I feel like you’re tossing terminology around in this discussion without knowing a lot about what it actually means.

> No, it can’t, because it isn’t and cannot be made trustworthy. If you need a human to review the output for hallucinations then you might as well save yourself the licensing costs and let the human do the work in the first place.

If you think it can’t replace anyone then why say “It can’t replace anyone senior”?

Also, what licensing costs? Some AI providers charge service fees for using them, but as far as I’m aware none of them claim copyright over the output of LLMs. And there are open-weight LLMs you can run yourself on your own computer if you want complete independence.

> blockchains are still around and are doing just fine.

They are, though. The total market cap across cryptocurrencies right now is about $2.75 trillion.

You misspelled “Unlicensed Securities”, and taking crypto scammers at their word when they tell you how much their bits are worth is an easy way to lose actual money. XD

I’m just pointing out that they’re still there. If it’s a scam then at this point it’s one of history’s biggest and longest-running.

And whether any particular cryptocurrency qualifies as a security in any particular jurisdiction is a complicated question; some do and some don’t. This is about cryptocurrency as a whole, so calling them an unlicensed security would not be accurate.

hiring back to do what?

they don’t even need that much staff

> hiring back to do what?

Generate revenue for the shareholders.

> they don’t even need that much staff

There’s more work to be done than there are people to do it all, lol~

We are here

I feel like the market is still very much near the peak.

Yeah, I think the tech curve and the market curve are offset, with the market curve lagging behind.

Definitely getting to that point, past the peak, but I think we’re a little bit further to the left still.

Same shit that happened with cryptocurrency and NFTs. Suddenly the tech-illiterate C-suites realize it isn’t magically making profits go up.

“outside of a few areas such as coding, companies have found generative AI isn’t the panacea they once imagined.”

It certainly helps with coding, but a human still needs to fix all the mistakes.

Yeah, and that’s not going away for coding or any other place that finds a way to implement these systems anytime soon.