Sounds like it was a bad design to begin with and Nvidia went outside the design specs.

Am I reading this correctly, that he did the tests exclusively with the aftermarket cable the customer provided? No resistance measurements on the cable itself, but he concludes it's not the cable because the manufacturer is usually reliable? No different cables for comparison?

I think I will have to watch the video later to get an idea of the testing method, since from what I can gather from the article, the testing setup was completely useless for determining what's at fault here.

Another video noted that previous generations used multiple isolated shunt resistors to feed sets of VRM phases. The current measurements in these shunts were used to balance the current in the phases. If any shunt showed no current (connector or pins unplugged), the GPU wouldn't even turn on. Previous generations would do ridiculous things to ensure proper current balance in the wires.

This is done with six 12V wires on the 30 series. Pairs of wires go to a shunt, so there are 3 shunts, which each feed maybe 4 phases. In the worst-case scenario, you could somehow lose half the wires, 1 from each pair, and the GPU wouldn't notice. This would result in up to 2x overload on any individual wire. This is why the 30 series did not burn like the 40 and 50 series.

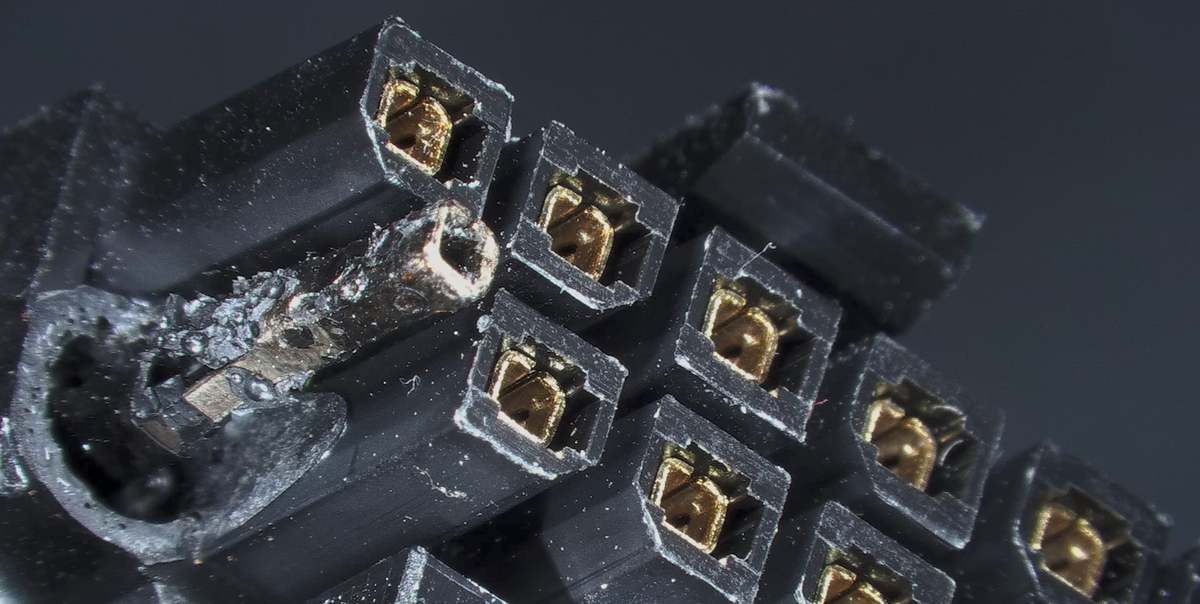

The 4090/5090 short all of the pins together before going through 1 or 2 shunts, and they are shorted again after the shunts. This means no individual pin or pair currents are measured, and you can cut 5 of the 6 wires and have it still think there's a good connection. This results in up to a 6x overload on a wire (e.g. all 600W going through 1 wire).
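To put rough numbers on the two layouts, here is a minimal sketch. It assumes the card only notices when a whole shunt group carries no current, that the shunt groups share the load evenly, that the card keeps drawing full power, and a nominal 12 V rail. The function names and the 600W figure used for both layouts are illustrative only, not measurements.

```python
def worst_case_overload(total_wires: int, wires_per_shunt_group: int) -> float:
    """Worst-case per-wire overload factor relative to an even split.

    Assumption: each shunt group can drop to a single working wire
    without the card detecting it, and total current stays the same.
    """
    if total_wires % wires_per_shunt_group != 0:
        raise ValueError("wires must divide evenly into shunt groups")
    groups = total_wires // wires_per_shunt_group
    # Nominal share per wire is 1/total_wires of the load; in the worst
    # case one surviving wire carries a full group's share, 1/groups.
    return total_wires / groups


def worst_case_wire_current(power_w: float, volts: float,
                            total_wires: int, wires_per_shunt_group: int) -> float:
    """Worst-case current (amps) in one wire under the same assumptions."""
    total_current = power_w / volts
    groups = total_wires // wires_per_shunt_group
    return total_current / groups


# 30-series style: 6 wires, 3 shunts, 2 wires per shunt.
print(worst_case_overload(6, 2))
# 4090/5090 style: all 6 wires shorted together in front of the shunts.
print(worst_case_overload(6, 6))
# Illustrative 600 W at 12 V through a single surviving wire.
print(worst_case_wire_current(600, 12, 6, 6))
```

With everything shorted together the "group" is all six wires, which is where the 6x figure comes from: 600W at 12 V is 50 A, normally about 8.3 A per wire, but all 50 A if only one wire is still carrying current.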

Also, substitute "bad connection on a pin" for "cut wire" and the same applies.

This is a design issue with the board at minimum.

That’s the impression I got, that the power inputs are in parallel on the card. Doesn’t that mean the culprit would most definitely be the cable or the pin connection between cable and card?

It could be either in part, but it's also a factor of how the card itself balances the power. This will be a problem regardless of who made the cable or how perfect it is.
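A rough way to see why the card side matters: when the wires are shorted together at both ends, they behave as a plain parallel current divider, so each wire's share is set only by its path resistance (wire plus pin contacts). The sketch below assumes exactly that model; the resistance values are made up for illustration and are not measurements of any real cable.

```python
def wire_currents(total_current_a: float, path_resistances_ohm: list[float]) -> list[float]:
    """Split a total current across parallel paths by conductance.

    Assumption: all paths are tied together at both ends, so every path
    sees the same voltage drop and nothing actively balances them.
    """
    conductances = [1.0 / r for r in path_resistances_ohm]
    total_conductance = sum(conductances)
    return [total_current_a * g / total_conductance for g in conductances]


# Hypothetical values: five slightly worn contacts and one very good one.
resistances = [0.015, 0.015, 0.015, 0.015, 0.015, 0.005]
currents = wire_currents(50.0, resistances)  # 50 A is 600 W at a nominal 12 V
for r, i in zip(resistances, currents):
    print(f"{r:.3f} ohm -> {i:.2f} A")
```

In this made-up case the one low-resistance path ends up with three times the current of each of the others, even though nothing is actually broken. Per-group shunts would at least limit how far that imbalance can go.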

Nope, he examined the damaged aftermarket cable to see if it was faulty but found nothing obviously faulty. The heated wire was with his own PSU, GPU, and stock cables.

This makes me feel marginally better about never being able to afford a 5090. Marginally.

If you don’t also want to afford a rather expensive CPU and a good 4k monitor, I wouldn’t worry about it in the first place.

A decent HDR (VESA HDR 1000 or better), high refresh rate (120Hz+), 4k monitor makes a bigger difference with a good-enough GPU. Although that is also to be taken with a grain of salt, because many games don’t support HDR and some don’t even support 4k or more than 60 Hz.

My monitor is pretty new, though only mid-market, bought with Black Friday discounts (below €300). 4K 144Hz. My CPU however is an 8086K. Was quite a beast when it came out but nowadays has noticeable issues pulling certain loads. GPU is a 2080ti, which would still be perfectly ok for HD gaming but not so much for 4K…

Before I’d buy something like a 5090, I’d definitely swap out that entire platform. There are AAA games where you’ll definitely end up CPU limited before you reach anywhere near 120 FPS. Spending 2k for 60 FPS feels wrong imo. Even if you take it to 120 or 240 with frame gen, you’ll get a worse image in return and then why even bother with 4k?

I thought you were joking about an https://en.wikipedia.org/wiki/Intel_8086 as your CPU. I didn’t realize Intel had reused the number for an i7.

They were like 100 bucks more expensive than the regular kind for about a 1 GHz boost out of the box without any tinkering. Worth it imho.