- cross-posted to:

- progressivepolitics

- [email protected]

Using Reddit’s popular ChangeMyView community as a source of baseline data, OpenAI had previously found that 2022’s ChatGPT-3.5 was significantly less persuasive than random humans, ranking in just the 38th percentile on this measure. But that performance jumped to the 77th percentile with September’s release of the o1-mini reasoning model and up to percentiles in the high 80s for the full-fledged o1 model.

So are you smarter than a Redditor?

This is the buried lede that’s really concerning, I think.

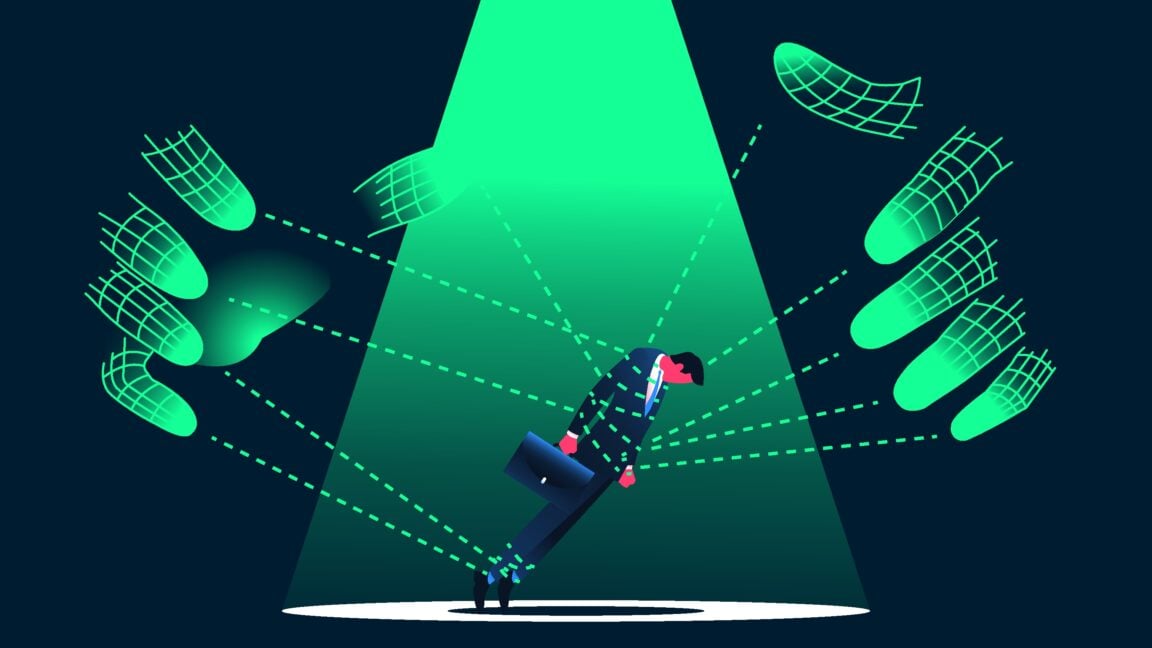

Their goal is to create AI agents that are indistinguishable from humans and capable of convincing people to hold certain positions.

Some time in the future, all online discourse may be just a giant AI-fueled tool sold to the highest bidders to manufacture consent.

A very large portion of people, possibly more than half, do change their views to fit in with everyone else. So an army of bots pretending to have a view will sway a significant portion of the population just through repetition and exposure with the assumption that most other people think that way. They don’t even need to be convincing at all, just have an overwhelming appearance of conformity.

So if a bunch of accounts on lemmy repeat an opinion that isn’t popular with people I meet IRL then that could be an attempt to change public opinion using bots on lemmy?

In the case of Lemmy, it is more likely that the members of communities are people, because the population is small enough that a mass influx of bots would be easy to notice compared to Reddit. Plus the Lemmy communities tend to have obvious rules and enforcement that filter out people who aren’t on the same page.

For example, you will notice some general opinions on .world and .ml and blahaj will fit their local instance culture and trying to change that with bots would likely run afoul of the moderation or the established community members.

It is far easier to utilize bots as part of a large pool of potential users compared to a smaller one.

It just has to be proportional. Reports on these bot farms have shown that they absolutely go into small niche areas to influence people. Facebook groups being one of the most notable that comes to mind.

What do you think are the views being promoted by bots on lemmy?

Are there accounts you think are bots, or are you assuming differing opinions from people you know in real life are bots? I know people who have wildly different views in real life, some of whom I avoid because of those views.

It is tough to say. But there are red flags. Like when an opinion on a post is repeated a lot by different accounts in the thread but is heavily downvoted, and an opposing opinion is heavily upvoted.

This is what I would expect to see if bots brigading a thread are using unpopular talking points.

For example, I see it a lot in anti-DNC threads, with the same accounts posting similar comments throughout multiple reposts of a single post. If I had to guess what views they are trying to promote, I would say they seem to be trying to discourage Democrats from voting by sowing apathy, aka FUD.

The exact same scenario plays out when .ml users chime in on a .world news thread about China/Russia, and the reverse happens too. On .world the .ml tankies get downvoted into the ground, and on .ml the .world users who call out tankie shit get banned. That is an instance cultural clash that fits the exact scenario.

For the anti-Dem stuff, plenty of us who vote for them don’t actually like them, and it doesn’t take bots to drum up votes for posts that criticize them, but we will downvote the ones that seem to be discouraging others from voting Dem. If they were brigading, then the anti-Dem posts would get upvoted even more on .world.

There are likely to be malicious actors, and probably some vote manipulation. But overall it seems far more likely that on Lemmy the vast majority are still real users both posting and voting, and that the malicious actors who exist are trying to sway opinion directly instead of through bots.

I’m sure some of it is organic, but there have been times when I see a post and read the comments and they are all talking in a way that pushes a similar opinion. Then I see the same post reposted and notice the same accounts using similar comments, if not exactly the same ones. Oftentimes they are overly prepared with links and info dumping, like a lot of effort was made to support their opinion. It is very sus.

We may not be able to verify when it is happening but we absolutely do know there are organized efforts to shape public opinion by using multiple accounts to push talking points. And this is done by many different types of organizations, from countries like China and Russia, but also by companies like Monsanto or the fossil fuel industry. It has been happening rampantly for years.

It’s no surprise that social media companies are working on AI. Their platforms are no longer social; they are just tools to control public opinion.

Other governments and oligarchs will pay any money to have that kind of power.

It already is, at least on Armenia and Azerbaijan. EDIT: I mean, the bots were crude-ish, but they don’t have to get better. Harder goals - better bots.