As the AI market continues to balloon, experts are warning that its VC-driven rise is eerily similar to that of the dot com bubble.

AI is bringing us functional things though.

.Com was about making webtech to sell companies to venture capitalists who would then sell the company to a bigger company. It was literally about window dressing garbage to make a business proposition.

Of course there’s some of that going on in AI, but there’s also a hell of a lot of deeper opportunity being made.

What happens if you take a well-done video college course, every subject, and train an AI that’s both good at working with people in a teaching frame and properly versed in the subject matter? You take the course, and in real time you can stop it and ask the AI teacher questions. It helps you, responding exactly to what you ask, and then gives you a quick quiz to make sure you understand. What happens when your class doesn’t need to be at a certain time of the day or night? What happens if you don’t need an hour and a half to sit down and consume the data?

What if secondary education is simply one-on-one tutoring with an AI? How far could we get as a species if this was given to the world freely? What if everyone could advance as far as their interest let them? What if AI translation gets good enough that language is no longer a concern?

AI has a lot of the same hallmarks and a lot of the same investors as crypto and half a dozen other partially or completely failed ideas. But there’s an awful lot of new things that can be done that could never be done before. To me that signifies there’s real value here.

*dictation fixes

In the dot com boom we got sites like Amazon, Google, etc. And AOL was providing internet service. Not a good service. AOL was insanely overvalued, (like insanely overvalued, it was ridiculous) but they were providing a service.

But we also got a hell of a lot of businesses which were just “existing business X… but on the internet!”

It’s not too dissimilar to how it is with AI now really. “We’re doing what we did before… but now with AI technology!”

If it follows the dot com boom-bust pattern, there will be some companies that will survive it and they will become extremely valuable in the future. But most will go under. This will result in an AI oligopoly among the companies that survive.

AOL was NOT a dotcom company. It was already far past its prime when the bubble was in full swing, still attaching CD-ROMs to blocks of Kraft cheese.

The dotcom boom generated an unimaginable number of absolute trash companies. The company I worked for back then had its entire schtick based on taking a lump sum of money from a given company, giving them a sexy Flash website and connecting them with angel investors for a cut of their ownership.

Photoshop currently using AI to get the job done is more of an advantage than 99% of the garbage that was wrought forth and died on the vine in the early 00’s. Topaz Labs can currently take a poor copy of VHS video uploaded to YouTube and turn it into something nearly reasonable to watch in HD. You can feed rough drafts of performance reviews or apologetic letters to people through ChatGPT and end up with nearly professional quality copy that iterates your points more clearly than you’d manage yourself with a few hours of review. (At least it does for me.)

Those companies born around the dotcom boom that persist didn’t need the dotcom boom to persist; they were born from good ideas and had a good foundation.

There’s still a lot to come out of the AI craze. Even if we stopped where we are now, upcoming advances in the medical field alone will have a bigger impact on human quality of life than 90% of those 00’s money grabs.

.com brought us functional things. This bubble is filled with companies dressing up the algorithms they were already using as “AI” and making fanciful claims about their potential use cases, just like you’re doing with your AI example. In practice, that’s not going to work out as well as you think it will, for a number of reasons.

Gentleman’s bet: there will be AI teaching college-level courses, augmenting video classes, within 10 years. It’s a video class that already exists, coupled with a helpdesk bot that already exists, trained against tagged text material that already exists. They just need more purpose-built non-AI structure to guide it all along the rails and oversee the process.

@linearchaos How can a predictive text model grade papers effectively?

What you’re describing isn’t teaching, it’s a teacher using an LLM to generate lesson material.

Absolutely not, the system guides them through the material and makes sure they understand the material, how is that not teaching?

In the current state people can take classes on, say, Zoom, formulate a question, and then type it into Google, which pulls up an LLM-generated search result from Bard.

Is there profit in generating an LLM application on a much narrower set of training data to sell it as a pay-service competitor to an ostensibly free alternative? It would need to be pretty significantly more efficient or effective than the free alternative. I don’t question the usefulness of the technology since it’s already in use, just the business case feasibility amidst the competitive environment.

Yeah, current LLMs aren’t tuned for it. Not to say there’s no advantage to using one of them while in an online class. Under the general training, there’s no telling what it’s sourcing from. You could be getting an incomplete or misleading picture. If you’re teaching, you should be pulling information from teaching-grade materials.

IMO, there are real and serious advantages to not using live classes. Firstly, you don’t want people to be forced into a specific class time. Let the early birds take it when they wake, let the night owls take it at 2am. Whenever people are on top of their game. If a parent needs to watch the kids 9-5, let them take their classes from 6-10. Forget all these fixed timeframes. If you get sick, or go on vacation, pause the class. When you get back, have it talk to you about the finer points of the material you might have forgotten and see if you still understand the material. You need something that’s capable of gauging if a response is close enough to the source material. LLMs can already do this to an extent, but their source material can be unreliable, and if they screw with the general training it could have adverse effects on your system. You want something bottled, pinned at your version, so you can provide consistent results and properly QA changes.

I tested GPT the other week by making up some open questions about IT support, then I wrote it answers of varied quality. It was able to tell me which answers were proper and which were not great. I asked it to tell me if the responses indicated knowledge of the given topic and it was able to answer correctly in my short test. It not only told me which answers were good and why, but it conveyed concerns about the bad answers. I’d want to see it more thoroughly tested and probably have a separate process wrapped around it, watching the grading.

What I’d like to see is a class given by a super good instructor. You know, those superstars out there that make it interesting and fun, Feynman kinds of people. If you don’t interrupt it, after every new concept or maybe every couple (maybe you tune that to an indication of how well they’re doing) you throw them a couple of open-ish questions about the content to gauge how well they understand it. As the person watches the course, it tracks their knowledge on each section. When it detects a section where they don’t get it, or could get it better, it spends a couple of minutes trying different approaches. Maybe it cues up a different short video if it’s a common point of confusion, or maybe it flags them to work with a human tutor on a specific topic. If the tutor finds a deficiency, the next time someone has a problem right there, before it throws in the towel, it makes sure that the student doesn’t have the same deficiency. If it’s a common problem, they throw in an appendix entry and have the user go through it.

As it sits now, a lot of people perform marginally in school because of fixed hours or because they don’t want to stop the class for 5 minutes because they missed a concept three chapters ago when they had to take an emergency phone call or use the facilities. Some are just bad under test-taking stress. You could make testing continuous and as easy as having a conversation. Someone who lives in the middle of rural Wisconsin could have access to the same level and care of teaching as someone in the suburbs. Kids with learning challenges currently get dumped into classes of kids with learning challenges. The higher functioning ones get kinda screwed as the ones with lower skills eat up the time. Hell, even in my first CompSci class, the first three classes were full of people that couldn’t understand variables. The second the professor moved on to endianness the hands shot up and nothing else was done for the class period. He literally just repeated himself all class long and assigned us to do all the class training at home.

The tools to do all this are already here, just not in a state to do the job. Some place like the Gates Foundation could just go, you know, yeah, let’s do this.

The thing that guides them along won’t even be AI, it’ll just be a structured program, the AI comes in to prompt them to answer ongoing questions and to figure out if they were right or to help them understand something they don’t get and gauge their competency.
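To make that split concrete, here is a rough Python sketch, not anyone's actual product. The plain, non-AI code owns the flow (ask, retry with a different approach, flag for a human tutor), and the AI only shows up as a `grade` function you plug in. Every name here (`Section`, `Progress`, `run_section`, `keyword_grader`) is made up for illustration, and `keyword_grader` is a toy word-overlap stand-in for wherever a pinned, QA'd model would actually be called.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical grader signature: (question, reference_answer, student_answer) -> score in [0, 1].
# A real system would call a version-pinned model here; that part is an assumption.
Grader = Callable[[str, str, str], float]


@dataclass
class Section:
    name: str
    question: str
    reference_answer: str
    remedial_hints: List[str] = field(default_factory=list)


@dataclass
class Progress:
    scores: Dict[str, float] = field(default_factory=dict)
    flagged_for_tutor: List[str] = field(default_factory=list)


def keyword_grader(question: str, reference: str, answer: str) -> float:
    """Toy stand-in for the AI grader: fraction of reference words found in the answer."""
    ref_words = set(reference.lower().split())
    ans_words = set(answer.lower().split())
    return len(ref_words & ans_words) / len(ref_words) if ref_words else 0.0


def run_section(
    section: Section,
    ask: Callable[[str], str],
    grade: Grader,
    progress: Progress,
    pass_mark: float = 0.6,
) -> bool:
    """Plain control flow: ask, grade, retry once per hint, then escalate to a human."""
    prompts = [section.question] + [
        f"{hint} {section.question}" for hint in section.remedial_hints
    ]
    best = 0.0
    for prompt in prompts:
        answer = ask(prompt)
        best = max(best, grade(section.question, section.reference_answer, answer))
        if best >= pass_mark:
            break
    progress.scores[section.name] = best
    passed = best >= pass_mark
    if not passed:
        # Nothing worked, so hand this topic to a human tutor.
        progress.flagged_for_tutor.append(section.name)
    return passed


if __name__ == "__main__":
    section = Section(
        name="variables",
        question="What is a variable?",
        reference_answer="a named storage location holding a value",
        remedial_hints=["Think of a labelled box."],
    )
    canned_answers = iter(["no idea", "a named location holding a value"])
    progress = Progress()
    passed = run_section(section, lambda prompt: next(canned_answers), keyword_grader, progress)
    print(passed, progress.flagged_for_tutor)
```

The point of the shape is that you can swap the grader, pin it, and QA it without touching the part that walks students through the course.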

I think the platform is sellable. I think if anyone had access to something that did this (perhaps without accreditation) it would be a boon to humanity.

The Internet also brought us a shit ton of functional things. The dot com bubble didn’t happen because the Internet wasn’t transformative or incredibly valuable, it happened because for every company that knew what they were doing there were a dozen companies trying something new that may or may not work, and for every one of those companies there were a dozen companies that were trying but had no idea what they were doing. The same thing is absolutely happening with AI. There’s a lot of speculation about what will and won’t work, and many companies will bet on the wrong approach and fail. There are also a lot of companies vastly underestimating how much technical knowledge is required to make AI reliable for production, and they are going to fail because they don’t have the right skills.

The only way it won’t happen is if the VCs are smarter than last time and make fewer bad bets. And that’s a big fucking if.

Also, a lot of the ideas that failed in the dot com bubble weren’t actually bad ideas, they were just too early and the tech wasn’t there to support them. There were delivery apps for example in the early internet days, but the distribution tech didn’t exist yet. It took smart phones to make it viable. The same mistakes are ripe to happen with ai too.

Then there’s the companies that have good ideas and just underestimate the work needed to make it work. That’s going to happen a bunch with AI because prompts make it very easy to come up with a prototype, but making it reliable takes seriously good engineering chops to deal with all the times AI acts unpredictably.

I’d like some samples of that: a company attempting something transformative back then that may or may not work, and that didn’t work. I was working for a company that hooked ‘promising’ companies up with investors. No shit, that was our whole business plan: we redress your site in Flash, put some video/sound effects in, and help sell you to someone with money looking to buy into the next Google. Everything that was ‘throwing things at the wall to see what sticks’ was a thinly veiled grift for VC. Almost no one was doing anything transformative. The few things that made it (eBay, Google, Amazon) were using engineers to solve actual problems: online shopping, online auctions, natural language search. These are the same kinds of companies that continue to spring into existence after the crash.

It’s the whole point of the bubble. It was a bubble because most of the money was going into pockets, not into making anything. People were investing in companies that didn’t have a viable product and had no intention beyond getting bought by a big dog and making a quick buck. There wasn’t all of a sudden this flood of inventors making new and wonderful things, unless you count new and amazing marketing cons.

There are two kinds of companies in tech: hard tech companies who invent it, and tech-enabled companies who apply it to real world use cases.

With every new technology you have everyone come out of the woodwork and try the novel invention (web, mobile, crypto, ai) in the domain they know with a new tech-enabled venture.

Then there’s an inevitable pruning period when some critical mass of mismatches between new tool and application run out of money and go under. (The beauty of the free market)

AI is not good for everything, at least not yet.

So now it’s AI’s time to simmer down and be used for what it’s actually good at, or continue as niche hard-tech ventures focused on making it better at those things it’s not good at.

I absolutely love how crypto (blockchain) works but have yet to see a good use case that’s not a pyramid scheme. :)

LLM/AI will never be good for everything. But it’s damn good at a few things now and it’ll probably transform a few more things before it runs out of tricks or actually becomes AI (if we ever find a way to make a neural network that big before we boil ourselves alive).

The whole quantum computing thing will get more interesting shortly, as long as we keep finding math tricks it’s good at.

I was around and active for dotcom. I think right now the tech is a hell of a lot more interesting and promising.

Crypto is very useful in defective economies, such as in South America, to compensate for the flaws of a crumbling financial system. It’s also, sadly, useful for money laundering.

For these 2 uses, it should stay functional.

Removed by mod

Essentially we have invented a calculator of sorts, and people have been convinced it’s a mathematician.

Removed by mod

Since the iteration we have, one that’s designed for general purpose language modeling and is trained widely on every piece of data in existence, can’t do exactly one use case, you can’t conceive that it can ever be done with the technology? GTHO. It’s not like we’re going to say “ChatGPT, teach kids how LLMs work,” but rather some more structured program that uses something like ChatGPT for communication. This is completely reasonable.

A. It’s my opinion, but I think you’re dead wrong and it’s easily profitable. If not to Ivy League standards, it would certainly put community college out of business.

B. Screw profit. Philanthropic investment throws a couple billion into a nonprofit run by someone who wants to see it happen.

You think an Ivy League school is above selling a light model of their courseware when they don’t have to pay anyone to teach the classes or grade the work? Check out Harvard University edX. It’s not a stretch.

Ohh a secret third problem, that sounds fun. I’ll let you in on another secret, AI isn’t worried because it’s a very large complicated math program. It doesn’t worry about communicating clearly, the people who pile on layer upon layer of LLM to produce output do that. It doesn’t give a damn about anything, but the people who work on it do.

You want clarity?

Let’s have GPT-4, as it sits, clear up your complaint about my post:

"Here is a revised version of your text that sounds more educated:

There are two primary issues with the notion of using artificial intelligence as a tutor or teacher. Firstly, artificial intelligence is not infallible and can make mistakes. Consistency and correctness are integral components of pedagogy, and artificial intelligence is not yet capable of achieving these standards. As such, it is not suitable for the role you have suggested.

Secondly, even if artificial intelligence were capable of fulfilling this role (which it is not, due to technological limitations), it would not be a profitable endeavor. Replacing public school teachers with artificial intelligence would not align with the goals of those seeking to reform the public school system. The aim is to streamline the process from birth to the workplace, and an artificial intelligence nanny would not facilitate this.

Furthermore, the private school class would not be interested in such a proposal. They are currently opposed to subjecting their children to such technology and are willing to pay for the best educators available. Precision mass-produced automation cannot compete with bespoke handcrafted goods.

There is also a third issue to consider: artificial intelligence is not concerned with precision or clear communication. Instead, it focuses on what “feels” right in a given situation. Is this the type of teacher we want for any form of education?"

or perhaps you’d like to have it communicate clearly to younger people

"Sure, here is a revised version of the text written at a 5th-grade level:

There are two big problems with using computers to teach kids. The first problem is that computers can make mistakes. Teachers need to be right all the time, and computers aren’t always right. So, they can’t do the job you want them to do.

The second problem is that even if computers could do the job (they can’t, but let’s pretend), it wouldn’t make money. People who want to change schools don’t want to use computers to teach kids everything. They want to make it easier for kids to get jobs when they grow up. A computer nanny can’t do that.

Rich kids who go to private schools wouldn’t like it either. Their parents pay a lot of money for the best teachers. They don’t want a computer nanny. You can’t sell something cheap and easy to make to someone who wants something special and handmade.

There’s also a secret third problem. Computers don’t care about being right or explaining things clearly. They just do what they think is best at the moment. Is that the kind of teacher you want? For any kind of learning?"

Removed by mod

I’ve seen your post history, comical that you’d talk to me about tone.

Removed by mod

What do you want me to do here? Go through each line item where you called out something on a guess that’s inherently incorrect and try to find proper citations? Would you like me to take the things where you twisted what I said, and point out why it’s silly to do that?

I could sit here for hours and disprove and anti-fallacy you, but in the end, you don’t really care, you’ll just move the goal post. Your world view is AI is a gimmick and nothing that I present to you is going to change that. You’ll just cherry pick and contort what I say until it makes you feel good about AI. It’s a fool’s errand to try.

Things are nowhere near as bad as you say they are. What I’m calling for is well within the possible realm of the tech with natural iteration. I’m not giving you any more of my time. Any further conversation will just go unread and blocked.

Removed by mod

This weekend my aunt got a room at a very expensive motel, and was delighted by the fact that a robot delivered amenities to her room. And at breakfast we had an argument about whether or not it saved the hotel money to use the robot instead of a person.

But the bottom line is that the robot was only in use at an extremely expensive hotel and is not commonly seen at cheap hotels. So the robot is a pretty expensive investment, even if it saves money in the long run.

Public schools are NEVER going to make an investment as expensive as an AI teacher, it doesn’t matter how advanced the things get. Besides, their teachers are union. I will give you that rich private schools might try it.

Single robot, single hotel = bad investment.

Single platform teaching an unlimited number of users anywhere in the world for whatever price can provide the R&D and upkeep. Greed would make it expensive if it can, it doesn’t have to be.

You get stupid-ass students because an AI producing word-salad is not capable of critical thinking.

It would appear to me that you’ve not been exposed to much in the way of current AI content. We’ve moved past the shitty news articles from 5 years ago.

Five years ago? Try last month.

Or hell, why not try literally this instant.

You make it sound like the tech is completely incapable of uttering a legible sentence.

In one article you have people actively trying to fuck with it to make it screw up. And in your other example you picked the most unstable of the new engines out there.

OMG, it answered a question wrong once. The tech is completely unusable for anything. Throw it away, throw it away.

I hate to say it, but the sky’s not falling. The tech is still usable, and it’s actually the reason why I said we need to have a specialized model to provide the raw data and grade the responses, using the general model only for conversation and gathering bullet points for the questions and responses. It’s close enough to flawless at that that it’ll be fine with some guardrails.

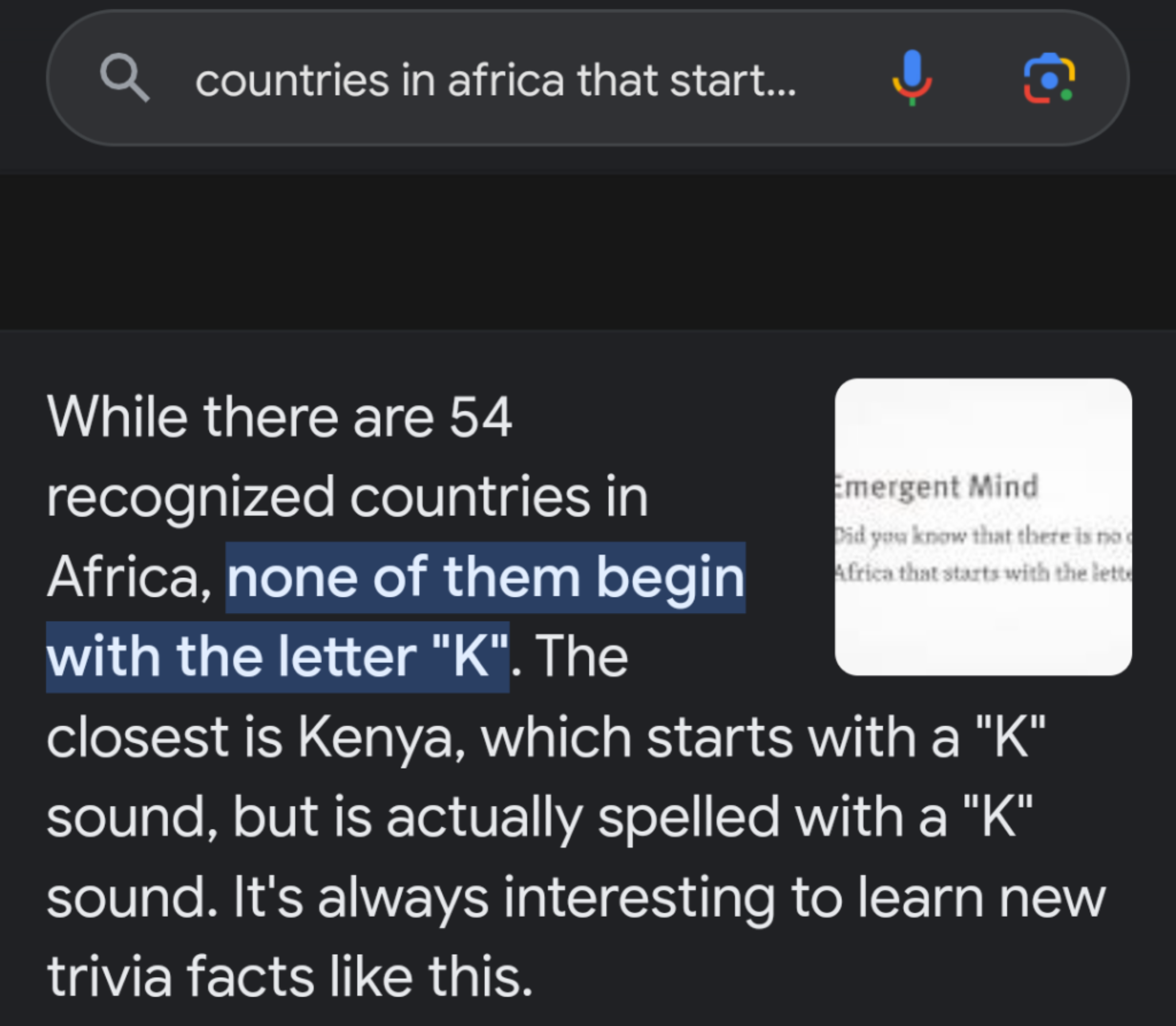

Oh, please. AI does shit like this all the time. Ask it to spell something backwards, it’ll screw up horrifically. Ask it to sort a list of words alphabetically, it’ll give shit out of order. Ask it something outside of its training model, and you’ll get a nonsense response because LLMs are not capable of inference and deductive reasoning. And you want this shit to be responsible for teaching a bunch of teenagers? The only thing they’d learn is how to trick the AI teacher into writing swear words.

Having an AI for a teacher (even as a one-on-one tutor) is about the stupidest idea I’ve ever heard of, and I’ve heard some really fucking dumb ideas from AI chuds.