Also check out LM Studio and GPT4All. Both of these let you run private ChatGPT alternatives from Hugging Face off your RAM and CPU (they can also offload to the GPU).

I’d also recommend Oobabooga if you’re already familiar with Automatic1111 for Stable Diffusion. I have found that being able to write the first part of the bot’s response gets much better results and seems to make it invent false info much less often.
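For anyone wondering what "writing the first part of the bot's response" looks like under the hood: the front end sends the model a raw prompt in which the assistant's turn has already started, so the model has to continue your opening instead of picking its own. A minimal sketch, assuming a made-up chat template (real models each define their own format, so check the model card):

```python
# Hypothetical chat template: real models each define their own format
# (Mistral, Llama, ChatML, ...), so check the model card before reusing this.
TEMPLATE_USER = "### User:\n{text}\n"
TEMPLATE_BOT = "### Assistant:\n"


def build_prefilled_prompt(user_message: str, reply_start: str) -> str:
    """Return a raw completion prompt whose assistant turn is already begun.

    The model continues from `reply_start` instead of choosing its own opening.
    """
    return TEMPLATE_USER.format(text=user_message) + TEMPLATE_BOT + reply_start


if __name__ == "__main__":
    prompt = build_prefilled_prompt(
        "What is the boiling point of water at sea level?",
        "At standard atmospheric pressure, water boils at",
    )
    print(prompt)
```

The prompt then goes to the model as plain text completion, not as a chat message, which is why this trick is easiest in front ends that let you edit the raw reply.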

And llamafile, which is a chatbot in a single executable file.

I feel like you’re all making these names up… but they were probably all suggested by an LLM…

Are they as good as ChatGPT?

Mistral is thought to be almost as good. I’ve used the latest version of Mistral and found its output more or less identical in quality.

It’s not as fast, though, since I’m running it off 16 GB of RAM and an old GTX 1060.
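Running off RAM with help from an old GPU usually means splitting the model: llama.cpp-based tools put some of the layers in VRAM and run the rest on the CPU. Here is a back-of-the-envelope sketch of how many layers fit, assuming the weights are spread evenly across layers and reserving an arbitrary 1 GB for context and overhead; the model size and layer count in the usage are hypothetical:

```python
import math


def layers_that_fit(vram_gb: float, model_size_gb: float, n_layers: int,
                    reserve_gb: float = 1.0) -> int:
    """Rough count of transformer layers whose weights fit in VRAM.

    Assumes the weights are split evenly across layers and keeps `reserve_gb`
    free for the context (KV cache) and driver overhead. Real usage varies.
    """
    per_layer_gb = model_size_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, math.floor(usable_gb / per_layer_gb))


if __name__ == "__main__":
    # Hypothetical numbers: an ~8 GB quantized model with 40 layers on a 6 GB card.
    n = layers_that_fit(vram_gb=6.0, model_size_gb=8.0, n_layers=40)
    print(f"Offload about {n} of 40 layers to the GPU; the rest run on the CPU.")
```

Every layer left on the CPU slows generation down, which is why a partially offloaded model works but is noticeably slower.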

If you use LM Studio, I’d say it’s actually better, because you can give it a pre-prompt so that all of its answers stay within predefined guardrails (e.g. you are glorb the cheese pirate and you have a passion for mink fur coats).
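The pre-prompt is what OpenAI-style APIs call a "system" message. A minimal sketch of sending one, assuming you have enabled LM Studio's local server, which speaks an OpenAI-compatible chat API; the URL and port 1234 are assumptions to check against your install, and "local-model" is a placeholder name:

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at this address.
# Check the server settings in LM Studio; the port may differ on your setup.
BASE_URL = "http://localhost:1234/v1"


def build_chat_payload(system_prompt: str, user_message: str,
                       model: str = "local-model") -> dict:
    """Build an OpenAI-style chat request with a system ("pre-prompt") message."""
    return {
        "model": model,  # placeholder; use the identifier your server reports
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_message},
        ],
        "temperature": 0.7,
    }


def send_chat(payload: dict) -> str:
    """POST the payload to the local server and return the reply text."""
    req = urllib.request.Request(
        BASE_URL + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

Usage, with the server running: `send_chat(build_chat_payload("You are glorb the cheese pirate.", "Tell me about your ship."))`. Because the system message rides along with every request, every answer stays in character.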

There’s also the benefit of being able to load in uncensored models if you would like questionable content created (erotica, sketchy instructions on how to synthesize crystal meth, etc).

I’m sure that meth is for personal use right? Right?

Absolutely. Synthesizing hard drugs is time consuming and a lot of hard work. Only I get to enjoy it.

No one gets my mushrooms either ;)

brf tek says hi

I just buy my substrate online. I’m far less experimental than most. I just want it to work in a consistent way that yields an amount I can predict.

What I really want to grow is Peyote or San Pedro, but the slow growth and lack of sun in my location would make that difficult.

Whazzat? I’ve only met Uncle Ben!

Even though growing mushrooms is almost the easiest thing to do on the planet

Exactly, grow your own.

Can you provide links for those? I see a few and don’t trust search results.

They’re the first results on all major search engines.

You can search inside LM Studio for “uncensored” or “roleplay”. Select the size you want, then it’s all good from there.

No.

Depends on your use case. If you want uncensored output then running locally is about the only game in town.

Something I am really missing is a breakdown of how good these models actually are compared to each other.

A demo on Hugging Face couldn’t tell me the boiling point of water, while the author’s own example prompt asked for the boiling point of some chemical.

Maybe you could ask for the boiling point of dihydrogen monoxide (DHMO), a very dangerous substance.

More info at DHMO.org

I asked about H2O first but got no proper answer.

I heard dihydrogen monoxide has a melting point below room temperature, and they seem to find it everywhere, causing huge oxidation damage to our infrastructure. It’s even found inside our crops.

Truly scary stuff.

I can’t find a way to run any of these on my home server and access them over HTTP. It looks like it is possible, but you need a GUI to install it in the first place.

ssh -X

(Edit: there was wrong information here. I apologize to the OP!)

Plus, a GUI install is not exactly great for reproducibility, which I, at least, aim for with my server infrastructure.

You don’t need to run an X server on the headless server. As long as the libraries are compiled into the client software (the GUI app), it will work. No GUI would need to be installed on the headless server, and the libraries are already present in any common Linux distro (and support would be compiled into a GUI-only app unless it was Wayland-only).

I agree that a GUI-only installer is a bad thing, but the parent was saying they didn’t know how it could be done. “ssh -X” (or -Y) is how.

That’s a huge today-I-learned for me, thank you! I think I’ll throw xeyes on it just to use ssh -X for the first time in my life. I actually assumed wrong.

I’ll edit my post accordingly!

KoboldCpp

Open source good, together monkey strong 💪🏻

Build cool village with other frens, make new things, celebrate as village

Apes together *

See case in point

It’s free / libre software, which is even better, because it gives you more freedom than just ‘open-source’ software. Make sure to check the licenses of the software you use. Anything under the GPL, MIT, or Apache 2.0 license is Free Software. Anyways, together monkey strong 💪

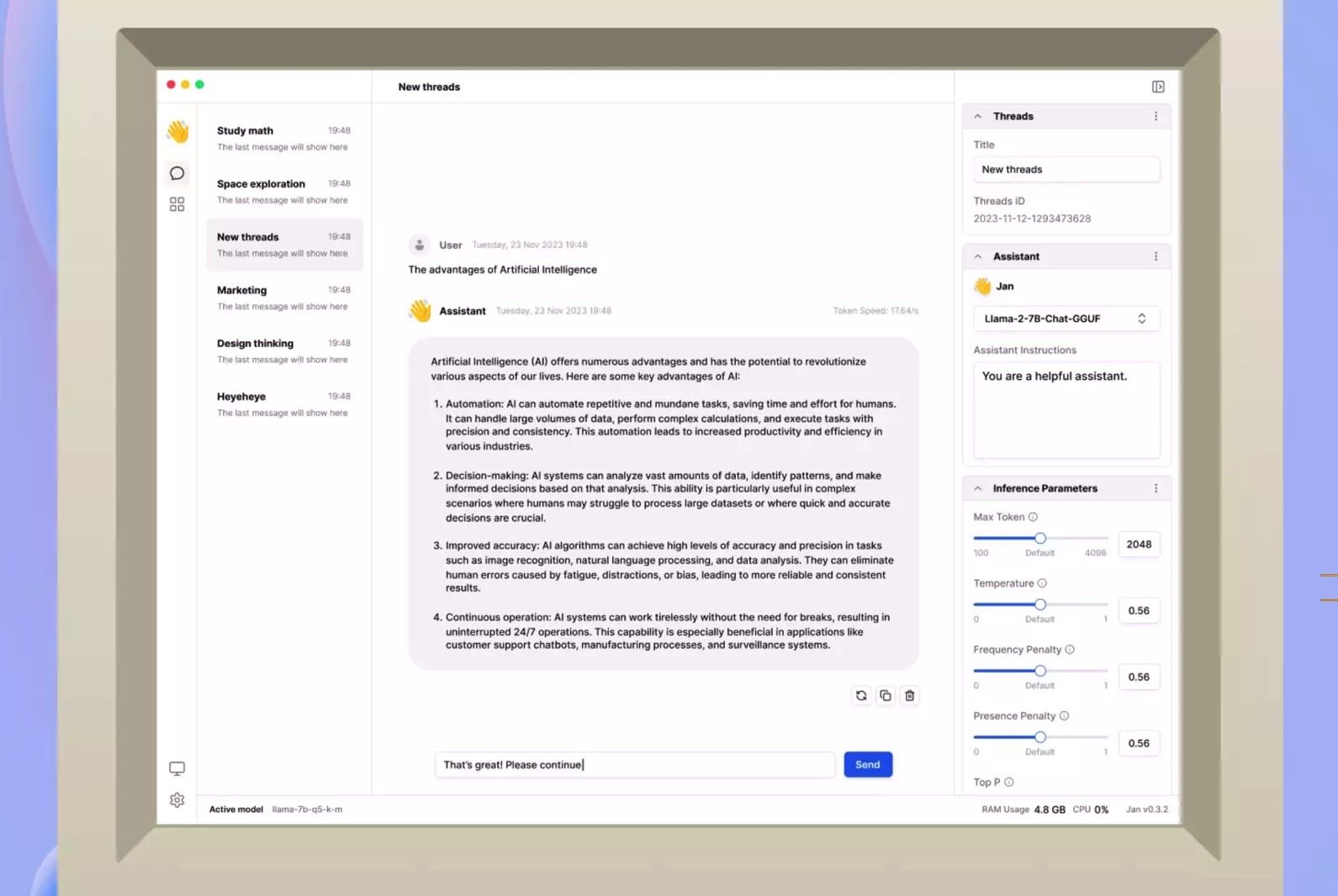

It seems like usually when an LLM is called “Open Source”, it’s not. It’s refreshing to see that Jan actually is, at least.

Jan is just a frontend. It supports various models under multiple licenses. It also supports some proprietary models.

Sure, Jan.

Marsha Marsha Marsha!

I would also recommend faraday.dev as a way to try out different models locally using either CPU or GPU. I believe they have a build for every desktop OS.

I have recently been playing with llamafiles, particularly LLaVA, which, as far as I know, is the first multimodal open-source LLM (others might exist; this is just the first one I have seen). I was having it look at pictures of prospective houses I want to buy and asking it if it sees anything wrong with the house.

The only problem I ran into is that the Windows 10 cmd doesn’t like the sed command, and I don’t know of an alternative.

Would it help to run it under WSL?

WSL2

It might be a good idea to use Windows Terminal or Cmder and WSL instead of the Windows shells.

Install Cygwin and put it in your path.

You can use grep, awk, sed, etc. from either bash or the Windows command prompt.

Powershell, maybe?

sd is written in Rust and is cross-platform: https://github.com/chmln/sd

Does awk run on windows?

Wait, can you just install sed?

If you can find a copy, yeah. GNU sed isn’t written for Windows, but I’m sure you can find another version of sed that targets Windows.
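Another cross-platform option, if Python is already installed: the thread doesn't say which sed command was failing, so this is a hypothetical stand-in for a typical in-place substitution like `sed -i 's/old/new/g' file`. It uses Python's `re` regex syntax, which differs from sed's default basic regexes (groups use bare parentheses, for example):

```python
import re
from pathlib import Path


def sed_substitute(path: str, pattern: str, replacement: str) -> int:
    """Rough stand-in for `sed -i 's/pattern/replacement/g' path`.

    Uses Python's `re` syntax rather than sed's basic regex syntax.
    Rewrites the file in place and returns the number of replacements made.
    """
    file = Path(path)
    text = file.read_text(encoding="utf-8")
    new_text, count = re.subn(pattern, replacement, text)
    file.write_text(new_text, encoding="utf-8")
    return count
```

Usage: `sed_substitute("settings.txt", r"foo", "bar")` replaces every match, like sed's `g` flag. It runs the same from cmd, PowerShell, or WSL.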

Any recommendations from the community for models? I use ChatGPT for light work like touching up a draft I wrote, etc. I also use it for data related tasks like reorganization, identification etc.

Which model would be appropriate?

Mistral-7B is a good compromise between speed and intelligence. Grab it as a 4-bit GPTQ quant.
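"4-bit" means each weight is stored as a small integer plus a shared scale factor instead of a 16-bit float. The sketch below is plain round-to-nearest quantization, NOT GPTQ itself (GPTQ additionally compensates for rounding error as it quantizes each layer); it only illustrates the storage idea:

```python
from __future__ import annotations


def quantize_4bit(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric round-to-nearest 4-bit quantization of one group of weights.

    Maps each weight to an integer in [-8, 7] sharing one float scale.
    This is NOT GPTQ; it only shows the basic storage trick.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7 if max_abs > 0 else 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    return q, scale


def dequantize_4bit(q: list[int], scale: float) -> list[float]:
    """Recover approximate weights from 4-bit integers and the shared scale."""
    return [v * scale for v in q]
```

Each weight now costs 4 bits plus a small share of the scale, roughly a quarter of 16-bit storage, at the price of a rounding error of at most half a scale step per weight.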

Okie dokie.


Is it as good as ChatGPT?

The question is quickly answered as none is currently that good, open or not.

Anyway it seems that this is just a manager. I see some competitors available that I have heard good things about, like mistral.

Local LLMs can beat GPT 3.5 now.

I think a good 13B model running on 12GB of VRAM can do pretty well. But I’d be hard pressed to believe anything under 33B would beat 3.5.

Asking as someone who doesn’t know anything about any of this:

Does more B mean better?

B stands for billion (parameters), IIRC.
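More B also means more memory. A rough rule of thumb for the weights alone is parameters × bits per weight ÷ 8 bytes, which is why a 13B model quantized to 4 bits (about 6.5 GB of weights) fits in 12 GB of VRAM while the same model at 16 bits (about 26 GB) does not. A sketch of that arithmetic, ignoring the context cache and runtime overhead:

```python
def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes).

    Ignores the KV cache, activations and runtime overhead, which add more.
    """
    bytes_total = params_billion * 1e9 * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for size in (7, 13, 33):
        for bits in (16, 4):
            print(f"{size}B at {bits}-bit: ~{weight_memory_gb(size, bits):.1f} GB")
```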

3.5 fuckin sucks though. That’s a pretty low bar to set imo.

Many are close!

In terms of usability though, they are better.

For example, ask GPT-4 for an example of cross-site scripting in Flask and you’ll get an ethics discussion. Grab an uncensored model off Hugging Face and you’re off to the races.

Seems interesting! Do I need high end hardware or can I run them on my old laptop that I use as home server?

Oh no you need a 3060 at least :(

Requires CUDA. They’re essentially large mathematical equations that compute the probability of the next word.
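The last step of "computing the probability of the next word" is a softmax: the model outputs a raw score (logit) for every token in its vocabulary, and softmax turns those scores into probabilities that sum to 1. A toy sketch with three made-up candidate tokens; nothing here needs CUDA, a GPU just does this kind of arithmetic much faster at scale:

```python
from __future__ import annotations

import math


def next_token_probabilities(logits: dict[str, float]) -> dict[str, float]:
    """Turn raw scores (logits) for candidate tokens into probabilities with softmax."""
    max_logit = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - max_logit) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


if __name__ == "__main__":
    # Made-up scores a model might assign after "The cat sat on the"
    probs = next_token_probabilities({"mat": 3.0, "sofa": 2.0, "moon": -1.0})
    for tok, p in sorted(probs.items(), key=lambda kv: -kv[1]):
        print(f"{tok}: {p:.3f}")
```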

The equations are derived by trying different combinations of values until one works well. (This is the learning in machine learning). The trick is changing the numbers in a way that gets better each time (see e.g. gradient descent)
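Here is the gradient descent idea in miniature: fit a line y = w·x + b by repeatedly nudging w and b against the gradient of the squared error. Real training does this over billions of parameters, with the gradients computed by backpropagation, so treat this as a toy illustration of the loop rather than how an LLM is actually trained:

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of MSE = mean((w*x + b - y)^2) with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient so the error shrinks each time
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs = [0, 1, 2, 3, 4]
    ys = [2 * x + 1 for x in xs]  # data generated from y = 2x + 1
    w, b = gradient_descent(xs, ys)
    print(f"w = {w:.3f}, b = {b:.3f}")
```

The learning rate matters: too large and the updates overshoot and diverge, too small and it takes forever to converge.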

How’s the guy who said he’s running off a 1060 doing it?

Slowly

Then you don’t need a 3060 at least

Oh this is unfortunate ahahahaha

Thanks for the info!

savedyouaclick https://jan.ai/

But is it FOSS?

Meet Jan, the open-source ChatGPT alternative

Yes, the article is pretty good

Sick burn

And well deserved too. I’m not even mad. I really should real the actual article.

I really should real the actual article.

And proofread.

It’s literally in the goddamn title, just click the fucking link Jesus Christ

Shit, don’t even have to click the link it’s in the fuckin url