The first salvo of RTX 50 series GPUs will arrive in January, with pricing starting at $549 for the RTX 5070 and topping out at an eye-watering $1,999 for the flagship RTX 5090. In between those are the $749 RTX 5070 Ti and $999 RTX 5080. Laptop variants of the desktop GPUs will follow in March, with pricing there starting at $1,299 for 5070-equipped PCs.

Yeah, sure, the 5090 will be $2k the same way the 3080 went for $800… I watched them peak at $3,500 (seriously, I screenshotted it, but it got lost when I gave up the salt).

The 4090 is sitting at 2,400 ($2,500) right now over here; I can 100% assure you the 5090 will cost more than that when it gets here.

Where is “over here”? I want to sell my 4090 to your people and turn a nice profit.

That 3.5k was in the middle of a shortage at the height of an Ethereum mining boom though.

I know it’s an extreme, but current prices are still extreme.

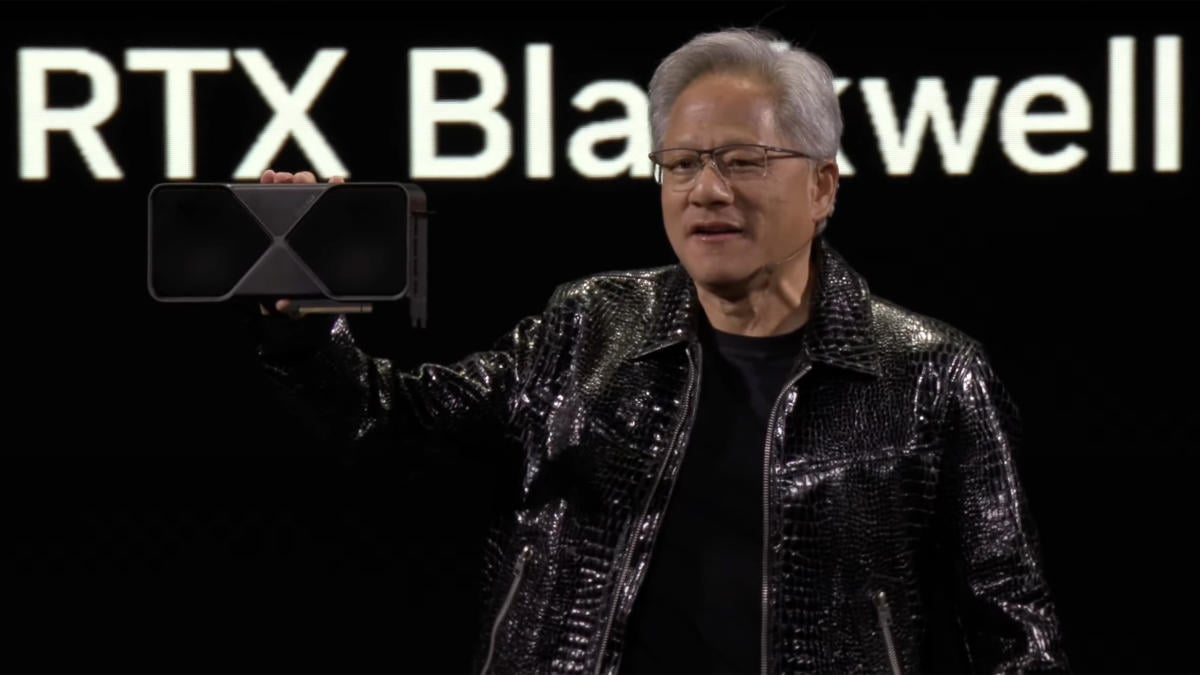

What’s with this new trend of CEOs wearing leather jackets, as if they’re cool people? Put your fucking suits back on, assholes.

I mean, Jensen was always pretty casual comparatively speaking.

lolwut? Why do you even care?

Because they’re posers. Nobody likes a poser.

From google:

The RTX 4090 was released as the first model of the series on October 12, 2022, launched for $1,599 US, and the 16GB RTX 4080 was released on November 16, 2022 for $1,199 US.

So they dropped the 80 series in price by $200 while increasing the 5090 by $400.

Pretty smart honestly. Those who have to have the best are willing to spend more and I’m happy the 80 series is more affordable.

> I’m happy the 80 series is more affordable

I’d hardly call $1200 affordable.

It’s $999 now, which is more affordable than $1200.

The 5080 is the same price as the 4080 Super, probably because the 4080 wasn’t selling.

Just using this thread as a reminder that the new Intel Arc B580 is showing 4060-level performance for only $250.

Those are synthetic tests in a favourable environment.

If I’m not mistaken, Gamers Nexus showed this to be largely true, with the B580 even nipping at the heels of the 4070 on occasion.

B580 is a great deal. I’m hoping they release a B770 24GB and that Linux support for Battlemage is excellent by then.

That’s the exact scenario I optimize my games and system at large to operate in. Thanks bro

The prices are high, but what really is shocking are the power consumption figures. The 5090 is 575W(!!), while the 5080 is 360W, 5070Ti is 300W, and the 5070 is 250W.

If you are getting one of these, factor in the cost of a better PSU and your electric bill too. We’re getting closer and closer to the limit of power from a US electrical socket.

It’s clear what must be done - all US household sockets must be changed to 220V. Sure, it’ll be a notable expense, but it’s for the health of the gaming industry.

It’ll buy us about 8 more years. At this rate, the TGP is increasing at about 10% per year:

- 3090: late 2020, 350W
- 4090: late 2022, 450W
- 5090: early 2025, 575W

Therefore, around 2037, a single 90-tier GPU will pop a 110V breaker, and by 2045, it will pop a 220V breaker too.

/s
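The joke math above is plain compound growth. A minimal sketch, taking the comment’s own assumption of 10% TGP growth per year from the 5090’s 575W in 2025; the 15A breaker rating, the nominal US 120V/240V supply, and treating “popping” as the card alone reaching the breaker’s full rating are all illustrative assumptions.

```python
# Compound-growth projection of 90-tier GPU TGP (joke math, not a forecast).
# Assumptions: 575 W in 2025, +10% per year, 15 A breaker, nominal US voltages.

BASE_YEAR = 2025
BASE_TGP_W = 575.0
GROWTH_PER_YEAR = 0.10
BREAKER_AMPS = 15.0


def projected_tgp(year: int) -> float:
    """Projected TGP in watts for `year` under compound annual growth."""
    return BASE_TGP_W * (1 + GROWTH_PER_YEAR) ** (year - BASE_YEAR)


def year_breaker_pops(volts: float, amps: float = BREAKER_AMPS) -> int:
    """First year the projected TGP alone reaches the breaker's volts * amps rating."""
    capacity_w = volts * amps
    year = BASE_YEAR
    while projected_tgp(year) < capacity_w:
        year += 1
    return year


if __name__ == "__main__":
    for volts in (120, 240):
        year = year_breaker_pops(volts)
        print(f"{volts} V / {BREAKER_AMPS:.0f} A: {year} ({projected_tgp(year):.0f} W)")
```

With these assumptions the loop lands on 2037 at 120V and 2045 at 240V; at 220V it would land a year earlier, in 2044.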

Don’t be silly.

Just move your PC to your laundry room and plug it into the 240V dryer outlet.

A 1000W PSU pulls at most 8.3A on a 120V circuit.

Residential circuits in the USA are 15-20A. Very rarely are they 10A, but I’ve seen some super old ones or split 20A breakers in the wild.

A single duplex outlet must be rated for the same amperage as the breaker to be up to code, so with a 5090 PC you’re at around half of the capacity you’d normally find, worst case. Nice big monitors take about an amp each, and other peripherals are negligible.

You could easily pop a breaker if you’ve got a bunch of other stuff on the same circuit, but that’s true for anything.

I think the power draw on a 5090 is crazy, crazy high, don’t get me wrong, but let’s be reasonable here: electricity costs, yes, but we’re not getting close to the limits of a circuit/receptacle (yet).
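The amps-vs-breaker reasoning above is just P = V × I. A minimal sketch, assuming a 120V supply and unity power factor, and treating the PSU’s 1000W rating as its wall draw (a simplification: real draw depends on load and PSU efficiency). The two ~120W monitors are a hypothetical reading of “about an amp each”.

```python
# Current draw of a PC on a 120 V branch circuit and the share of the breaker it uses.
# Assumptions: unity power factor; PSU rating used as wall draw; hypothetical monitors.

VOLTS = 120.0


def amps(watts: float, volts: float = VOLTS) -> float:
    """Current in amps from P = V * I."""
    return watts / volts


def breaker_share(watts: float, breaker_amps: float) -> float:
    """Fraction of the breaker's rating used by a load of `watts`."""
    return amps(watts) / breaker_amps


if __name__ == "__main__":
    pc_w = 1000.0
    monitors_w = 2 * 120.0  # hypothetical: two monitors at roughly 1 A each
    for breaker in (15, 20):
        print(
            f"{breaker} A breaker: PC alone {breaker_share(pc_w, breaker):.0%}, "
            f"PC + monitors {breaker_share(pc_w + monitors_w, breaker):.0%}"
        )
```

On a 15A breaker the PC alone comes out around 56% (about 69% with the two monitors), which is the “around half capacity, worst case” figure.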

Actually, the National Electrical Code (NEC) limits loads on 15 Aac receptacles to 12 Aac, and on 20 Aac receptacles to 16 Aac, iirc, because those are the breaker ratings and you size breakers at 125% of the load (conversely, 1/1.25 = 80%, so loads should be at most 80% of the breaker rating).

So with a 15 Aac outlet and a 1000 Wac load at a minimum 0.95 power factor on 120 Vac, you’re drawing about 8.8 Aac (1000 / (120 × 0.95)), which is ~73% of the outlet’s allowable load (8.8/12). For a 20 Aac outlet, 8.8 Aac is ~55% of capacity (8.8/16).

Nonetheless, you’re totally right. We’re not approaching the limit of the technology, unlike electric car chargers.
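The 80% arithmetic in the comment above can be sketched as follows, using that comment’s assumptions: 120V, a 1000W real-power load at 0.95 power factor, and an allowable load of 80% of the receptacle/breaker rating. The next reply disputes whether that 80% limit applies to ordinary receptacle loads at all, so treat this as a check of the math, not of the code interpretation.

```python
# Current draw with a non-unity power factor, compared against an 80% load limit.
# Assumptions from the comment: 120 V, PF 0.95, limit = rating / 1.25.

VOLTS = 120.0
POWER_FACTOR = 0.95
LIMIT_FRACTION = 1 / 1.25  # = 0.80


def line_current(real_power_w: float, volts: float = VOLTS, pf: float = POWER_FACTOR) -> float:
    """Apparent power S = P / pf, so current I = P / (V * pf)."""
    return real_power_w / (volts * pf)


def share_of_limit(real_power_w: float, rating_a: float) -> float:
    """Fraction of the 80% allowable current that the load uses."""
    return line_current(real_power_w) / (rating_a * LIMIT_FRACTION)


if __name__ == "__main__":
    print(f"Current: {line_current(1000):.1f} A")
    for rating in (15, 20):
        print(f"{rating} A rating: {share_of_limit(1000, rating):.0%} of the 80% limit")
```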

The NEC limits CONTINUOUS loads to 80%, not intermittent loads. Continuous loads are things like heaters, AC units, etc. Things plugged into the wall are generally not considered continuous loads, so the breakers in a residential home are usually not derated, and receptacles never are, from what I’ve seen. (Although it could be argued that a gaming computer is a continuous load, since it runs 3+ hours for many people, there’s still no electrician who would treat it that way, probably ever, unless it was some kind of commercial space that rented gaming seats or something. Either way, it would be planned in advance.)

The rule that you’re describing is for the initial planning of the circuit. It’s for the rating of your wires and overcurrent protection, which is done at the time of installation based on the expected continuous and intermittent loads. For residential planning, nobody treats a standard branch circuit for wall receptacles as somewhere you’d derate, so your 15A circuit is a 15A circuit; you don’t need to do any more math on it or derate it further.

You could make the argument that people with 5090s do run their PCs longer than 3 hours, since those folks are more prone to longer bouts of gaming to feel like they’re getting a return on their expensive investment. And as our PCs become more and more capable, people will increasingly need to consider whether the circuit they’re plugging into can take the load they’re putting on it.

Doesn’t hurt to plan for the future regarding building wiring, since most tech folk do so regarding their PC builds.

But upon further inspection… I may be inclined to agree with you. See this thread from licensed and qualified professionals in the space.

It seems that homeowners are given a special class of immunity when it comes to manifesting hazards associated with their use of electricity. Whether or not that immunity should be granted, given that improper use of electrical equipment in a household can lead to fires and cause undue harm to the community at large, I think is up for debate.

That’s just the GPU with efficient other parts. Now if we do 575W GPU + 350W CPU + 75W RGB fans + 200W monitors + a 20% buffer, we are at 1440W, or 12A at 120V. Now we’re close to popping a breaker.
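The worst-case budget above can be reproduced in a few lines. A sketch only: the component wattages are the commenter’s hypotheticals (the replies dispute the CPU and fan figures), and the 120V supply and 15A breaker are assumptions.

```python
# Worst-case power budget: sum the parts, add a safety buffer, convert to amps at 120 V.
# Component wattages are the commenter's hypotheticals, not measured figures.

COMPONENTS_W = {
    "GPU": 575,
    "CPU": 350,
    "RGB fans": 75,
    "monitors": 200,
}
BUFFER = 0.20
VOLTS = 120.0
BREAKER_AMPS = 15.0


def budget_watts(components: dict[str, float], buffer: float = BUFFER) -> float:
    """Total draw including a proportional safety buffer."""
    return sum(components.values()) * (1 + buffer)


if __name__ == "__main__":
    total_w = budget_watts(COMPONENTS_W)
    total_a = total_w / VOLTS
    print(f"{total_w:.0f} W -> {total_a:.1f} A "
          f"({total_a / BREAKER_AMPS:.0%} of a {BREAKER_AMPS:.0f} A breaker)")
```

The 12A result is exactly 80% of a 15A breaker’s rating.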

This makes me curious: What is the cheapest way to get a breaker that can handle more power? It seems like all the ways I can think of would be many 5090s in cost.

350W CPU?? Even a 14900K is only about 250W; most are 120-180W.

75W of fans???

I’m sure you could find parts with that much draw, but that is not normal.

How many RGB fans does this theoretical build have to use 75W alone?

How else are you gonna cool 925W in a PC form factor? Ever seen fans for server racks?

Hire an electrician (or, depending on local laws, DIY it) to add a dedicated 240V 20A outlet with 12/2 wire.

Out of curiosity, how much does this cost with an electrician?

How far out are we from GPUs that also dry your laundry?

Anyone getting a 5090 is most definitely not someone who worries about the electric bill

I know plenty of people who’d get a 5090 and worry about the electric bill.

Going to need to run a separate PSU on a different branch circuit at this rate.

And this is BEFORE the tariffs!

But you see, because of the tariffs, American gamers will just default to American GPUs, duh.

I’m not super informed about the developments, but won’t this be in the realm of possibility with the new Intel facilities being constructed?

As a result of the Biden administration’s CHIPS and Science Act, is what I’m alluding to.

Oh. Well for America’s gamers, I sure hope so.

Won’t concern me.

Gotcha, now I’m curious. I’ll do no further research, but if I stumble upon something, I’ll post it here.

There’s gonna be as many tariffs as there were walls that got built and paid for by Mexico.

Not because it’s bad for the American people.

It’s because the same people in Congress who would impose tariffs are making hundreds of millions hand over fist on insider stock trading. They aren’t gonna fuck up the gravy train for Trump’s dumbass campaign ramblings.

Maybe. There’s not much precedent for what’s coming - Trump is FAR more influential to the GOP than he was in his first term. I certainly hope that nobody will be able to get anything done, but I’m also not counting on it.

Unfortunately Trump can unilaterally impose tariffs in a lot of cases. That’s why the price of olive oil soared in his first term.

He was freewheeling YOLO the first term. This time he has masters he needs to obey. Elon, for one, ain’t gonna allow tariffs on any electronics he needs for AI or his cars. Nvidia should be safe.

I just…I just don’t need fps and resolution that much. Godspeed to those that feel they do need it.

VR enthusiasts can put it to use. The higher end headsets have resolutions of over 5000 x 5000 pixels per eye.

You are basically rendering the entire game twice, once for each eye, and at 5000 x 5000 each eye alone has roughly twelve times as many pixels as a typical 1080p game.
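Taking the 5000 × 5000 per-eye figure from the previous comment at face value, the pixel arithmetic looks like this. It counts panel pixels only and ignores any supersampling or foveated rendering a VR runtime may apply.

```python
# Pixel-count comparison: a 5000 x 5000 per-eye VR panel vs a 1080p frame.


def pixels(width: int, height: int) -> int:
    return width * height


VR_PER_EYE = pixels(5000, 5000)  # per-eye figure quoted in the thread
FULL_HD = pixels(1920, 1080)

per_eye_ratio = VR_PER_EYE / FULL_HD
both_eyes_ratio = 2 * VR_PER_EYE / FULL_HD

if __name__ == "__main__":
    print(f"One eye: {per_eye_ratio:.1f}x 1080p; both eyes: {both_eyes_ratio:.1f}x")
```

That works out to about 12x per eye and about 24x for both eyes combined.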

No one should, video graphics haven’t progressed that far. Only the lack of optimisation has.

You’re missing a major audience willing to pay $2k for these cards, people wanting to run large AI language models locally.

What if I want a ton of VRAM for Blender?

It’ll crash due to an unrelated reason

That is another audience & good point. There are people that want these though for other uses than gaming.

Media production, where speed and efficiency matter, is probably part of a business that would have the funding to justify the cost.

I’m willing, but unable :'(

Someday I’ll be able to run something cool like that DeepSeek-V3 model or something. Probably when they figure out how to run these models well in regular RAM; I have a shit ton of that at my disposal. Stupid VRAM. (Maybe they’ll start coming out with GPUs with slotted VRAM lol)
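For a sense of scale, here is a back-of-the-envelope estimate of the memory needed just to hold a model’s weights. A sketch under stated assumptions: roughly 671B total parameters for DeepSeek-V3 (the publicly reported figure) at a uniform precision; it ignores the KV cache, activations and runtime overhead, so real requirements are higher.

```python
# Rough memory needed just to hold a model's weights at a given precision.
# Assumption: ~671B total parameters for DeepSeek-V3 (publicly reported figure).
# Ignores KV cache, activations and runtime overhead, so real needs are higher.

DEEPSEEK_V3_PARAMS = 671e9


def weight_gb(params: float, bits_per_param: float) -> float:
    """Gigabytes (10^9 bytes) for `params` weights stored at `bits_per_param` bits each."""
    return params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits}-bit: ~{weight_gb(DEEPSEEK_V3_PARAMS, bits):,.0f} GB")
```

Even at 4 bits that is over 300 GB, an order of magnitude beyond a 32GB card, which is why large pools of system RAM look attractive for this.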

I’m staying on 1440p deliberately. My 3080 is still perfectly fine for a few more years, at least for the current console gen.

I’ve ditched my gaming PC and am currently playing my favorite game (Kingdom Come: Deliverance) on an old laptop, which means I can’t go higher than 800x480.

And honestly, the immersion works. After a couple of minutes I don’t notice it anymore.

You’re not wrong. I just recently upgraded my whole machine, going from a 3090 to a 4090 at 1440p, and basically can’t tell the difference.

Welp, looks like I’ll start looking at AMD and Intel instead. Nvidia is pricing itself at a premium that’s impossible to actually meet compared to competitors.

There will be people that buy it. Professionals that can actually use the hardware and can justify it via things like business tax benefits, and those with enough money to waste that it doesn’t matter.

For everyone else, competitors are going to be much better options. Especially with Intel’s very fast progression into the dedicated card game with Arc and generational improvements.

575W TDP is an absolute fucking joke.

I really wanted a 512bit-bussy GPU for a decade+ … but perhaps never is just as good.

I don’t know a lot about computers, but I do know a fair amount about bussy. $2000 for 512 is a steal!

Sure hope I could buy two packs of 512 and screw them down in SLI mode.

> I don’t know a lot about computers, but I do know a fair amount about bussy.

All Linux-related communities on Lemmy.

5000 series cards are made for idiots

5000 series cards are made for professionals and idiots

I’m here to represent the professionals ∩ idiots. We exist too.

Although seeing those prices reminds me that my mobile 3070 has been perfectly usable.

Some people don’t care about spending $2000 for whatever. I mean, I’m not one of those people but they probably exist.

Yeah, it’s all priorities. I don’t see myself buying a $2000 GPU any time soon, but if I was single and playing PC games every day in 4K or VR, I could get thousands of hours of use over the next few years from that GPU.

Compare that with other types of entertaining products and activities (vacations, cars, etc) and it starts to look not bad in comparison.

Still not in the plans for my particular situation though, lol.

I’m probably one of those people. I don’t have kids, I don’t care much about fun things like vacations, fancy food, or yearly commodity electronics like phones or leased cars, and I’m lucky enough to not have any college debt left.

A Scrooge McDuck vault of unused money isn’t going to do anything useful when I’m 6 feet underground, so I might as well spend a bit more (within reason*) on one of the few things that I do get enjoyment out of.

* Specifically: doing research on what I want; waiting for high-end parts to go on sale; never buying marked-up AIB partner GPUs; and only actually upgrading things every 5~6 years after I’ve gotten good value out of my last frivolous purchase.

My company could buy me this (for video editing), but I mostly need it for VRAM, which should be cheap. I would like to be able to afford it without it doubling the price of my PC.

You can get an AMD Instinct MI60 with 32GB HBM2 VRAM on ebay for ~$400-$600

More money than sense, as they say.

Just sell drugs bro, I buy whatever the fuck I want from Micro Center. Straight cash.

Why do people buy this stuff? It only takes like a year before it falls in price as the next one comes along. Gotta get that last 2FPS, I guess.

Video cards don’t fall in price anymore lol

Ok, I guess when I build my next computer I’ll be using the 4th best card.

It seems people realized this, and the old cards aren’t even properly falling in price anymore, even on the used market.

Yeah I’m never willing to afford the best. I usually build a new computer with second best parts. With these prices my next computer will be with third best stuff I guess.

The 2k USD price is surely only in order to make the cheaper cards appear reasonably priced.

I bought my 4080 Super recently and hope it lasts me a good 12+ years like my old card did. These prices are insane!

I’m rocking a 1660su until I literally can’t anymore.

My old card literally died and I was forced to get a new one.

Sleep tight, prince, my AMD R9 Fury X. You will be missed.

I’m still running a 1070 Ti; before that it was the R9 390 and R9 390X, both of which died. One probably had its voltage regulator fail, and the other probably had its chip die, because it would boot Windows but die as soon as it had to do any work.

My question is will the 5080 perform half as fast as the 5090. Or is it going to be like the 4080 vs 4090 again where the 4080 was like 80% the price for 60% the performance?

You know it’s the latter… and that even those numbers are probably optimistic.
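The “fraction of the price for a fraction of the performance” question boils down to one ratio. A minimal sketch: the 80%/60% figures are the commenter’s recollection rather than benchmark results, and the launch prices come from the article and the Google quote earlier in the thread. By those launch prices the 4080 was closer to 75% of the 4090’s price.

```python
# Relative value of a cheaper card vs the flagship: (price ratio) / (performance ratio).
# > 1.0 means you pay more per unit of performance than with the flagship.


def cost_per_perf(price_ratio: float, perf_ratio: float) -> float:
    return price_ratio / perf_ratio


# Commenter's recollection for the 4080 vs 4090 (not benchmark data).
ratio_40_recalled = cost_per_perf(0.80, 0.60)

# Launch prices quoted in the thread.
PRICE_4080, PRICE_4090 = 1199, 1599
PRICE_5080, PRICE_5090 = 999, 1999

# Performance fraction the 5080 needs to match the 5090's performance per dollar.
break_even_5080 = PRICE_5080 / PRICE_5090

if __name__ == "__main__":
    print(f"4080 launch price ratio: {PRICE_4080 / PRICE_4090:.0%}")
    print(f"4080 cost per unit performance (recalled figures): {ratio_40_recalled:.2f}x")
    print(f"5080 break-even: {break_even_5080:.0%} of 5090 performance")
```

So if the 5080 delivers more than about half of the 5090’s performance, it is the better value per dollar at launch prices.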

I think that at higher resolutions (4K) there’s gonna be a bit bigger difference than in the 40 series, because of 256-bit vs 384-bit mem bussy in the 4080 vs 4090, compared to 256-bit vs 512-bit in the 5080 vs 5090.

That memory throughput & bandwidth might not get such a big bump in the next gen or two.
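The bus-width argument can be made concrete with the standard peak-bandwidth formula. Sketch only: the 20 Gbps per-pin rate is a hypothetical placeholder applied to both cards in each pair, so the output reflects bus width alone; the actual cards may use different memory speeds, which changes the real gap.

```python
# Peak memory bandwidth (GB/s) = bus width (bits) / 8 * per-pin data rate (Gbps).
# To isolate bus width, both cards share one hypothetical data rate (real cards differ).

HYPOTHETICAL_GBPS_PER_PIN = 20.0


def bandwidth_gbs(bus_bits: int, gbps_per_pin: float = HYPOTHETICAL_GBPS_PER_PIN) -> float:
    return bus_bits / 8 * gbps_per_pin


def flagship_advantage(flagship_bits: int, tier_below_bits: int) -> float:
    """How many times more bandwidth the flagship has at an equal data rate."""
    return bandwidth_gbs(flagship_bits) / bandwidth_gbs(tier_below_bits)


if __name__ == "__main__":
    print(f"4090 vs 4080 (384 vs 256-bit): {flagship_advantage(384, 256):.1f}x")
    print(f"5090 vs 5080 (512 vs 256-bit): {flagship_advantage(512, 256):.1f}x")
```

At equal data rates the flagship’s bandwidth lead grows from 1.5x to 2x between generations, which is the gap the comment expects to show up at 4K.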

> mem bussy

well there’s a mental image