Hi all,

First off, I’d just like to say how blown away I am by the potential of Perchance. I bet this is baby stuff to most of you, but for me it’s my first step into this world and it’s just incredible.

So I have a question… mostly about whether it’s possible at all; once I know that, I’ll properly wrap my head around the coding of it.

I’m looking to create a short sequence of scenes, like a still animation. Sometimes the background will stay the same but the character will change pose. Or the background (say, a kitchen scene) may change camera angle/view while the character changes position/pose. I’m not looking to create frame-by-frame stuff, just scene changes that retain features throughout. I can totally see it being possible; I was just hoping to hear some advice from people with much more experience than I have.

If any of that doesn’t make sense (most probably!) please just ask and I’ll try to better explain.

TIA

Sam

P.S. I should probably mention that I plan to use t2i (text-to-image) to create the scenes, then overlay/combine the character and adjust accordingly.

So I wanted to give a little update, not only to keep you guys in the loop but to pull myself back in and check where I am. There are so many rabbit holes I keep delving into, and the reality is that (bar a couple of bits) I think I have the functionality and tools here anyway. I’m going to develop the structure of it here, or at least a base version to expand on.

My plan… Although it has been suggested that one generator would be sufficient, my idea is to use multiple generators and funnel the outputs down. As an example:

First phase: scene/background generation, with all the variables available to create the initial style/scene. One image is created and put to the side temporarily (Output = Image A).

Second phase: character generation. The initial variable is how many characters are in the scene, with more cycles added to accommodate the number of characters. The image created from this run is the base-position character (Output = Image B).

Third phase: overlaying/combining the images. The ‘how to’ is still in the works, so we’ll just say that’s happening now. What I would like is for that to be a relatively straightforward process (Output = Image C).

BUT… that’s not the end! The next generation is the image manipulation phase. Most of the required data will automatically be funnelled down from the previous phases. An initial selection ascertains which outputs are changing, which then automatically populates the variables that are the firm ‘unchangeables’. The variants for Output A will be different from those for Output B (A’s being framing, focus, lighting etc.; B’s being poses and focus), and input selections will be limited to suit, as the core data that’s already been funnelled and populated is fundamental to retaining continuity and so won’t be amended in any way. Output D and/or E is generated.

Then the Output C generator is used again, maybe with the further addition of referencing the earlier Image C as well. My thinking is that this would further ringfence the intended style/feel (but maybe it would have a negative effect… not sure). Output = Image F. This cycle can then be repeated to create additional scenes.

I intend to use this system (creating scenes in multiple variations, all generated from the same core data) as my method for creating ‘Acts’. That means all of the relevant data stays live and gets reused by being funnelled through each scene. Once I’ve created a base model of this idea it’ll give me further insight into what works, what doesn’t, what it needs, what it doesn’t, and whether I’m barking up the wrong tree or not!
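For anyone trying to picture the funnelling idea, here is a minimal sketch in plain JavaScript (not Perchance list syntax). It only shows one possible way to keep the core data locked while the per-scene variants change. The `core` object, the `buildPrompt` helper and every example value are made up for illustration, and actual image generation is left out entirely.

```javascript
// Hypothetical core data for one 'Act'; frozen so later phases cannot overwrite it.
const core = Object.freeze({
  style: "watercolor illustration",
  background: "a sunlit kitchen",
  character: "a tall woman with short red hair and a green apron",
});

// Combine the locked core data with the parts that are allowed to vary per scene.
function buildPrompt(coreData, variation) {
  const parts = [
    coreData.style,
    coreData.background,
    variation.camera, // background-side variant (framing, focus, lighting)
    coreData.character,
    variation.pose, // character-side variant (pose, focus)
  ];
  // Drop any missing parts and join the rest into a single prompt string.
  return parts.filter(Boolean).join(", ");
}

// Each scene only lists what changes; everything else comes from the core data.
const scenes = [
  { camera: "wide shot", pose: "standing at the counter" },
  { camera: "wide shot", pose: "reaching for a shelf" },
  { camera: "low angle close-up", pose: "sitting at the table" },
];

const prompts = scenes.map((variation) => buildPrompt(core, variation));
prompts.forEach((prompt) => console.log(prompt));
```

Every prompt repeats the same style, background and character text, so only the camera and pose wording differs between scenes. Whether that is enough to keep the generated images visually consistent is a separate question this sketch does not answer.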

Would love to hear your feedback/suggestions/concerns/criticisms! In my head it works…but that doesn’t mean it actually works! lol!

S

@mindblown My head was wobbling a little reading all the technical details about the image generation, but it’s still kind of interesting, especially for this one…

I would like to see the progress on your generator though; just try to write the technical details in a way that’s a bit more understandable for people who are less into AI images (like me).

Absolutely 👍 I hadn’t really thought that far ahead, but now that you’ve mentioned it and I’ve pondered it, I think I would initially provide a condensed version (the background gen, character gen and combination) publicly, just until I’ve had some time to explore the boundaries of the original. But who knows… when the time comes I’ll be sure to be clearer with my explanation 👍

sounds like a great starting direction with distinct goals.

i think it doesn’t matter if it actually works (tho it sounds both legit and doable), since you can adapt and make a ‘next best thing’ for any part that doesn’t, and some parts may even work better than you thought, with empowering nuances you won’t see until you get to them. aka sounds like a great path. nice getting it into a sequence and system.

i’ve been doing a similar first push into node.js and databases. basically pushed way far, used a lot of things and got some core things running successfully on that first push. then, yesterday, stood back, went over nearly the entire system i had made when exploring, and, now understanding it, remade it in my own way to do what i want how i want.

up to you how you learn, tho definite thumbs up to your direction and how you are getting into it, and to that initial ‘info gathering, then deciding priorities once the picture is clearer’ phase. will help when you ask

have fun delving in :)

Yeah I agree… what I’m trying to do is wrap my head around the components and then expand on those. In doing so, new approaches/methods come to light. For example, earlier I envisioned ‘stacking’ generators… that picture is now evolving as I discover input/output formatting, advanced hierarchical lists, multiple sub-lists etc.

That’s great man, nice work 👍 We sound very similar in our approaches. Over the last few hours I’ve pulled up generators that have applicable functions and taken a good look at how they’ve been coded, just breaking it all down and wrapping my head around it.

Is there a way of viewing a plugin’s source code at all? I really want to look over a few and see how they’re put together. The interesting thing I’m seeing with coding is the multiple approaches for achieving the same result. Some look crisp and smooth-running… some look convoluted and hard work for all involved, even the AI! lol

If you mean how the AI generates the image, that code is server side. But you can look at the client-side code on Perchance. Go to a page, then click ‘edit’ on the top navigation bar and it will open the code panels.

Ahhh now I understand. I initially thought all plugins were like the t2i one…so with this example below, where is the ‘pose-generator-simple’ code?

    image = {import:text-to-image-plugin}
    pose = {import:pose-generator-simple}
    verb = {import:verb}

If I paste it at the end of perchance.org in the address bar I get the ‘random pose’ generator… so is `{import:pose-generator-simple}` using the generator at https://perchance.org/pose-generator-simple as the plugin? And therefore the HTML IS the code?

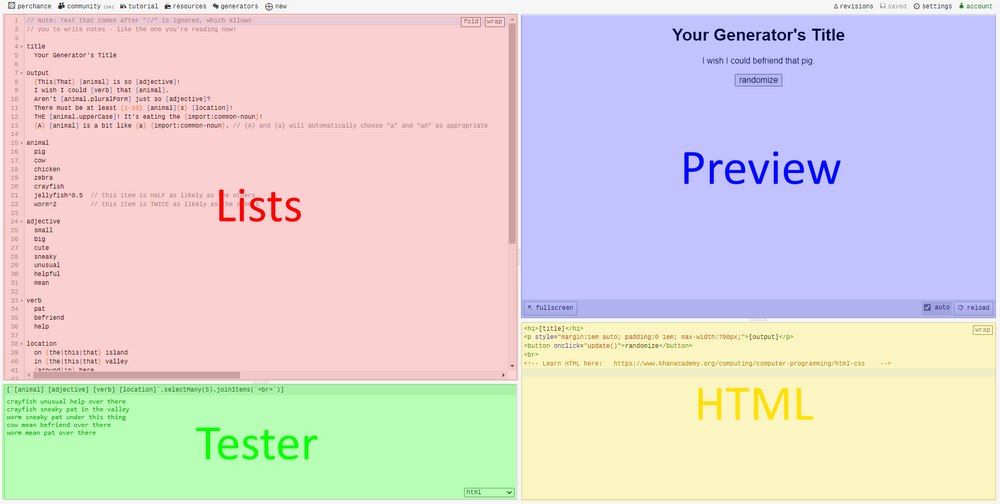

So, in Perchance there are two places to put code: the Lists Panel and the HTML Panel.

Most of the time, you ‘import’ a ‘plugin’ on the Lists Panel, e.g.

    image = {import:text-to-image-plugin}
    output
      ...

So, to see the code of the `text-to-image-plugin`, you go to perchance.org/text-to-image-plugin.

In your example, you are importing the `text-to-image-plugin`, `pose-generator-simple` and `verb` generators. To see their code, you just add those ‘names’ to ‘perchance.org/{name}’.

By default, all generators in Perchance can be imported, and the imported data can be specified.
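As a rough illustration of the name-to-page rule described above, here is a small JavaScript sketch that reads import lines and works out which page holds each generator’s code. The `findImports` helper is hypothetical (it is not part of Perchance), and it assumes each import sits on its own line in the form `name = {import:generator-name}`.

```javascript
// Find lines shaped like `name = {import:generator-name}` and map each one
// to the page where that generator's code can be viewed.
function findImports(listsPanelText) {
  const pattern = /^\s*([\w$]+)\s*=\s*\{import:([\w-]+)\}\s*$/gm;
  const found = [];
  for (const match of listsPanelText.matchAll(pattern)) {
    found.push({
      localName: match[1], // the name used inside your own generator
      generator: match[2], // the imported generator's name
      url: `https://perchance.org/${match[2]}`,
    });
  }
  return found;
}

// The three imports from the question above.
const example = [
  "image = {import:text-to-image-plugin}",
  "pose = {import:pose-generator-simple}",
  "verb = {import:verb}",
].join("\n");

const imports = findImports(example);
for (const item of imports) {
  console.log(`${item.localName} -> ${item.url}`);
}
```

Opening any of those URLs and clicking ‘edit’ shows the panels for that generator.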

On the `text-to-image-plugin`, it only outputs `$output(...) =>`, which is a function. To use it in your generator you write `[image(...)]`, since the output of the `text-to-image-plugin` is a function and you imported it into the namespace `image` (`image = {import:text-to-image-plugin}`).

I’m finding your physical description v2 image generator really interesting to analyse 👍

I’ve jumped on that Khan Academy course to help fill in the blanks I’m unaware of 👍

And it all becomes instantly clearer. Thank you 😀👍

maybe this minimal example will help :)

https://perchance.org/littlestplugin

https://perchance.org/littlestpluginer

Woah, that is minimal indeed! ;) It’s cool though man, I understand what’s happening now. I presumed all plugins were programmed in JavaScript, so I didn’t make the connection that the generators were indeed “plugins” themselves. It totally makes sense now. 👍
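For readers who land here later, the point that clicked can be modelled in a few lines of plain JavaScript. This is only a toy model under stated assumptions, not how Perchance is implemented: the `generators` map stands in for perchance.org, and `shout-plugin`, `pose-list` and `importGenerator` are invented names.

```javascript
// Toy stand-in for perchance.org: generator name -> whatever that generator exports.
const generators = new Map([
  // A 'plugin' whose export is a function, in the spirit of `$output(...) =>`.
  ["shout-plugin", (text) => text.toUpperCase() + "!"],
  // An ordinary generator whose export is just a list of items.
  ["pose-list", ["standing", "sitting", "leaning on the counter"]],
]);

// Model of `name = {import:generator-name}`: look the generator up and hand back its export.
function importGenerator(name) {
  if (!generators.has(name)) {
    throw new Error(`No generator named ${name}`);
  }
  return generators.get(name);
}

const shout = importGenerator("shout-plugin"); // like: shout = {import:shout-plugin}
const pose = importGenerator("pose-list"); // like: pose = {import:pose-list}

// A function export gets called, which is why the t2i plugin is used as [image(...)].
console.log(shout("hello")); // prints "HELLO!"
// A list export gets picked from; this toy model just takes the first item.
console.log(pose[0]); // prints "standing"
```

Both imports go through the same lookup; the only difference is whether the thing that comes back is a function or a list.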