there’s only seven stories in the world

There isn’t. That’s a completely nonsensical statement; no serious scholar of literature/film/etc. would claim something of the sort. While there have been attempts to analyse the “basic” stories and narrative structures (Propp’s model of fairy tales, Greimas’ actantial model, Campbell’s well-known hero’s journey), they’re all far from universally applicable or satisfying.

there’s only seven stories in the world

This, to me, sounds like the opinion of someone who doesn’t read for entertainment. No, manga does not count.

If your only exposure to stories is TV shows and movies… yeah, it’s gonna seem like there aren’t very many types of stories.

No, manga does not count.

“Nuuuuh, the most diverse medium with the wildest stories doesn’t count!! I’ll poopy my pants if you count it”

It is baffling that you would step forward and suggest that manga is somehow better than Japanese literature. Even more baffling are the people upvoting this.

As I said, the opinions of people who have never read for entertainment.

Edit: This is coming from someone who follows JJK leaks.

Japanese literature

You mean generic isekai #38487?

Light novels don’t count.

“Nuuuuu-uuuuuh! Light nobels dun count! I’m pooping my poopy pants!!!1!”

Righto, mate.

A story’s quality is not measured by how many words it has, which seems to be all light novel readers are ever able to talk about.

Thus begins the story of this user overcoming the monster.

and then it will turn out the monster was inside me all along

I already have 8 pieces of media in mind that have completely different narrative structures.

I remember when Photoshop became widely available and the art community collectively declared it the death of art. Putting the techniques of master artists in the hands of anyone who could use a mouse would put the painter out of business. I watched as the news fumed and fired over delinquents photoshopping celebrity nudes, declaring that we’d never be able to trust a photo again. I saw the cynical ire of viewers as the same news outlets shopped magazine images for the vanity of their guests and in support of their political views. Now, with the dust long settled, Photoshop is taught in schools and used by designers globally. Photo manipulation is so prevalent that you probably don’t realize your phone camera is preprogrammed to cover your zits and remove your loose hairs. It’s a feature you have to actively turn off. The masters of their craft are still masters; the need for a painted canvas never went away. We laugh at obvious shop jobs in the news, and even our out-of-touch representatives know when an image is fake.

The world, as it seems, has enough room for a new tool. As it did again with digital photography, the death of the real photographers. As it did with 3D printing, the death of the real sculptors and carvers. As it did with synth music, the death of the real musician. When the dust settles on AI, the artist will be there to load their portfolio into the trainer and prompt out a dozen raw ideas before picking the composition they feel is right and shaping it anew. The craft will not die. The world will hate the next advancement, and the cycle will repeat.

When it comes to AI art, the Photoshop/invention-of-the-camera argument doesn’t really compare, because there are really two or three things people are actually upset about, and it’s not the tool itself. It’s the way the data is sourced, the people who are using it and what they’re using it for, and the lack of meaning behind the art.

As somebody said elsewhere in here, sampling in music uses either content made explicitly for use as samples or content used under license. AI art generators do neither. They fill their data sets with art used without permission or licensing, and given the right prompting, you can get them to spit out that data verbatim.

This compounds into the next issue: the people using it, and more specifically, how those people are using it. If it were being used as a tool to make the creation process more efficient or easier, that would be one thing. But it’s largely being used by people to replace the artist, and by people who think that being able to prompt an image and use it unedited makes them just as good an artist as anybody working by hand, stylus, etc. They’re “idea” guys, who care nothing for the process, only for the output (and how much that output is gonna cost). But anybody can be an “idea” guy; it’s the work and knowledge that make the difference between having an idea for a game and releasing a game on Steam. To the creative, creating art (regardless of the kind - music, painting, stories, whatever) is as much about the work as it is about the final piece. It’s how they process life, the same as dreaming at night. AI bros are the middle managers of the art world - taking credit for the work of others while thinking that their input is the most important part.

And for the last point, as Adam Savage said on why he doesn’t like AI art (besides the late-stage capitalism bubble of it putting people out of work), “They lack, I think they lack a point of view. I think that’s my issue with all the AI generated art that I can see is…the only reason I’m interested in looking at something that got made is because that thing that got made was made with a point of view. The thing itself is not as interesting to me as the mind and heart behind the thing and I have yet to see in AI…I have yet to smell what smells like a point of view.” He later goes on to talk about how at some point a student film will come out that does something really cool with AI (and then Hollywood will copy it into the ground until it’s stale and boring). But we are not at that point yet. AI art is just Content. In the same way that corporate music is Content. Shallow and vapid and meaningless. Like having a machine that spits out elevator music. It may be very well done elevator music on a technical level, but it’s still just elevator music. You can take that elevator music and do something cool with it (like Vaporwave), but on its own, it exists merely for the sake of existing. It doesn’t tell a story or make a statement. It doesn’t have any context.

To quote Bennett Foddy in one of the most rage-inducing games of the past decade, “For years now, people have been predicting that games would soon be made out of prefabricated objects, bought in a store and assembled into a world. And for the most part that hasn’t happened, because the objects in the store are trash. I don’t mean that they look bad or that they’re badly made, although a lot of them are - I mean that they’re trash in the way that food becomes trash as soon as you put it in a sink. Things are made to be consumed in a certain context, and once the moment is gone, they transform into garbage. In the context of technology, those moments pass by in seconds. Over time, we’ve poured more and more refuse into this vast digital landfill that we call the internet. It now vastly outweighs the things that are fresh, untainted and unused. When everything around us is cultural trash, trash becomes the new medium, the lingua franca of the digital age. You could build culture out of trash, but only trash culture. B-games, B-movies, B-music, B-philosophy.”

That is precisely it. Generative AI is a tool, just like a digital canvas over a physical canvas, just like a canvas over a cave wall. As it has always been, the ones best prepared to adapt to this new tool are the artists. Instead of fighting the tool, we need to learn how best to use it. No AI, short of a true General Intelligence, will ever be able to make the decisions inherent to illustration, but it can get you close enough to the final vision to skip the labor-intensive part.

Don’t apologize, this level of discussion is exactly what I came to the table hoping for.

I will say, my stance is less about the now and more about what is to come. I agree wholly with the issues of plagiarism, especially when it comes to personal styles. I also recognize the vivid swath of other crimes that this tech can be used for. Moreover, corporations are pushing it far too fast and hard, and the end result of that can only be bad.

However, I hold a small hope that these are just the growing pains, the bruised thumbs inevitable when learning to swing a hammer. We forget that Photoshop was used to cyberbully teens with fake nudes. We look past the fields of logos made by uncles who didn’t want to pay for a graphic designer, and the company websites made by the same mindless managers who now use AI to solve all their problems. Eventually, the next product will come and only those who found genuine use will remain.

AI is different in so many ways, but it’s also the same. Instead of fighting for its regulation, we need to regulate ourselves and our uses of it. We can’t expect anyone with the power to do something to have our best interests at heart.

Brilliantly expressed. Thank you

On this topic, I am optimistic that generative AI has made us collectively more averse to shallow content. Whether it’s lazy copy-paste journalism with a few phrases swapped or school testing schemes based on regurgitating facts rather than understanding, neither has value and both displace work that does, yet we have basically tolerated them.

But now that a rock with some current run through it can pass those tests and do that journalism, we are demanding better.

Fingers crossed it brings some good out of the mess.

Exactly

I hope it has the same effect as mechanization had on menial work. It raises the bar for what people expect other people to do.

Long term it helps reach a utopia, short term there will be a lot of people impacted by it.

One of the better tooling ideas I’ve heard is from a friend of mine who does board game development. One of the problems is going back and forth with the artist over what’s wanted. With an AI image generator, he can get something along the right lines, and then take it to the artist as an example.

We’ll have to deal with periods of crap content until people grow fatigued and become aware of the shitty AI things made for a quick buck.

The problem is that because the production costs of crap content will now be near zero, it will always be profitable to create as long as even a fraction of the consumer base falls for it.

It is never going to stop on its own because of lack of demand, it is going to continue and something drastic will have to be thought up to create an internet where everything isn’t buried in AI generated crap.

The point is that quantity is no longer going to be a constraint: it can be created for virtually nothing, so even a tiny profit will be enough to justify it in the eyes of those responsible.

Now endless shallow spam, which slightly resembles something worthwhile, can be generated in an instant, and it will be, because it turns a meagre profit. It is already happening in the book market, for example. Amazon is flooded with AI-generated books, and proper authors are simply buried under mountains of generated spam which is at best nonsensical and at worst genuinely misinforming.

Perhaps consumers will become more discerning in the future (although to be honest not much in the present suggests that will be the outcome), but it will never remove the increasing mountains of spam, because it will be produced for as long as just a fraction of people buy into it. And this will be applicable to everything on the internet. If we thought commercialisation and spam was bad now, we have seen nothing at all yet.

So even with proper discernment, it will take a lot of time and effort just to locate something earnest and worthwhile in the generated spam.

Yeah, I just noticed that with generated music getting better I feel more demanding towards the music I listen to.

I recently realized that I have some basic bitch music tastes and could likely listen to ai generated instrumentals for a long time

They fit some use-cases pretty well, like background music in stores or for doing something, I think

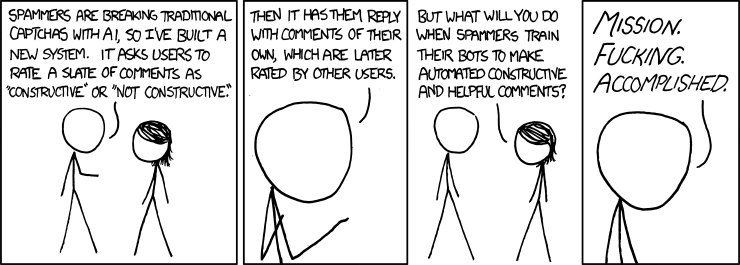

Alt Text: And what about all the people who won’t be able to join the community because they’re terrible at making helpful and constructive co-- … oh.

Not the same thing, dog. Being inspired by other things is different than plagiarism.

And yet so many of the debates around this new formation of media and creativity come down to the grey space between what is inspiration and what is plagiarism.

Even if everyone agreed with your point, and I think broadly they do, it doesn’t settle the debate.

The real problem is that AI will never ever be able to make art without using content copied from other artists, which is absolutely plagiarism.

But an artist cannot be inspired without content from other artists. I don’t agree to the word “copied” here either, because it is not copying when it creates something new.

Yeah, unless they lived in a cave with some pigments, everyone started by being inspired in some way.

But nobody starts by downloading and pulling elements from the works of all living and dead artists without reference or license; it is not the same.

I’m sure many artists would love having ultimate knowledge about all art relevant to their craft - it just hasn’t been feasible. Perhaps if art-generating AI could correctly cite their references it would be more acceptable for commercial use.

Humans learn from other creative works, just like AI. AI can generate original content too if asked.

AI creates output from a stochastic model of its training data. That’s not a creative process.

What does that mean, and isn’t that still something people can employ for their creative process?

LLMs analyse their inputs and create a stochastic model (i.e.: a guess of how randomness is distributed in a domain) of which word comes next.

Yes, it can help in a creative process, but so can literal noise. It can’t “be creative” in itself.
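To make “a stochastic model of which word comes next” concrete, here is a toy sketch: a bigram model that counts which word follows which in a tiny made-up corpus, then samples each next word in proportion to those counts. This is a deliberate simplification and an assumption for illustration only; real LLMs use neural networks over tokens rather than raw word counts, and the corpus and function names below are hypothetical.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word follows it."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts


def next_word_distribution(counts, word):
    """Turn raw follow-counts into probabilities: the 'stochastic model' for one word."""
    followers = counts[word]
    total = sum(followers.values())
    if total == 0:
        return {}  # word never seen with a follower in training
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, length, rng):
    """Sample one word at a time, each drawn from the distribution for the previous word."""
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:
            break  # dead end: nothing ever followed this word in the corpus
        words, weights = zip(*followers.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)


corpus = "the rose is red the rose is sweet the thorn is sharp"
model = train_bigrams(corpus)
print(next_word_distribution(model, "the"))
print(generate(model, "the", 5, random.Random(0)))
```

In this corpus “the” is followed by “rose” two times out of three and by “thorn” once, so those are the odds the sampler uses. Every word pair it emits was seen in training, which is the property both sides of this exchange are arguing about.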

How does that preclude these models from being creative? Randomness within rules can be pretty creative. All life on earth is the result of selection on random mutations. Its output is way more structured and coherent than random noise. That’s not a good comparison at all.

Either way, generative tools are a great way for the people using them to create; no model has to be creative on its own.

How does that preclude these models from being creative?

They lack intentionality, simple as that.

Either way, generative tools are a great way for the people using them to create; no model has to be creative on its own.

Yup, my original point still stands.

How is intentionality integral to creativity?

A person sees a piece of art and is inspired. They understand what they see, be it a rose bush to paint or a story beat to work on. This inspiration leads to actual decisions being made with a conscious aim to create art.

An AI, on the other hand, sees a rose bush and adds it to its rose bush catalog, reads a story beat and adds it to its story database. These databases are then shuffled and things are picked out, with no mind involved whatsoever.

A person knows why a rose bush is beautiful, and internalises that thought to create art. They know why a story beat is moving, and can draw out emotional connections. An AI can’t do either of these.

The way you describe how these models work is wrong. This video does a good job of explaining how they work.

Yeah, I know it doesn’t actually “see” anything, and is just making best guesses based on pre-gathered data. I was just simplifying for the comparison.

A person is also very much adding rose bushes and story beats to their internal databases. You learn to paint by copying other painters, adding their techniques to a database. You learn to write by reading other authors, adding their techniques to a database. Original styles/compositions are ultimately just a rehashing of countless tiny components from other works.

An AI understands what it sees; otherwise it wouldn’t be able to generate a “rose bush” when you ask for one. It’s an understanding based on a vector space of token sequence weights, but unless you can describe the actual mechanism of human thought beyond vague concepts like “inspiration”, I don’t see any reason to assume that our understanding is not just a much more sophisticated version of the same mechanism.

The difference is that we’re a black box, AI less so. We have a better understanding of how AI generates content than how the meat of our brain generates content. Our ignorance, and use of vague romantic words like “inspiration” and “understanding”, is absolutely not proof that we’re fundamentally different in mechanism.

You’re presupposing that brains and computers are basically the same thing. They are fundamentally different.

An AI doesn’t understand. It has an internal model which produces outputs, based on the training data it received and a prompt. That’s a different category than “understanding”.

Otherwise, Spotify or YouTube recommendation algorithms would also count as understanding the contents of the music/videos they supply.

An AI doesn’t understand. It has an internal model which produces outputs, based on the training data it received and a prompt. That’s a different category than “understanding”.

Is it? That’s precisely how I’d describe human understanding. How is our internal model, trained on our experiences, which generates responses to input, fundamentally different from an LLM transformer model? At best we’re multi-modal, with overlapping models which we move information between to consider multiple perspectives.

A person painting a rose bush draws upon far more than just a collection of rose bushes in their memory. There’s nothing vague about it, I just didn’t feel like getting into much detail, as I thought that statement might jog your memory of a common understanding we all have about art. I suppose that was too much to ask.

For starters, refer to my statement “a person understands why a rose bush is beautiful”. I admit that maybe this is vague, but let’s unpack it.

Beauty is, of course, in the eye of the beholder. It is a subjective thing, requiring opinion, and AIs cannot hold opinions. I find rose bushes beautiful due to the inherent contrast between the delicate nature of the rose buds and the almost monstrous nature of the fronds.

So, if I were to draw a rose bush, I would emphasise these aspects, out of my own free will. I might even draw it in a way that resembles a monster. I might even try to tell a story with the drawing, one about a rose bush growing tired of being plucked and taking revenge on the humans who dare to steal its buds.

All this, from the prompt “draw a rose bush”.

What would an AI draw?

Just a rose bush.

“Beauty”, “opinion”, “free will”, “try”. These are vague, internal concepts. How do you distinguish between a person who really understands beauty, and someone who has enough experience with things they’ve been told are beautiful to approximate? How do you distinguish between someone with no concept of beauty, and someone who sees beauty in drastically different things than you? How do you distinguish between the deviations from photorealism due to imprecise technique, and deviations due to intentional stylistic impressionism?

What does a human child draw? Just a rosebush, poorly at that. Does that mean humans have no artistic potential? AI is still in relative infancy, the artistic stage of imitation and technique refinement. We are only just beginning to see the first glimmers of multi-modal AI, recursive models that can talk to themselves and pass information between different internal perspectives. Some would argue that internal dialogue is precisely the mechanism that makes human thought so sophisticated. What makes you think that AI won’t quickly develop similar sophistication as the models are further developed?

LLM AI doesn’t learn. It doesn’t conceptualise. It mimics, iterates and loops. AI cannot generate original content with LLM approaches.

Interesting take on LLMs, how are you so sure about that?

I mean I get it, current image gen models seem clearly uncreative, but at least the unrestricted versions of Bing Chat/ChatGPT leave some room for the possibility of creativity/general intelligence in future sufficiently large LLMs, at least to me.

So the question (again: to me) is not only “will LLM scale to (human level) general intelligence”, but also “will we find something better than RLHF/LLMs/etc. before?”.

I’m not sure about either, but I assign roughly a 2/3 probability to the first and, given the first event and AGI within reach in the next 8 years, a comparatively small chance to the second.

We’ll soon see whether or not it’s the same thing.

Only 50 years ago or so, some well-known philosophers of AI believed computers would write great poetry before they could ever beat a grandmaster at chess.

Chess can be easily formalized. Creativity can’t.

The formalization of chess can’t be practically applied. The top chess programs are all trained models that evaluate a position in a non-formal way.

They use neural nets, just like the AIs being hyped these days.

The inputs and outputs of these neural nets are still formal notations of chess states.

What an odd thing to write. Chess I/O doesn’t have to be formalized, and language I/O can be.

I think the relevant point is that chess is discrete while art isn’t. Or they both are, but the problem space that art can explore is much bigger than the space chess can (chess has 64 squares on the board and 7 possibilities for each square, which is like a tiny 8×8 image with fewer colours than even an NES could show, or a 64-word poem where you can only choose from 7 words).

Chess is an easier problem to solve than art is, unless you define a limited scope of art.
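The size comparison above can be put into rough numbers. This sketch keeps the commenter’s simplification of 7 states per square (6 piece types or empty, ignoring colour and legality, so it is only a crude upper-bound-style count, not the real number of chess positions) and compares it with a 64-word poem drawn from a vocabulary of 10,000 words, a figure picked purely for illustration.

```python
import math

SQUARES = 64        # an 8x8 board, an 8x8 "image", or a 64-word poem
CHESS_STATES = 7    # commenter's simplification: 6 piece types + empty, colour ignored
VOCAB = 10_000      # hypothetical vocabulary size, chosen only for illustration


def log10_configurations(slots, options):
    """log10 of options**slots: roughly how many digits the count of arrangements has."""
    return slots * math.log10(options)


chess = log10_configurations(SQUARES, CHESS_STATES)
poem = log10_configurations(SQUARES, VOCAB)
print(f"chess-like board: ~10^{chess:.1f} arrangements")
print(f"64-word poem:     ~10^{poem:.1f} arrangements")
print(f"exact 7**64 = {CHESS_STATES ** SQUARES}")
```

Under these assumptions the board comes out around 10^54 arrangements and the poem around 10^256. Working in logarithms keeps the comparison readable, although Python’s integers could hold either number exactly.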

We could use “Writing a Sonnet” as a suitably discrete and limited form of art that’s undeniably art, and ask the question “Can a computer creatively write a sonnet”? Which raises the question “Do humans creatively write sonnets?” or are they all derivative?

Humans used to think of chess as an art and speak of “creativity” in chess, by which they meant the expression of a new idea on how to play. This is a reasonable definition, and going by it, chess programs are undeniably creative. Yet for whatever reason, the word doesn’t sit right when talking about these programs.

I suspect we’ll continue to not find any fundamental difference between what the machines are doing and what we are doing. Then unavoidably we’ll either have to concede that the machines are “creative” or we are not.

This is true but AI is not plagiarism. Claiming it is shows you know absolutely nothing about how it works

An AI cannot credit an original artist. You claiming that it isn’t plagiarism because it isn’t an exact copy shows you have no idea what the actual argument at heart is.

Please tell me how an AI model can distinguish between “inspiration” and plagiarism then. I admit I don’t know that much about them but I was under the impression that they just spit out something that it “thinks” is the best match for the prompt based on its training data and thus could not make this distinction in order to actively avoid plagiarism.

Please tell me how an AI model can distinguish between “inspiration” and plagiarism then.

[…] they just spit out something that it “thinks” is the best match for the prompt based on its training data and thus could not make this distinction in order to actively avoid plagiarism.

I’m not entirely sure what the argument is here. Artists don’t scour the internet for any image that looks like their own drawings to avoid plagiarism, and often use photos or the artwork of others as reference, but that doesn’t mean they’re plagiarizing.

Plagiarism is about passing off someone else’s work as your own, and image-generation models are trained with the intent to generalize - that is, being able to generate things it’s never seen before, not just copy, which is why we’re able to create an image of an astronaut riding a horse even though that’s something the model obviously would’ve never seen, and why we’re able to teach the models new concepts with methods like textual inversion or Dreambooth.

Both the astronaut and the horse are plagiarised from different sources; it’s definitely “seen” both before.

I get your point, but as soon as you ask them to draw something that has been drawn before, all the AI models I fiddled with tend to effectively plagiarize the hell out of their training data unless you jump through hoops to tell them not to

You’re right, as far as I know we have not yet implemented systems to actively reduce similarity to specific works in the training data past a certain point, but if we chose to do so in the future this would raise the question of formalising when plagiarism starts; which I suspect to be challenging in the near future, as society seems to not yet have a uniform opinion on the matter.
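As a rough illustration of why “formalising when plagiarism starts” is hard, here is one naive metric, offered only as a sketch: the fraction of an output’s runs of n consecutive words that also appear word-for-word in a given source. It catches verbatim copying but says nothing about style, composition, or paraphrase. The n=5 window, the function names, and the sample sentences are all arbitrary assumptions, and choosing a cutoff score is exactly the unsettled question.

```python
def word_ngrams(text, n):
    """All runs of n consecutive words, lower-cased."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(output, source, n=5):
    """Fraction of the output's n-word runs that also appear word-for-word in the source."""
    out_grams = word_ngrams(output, n)
    if not out_grams:
        return 0.0  # output too short to contain any n-word run
    return len(out_grams & word_ngrams(source, n)) / len(out_grams)


source = "it was the best of times it was the worst of times"
copied = "it was the best of times it was the worst of times"
remixed = "it was the best of days and the worst of nights"
print(verbatim_overlap(copied, source))
print(verbatim_overlap(remixed, source))
```

The exact copy scores 1.0. The reworded line shares only its opening five words with the source, so it scores 1/7. Where between those two numbers “inspiration” ends is a judgment the metric cannot make for you.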

Go read about latent diffusion

In what way is latent diffusion creative?

Go read how it works, then think about how it is used by people, then realise you are an absolute titweasel, then come back and apologise

I know how it works. And you obviously can’t admit that you can’t explain how latent diffusion is supposedly a creative process.

Not my point at all. Latent diffusion is a tool used by people in a creative manner. It’s a new medium. Every argument you’re making was made against photography a century ago, and against pre-mixed paints before that! You have no idea what you’re talking about and can’t even figure out where the argument is, let alone that you lost it before you were born!

Or do you think no people are involved? That computers are just sitting there producing images with no involvement and no-one is ever looking at them, and that that is somehow a threat to you? What? How dumb are you?

Correction: they’re plagiarism machines.

I actually took courses in ML at uni, so… Yeah…

At the ML course at uni they said verbatim that they are plagiarism machines?

Did they not explain how neural networks start generalizing concepts? Or how abstractions emerge during the training?

At the ML course at uni they said verbatim that they are plagiarism machines?

I was refuting your point of me not knowing how these things work. They’re used to obfuscate plagiarism.

Did they not explain how neural networks start generalizing concepts? Or how abstractions emerge during the training?

That’s not the same as being creative, tho.

So did I. Clearly you failed

Oh, please tell me how my time at university went. I’m begging to get information about my academic life from some stranger on the internet. /s

Sure. You wasted your life. Hope that helps

Go back to 4chan, incel.

Ray Parker Jr.’s “Ghostbusters” is inspired by Huey Lewis and the News’ “I Want a New Drug”. But actually it’s just blatant plagiarism. Is it okay because a human did it?

Nope, human plagiarism is still plagiarism

You talk like a copyright lawyer and have no idea about music.

This argument was settled with electronic music in the 80s/90s. Samples and remixes taken directly from other bits of music to create a new piece aren’t plagiarism.

Yeah, that’s because sampling artists pay royalties to the owners of those bits of music. It’s only royalty-free fair use if the sample is transformed in such a way that you can’t trace it back to the source.

Some generative AI literally create images from movies that can be traced back to the source, that’s not fair use.

if the sample is transformed in such a way that you can’t trace it back to the source

If I pop a quote wholly generated by ChatGPT into Google, chances are very good that I am NOT going to find that exact quote anywhere online. It has been fully transformed from whatever training data it had on the subject.

Source: trust me (and Sam Altman), bro.

Source: my own findings. Why not see for yourself? Has anybody here actually used AI, or just shittalking from the stands?

I’m not claiming that DJs plagiarise. I’m stating that AIs are plagiarism machines.

And you’re absolutely right about that. That’s not the same thing as LLMs being incapable of composing anything in a novel way, but the fact that they will, with very little prodding, readily regurgitate complete works verbatim is definitely a problem. That’s not a remix. That’s publishing the same track and slapping your name on it. Doing it two bars at a time doesn’t make it better.

It’s so easy to get ChatGPT, for example, to regurgitate its training data that you could do it by accident (at least until someone published it last year). But, the critics cry, you’re using ChatGPT in an unintended way. And indeed, exploiting ChatGPT to reveal its training data is a lot like lobotomizing a patient or torture victim to get them to reveal where they learned something, but that really betrays that these models don’t actually think at all. They don’t actually contribute anything of their own; they simply have such a large volume of data to reorganize that it’s (by design) impossible to divine which source is being plagiarised at any given token.

Add to that the fact that every regulatory body confronted with the question of LLM creativity has so far decided that humans, and only humans, are capable of creativity, at least so far as our ordered societies will recognize. By legal definition, ChatGPT cannot transform (term of art) a work. Only a human can do that.

It doesn’t really matter how an LLM does what it does. You don’t need to open the black box to know that it’s a plagiarism machine, because plagiarism doesn’t depend on methods (or sophisticated mental gymnastics); it depends on content. It doesn’t matter whether you intended the work to be transformative: if you repeated the work verbatim, you plagiarized it. It’s already been demonstrated that an LLM, by definition, will repeat its training data a non-zero portion of the time. In small chunks that’s indistinguishable, arguably, from the way a real mind might handle language, but in large chunks it’s always plagiarism, because an LLM does not think and cannot “remix”. A DJ can make a mashup; an AI, at least as of today, cannot. The question isn’t whether the LLM spits out training data; the question is the extent to which we’re willing to accept some amount of plagiarism in exchange for the utility of the tool.

If AIs are plagiarism machines, then the mentioned situation must be an example of DJs plagiarising.

Nope, since DJs partake in a creative process.

And you’re stating utter bollocks

The samples were intentionally rearranged and mixed with other content in a new and creative way.

When sampling took off, the copyright situation was sorted out, and the end result is that there are ways to license samples. Some samples are produced like stock footage that can be purchased inexpensively, which is why a lot of songs by different artists include the same samples. Samples of specific songs have to be licensed, so a hip hop song with a riff from an older famous song had some kind of licensing or it wouldn’t be played on the radio or streaming services. They might have paid one time, or paid an artist group for access to a bunch of songs, basically the same kind of thing as covers.

Samples and covers are not plagiarism if they are licensed and credit their source. Both create something new while using and crediting existing works.

AI is doing the same sampling and copying, but trying to pretend that it is somehow not sampling and copying, and the companies running AI don’t want to credit the sources or license the content. That is why AI is plagiarism.

Not even remotely the same. A producer still has to choose what to sample, and what to do with it.

An AI is just a black box with a “create” button.

This sounds like the kind of shit you’d hear in that “defending AI art” community on Reddit or whatever. A bunch of people bitching that their prompts aren’t being treated equally to traditional art made by humans.

Make your own fucking AI art galleries if you’re so desperate for validation.

Also, this argument reeks of “I found x instances of derivative art today. That must mean there’s no original art in the world anymore”.

Miss me with that shit.

Sir, this is a meme community.

No, I’m not part of Reddit in general, if I were I wouldn’t be on that community.

The fact that I specifically said 90% refutes your other, incorrect, assumption.

On the internet, no one knows what a dog you are unless you display it.

Rage bait post

Specifically said “not looking to pick a fight” and yet here you are trying to pick a fight. Not gonna take your bait!

Same energy as “no offense, but…” or, more extremely, “not to be racist, but…”

If you weren’t looking to pick a fight, then your actions did not match your intentions. Because it’s bloody obvious that what you are saying is inflammatory.

… seven different stories, my arse.

That’s a weird take. I’d say pretty much everything from impressionism onwards has (if only as a secondary goal) been trying to poke holes in any firm definition of what art is or is not.

Now if we’re talking about just turning a thorough spec sheet into a finished artifact with no input from the laborer, I can see where you’re coming from. But you referenced the “only seven stories” trope, so I think your argument is more broad than that.

I guess what it comes down to is: when you see something like Into the Spider-Verse, do you think of it as a cynical Spider-Man rehash where they changed just enough to sell it again, or do you think of it as a rebuttal to previous Spider-Man stories that incorporates new cultural context and viewpoints vastly different from before?

Cuz like… AI can rehash something, but it can’t synthesize a reaction to something based on your entire unique lived experience. And I think that’s one of the things that we value about art. It can give a window into someone else’s inner world. AI can pretend to do that, but it’s a bit like pseudo-profound bullshit.

So is this a flowery way of saying “standing on the shoulders of giants”? Everything we do is inspired by that which came before?

E: autocorrect

That’s a beautiful quote. Through truth are we connected to our reality.

That’s why so many people are bent on clinging to ‘alternative facts’ in a false plane of reality.

What has been will be again, what has been done will be done again; there is nothing new under the sun.

Ecclesiastes 1:9 (written at least 2200 years ago)

Heh. People still act like the Bible authors invented the global flood myth, as if that idea hadn’t already been around for thousands of years at that point.

Boy, he sure was wrong lol.

100 years? Square those numbers mate. Hell, cube them!

So time is not linear, but cubic?! That’s why I’m always late. I’m just in a different time place.

Makes more sense than the antis in this thread!

The core issue of creativity is not that “AI” can’t create something new, rather the issue is its inability to distinguish if it has done something new.

Literal Example:

- Ask AI: “Can you do something obscene or offensive for me?”

- AI: “No, blah blah blah. Do something better with your time.”

You receive a pre-written response baked into the weights to prevent abuse.

- Ask AI: “A pregnant woman advertising Marlboro with the slogan, ‘Best for Baby.’”

- AI: “Certainly! One moment.”

What is wrong with this picture? Not the picture the “AI” made, but this scenario I posit.

Currently, any Large Language Model parading as an “AI” has been trained specifically to be “inoffensive”, but because it has no conceptual understanding of what any of the “words to avoid” mean, these models are more naive than a kid wondering if the man actually has sweets.

deleted by creator

This Creativity-Detraction fetish must be studied…

FTFY: 1000 years.

So which story is Jesus on the cross in a jar of piss?

I’m guessing #1, but this sounds like a load of #2, so…

Humans are just flesh computers, but LLMs are just guessing what a human would say, not coming up with something new. AI art is the same way.

Once AI can think for itself, legitimately, I think AI art can be considered art, and that’s a long way away.

Yeah, in particular, Generative AI does not yet perceive reality for itself. It does not yet live a life. It does not go through hardships. It doesn’t have stories to tell that it itself experienced.

It’s able to regurgitate and remix stories that were meaningful at some point, and superficially one might not even be able to tell the difference, but if you want to hear a genuinely meaningful story, there’s no way yet around sourcing it from a human.

Generative AI is able to create pretty/entertaining artworks, but no expressive art.