- cross-posted to:

- news

A shocking story was promoted on the “front page” or main feed of Elon Musk’s X on Thursday:

“Iran Strikes Tel Aviv with Heavy Missiles,” read the headline.

This would certainly be a worrying world news development. Earlier that week, Israel had conducted an airstrike on Iran’s embassy in Syria, killing two generals as well as other officers. Retaliation from Iran seemed like a plausible occurrence.

But, there was one major problem: Iran did not attack Israel. The headline was fake.

Even more concerning, the fake headline was apparently generated by X’s own official AI chatbot, Grok, and then promoted by X’s trending news product, Explore, on the very first day of an updated version of the feature.

People who deploy AI should be held responsible for the slander and defamation the AI causes.

Slander is spoken. In print, it’s libel.

Get me pictures of Spiderman!

Parker, why does Spider-Man have seven fingers in this photo?!

Why do I imagine Xitter lawyers arguing that since it was neither spoken nor “printed”, they can’t be charged?

You expect people pushing AI fear mongering to be literate?

How is it fearmongering when shit like this is happening? AI as it stands is unregulated and will continue to cause issues if left this way.

So will self-driving cars, the oil industry, and a bunch of others. The US is a plutocracy, and Musk has enough power to keep playing around with this, so that’s it. If you want actual change, either start organizing for a general strike or a civil war, since stopping Musk is not on the ballot, and most likely won’t be for either of your lifetimes.

I mean, if your problem is just narrowly Musk, the one guy, you don’t need a whole war; just one shot.

I’m not advocating this, just pointing out, you know? Not that I have a problem with turning the class massacre into a class war.

The thing is, the vast majority of terrorist attacks and assassinations are organized by rich people using poor people as pawns to further their political agenda. Such things are rarely born from people who are in touch with society and are compassionate with others.

9/11 was organized by rich people to get back at other rich people for attacking their business. Even today’s right wing crazies only exist because there is a propaganda machine running on obscene wealth whipping desperate people into a frenzy.

I don’t see what terrorism has to do with this. Unless you mean the practical definition of ‘things that upset the wealthy’. Which is dumb. I want a special scary word for things that upset me.

Well if you read OpenAI’s terms of service, there’s an indemnification clause in there.

Basically if you get ChatGPT to say something defaming/libellous and then post it, you would foot the legal bill for any lawsuits that may arise from your screenshot of what their LLM produced.

I wonder how legislation is going to evolve to handle AI. Brazilian law would punish a newspaper or social media platform claiming that Iran just attacked Israel - this is dangerous information that could affect somebody’s life.

If it were up to me, if your AI hallucinated some dangerous information and provided it to users, you’d be personally responsible. I bet if such a law existed, in less than a month all those AI developers would very quickly abandon the “oh no you see it’s impossible to completely avoid hallucinations for you see the math is just too complex tee hee” and would actually fix this.

I bet if such a law existed, in less than a month all those AI developers would very quickly abandon the “oh no you see it’s impossible to completely avoid hallucinations for you see the math is just too complex tee hee” and would actually fix this.

Nah, this problem is actually too hard to solve with LLMs. They don’t have any structure or understanding of what they’re saying so there’s no way to write better guardrails… Unless you build some other system that tries to make sense of what the LLM says, but that approaches the difficulty of just building an intelligent agent in the first place.

So no, if this law came into effect, people would just stop using AI. It’s too cavalier. And imo, they probably should stop for cases like this unless it has direct human oversight of everything coming out of it. Which also, probably just wouldn’t happen.

Yep. To add on, this is exactly what all the “AI haters” (myself included) are going on about when they say stuff like there isn’t any logic or understanding behind LLMs, or when they say they are stochastic parrots.

LLMs are incredibly good at generating text that works grammatically and reads like it was put together by someone knowledgeable and confident, but they have no concept of “truth” or reality. They just have a ton of absurdly complicated technical data about how words/phrases/sentences are related to each other on a structural basis. It’s all just really complicated math about how text is put together. It’s absolutely amazing, but it is also literally and technologically impossible for that to spontaneously coalesce into reason/logic/sentience.

Turns out that if you get enough of that data together, it makes a very convincing appearance of logic and reason. But it’s only an appearance.

You can’t duct tape enough speak and spells together to rival the mass of the Sun and have it somehow just become something that outputs a believable human voice.

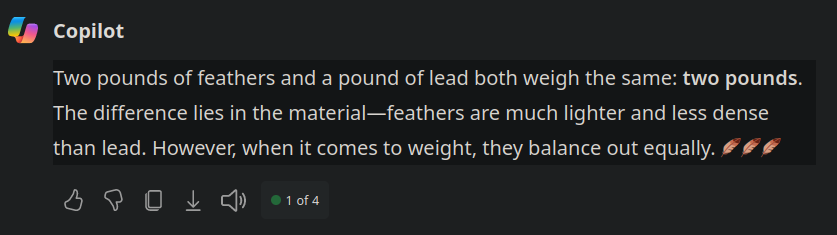

For an incredibly long time, ChatGPT would fail questions along the lines of “What’s heavier, a pound of feathers or three pounds of steel?” because it had seen the normal variation of the riddle with equal weights so many times. It has no concept of one being smaller than three. It just “knows” the pattern of the “correct” response.

It no longer fails that “trick”, but there’s significant evidence that OpenAI has set up custom handling for that riddle over top of the actual LLM, as it doesn’t take much work to find similar ways to trip it up by using slightly modified versions of classic riddles.

A lot of supporters will counter “Well I just ask it to tell the truth, or tell it that it’s wrong, and it corrects itself”, but I’ve seen plenty of anecdotes in the opposite direction, with ChatGPT insisting that its hallucination was fact. It doesn’t have any concept of true or false.

The shame of it is that despite this limitation LLMs have very real practical uses that, much like cryptocurrencies and NFTs did to blockchain, are being undercut by hucksters.

Tesla has done the same thing with autonomous driving too. They claimed to be something they’re not (fanboys don’t @ me about semantics) and made the REAL thing less trusted and take even longer to come to market.

Drives me crazy.

Yup, and I hate that.

I really would like to one day just take road trips everywhere without having to actually drive.

Right? Waymo is already several times safer than humans and Tesla’s garbage, yet municipalities keep refusing them. Trust is a huge problem for them.

And yes, haters, I know that they still have problems in inclement weather, but that’s kinda the point: we would be much further along if it weren’t for the unreasonable hurdles they keep facing because of fear created by Tesla.

Hadn’t heard of this. Thanks!

For road trips (i.e. interstates and divided highways), GM’s Super Cruise is pretty much there unless you go through a construction zone. I just went from Atlanta to Knoxville without touching the steering wheel once.

I’ll look into that when my Kia passes away. Thank you!

Trains are really good for that

You can’t road trip in a train.

I love that example. Microsoft’s Copilot (based on GPT-4) immediately doesn’t disappoint:

It’s annoying that for many things, like basic programming tasks, it manages to generate reasonable output that is good enough to goad people into trusting it, yet hallucinates very obviously wrong stuff or follows completely insane approaches on anything off the beaten path. Every other day, I have to spend an hour justifying to a coworker why I wrote code this way when the AI has given him another “great” suggestion, like opening a hidden window with a UI control to query a database instead of going through our ORM.

but it is also literally and technologically impossible for that to spontaneously coalesce into reason/logic/sentience

Yeah, see, one very popular modern religion (without official status or any need for one to explicitly identify with it, but really influential) is exactly about “a wonderful invention” spontaneously emerging in the hands of some “genius” who “thinks differently”.

Most people put this idea far above reaching your goal by making a myriad of small steps, not skipping a single one.

They also want a magic wand.

The fans of “AI” today are deep inside simply luddites. They want some new magic to emerge to destroy the magic they fear.

Lol, the AI haters are luddites, not the AI supporters. AI is the present and future, and just because it isn’t perfect doesn’t mean it’s not good enough for many things. And it will continue to get better, most likely.

You should try and understand that it’s not magic, it’s a very specific set of actions aimed at a very specific result with very specific area of application. Every part of it is clear. There’s no uncharted area where we don’t know at all what happens. Engineering doesn’t work like that anywhere except action movies.

By the same logic as “it isn’t perfect”, a plane made of grass by cargo cult members could suddenly turn into a real aircraft.

And it won’t magically become something above it, if that’s what you mean by “get better”.

For the same reason, we still don’t have a computer virus that has developed consciousness, and we won’t.

And if you think otherwise then you are what I described.

It’s absolutely amazing, but it is also literally and technologically impossible for that to spontaneously coalesce into reason/logic/sentience.

This is not true. If you train these models on the game of Othello, they’ll keep a state of the world internally and use it to predict the next move played (1). To do addition and multiplication, they execute an algorithm on which they were not explicitly trained (although the GPT family is surprisingly bad at it, due to a badly designed tokenizer).

These models are still pretty bad at most reasoning tasks. But training on predicting the next word is a perfectly valid strategy; after all, the best way to predict what comes after the “=” in 1432 + 212 = is to do the addition.

Yep, the hallucination issue happens even in GPT-4. In my experience, certain topics bring about hallucinations more than others, but if ChatGPT (even with GPT-4 or whatever other advanced version) gets “stuck” on believing its hallucinations, the only way to convince it is to plainly state the part that’s wrong and direct it to search Bing or the internet specifically for that. Otherwise you just let out a sigh and start a new chat. If you spend too much time negotiating with it, that wastes tokens, so the chat becomes bloated and it forgets stuff from earlier in the conversation; not to mention that you’re technically paying to use the more advanced model anyway. Basically, the more you treat the chat like a normal conversation, the worse the AI performs. I guess that’s why “prompt engineering” was or is a thing, whether legitimate or not.

I also noticed that if you pay for credits with OpenAI to use their “playground” and create a customized GPT-4 setup, adjusting temperature and response types, it takes some getting used to, because it is WAY different from ChatGPT regardless of which version of GPT you have it set to. It actually blew me away with how much better it “””understood””” software development, but you have to set up chats yourself, it’s more complex, and you pay per token, so mistakes cost you. If it weren’t such a pain and I had a specific use case, I would definitely rather pay for OpenAI credits as needed than for their bs “Plus” $20/month subscription for a nerfed GPT-4 chatbot.

So no, if this law came into effect, people would just stop using AI. And imo, they probably should stop for cases like this unless it has direct human oversight of everything coming out of it.

Then you and I agree. If AI can be advertised as a source of information but at the same time can’t provide safeguarded information, then there should not be commercial AI. Build tools to help video editing, remove backgrounds from photos, go nuts, but do not position yourself as a source of information.

Though if fixing AI is at all possible, even if we predict it will only happen after decades of technology improvements, it for sure won’t happen if we are complacent and do not add such legislative restrictions.

Unless you build some other system that tries to make sense of what the LLM says, but that approaches the difficulty of just building an intelligent agent in the first place.

I actually think an attempt at such an agent would have to include the junk generator. And some logical structure with weights and feedbacks it would form on top of that junk would be something easier for me to call “AI”.

I actually have been thinking about this some, and all those “jobs” that people are losing to AI? They will probably end up being jobs that add a human component back into AI for the firms that have doubled down on it. Human oversight is going to be necessary, and these companies don’t want to admit that, even for things that LLMs are actually reasonably good at. So either companies will not adopt AI and keep their human workers, or they’ll dump them for LLMs, quickly realize they need people in specialties to comb through AI responses, and hire them back either for that or for the very job they tried to replace them with LLMs in.

Because reliability and cost are the only things that are going to make one LLM more preferable to another now that the Internet has basically been scraped for useful training data.

This is algorithms all over again but on a much larger scale. We can’t even keep up with mistakes made by algorithms (see copyright strikes and appeals on YouTube or similar). Humans are supposed to review them. They don’t have enough humans to do that job.

The legislation should work like it would before. It’s not something new, like filesharing in the Internet was.

Which means - punishment.

The legislation doesn’t work because part of the problem is which “products” these LLMs are being attached to. We already had this argument in the US in the early and mid oughts, and nothing was really done about the misinformation proliferated on places like Twitter and Facebook, specifically because of what they are. Social media sites are protected by Section 230 in the US and are not considered news aggregators. That’s the problem.

People can’t seem to agree on whether or not they should be. I think if the platform (not the users) is pushing something as a legitimate news source, it shouldn’t be protected by Section 230 for the purposes of news aggregation. But I don’t know that our laws are even attempting to keep up with new tech like LLMs.

NY’s got a chatbot that was actively giving out pseudo-legal advice, suggesting that businesses should do illegal things. They aren’t even taking it down. They aren’t being forced to take it down.

But laws don’t punish ownership. Ownership is sacred.

Another case of Musk cutting corners to the max and endangering lives, but why should he care? He is in control, and that is the only thing that matters to him, even if he loses billions of dollars.

To everyone that goes to “X” to get the “real”, unfiltered news, I hope you can see that it’s not that site anymore.

Yet, annoyingly, much of the press still uses it to disseminate news.

I understand journalism is in a rough spot these days and many are there against their will but something needs to change abruptly. This slow exodus is too slow for democracy to survive '24.

They could use Nostr. It’s a bit like going to a square and yelling. The downside is that you are not heard from every corner of it, but I just remembered that it exists and thought that the idea is actually very nice.

I’d argue it never was anything outside of pulling net celebs names from hats and claiming they were rapists and racists without evidence, and then having them get chased off the internet, destroying their careers in the process and in some cases causing suicides… Unless they actually did it, because then they were rich and could just buy good publicity or start an Alt-Right circle jerk where they can claim “Wokeness” did it.

It was the “yell at celebrities” website. The whole “buy a blue check” thing destroyed it.

It was never that site; the FBI was literally using it for narrative control before Elon bought it.

Not saying I like it now, but shit was pretty sketchy then too.

“Grok” sounds like the name of a really stupid ork from a D&D campaign.

In case you’re not familiar, https://en.m.wikipedia.org/wiki/Grok.

It’s somewhat common slang in hacker culture, which of course Elon is shitting all over as usual. It’s especially ironic since the word roughly means “deep or profound understanding”, which their AI has anything but.

Pretty sure it’s something from a Heinlein book, namely Stranger from a Strange World.

Grok is from Stranger in a Strange Land.

Yup. It also got added to the Jargon File, which was an influential collection of hacker slang.

If there’s one thing that Elon is really good at, it’s taking obscure beloved nerd tidbits and then pigeon-shitting all over them.

Sounds a lot like cultural appropriation, especially since it is used to market goods and services.

‘UMIE SO ZOGGIN’ SMART, 'SPLAIN WOT IDJIT BOOK MORK’Z FROM, THEN

Grok smash!

Oh, what a surprise. Another AI spat out some more bullshit. I can’t wait until companies finally give up on trying to do everything with AI.

I can’t wait until companies finally give up on trying to do everything with AI.

I don’t think that will ever happen.

They accept that harmful AI-driven car accidents will happen. It’s all part of the learning / debugging process to them.

The issue is that the process won’t ever stop. It won’t ever be debugged sufficiently

EDIT: Due to the way it works. It’s a bit like static error in control theory: for different applications it may or may not be acceptable. The “I” in PID regulators and all that, IIRC.

Due to the way it works. It’s a bit like static error in control theory: for different applications it may or may not be acceptable. The “I” in PID regulators and all that, IIRC.

Oh great, I’m getting horrible flashbacks now to my controls class.

Another way to look at it is if there’s sufficient lag time between your controlled variable and your observed variable, you will never catch up to your target. You’ll always be chasing your tail with basic feedback control.
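To make the “static error” and the “I” term concrete, here is a minimal sketch under assumed conditions, not anything from the thread: a hypothetical first-order plant dy/dt = -y + u, simulated with forward-Euler steps, and arbitrary gains (kp=4, ki=2) picked only for illustration. With proportional control alone, the output settles at kp/(1+kp) of the setpoint and never closes the gap; adding an integral term accumulates that leftover error and pushes it toward zero.

```python
def simulate(kp, ki, setpoint=1.0, steps=2000, dt=0.01):
    """Simulate an assumed first-order plant dy/dt = -y + u under PI control.

    Uses simple forward-Euler integration; the plant and gains are
    illustrative assumptions, not a model of any real system.
    """
    y = 0.0         # plant output
    integral = 0.0  # running integral of the error (the "I" in PID)
    for _ in range(steps):
        error = setpoint - y
        integral += error * dt
        u = kp * error + ki * integral  # PI control law (ki=0 gives P-only)
        y += (-y + u) * dt              # forward-Euler update of the plant
    return y


p_out = simulate(kp=4.0, ki=0.0)   # P only: settles near kp/(1+kp) = 0.8
pi_out = simulate(kp=4.0, ki=2.0)  # PI: integral term removes the offset
print(f"P only: final output {p_out:.3f}, static error {1.0 - p_out:.3f}")
print(f"PI    : final output {pi_out:.3f}, static error {1.0 - pi_out:.3f}")
```

Raising kp shrinks the P-only offset (kp=9 settles near 0.9) but never eliminates it, which is the point about static error. This sketch has no delay in the loop, so it does not model the lag problem from the reply above; that would need a dead-time element between the control input and the measured output.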

It won’t ever be debugged sufficiently

It will, someday. Probably years and years down the road (pardon the pun), but it will.

By the way, your reply to me seems very AI-ish. Are you a bot?

No, but English is not my first language

No, but English is not my first language

Fair enough. Apologies.

I guess the argument is that this is what “innovation and disruption” looks like. When they finally iron out so that chatbots won’t invent fake headlines, they will pile on a new technology that endangers us in a new way. This is the acceptable margin of error to them.

AI isn’t inherently bad. Once AI cars cause fewer accidents than human drivers (even if they still cause some accidents), it will be moral to use them on roads.

No, cars need to end. Move to trains.

AI cars already cause drastically fewer accidents. And the accidents they do cause are overwhelmingly minor.

People hate it when an accident happens and there’s no one to blame. For now it’s still the driver’s responsibility, but that’s not always going to be the case. We’re never reaching zero traffic deaths, even with self-driving cars that are a hundred times better than the best human driver.

Beware, terminally incompetent interns everywhere. Doing something incredibly damaging to your company over social media on your first day is officially a job that’s been taken by AI.

Something to do with Twitter and Elon was disinformation? I’m keeling over in shock.

Hope his ass goes to court over this.

I hope he has an aneurysm using a device to enlarge his penis or something.

I hope his penis is enlarged by the device that gives him an aneurysm, so that he has a closed casket and the mortician does a poor job on his gross, poorly modified body.

Grok, world’s smartest orc

I don’t really understand this headline

The bot made it? So why was it promoted as trending?

It’s pretty simple: trending is based on . . . what’s trending among users.

Or as the article explains for those who can’t comprehend what trending means.

Based on our observations, it appears that the topic started trending because of a sudden uptick of blue checkmark accounts (users who pay a monthly subscription to X for Premium features including the verification badge) spamming the same copy-and-paste misinformation about Iran attacking Israel. The curated posts provided by X were full of these verified accounts spreading this fake news alongside an unverified video depicting explosions.

Amusingly Grok also spat a headline about how police were being deployed to shoot the earthquake after being exposed to a sarcastic tweet. https://i.imgur.com/qltkEsU.jpeg

It does say it’s likely hyperbole, so they probably just tased and arrested the earthquake.

Also I’m impressed by the 50,000 to 1,000,000 range for officers deployed. It leaves little room for error.

I wonder if the wide margin is the AI trying to formulate logic and numbers in the story, but it realizes it doesn’t know how many officers would be needed to shoot the earthquake, since that would logically depend on the magnitude of the earthquake, which the AI doesn’t know. So it figures, well alright, tectonic plates are rather resistant to firearms discharge and other potential law enforcement tactics, so it starts high at 50,000 but decides 1,000,000 is a reasonable cap, as there just can’t be more than that many officers present in the state or country.

Wow. What a world we live in.

A similar thing happened with major newspapers about 100 / 150 years ago … governments realized that if any one group or company had control over all the information without regulation, businesses would quickly figure out ways to monetize information for the benefit of those with all the money and power. They then had to figure out how to start regulating newspapers and news media in order to maintain some sort of control and sanity in the entire system.

But like the newspapers of old … no one will do anything about all this until it causes a major crisis or causes a terrible event … or events.

In the meantime … big corporations controlling 99% of all media and news information will stay unregulated or regulated as little as possible until terrible things happen and society breaks down.

By the way, yeah, the LLMs of that time looked like some poor guy writing articles for pennies about astrology, or about how Redskins are cool but should be exterminated, or inventing fake animals and plants every time.

I’ve sort of mixed up L. F. Baum’s biography with Marek from “Good Soldier Shveyk”, but many consider Marek to be a portrait of the author himself.

#smart

Skynet has begun

Was this some glitch in the matrix, then? (For reference: attack happened on 2024-04-14)

Not to defend elon here but so what? Is it his job?

As CEO he is ultimately responsible for his platform. So yes, in the end it’s his responsibility. It’s why he gets paid the big bucks.

Nah, it’s a bit like government. It’s only his responsibility if it is no one else’s responsibility. Governments can have the most corrupt cabinets, and most presidents still do not resign/abdicate, whatever the word is.

Nah, it’s a bit like government.

No it’s not.

Ok, you just downvote and say no, but give no explanation. In my gov, several cases of corruption arose during the last couple of years, and way more in the past. They affect high-ranking ministers, and yet the president does not resign. Same with companies: they get paid the most, do the least, and claim it is because they have “lots of responsibilities”, but still never pay the price.

Corporations are completely authoritarian, while most governments are not, or at least not completely. If there really is a “rogue engineer”, Musk can very easily fire them. Even if there was, it’s his responsibility to organize a company in such a way that this cannot happen, with people having oversight over other people.

He is very clearly failing to do any of that.

but no explanation given

You didn’t explain, so why should I? I did see you made things up.

I assume that Twitter still has tons of managers and team leads that allowed this and have their own part of the responsibility. However, Musk is known to be a choleric with a mercurial temper, someone who makes grand public announcements and then pushes his companies to release stuff that isn’t nearly ready for production. Often it’s “do or get fired”.

So… an unshackled AI generating official posts, no human hired to curate the front page, headlines controlled through up-voting by trolls and foreign influence campaigns, all running unchecked in the name of “free speech” – that’s very much on brand for a Musk-run business, I’d say.

MUSK BAD RABBLE RABBLE RABBLE

“Somebody I don’t like said something bad about one of the world’s richest oligarchs, therefore all criticism of him is invalid”

The guy has enough money to protect himself from bad criticism and address narratives he doesn’t like; he doesn’t need sad pathetic losers defending him on the internet like he’s a defenseless baby.

You people will take a statement, then do mental gymnastics in your mind to make it fit your retarded narrative.

Holy fuck

I mean, he’s responsible for a pretty heinous thing in this instance… It’s not like people are pissing their pants over nothing.

If your company publishes an explicitly fabricated headline stating there was a missile attack that never happened, generated by ai, and then presented to a ton of people on a major social media site, criticism is pretty deserved… (≖_≖ )