- cross-posted to:

- [email protected]

open-source […] a bunch of binary files

Please explain.

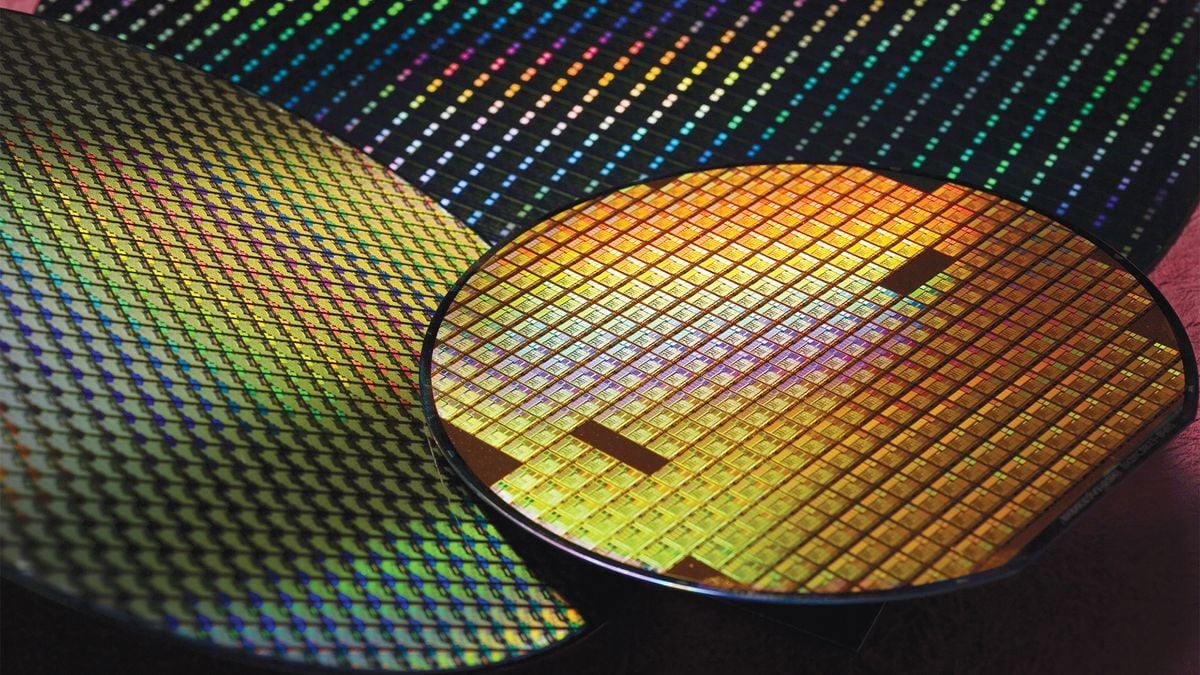

the semiconductor industry desperately needs to collect expert information. Many aging experts are retiring and taking their knowledge with them

So let’s feed their knowledge to a Large Language Model, instead of building a knowledge base.

That is without a doubt the single most stupid thing I’ve heard this entire week

Why? I got my wife a SemiKong and she loves it.

I love when it is clear that the writer just copied and pasted a press release from a company with very little rewriting.

Automating hardware bugs, etc? An LLM is absolutely not the right tool for nanometer scale physics

Why do you say that?

I mean my intuition would tend to agree with you, but if it works… I could believe it.

So I’m just wondering why you would assert that this is a bad idea? What don’t I know?

SemiKong advertises a 20-30% reduction in time to market for new chip designs and a 20% improvement in first-time-right manufacturing scores.

Oh yeah? How is that accomplished when it can take a decade of development to get a new CPU out the door? Was this developed a decade ago?

You know there are simpler custom chips too right?

That said, it sounds a bit like bullshit to me too.

Totally, but if we’re talking about simple custom chips, my point still stands, as there is nothing to compare a custom chip to and the simplicity doesn’t seem to necessitate a LLM to map out.

That doesn’t follow at all…

the simplicity doesn’t seem to necessitate a LLM to map out.

So are you saying that if something is so simple that you could do it in a week, then it isn’t worth using a tool that would get it done in 4 days?

I mean, no, that doesn’t necessitate use of an LLM. But by that logic, doing math never necessitates the use of a calculator; just do it on paper. Sure, you could…

I’m saying there are simple and difficult chips to map out and the simple ones shouldn’t need this by virtue of being simple.

Your analogy of using a computerized tool to complete a task doesn’t really hold as the math you use a calculator for wouldn’t be considered “simple math.”

No, the analogy is fine. If you have a task (math in the previous example) that takes some amount of time (doesn’t matter how much), is it worth using a tool (calculator) that makes it faster?

That was the analogy.

I mean … the kind of CPUs that take this long are probably unnecessary for many things. One can run quests, something like Doom, something like SW: Rebellion and SW: X-Wing Alliance on hardware from simpler times. One can also, surprisingly or not, do office tasks and listen to music on the same hardware. One can even render things like the computer-generated parts of Babylon 5 on it. I would love to live in such a world. Also, with modern processes and voltages, such hardware could probably be insanely energy-efficient compared to what we commonly use.

That said, I can understand machine generation and optimization of chip designs, and machine checking of them. Something they already do, I’m certain.

But what the hell an LLM would contribute there seems unclear to me.