Well, time to look for used then. $549 for the lowest-end card is almost what the highest-end one used to cost.

Or you could consider alternatives, since Nvidia abusing its market position, and customers not caring, is exactly why prices keep going up.

I agree. The Intel B580 seems okay-ish for low-end gaming. Got any AMD recommendations to replace a GTX 1070?

Last I checked, the 7900 GRE looked good in terms of performance per price.

But I’d wait for AMD to finally announce details and pricing for the new generation. I think it’s a bad moment to buy a new GPU until all the Nvidia and AMD announcements are done.

Yeah, I’m not in a hurry or anything, just trying to get a feel for what’s on the market.

The problem with the B580 is its huge driver overhead, which leads to worse performance when it’s paired with lower-end or even midrange CPUs. Which is exactly what you’d usually pair it with.

What’s an 8700K considered nowadays? And care to elaborate on what driver overhead means? A link would also be fine, just trying to inform myself as much as possible :)

Hardware Unboxed, for example, did some benchmarks on the topic a few days ago. The issue wasn’t noticed at launch, when everyone tested with high-end processors to eliminate any bottlenecks, but it has been discovered recently.

I would say an 8700K is maybe lower midrange, considering it’s been a while since it was released? Not sure if anyone else has tested older Intel CPUs, since here it’s mostly AMD stuff, but the problem still applies.

Thank you so much, will check that as soon as I can.

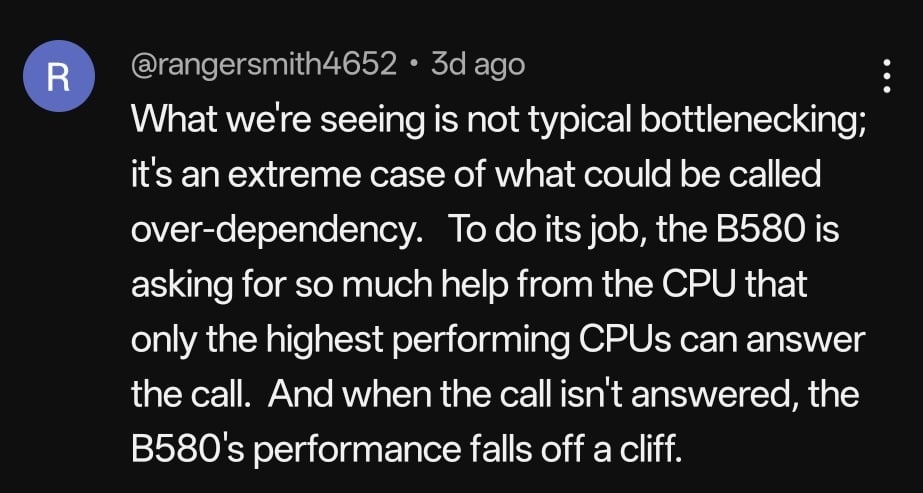

Edit: that was really useful. It turns out older CPUs are not a great match for Arc GPUs. Here’s a summary from the comment section that I found quite simple and elegant:

Yep, that’s pretty much the gist of it. Driver overhead isn’t something completely new, but with the B580 it is so high that it becomes a massive problem in exactly the use case where the card would make the most sense.

Another, albeit smaller, issue is the idle power draw. Here is a chart (taken from this article):

That also plays a role in an honest value evaluation, especially for anyone planning to use the card for a long time. Peak power draw doesn’t matter as much, imo, since most of us won’t push our systems to their limits most of the time. But idle power draw does add up over time. Imo it also kind of kills the card for the second niche use besides budget-oriented gaming, which would be a homelab setting for stuff like video transcoding.
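To put "idle power draw adds up" into numbers, here is a rough back-of-the-envelope sketch. The wattage, hours, and electricity price below are hypothetical placeholders, not values from the chart above; plug in your own.

```python
def idle_energy_cost(extra_idle_watts: float, idle_hours_per_day: float,
                     price_per_kwh: float, years: float = 1.0) -> float:
    """Cost of extra idle power draw: W * h/day * 365 days -> kWh, times price."""
    kwh_per_year = extra_idle_watts * idle_hours_per_day * 365 / 1000
    return kwh_per_year * price_per_kwh * years


if __name__ == "__main__":
    # Hypothetical figures (NOT from the chart): 20 W extra at idle,
    # 8 idle hours a day, $0.30 per kWh.
    yearly = idle_energy_cost(20, 8, 0.30)
    five_years = idle_energy_cost(20, 8, 0.30, years=5)
    print(f"~${yearly:.2f} per year, ~${five_years:.2f} over five years")
```

For a homelab box that idles around the clock, set `idle_hours_per_day` to 24 and the cost triples.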

So as much as I am honestly rooting for Intel, and think they are making really good progress in entering such a difficult market, this isn’t it yet. Maybe third time’s the charm.

That was the launch price of the 980 back in 2014.

(Adjusted for US inflation, $550 in 2014 would be about $730 today, so roughly the 5070 Ti’s price.)
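The inflation adjustment is just a ratio of price indices. A minimal sketch, assuming US CPI-U annual averages of about 236.736 (2014) and 313.689 (2024); those index values are my assumption here, so double-check them against the official BLS series.

```python
# Assumed US CPI-U annual averages (verify against the official BLS data).
CPI_U_ANNUAL_AVG = {2014: 236.736, 2024: 313.689}


def adjust_for_inflation(amount: float, from_year: int, to_year: int,
                         cpi: dict = CPI_U_ANNUAL_AVG) -> float:
    """Scale a price by the ratio of the two years' price index values."""
    return amount * cpi[to_year] / cpi[from_year]


if __name__ == "__main__":
    print(round(adjust_for_inflation(550, 2014, 2024)))
```

With these assumed index values it lands at roughly $729, in line with the ~$730 figure.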

I guess my $170 1050 Ti will have to survive a bit longer, especially with current TDPs. My whole fucking computer uses 250 W in games, monitor included.

Still rocking a 1060 3GB myself. I think I paid $179 new.

There is no likely scenario in which I would pay $2,000 for a video card.

I’m sure there would be a few, surely? Multi-million dollar lottery win; finding an old USB key with a bunch of bitcoin; discovering an oil/gas reserve on your property; finding a suspicious unattended briefcase filled with unmarked bills; an unexpected inheritance from a previously unknown great-aunt, provided you spend a night in her haunted manor…

…you know, just those usual circumstances that happen to all of us!

No, and you probably wouldn’t need one either, since very few people actually need that kind of computing power today.

The only argument here is that you have a special use case.

I self-host my own LLMs and Home Assistant voice, so having multiple models loaded at once is appealing. I am the exceedingly rare use case, and even I think it’s too much. For the same price I could get two 3000-series cards that would do what I need.

AND 575 WATTS? Jesus, dude, that’s the most expensive space heater ever.

Even I wouldn’t pay this ridiculous price, and I do animation (hence GPU rendering) for a living.

$2000… yeah, that’s a little ridiculous. That’s almost what my entire build cost, lmao.