Unfortunately, they miscalculated and assumed that single-threaded performance (specifically clock speeds) would keep advancing at the pace it had before Crysis was released. What happened instead was a shift toward multithreading: CPUs shipped with more cores and comparatively smaller IPC improvements.

IPC improvements were obviously still a thing, but it took a while for hardware to be able to run Crysis maxed out at good framerates.

It wasn’t exactly the best-optimized game either. Call of Duty 4 came out the same year and looked spectacular without crushing your computer.

They weren’t even in the same league though graphically (on PC). Crysis really was that one game which blew everything else out of the water. In many ways it set the precedent for companies to implement ultra quality settings which only worked with the very best GPUs of each generation.

It helped Call of Duty 4 that its levels were a lot more restricted and didn’t need all the physics and AI calculations that Crysis had to do. Even then, Crysis had better graphics and more advanced effects.

The weird thing is that it had low quality settings that let less powerful PCs run it.

I finished the whole game on a Core 2 Duo and some Nvidia GPU from the same era that I don’t even remember.

And you could fine tune basically any setting.

I wish they hyped that as cool too, I never even tried to run it lol

No one here is mentioning that Crysis released right when single core processors were maxing out their clock speed while dual and quad core processors were basically brand new. It wasn’t obvious to software developers that we wouldn’t have 12GHz processors in a couple years. Instead, the entire industry would shift direction to add more cores to boost performance rather than sizing up each core.

So a big bottleneck for Crysis was that it would max out single core performance on every PC for years because single core clock speed didn’t improve very much after that point.

The joke is I don’t think any “future PCs” can run Crysis now, since it’s not compatible with new Windows versions, iirc. I’ve got a cobwebbed copy I can’t play in my Steam library rn.

It works on Linux on Proton, at least.

Linux is the future of gaming as well as the future of gaming’s past.

Still looking great at FHD resolution.

If you do that today gamers will cry about it being “unoptimized”

People complain about it because modern games run horribly compared to older games and don’t look any better. Modern games really are unoptimized.

If you get 20 fps on medium settings in crowded areas with the latest RTX card, yeah, it’s unoptimized.

No, mate. Zalyssia just feels like that. We’re all very drunk.

Gamers were crying about it being unoptimized back then too. They don’t care what the game accomplishes, only that it runs perfectly smoothly on their hardware.

What’s the point in making a game that only works well in the future, when other games look better or you’ve stopped caring? I never played Crysis back then. I tried it recently and it felt outdated and cheap, so I put it down.

It worked well; it just had the capacity to scale up once the tech caught up.

Like having one car and owning a two-car garage: it doesn’t not work, but it does give you options.

So poorly optimised you need future technology to run it isn’t the future proofing strategy I’d go with, but ok…

That is not at all how it works or what they are saying. For the time, it was not poorly optimised. Even at low settings it was one of the best looking games released, and pushed a lot of modern tech we take for granted today in games.

Being designed to scale does not mean it’s badly optimised.

So, I’ve seen this phenomenon discussed before, though I don’t think it was from the Crysis guys. They’ve got a legit point, and I don’t think that this article does a very clear job of describing the problem.

Basically, the problem is this: as a developer, you want to make your game able to take advantage of computing advances over the next N years other than just running faster. Okay, that’s legit, right? You want people to be able to jack up the draw distance, use higher-res textures further out, whatever. You’re trying to make life good for the players. You know what the game can do on current hardware, but you don’t want to restrict players to just that, so you let the sliders enable those draw distances or shadow resolutions that current hardware can’t reasonably handle.

The problem is that the UI doesn’t typically indicate this in very helpful ways. What happens is that a lot of players who have just gotten themselves a fancy gaming machine, immediately upon getting a game, go to the settings and turn them all up to maximum so that they can take advantage of their new hardware. If the game doesn’t run smoothly at those settings, then they complain that the game is badly written. “I got a top of the line GeForce RTX 4090, and it still can’t run Game X at a reasonable framerate. Don’t the developers know how to do game development?”

To some extent, developers have tried to deal with this by using terms that sound unreasonable, like “Extreme” or “Insane” instead of “High” to help to hint to players that they shouldn’t be expecting to just go run at those settings on current hardware. I am not sure that they have succeeded.

I think that this is really a UI problem. That is, the idea should be to clearly communicate to the user that some settings are really intended for future computers. Maybe “Future computers”, or “Try this in the year 2028” or something. I suppose that games could just hide some settings and push an update down the line that unlocks them, though I think that that’s a little obnoxious and would rather not have that happen on games that I buy – and if a game company goes under, they might never get around to being unlocked. Maybe if games consistently had some kind of really reliable auto-profiling mechanism that could go run various “stress test” scenes with a variety of settings to find reasonable settings for given hardware, players wouldn’t head straight for all-maximum settings. That requires that pretty much all games do a good job of implementing that, or I expect that players won’t trust the feature to take advantage of their hardware. And if mods enter the picture, then it’s hard for developers to create a reliable stress-test scene to render, since they don’t know what mods will do.

Console games tend to solve the problem by just taking the controls out of the player’s hands. The developers decide where the quality controls are, since players have – mostly – one set of hardware, and then you don’t get to touch them. The issue is really on the PC, where the question is “should the player be permitted to push the levers past what current hardware can reasonably do?”

I feel like instead of the “settings have been optimized for your hardware” pop up that almost always sets them to something that doesn’t account for the trade-off between looks and framerate that a player wants, there should be a “these settings are designed for future hardware and may not work well today” pop up when a player sets everything to max.

I’ve noticed some games also don’t actually max things out when you select the highest preset.

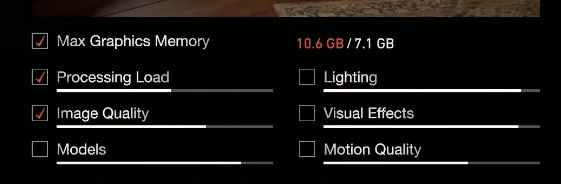

I also really like the settings menu of the RE engine games. It has indicators that aggregate how much “load” you’re putting on your system by turning each setting up or down, which lets you make more informed decisions on what settings to enable or disable. And it in fact will straight up tell you when you turn stuff too high, and warns you that things might not run well if you do.

Even if it can’t tell how much load you put on your system, because that is a complex interaction of various bottlenecks, it would at least be nice if they labelled which settings are likely to contribute to the CPU, GPU, RAM, VRAM,… bottlenecks.

Obviously.

There are a total of seven indicators; the only one that is labeled with numbers is the one that estimates how much VRAM will be used.

The rest are just unlabeled bars for “Processing Load” and visual effects categories. They don’t ACTUALLY have anything to do with how much your system is able to do, they just indicate what a setting does in relation to themselves. (checkmarks show which bars the currently selected setting affects)
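To make the distinction concrete, here is a rough sketch of how indicators like that could be computed. This is not how the RE Engine actually does it: the setting names and per-setting costs are made-up numbers. It only illustrates a VRAM estimate measured in real units sitting next to a "processing load" bar that is purely relative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SettingCost:
    vram_mb: int          # estimated VRAM this option adds (real units)
    processing_load: int  # relative, unitless cost; says nothing about your hardware


# Hypothetical cost table; a real game would ship its own numbers.
COSTS = {
    ("texture_quality", "medium"): SettingCost(1536, 1),
    ("texture_quality", "high"): SettingCost(3072, 1),
    ("shadow_quality", "low"): SettingCost(128, 1),
    ("shadow_quality", "high"): SettingCost(512, 3),
}


def summarize(selection: dict[str, str], vram_available_mb: int) -> tuple[int, int, bool]:
    """Return (estimated VRAM in MB, relative load, whether VRAM is over budget)."""
    costs = [COSTS[(name, value)] for name, value in selection.items()]
    vram = sum(c.vram_mb for c in costs)
    load = sum(c.processing_load for c in costs)
    return vram, load, vram > vram_available_mb


selection = {"texture_quality": "high", "shadow_quality": "high"}
print(summarize(selection, vram_available_mb=4096))  # (3584, 4, False)
```

Only the VRAM figure can be compared against the machine, which is why it is the one indicator that can honestly warn you; the load bar just tells you which settings are heavier than others.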

> doesn’t account for the trade-off between looks and framerate that a player wants,

Yeah, I thought about talking about that in my comment too. Like, maybe a good route would be to have something like a target minimum FPS slider or something. That – theoretically, if implemented well – could provide a way to do reasonable settings on a limited per-player basis without a lot of time investment by the player and without smacking into the “player expects maximum settings to work” issue.
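A minimal sketch of what the target minimum FPS idea might look like, assuming the game can benchmark each preset on some stress-test scene. The preset names, the `pick_preset` helper, and the FPS numbers are all hypothetical stand-ins:

```python
from typing import Callable, Sequence


def pick_preset(presets: Sequence[str],
                benchmark: Callable[[str], float],
                target_min_fps: float) -> str:
    """Return the highest preset whose benchmarked FPS meets the target.

    `presets` is ordered from lowest to highest quality. If nothing reaches
    the target, fall back to the lowest preset.
    """
    for preset in reversed(presets):
        if benchmark(preset) >= target_min_fps:
            return preset
    return presets[0]


# Hypothetical measurements standing in for a real stress-test run.
fake_results = {"low": 140.0, "medium": 95.0, "high": 61.0, "very_high": 34.0}
presets = ["low", "medium", "high", "very_high"]

print(pick_preset(presets, fake_results.__getitem__, 60))  # high
print(pick_preset(presets, fake_results.__getitem__, 30))  # very_high
```

The player only picks the number they care about, and the highest tiers simply stay unselected until hardware can reach that number.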

There are also a few people who want the ability to ram quality way up and do not care at all about frame rate for certain things like screenshots, which complicates matters.

I think that one of the big problems is that if any games out there do a “bad” job of choosing settings, which I have seen many games do, it kills player trust in the “auto calibration” feature. So the developers of Game A are impacted by what the developers of Game B do. And there’s no real way that they can solve that problem.

> Maybe if games consistently had some kind of really reliable auto-profiling mechanism that could go run various “stress test” scenes with a variety of settings to find reasonable settings for given hardware

…like most games from the early 2010s? A lot of them had a built-in benchmark that tested what your PC was capable of and then set things up for you.

Yeah, there are auto-calibration systems, but that’s why I’m emphasizing “reliably”. I’ve had some of them, for whatever reason, not ramp up quality settings on hardware a decade later even though it can run it smoothly, which is irritating. In fairness to the developers, they can’t test on future hardware, but I also don’t understand why that happens. Maybe there’s some degree of hard-coded assumptions that fall down for some reason down the line.

What they should probably do is cap the settings to what computers at release time can handle, then patch it later with “graphics enhancements” that do nothing but raise the cap. They could even do it more than once. It keeps users’ expectations reasonable at launch and then lets the developers look like they’re going the extra mile to support an older product.

As a bonus, they could store the settings in a text file somewhere that more sophisticated users can easily edit to get max settings on day one.

As much as I find distasteful the idea of shipping “mandatory” patches for single player games years down the line to fix issues that should’ve been caught during QA… this might be a decent use case for them

I don’t like the idea of it needing to be patched in.

At launch, advanced graphics settings could be disabled by default but unlockable (via a config.ini setting, console command, cheat code, whatever). Really the implementation isn’t what’s important, just that it is opt-in and the user knows that they are leaving the normal settings and entering something that may not work as expected.

Then if they are still supporting the game later the defaults can be changed with a patch but if the devs don’t have that opportunity the community can still document this behaviour on sites like www.pcgamingwiki.com.
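For what it’s worth, the opt-in unlock could be as small as this. A rough sketch only: the `[graphics]` section, the `allow_experimental` key, and the preset names are invented for illustration and don’t come from any real game’s config.

```python
import configparser

LAUNCH_PRESETS = ["low", "medium", "high"]
EXPERIMENTAL_PRESETS = ["very_high", "ultra"]  # hypothetical "future hardware" tiers


def available_presets(ini_text: str) -> list[str]:
    """Return the presets the settings menu should offer.

    Experimental presets only show up if the user explicitly opted in
    through the config file; a missing section or key means "locked".
    """
    parser = configparser.ConfigParser()
    parser.read_string(ini_text)
    unlocked = parser.getboolean("graphics", "allow_experimental", fallback=False)
    if unlocked:
        return LAUNCH_PRESETS + EXPERIMENTAL_PRESETS
    return list(LAUNCH_PRESETS)


print(available_presets(""))
print(available_presets("[graphics]\nallow_experimental = true\n"))
```

A later patch would only have to flip the default, and if no patch ever comes, the key is still there for the community to document.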

It was not poorly optimized; what made the top settings so heavy was things like draw distance and texture resolution.

Bro, long ago I saw a friend running Crysis on one of those old 7" mini laptops, everything on low with decent performance. Imagine that.

The drama now is compatibility.