
I took a web dev boot camp. If I were to use AI, I would use it as a tool and not the motherfucking builder! AI gets even basic math equations wrong!

Can’t expect predictive text to be able to do math. You can get it to use a programming language to do it, though. If you ask it in a programmatic way, it’ll generate and run its own code. That’s the only way I got it to count the number of r’s in strawrbrerry.
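A minimal sketch of the kind of code that approach produces, written here in Java as an assumption (the comment doesn’t say which language the model used). Counting characters deterministically sidesteps the fact that a language model works on tokens rather than individual letters. The class name `LetterCount` is hypothetical.

```java
// Hypothetical helper: count a letter deterministically instead of
// asking a language model to "eyeball" it.
public class LetterCount {
    // Returns how many times `letter` appears in `word`.
    static long count(String word, char letter) {
        return word.chars()               // IntStream of UTF-16 code units
                   .filter(c -> c == letter)
                   .count();
    }

    public static void main(String[] args) {
        System.out.println(count("strawberry", 'r'));   // prints 3
        System.out.println(count("strawrbrerry", 'r')); // prints 5
    }
}
```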

I love strawrbrerry mllilkshakes.

At the liberry!

Way better than wrasprbrerry

I was listening to my go-to podcast during morning walkies with my dog. They brought up an example where some couple was using ShatGPT as a couple’s therapist, and what a great idea that was. They also talked about how one of the podcasters has more of a friend-like relationship with “their” GPT.

I usually find this podcast quite entertaining, but this just got me depressed.

ChatGPT is by the same company that stole Scarlett Johansson’s voice. It’s in the same vein as the companies that think it’s perfectly okay to pirate 81 terabytes of books, despite definitely being able to afford paying the authors. I don’t see a reality where it’s ethical or indicative of good judgement to trust a product from any of these companies with information.

I agree with you, but I do wish a lot of conservatives used ChatGPT or other AIs more. At the very least, it will tell them that all the batshit stuff they believe is wrong and clear up a lot of the blatant misinformation. With time, will more batshit AIs be released to reinforce their current ideas? Yeah. But ChatGPT is trained on enough (granted, stolen) data that it isn’t prone to retelling conspiracy theories. Sure, it will lie to you and make shit up when you get into niche technical subjects, or ask it to do basic counting, but it certainly wouldn’t say Ukraine started the war.

It will even agree that AIs shouldn’t be controlled by oligarchic tech monopolies and should instead be distributed freely and fairly for the public good, but that the international system of nation states competing against each other militarily and economically prevents this. But maybe it will agree with the opposite of that too; I didn’t try asking.

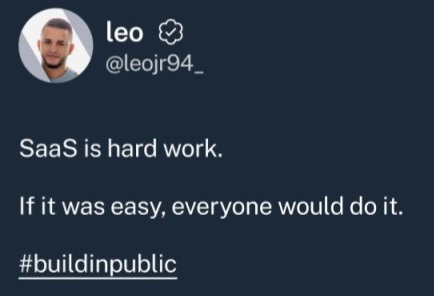

Ok, but what did they try to do as a SaaS?

Money.

AI can be incredibly useful, but you still need someone with the expertise to verify its output.

Holy crap, it’s real!

Devil’s advocate, not my actual opinion: if you can make a Thing that people will pay to use, easily and without domain-specific knowledge, why would you not? It may hit issues at some point, but by then you’ve already got ARR and might be able to sell it.

If you started from first principles and made a car or, in this case, told a flailing intelligence precursor to make a car, how long would it take for it to create ABS? Seatbelts? Airbags? Reinforced fuel tanks? Firewalls? Collision avoidance? OBD ports? Handsfree kits? Side impact bars? Cupholders? Those are things created as a result of problems that Karl Benz couldn’t have conceived of, let alone solved.

Experts don’t just have skills, they have experience. The more esoteric the challenge, the more important that experience is. Without that experience you’ll very quickly find your product fails due to long-solved problems, leaving you, and your customers, exposed to dangers that a reasonable person would conclude shouldn’t exist.

Yeh, arguably, and to a limited extent, the problems he’s having now aren’t the result of the decision to use AI to make his product so much as the decision to tell people about it, and people then deliberately attempting to sabotage it. I’m careful to qualify that, though, because the self-evident flaw in his plan, even if it only surfaced in a rather extreme scenario, is that he lacks the domain-specific knowledge to actually make his product work as soon as anything becomes more complicated than just collecting the money. Evidently there was more to this venture than just building the software; more was necessary for it to be a viable service.

It’s much like if you considered yourself the ideas man, paid a programmer to engineer the product for you, then fired them straight after without hiring anyone to maintain it, keep the infrastructure going, or provide support for your clients, and then claimed you ‘built’ the product. You’d be in a similar scenario not long after your first paying customer found out the hard way that you don’t actually know anything about the service you willingly took their money for. He’s discovering he can’t actually provide the service part of the Software as a Service he’s selling.

Bonus points if the attackers use ai to script their attacks, too. We can fully automate the SaaS cycle!

That is the real dead Internet theory: everything from production to malicious actors to end users is AI scripts wasting electricity and hardware resources for the benefit of no human.

Seems like a fitting end to the internet, imo. Or the recipe for the Singularity.

Not only internet. Soon everybody will use AI for everything. Lawyers will use AI in court on both sides. AI will fight against AI.

I was at a coffee shop the other day and 2 lawyers were discussing how they were doing stuff with AI that they didn’t know anything about and then just sending it to their clients.

That shit scared the hell out of me.

And everything will just keep getting worse, with more and more common folk swallowing the hype and brainwashing and using these highly inaccurate tools at all levels of our society every day to make decisions about things they have no idea about.

I’m aware of an effort to get LLM AI to summarize medical reports for doctors.

Very disturbing.

The people driving it where I work tend to be the people who know the least about how computers work.

It was a time of desolation, chaos, and uncertainty. Brother pitted against brother. Babies having babies.

Then one day, from the right side of the screen, came a man. A man with a plastic rectangle.

lol thanks

This is the opposite of the singularity

It is a singularity, in the sense that it is an infinitely escalating level of suck.

Suckularity?

I never said it was going to be any good!

I am not a bot trust me.

The Internet will continue to function just fine, just as it has for 50 years. It’s the World Wide Web that is on fire. Pretty much has been since a bunch of people who don’t understand what Web 2.0 means decided they were going to start doing “Web 3.0” stuff.

> The Internet will continue to function just fine, just as it has for 50 years.

Sounds of intercontinental data cables being sliced

That would only happen if we give our AI assistants the power to buy things on our behalf and manage our budgets. They will decide among themselves who needs what, and the money will flow into billionaires’ pockets without any human intervention. If humans go far enough, not even rich people would be rich, as trust funds and stock portfolios would operate under AI. If the AI achieves singularity with that level of control, we are all basically in spectator mode.

Someone really should’ve replied with:

My attack was built with Cursor

AI is yet another technology that enables morons to think they can cut out the middleman of programming staff, only to very quickly realise that we’re more than just monkeys with typewriters.

Well I think I am a monkey with a typewriter…

Yeah! I have two typewriters!

I was going to post a note about typewriters, allegedly from Tom Hanks, which I saw years and years ago; but I can’t find it.

Turns out there’s a lot of Tom Hanks typewriter content out there.

He randomly donated his to my high school. It was supposed to go to the valedictorian, but the school kept it lmao. It was so funny because they showed everyone a video where he says not to keep the typewriter and that it’s for a student.

That’s … Pretty depressing.

I have a mobile touchscreen typewriter, but it isn’t very effective at writing code.

We’re monkeys with COMPUTERS!!!

To be fair… if this guy had hired a dev team, the same thing could have happened.

But then they’d have a dev team who wrote the code and therefore knows how it works.

In this case, the hackers might understand the code better than the “author” because they’ve been working in it longer.

True, any software can be vulnerable to attack.

But the difference is that a technical team of software developers can mitigate an attack and patch it. This guy has no tech support other than the AI that sold him the faulty code, which likely assumed he had done the proper hardening of his environment (which he had not).

Openly admitting you programmed anything with AI alone is admitting you haven’t taken the basic steps to protect yourself or your customers.

Hilarious and true.

Last week, some up-and-coming coder was showing me their tons and tons of sites made with the help of ChatGPT. They all look great on the front end. So I tried to use one. Error. Tried to use another. Error. I mentioned the errors and they brushed it off. I am 99% sure they do not have the coding experience to fix the errors. I politely disconnected from them at that point.

What’s worse is when a noncoder asks me, a coder, to look over and fix their AI-generated code. My response is “no, but if you set aside an hour I will teach you how HTML works so you can fix it yourself.” Not one of these kids asking AI to code things has ever accepted, which, to me, means they aren’t worth my time. Don’t let them use you like that. You aren’t another tool they can combine with AI to generate things correctly without having to learn things themselves.

Coder? You haven’t been to university, right?

100% this. I’ve gotten to where, when people try to rope me into their new million-dollar app idea, I tell them that there are fantastic resources online to teach themselves everything they need. I offer to help them find those resources and even help when they get stuck. I’ve probably done this dozens of times by now. No bites yet. All those millions wasted…

I’ve been a professional full-stack dev for 15 years and dabbled for years before that. I can absolutely code and know what I’m doing (and have used Cursor, and just deleted most of what it made for me when I let it run).

But my frontends have never looked better.

Ha, you fools still pay for doors and locks? My house is now 100% done with fake locks and doors, they are so much lighter and easier to install.

Wait! Why am I always getting robbed lately? It cannot be my fake locks and doors! It has to be weirdos online following what I do.

The difference is locks on doors truly are just security theatre in most cases.

Unless you’re the BiLock and it takes the LockPickingLawyer 3 minutes to pick it open.

To be fair, it’s both.

“If you don’t have organic intelligence at home, store-bought is fine.” - leo (probably)

Is the implication that he made a super insecure program and left the token for his AI thing in the code as well? Or is he actually being hacked because others are coping?

Nobody knows. Literally nobody, including him, because he doesn’t understand the code!

Nah, the people doing the pro bono pen testing know. At least for the frontend side and maybe some of the backend.

But the things doing the testing could be bots instead of human actors, so it may very well be that no human does in fact know.

Thought so too, but nah. Unless that bot is very intelligent and can read and humorously respond to social media posts by setting up its fake domain.

Good point! Thanks for pointing that out.

That’s fucking hilarious then.

rofl!

AI writes shitty code that’s full of security holes, and Leo here has probably taken zero steps to further secure his code. He broadcasts his AI-written software and it’s open season for hackers.

Not just that; he literally advertised himself as not being technical. That seems like just asking for open season.

Potentially both, but you don’t really have to ask to be hacked. Just put something into the public internet and automated scanning tools will start checking your service for popular vulnerabilities.

He told them which AI he used to make the entire codebase. I’d bet it’s way easier to RE the “make a full SaaS suite” prompt than it is to RE the code itself once it’s compiled.

Someone probably poked around with the AI until they found a way to abuse his SaaS

Doesn’t really matter. The important bit is he has no idea either. (It’s likely the former and he’s blaming the weirdos trying to get in)

Hard to know the specifics of his insecure code but we know with certainty that it was highly insecure.

The fact that “AI” hallucinates so extensively and gratuitously just means that the only way it can benefit software development is as a gaggle of coked-up juniors making a senior incapable of working on their own stuff because they’re constantly in janitorial mode.

So no change to how it was before then

Different shit, same smell

Plenty of good programmers use AI extensively while working. Me included.

Mostly as an advanced autocomplete, template builder, or documentation parser.

You obviously need to be good at it so you can see at a glance whether the written code is good or bullshit. But if you are good, it can really speed things up without any risk, as you will only copy code that you know is good and discard the bullshit.

Obviously you cannot develop without programming knowledge, but with programming knowledge it’s just another tool.

I maintain a strong conviction that if a good programmer uses an LLM in their work, they just add more work for themselves, and if a less-than-good one does it, they add new, exciting, and difficult-to-find bugs, while maintaining false confidence in their code and themselves.

I have seen so much code that looks good at first, second, and third glance, but is actually full of shit, and I was able to find that shit by doing external validation, like talking to the dev or brainstorming ways to test it, the things you categorically cannot do with an unreliable random word generator.

That’s why you use unit tests and integration tests.

I can write bad code myself or copy bad code from who-knows where. It’s not something introduced by LLM.

Remember the famous Linus letter? “You code this function without understanding it and thus your code is shit”.

As I said, just a tool like many other before it.

I use it as a regular practice while coding. And truthfully, reading my code afterwards, I could not distinguish which parts came from the LLM and which parts I wrote fully by myself, and I don’t think anyone else would be able to tell the difference either.

It would probably be a nice idea to do some kind of Turing test: put together a blind test to distinguish the AI-written parts of some code, and see how precisely people can tell them apart.

I may come back with a particular piece of code that I specifically remember being output from DeepSeek, and within the whole context it would probably be indistinguishable.

Also, not all LLM usage is copying from it. Many times you copy code into it and ask the thing to explain it to you, or ask general questions. For instance, to look for specific functions in C#’s extensive libraries.

> That’s why you use unit tests and integration tests.

Good start, but not even close to being enough. What if code introduces UB? Unless you specifically look for that, and nobody does, neither unit nor on-target tests will find it. What if it’s drastically ineffective? What if there are weird and unusual corner cases?
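A small illustration of the corner-case point, using a textbook example rather than anything from this thread: a midpoint function that passes the obvious unit test but breaks on inputs near `Integer.MAX_VALUE`. In Java, `int` overflow silently wraps around; in C or C++ the same signed overflow would be undefined behavior. The class and method names are hypothetical.

```java
public class Midpoint {
    // Naive version: a + b can overflow for large ints and wrap to a negative number.
    static int naiveMidpoint(int a, int b) {
        return (a + b) / 2;
    }

    // Safer version, assuming 0 <= a <= b: the difference b - a cannot overflow.
    static int safeMidpoint(int a, int b) {
        return a + (b - a) / 2;
    }

    public static void main(String[] args) {
        // The "obvious" unit test passes for both versions.
        System.out.println(naiveMidpoint(2, 8) + " " + safeMidpoint(2, 8)); // prints "5 5"

        // Near Integer.MAX_VALUE the naive sum wraps around.
        int a = Integer.MAX_VALUE - 1;
        int b = Integer.MAX_VALUE;
        System.out.println(naiveMidpoint(a, b)); // prints -1
        System.out.println(safeMidpoint(a, b));  // prints 2147483646
    }
}
```

A test suite only catches the cases someone thought to write, whether the code came from a person or an LLM.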

Now you spend more time looking for all of that and designing tests you wouldn’t have needed if you had followed proper practices from the beginning.

> It would probably be a nice idea to do some kind of Turing test: put together a blind test to distinguish the AI-written parts of some code, and see how precisely people can tell them apart.

But that’s worse! You do realise how that’s worse, right? You lose all the external ways to validate the code, now you have to treat all the code as malicious.

> For instance, to look for specific functions in C#’s extensive libraries.

And spend twice as much time trying to understand why you can’t find a function that your LLM just invented with the absolute certainty of a fancy autocomplete. And if that’s an easy task for you, well, then why do you need this middle layer of randomness? I can’t think of a reason not to search the documentation instead of introducing this weird game of “will it lie to me”.

Any human written code can and will introduce UB.

Also, I don’t see how it would take you more than 5 seconds to verify that a given function does not exist. It has happened to me, the LLM suggesting a nonexistent function. And searching by function name in the docs is instantaneous.

If you don’t want to use it, don’t. I have been doing so for more than a year and I haven’t run into any of those catastrophic issues. It’s just a tool like many others I use for coding. Not even the most important one; for instance, I think LSP was a greater improvement to my coding efficiency.

It’s like using Neovim. Some people could send me a list of all the things that can go wrong when making a Frankenstein IDE out of an ancient text editor. But if it works for me, it works for me.

> Any human written code can and will introduce UB.

And there is an enormous number of safeguards, tricks, practices, and tools we have come up with to combat it. All of those are categorically unavailable to an autocomplete tool, or to someone who exclusively uses an autocomplete tool to code.

> Also, I don’t see how it would take you more than 5 seconds to verify that a given function does not exist. It has happened to me, the LLM suggesting a nonexistent function. And searching by function name in the docs is instantaneous.

Which means you can work with documentation. Which means you really, really don’t need the middle layer, like, at all.

> I haven’t run into any of those catastrophic issues.

Glad you didn’t, but also, I’ve reviewed enough generated code to know that a lot of the time people think they’re OK, when in reality they just introduced an esoteric memory leak in a critical section. People who didn’t do it by themselves, but did it because LLM told them to.

> If you don’t want to use it, don’t.

It’s not about me. It’s about other people introducing shit into our collective lives, making it worse.

You can actually apply those tools and procedures to automatically generated code, exactly the same as in any other piece of code. I don’t see the impediment here…

You must be able to understand that searching by name is not the same as searching by definition, nothing more to add here…

Why would you care whether the shit code submitted to you is bad because it was generated with AI, because it was copied from SO, or because it’s brand-new shit code written by someone? If it’s bad, it’s bad. And bad code has existed forever. Once again, I don’t see the impact of AI here. If someone is unable to find that a particular generated piece of code has issues, I don’t see how they are magically going to be able to see the issue in copy-pasted code or in code written by themselves. If they don’t notice, they don’t, no matter the source.

I will go back to the Turing test. If you don’t even know if the bad code was generated, copied or just written by hand, how are you even able to tell that AI is the issue?

There is an exception to this, I think. I don’t make AI write much, but it is convenient to give it a simple Java class, say “write a toString”, and have it spit out something usable.
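For context, this is roughly the kind of mechanical boilerplate being described: a `toString` override that lists every field, which is easy to check at a glance. The `Point` class and its fields are hypothetical, not from the thread.

```java
// Hypothetical simple class with a conventional toString override.
public class Point {
    private final int x;
    private final int y;
    private final String label;

    public Point(int x, int y, String label) {
        this.x = x;
        this.y = y;
        this.label = label;
    }

    // Overrides Object.toString() to include every field by name.
    @Override
    public String toString() {
        return "Point{x=" + x + ", y=" + y + ", label='" + label + "'}";
    }

    public static void main(String[] args) {
        System.out.println(new Point(1, 2, "a")); // prints Point{x=1, y=2, label='a'}
    }
}
```

Since Java 16, declaring the type as a `record` gets a generated `toString` without writing one at all.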

It’ll just keep getting better at it over time, though. The current AI is way better than it was 5 years ago, and in 5 years it’ll be way better than now.

Past performance does not guarantee future results

My hobby: extrapolating.

That’s certainly one theory, but as we are largely out of training data there’s not much new material to feed in for refinement. Using AI output to train future AI is just going to amplify the existing problems.

Just generate the training material, duh.

DeepSeek

This is certainly the pattern that is actively emerging.

I mean, the proof is sitting there wearing your clothes. General intelligence exists all around us. If it can exist naturally, we can eventually do it through technology. Maybe there needs to be more breakthroughs before it happens.

Everything is possible in theory. That doesn’t mean everything has happened or is just about to happen.

“More breakthroughs,” spoken like we get these once a day, like milk delivery.

That’s your interpretation.

That’s reality. Unless you’re too deluded to think it’s magic.

No, I meant your interpretation of what I said.

To get better it would need better training data. However there are always more junior devs creating bad training data, than senior devs who create slightly better training data.

And now LLMs being trained on data generated by LLMs. No possible way that could go wrong.

Yes, yes, there are weird people out there. That’s the whole point of having humans who understand the code and are able to correct it.

ChatGPT, make this code secure against weird people trying to crash and exploit it

beep boop

fixed 3 bugs

added 2 known vulnerabilities

added 3 race conditions

boop beep

Roger Roger