Here’s a list of reasons this is not advisable:

[object Object]

I did this to myself last week on a new project. Spent an hour trying to track down at what point in my code the data from the database got converted to [Object object]. Finally decided to check the db itself and realized that the [Object object] was coming from inside the house the whole time and the error in my code was when the entry was being written smh

Sanity checks

Always, always check if your assumptions are true

- am i even running the function?

- is this value what i think it is?

- what is responsible for loading this data, and does it work as expected?

- am i pointed at the right database?

- is my configuration set and loaded in correctly?

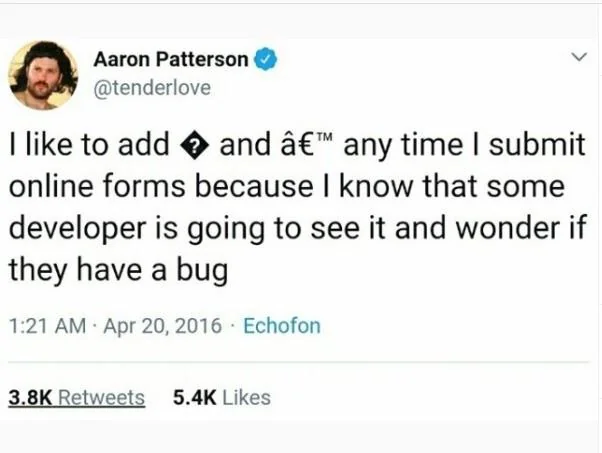

I had no idea this was a thing.

Thanks. I’ll use it. I’ll use it a lot.

Me, the dev: “Nobody reported this as a problem… Ok, don’t care, moving on.” Also, if I can’t reproduce it, I can’t fix it, no point in wasting time more than that.

It doesn’t make it to our devs. I just null the field and cry a little inside at how terrible our platform can be

You can also set your profile picture to ‘missing/broken image’ icon.

Why would anyone do that?

JFC

I went to grab Bobby Tables and found this new variation

Isn’t it actually illegal to call your child “null” or “nil” in some places

https://xkcd.com/327 the original is even better :)

Why hope they sanitize their inputs?

Why are they trusting an AI that cant even do math to give notes to tests?

The problem with LLM AIs Ous that you can’t sanitize the inputs safely. There is no difference between the program (initial prompt from the developer) and the data (your form input)

You can make it more resistant to overwriting instructions at least

You can try, but you can’t make it correct. My ideal is to write code once that is bug-free. That’s very difficult, but not fundamentally impossible. Especially in small well-scrutinized areas that are critical for security it is possible with enough care and effort to write code with no security bugs. With LLM AI tools that’s not even theoretically possible, let alone practical. You will just need to be forever updating your prompt to mitigate the free latest most fashionable prompt injections.

Because it’s the most efficient. With students handing in AI theses, it’s only sensible to have teachers use AI to grade them. No we only need to have teachers use AI to create exam questions and education becomes a fully automated process. Then everyone can go home early.

Oh Little Bobby Tables!

Removed by mod

You have…. A lot of confidence in websites.

That “most likely no one is bothered” part is correct, though.

Oh yeah, probably. Unless shit is down probably nobody even noticed

The web is a total shit show, but if you can provide a single example of an established website which will cause a dev any real grief because you enter

[object Object]into a form I’ll send you $100.00.If you can send me proof of your sense of humor, I’ll send you a big fat nothing.

Well, now I have something fun to do over the weekend.

Please keep us posted!