Speedy thing goes in, speedy thing comes out.

Yay, I can’t wait for Comcast to implement this so you can blow through your 1.2 TB data cap in a second and they can charge you $10 for every 50 GB you go over.

It still shocks me that they cap usage. There is no reason at all to do this. Why are they doing it?

Their network is underprovisioned. They sell 300 Mbps connections to all 8 tenants of an apartment building, but only have a 1 Gb connection to it. To make sure that link isn’t always saturated, they impose a data cap so you don’t want to use the bandwidth you’re paying for. On top of that, everyone’s connection is crippled during hours like the evening when everyone is using it. As a bonus, they can sell you cable TV on top, so you don’t hit your data cap watching shows.

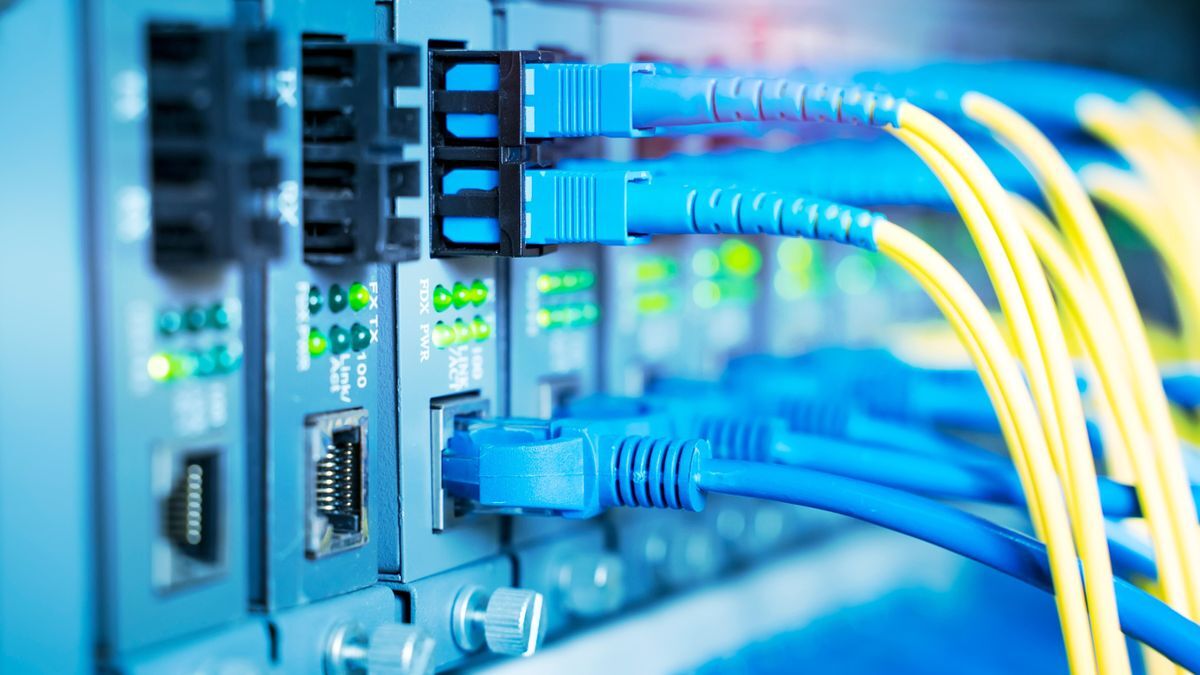

I build ISP and private data networks for a living.

A contention ratio for residential circuits of 3 to 1 isn’t bad at all. You’d have to get pretty unlucky with your neighbors being raging pirates to be able to tell that was contended at all. Any data cap should scare the worst of the pirates away, so you probably won’t be in that situation.

If you can feel the circuit getting worse at different times of the day, then the effective contention (taking into account further upstream) is probably more like 30 to 1 than 3 to 1.

Wouldn’t two Steam users downloading a game be enough to notice?

QoS is a thing, so it depends.

Yeah, Steam is faster than most Linux torrents in my experience.

Steam can do pretty well filling a tail circuit, probably better on average. But a torrent of a large file with a ton of peers when your client has the port forward back into the client absolutely puts more pressure on a tail circuit. More flows makes the shaping work harder.

Sometimes we see an outlier in our reporting and it’s not obvious if a customer has a torrent or a DDoS directed at them for the first few minutes.

Depends. If Steam is pulling a full 300 Mbps on both connections, there would still be 40% of the bandwidth available.
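A quick way to check the numbers in this sub-thread, as a rough sketch: it assumes the 8 tenants, 300 Mbps plans and 1 Gb uplink from the comment above, treats 1 Gb as 1000 Mbps, and ignores shaping, QoS and anything further upstream. The function names are made up for illustration.

```python
def contention_ratio(subscribers: int, plan_mbps: float, uplink_mbps: float) -> float:
    """Total bandwidth sold to subscribers divided by the shared uplink capacity."""
    return subscribers * plan_mbps / uplink_mbps


def headroom_fraction(active: int, plan_mbps: float, uplink_mbps: float) -> float:
    """Share of the uplink left over when `active` users each pull their full plan rate."""
    used = min(active * plan_mbps, uplink_mbps)  # the uplink cannot carry more than its capacity
    return 1 - used / uplink_mbps


# Figures taken from the thread: 8 tenants, 300 Mbps each, 1 Gb (assumed 1000 Mbps) uplink.
ratio = contention_ratio(8, 300, 1000)
free = headroom_fraction(2, 300, 1000)

print(f"contention ratio: {ratio:.1f}:1")                    # 2.4:1
print(f"uplink left with two saturated users: {free:.0%}")   # 40%
```

So the building in the example is actually a bit better than 3 to 1, and two full-rate downloads do leave 40% of the uplink for everyone else.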

No, if two 300 megabit tails are shaped correctly, a third user shouldn’t notice that the 1G backhaul has got a bunch of use going on.

If you do, there’s something wrong or you aren’t really getting the 1G for some reason. Not generally a concern in a carrier platform.

I cringe every time I hear people choosing LTE / 5G for home connection over DSL / fiber. Here ISPs can’t legally have a minimum bandwidth less than 70% of the nominal bandwidth for fiber / copper described in the contract.

But they can sell as many mobile subscriptions as they please and they sure like selling them.

Are you kidding? Lol. It’s money. The answer is always money.

Cue nip flaps and rubbing.

The only reason it’s ever been: money.

Because businesses exist to make money, so they have to balance charging as much money to the customers as they can without losing them to a competitive company. That used to mean that they had to treat customers with respect and make them want to stay with the business, but now they’ve realized that they can just pay lawmakers to let them have a monopoly, allowing them to charge as much money to the customers as they want without worrying that they’ll leave, since there’s either no competition for them to leave to, or the competition is using the same strategy, so leaving wouldn’t fix anything anyway. Free market, baby!

ISP shittiness aside, ISPs do actually pay for Internet backbone access by the byte. Usually there are peering agreements saying “you take 1 TB of traffic from us, and we’ll take 1 TB of traffic from you”, whether that traffic is destined for one of their customers (someone on Comcast scrolling Instagram), or they’re just providing the link to the next major node (Comcast being the link between AT&T’s segment of the US backbone and Big Mike’s Internet out in podunk Nebraska).

And normally that works pretty well, until power users start moving huge amounts of data and unbalancing the traffic.

That depends on where those bytes go, though. There is also the concept of “settlement-free peering” and content caches that are located in the ISP network.

For example we have a Google Global Cache instance in our network, so most Google traffic is served from there and we don’t pay anyone per byte, we only pay for the power and space. Same for Akamai. Then for Microsoft, Cloudflare and Facebook we have peering links, where we can send and receive data related to their services freely, without balance requirements.

Of course this is only possible for larger networks (peering with everyone is not feasible) and we still pay for the other traffic, but it takes care of a lot of the volume.

It’s illegal for them to cap it in some jurisdictions (e.g. Massachusetts, where I live).

/thread

This’ll bring their fax machines up to the current century for sure.

For those wanting a bit of a summary.

transmitting up to 22.9 petabits per second (Pb/s) through a single optic cable composed of multiple fibers

The breakthrough isn’t things moving faster but more fibers per cable. So you can transfer more bits in parallel.

That’s still a good breakthrough because, for lots of reasons, packing more fibers in isn’t as straightforward as one would think.

> The breakthrough isn’t things moving faster but more fibers per cable.

No, it’s actually more cores per fiber, and using those very well for space division multiplexing on top of the normal wavelength division multiplexing. They are talking about 22.9 Pb/s per fiber, not per cable; the Tom’s Hardware article is just wrong.

Cables can already contain hundreds of fibers (576, for example), or thousands if you use stacks of ribbon cables in the subunits (3456, for example).

Here’s a source that backs up what you’re talking about and proves that the Tom’s Hardware article is wrong: https://www.nict.go.jp/en/press/2023/11/30-1.html

Thank you!

Yes, thanks to all for contributing and assisting. I am grateful for the clarification and leg work. Folks say Reddit had this, and Lemmy has less, so every time I see it, I make sure to appreciate it.

Am I to understand that the cable used has multiple cores within a single cladding? Interesting approach…

Now we get to classify them as singlemode, multimode, and multiestmode.

Yeah. Check this comment

This is really interesting. Thank you for providing good insight!

What’s the use of high speed when videos are pixelated 😅😅😅😅😅

haha the joke is porn haha guys get it i have a porn addiction look at me ahahhaahha

How did you extract that from the post lmao

I was wondering that, too. Was it the emojis or projection? Throwing this one in my “small mysteries of the universe” bin

Some people are just as if not more obsessed with “porn addiction” than so called “coomers” are obsessed with porn.

Wall Street just put in a bulk order.

The financial types are generally more interested in hollow core fiber, to get their latencies even further down for high frequency trading. Because light travels at almost c in hollow core but only at 2/3 c in fiber core.

So is hollow core fiber a vacuum or is it just hollow? I’ve never heard of that tech.

Think glass pipe versus glass cylinder or rod. Though, I don’t think the hollow core is fully empty, it has some structure to it.

Source: https://www.rp-photonics.com/hollow_core_fibers.html

I work with fibre and have never stopped to think if it is hollow or not. Thanks for this

How do you weld that?

Absolutely no idea.

I think that both hollow core fibers filled with air and others with just vacuum exist, but it’s a bit far from my operational reality, so I’m not that sure. I just read in industry news that euNetworks has deployed 45 km of Lumenisity hollow core fiber and that Microsoft bought Lumenisity.

> So is hollow core fiber a vacuum or is it just hollow? I’ve never heard of that tech.

The properties of light in air are pretty similar to those in a vacuum. Air has a lot more empty space than a solid or liquid.

Actually Wall Street intentionally increases their latency

Some guy figured out that trades were getting sniped due to some locations having more latency than others relative to the trade location, so he developed a solution that intentionally lags the connection on different wires so that everyone gets their trade updates simultaneously and can’t snipe each other to up the prices on other people’s buys.

Is this a Portal reference? I remember hearing it from GLaDOS!

Indeed it is

Yay for Portal and Portal 2!!!

I heard the voice line as I was reading it. Excellent and memorable game series.

I heard GLaDOS as I was reading that too. I loved both games. Took me a long time to beat the single player of Portal 2, but damn it was worth it.

It was really worth it to complete the single player and then replay it with the developer commentary; the first dev recording is Gabe Newell.

that’s a lot of floppies

22Pb/s FTTH when?

You might have it in 20 years. The question is… When will we get servers that support that speed? XD

in a few hundred years if ever. I doubt that the average person will ever need more than 1 Tb/s

to give you crazy numbers:

1 second of a raw 32k video with 12bit color at 60fps is around 50 GB/s
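The 50 GB/s figure can be sanity-checked with a few lines, as a back-of-envelope sketch. The assumptions here are mine, not the commenter's: "32k" is taken as 30720 × 17280 pixels, and "12bit" is read either as 12 bits per pixel (one sample per photosite, as in a raw Bayer sensor dump) or as 12 bits per channel for full RGB.

```python
def raw_video_rate_gb_per_s(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Uncompressed video data rate in gigabytes (10^9 bytes) per second."""
    bytes_per_frame = width * height * bits_per_pixel / 8
    return bytes_per_frame * fps / 1e9


# Assumed 32K frame size (16:9); not stated in the thread.
WIDTH, HEIGHT, FPS = 30720, 17280, 60

# Reading 1: 12 bits per pixel (single-sample raw sensor data).
bayer = raw_video_rate_gb_per_s(WIDTH, HEIGHT, 12, FPS)

# Reading 2: 12 bits per channel, three channels, so 36 bits per pixel.
rgb = raw_video_rate_gb_per_s(WIDTH, HEIGHT, 36, FPS)

print(f"12 bits per pixel:   {bayer:.1f} GB/s")
print(f"12 bits per channel: {rgb:.1f} GB/s")
```

Under the first reading you land just under 48 GB/s, which matches "around 50 GB/s". Under the second it is roughly three times that, about 143 GB/s.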

Aside from me obviously joking about home 22Pb/s. I’ll tell you a story.

In the early 90s, I was gifted a modem. I used it to connect to BBS systems. It had two outgoing call speeds: 300 bit/s sync and 1200/75 bps. Since I’d never connected my computer to the outside world, the whole thing seemed amazing. Many BBSes had modems that couldn’t cope with 1200/75, as it was an odd speed, so I had to swap to 300 for those. But it was still an amazing time, and since file sizes were pretty small, it really wasn’t a huge problem.

In the mid 1990s, I had a 28.8k modem (and later 56k) to connect to the internet (and, by the way, was paying for the phone calls too). The always-on 64k leased line (£1k per month) seemed like a dream. The 10Mbit coax ethernet at the office seemed like light speed. Hell, it was faster than the local hard disk (caching improved that, mind you). I remember in the office where we had an ISDN modem setup, chaining them together to get 128Kbit felt incredibly fast. Later we got a 256Kbit leased line in the office, and it was amazing in terms of speed. I actually ran what was effectively a mini ISP that some of us connected to, to get free internet. It had 4 USR Courier modems.

Then, in 1999 I was lucky enough to be enrolled on a trial. A trial for 2Mbit ADSL. It was amazing, 2Mbit down, 256Kbit up. This was groundbreaking. Web pages loaded instantly. Everything was so much faster. LANs in the office and at home were up to 100Mbit, and that seemed pretty damn fast. We were sure we’d not need more than that.

Then, 8Mbit DSL arrived. Again, amazing leap forward. Gigabit LAN became the norm, and again, who would have thought it would be too slow for anything? After all, we were all using hard disks, and they really weren’t that fast after all. ADSL2+ arrived with speeds up to 24Mbit, and those of us unable to get the higher sync rates started to suffer the internet being just a bit too slow.

Then we got VDSL and faster cable internet. 80Mbit, 100Mbit, 150Mbit. These seemed overkill for many. But pretty soon we were downloading 4k video and 150GB games onto 5GB/s+ SSD drives. This started to feel slow to some.

Now we’re at a place where FTTH 1Gbit is becoming quite common. Many ISPs here in the UK are offering packages with speeds between 2 and 5Gbit/s too. The tech they use is apparently good for up to 50Gbit/s.

Now, with this history of speed increases leading to demand increases, why do you think it will stop at 1Tb/s? Maybe we cannot currently imagine why we’d need such speeds. But someone will find a way to fill such connections. Don’t limit yourself to just expanding what we do now.

Maybe you’re right, but I honestly would never say never when it comes to computing.

I can see many future domestic uses that might need multiple terabytes per second of bandwidth, especially everything around AI and machine learning. Imagine your device having instant access to multiple terabytes of a shared global library of machine learning data. It’s a bit dystopian, but that’s another topic.

Another thing you can imagine is being able to play games directly “from the cloud” but processing them on your hardware. Imagine you have an xbox, but don’t have any games stored on it. Everything you play is fetched in real time from the server but still processed and computed on your hardware. So you get crisp clear image and visuals but games can now be so realistic that they themselves need 1-2TB of storage to store the whole game. So, with enough internet speed, we wouldn’t need to worry about storage space anymore and how big a game is. You just buy the game and click play and play instantly, but still computing the game locally. Or, if you still need to download and store the game, having a game be a 700GB download might be as “casual” as a 5GB game is nowadays (remember when we got shocked that games started to be more than 1-2GB? Now, 80GB is common). And you will be able to download the whole game in a few minutes. Game worlds can be huge and still be downloaded in minutes. Storage is also evolving day by day so 10TB of SSD might actually be kinda cheap in 10 years.

You can also imagine games so complex that they communicate huge amounts of data between players, so we could even share the exact angle at which every leaf on a tree is moving in the wind, and all players on that server would see exactly the same movement of the leaves. The bandwidth is so high that you can actually start to share even the most insignificant details across a multiplayer world.

You can also imagine Netflix being able to stream 8K 60fps HDR to your phone as easily as nowadays it streams 720p.

Virtual Reality. It needs insane amounts of bandwidth for anything. If you want to share a truly multiplayer VR experience you need all the bandwidth you can get. VR cloud gaming could be a thing since they could now transmit 2x 4K 60fps streams with minimal latency.

These are all casual domestic uses for unlimited bandwidth applications. Don’t worry, we will always need more and more bandwidth, because with more bandwidth always comes new tech, and with new tech the need for more bandwidth also increases. Infinite cycle.

Now imagine all of that plus more direct peer to peer connections to get the lowest latency!

that’s a good point! I guess I’ve never personally experienced those extremes you have (I was born in the late 90s), I think my first internet connection was ISDN or maybe the early days of ADSL, because I remember the day my family got 7Mbit and we were mesmerized by how fast it was

640KB of memory is enough for anybody.

This is just what I need for my goal of backing up both the Internet Archive and Wikipedia on local storage every day.

If you really are, then you should be doing daily incrementals and fulls every couple of weeks. I can’t imagine the incrementals for those are more than a few dozen GB, but I guess I’m not familiar with the size of Internet Archive.

Isn’t optical just as much about the end points as the cables?

Yes, to get their speeds they used the usual wavelength division multiplexing, except over an insane 750 wavelength channels, space division multiplexing over the 38 cores with 3 modes each, and 256 QAM with dual-polarization in each.
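To get a feel for how those multiplexing layers stack up, here is a rough back-of-envelope sketch using only the numbers quoted in this thread. It assumes every wavelength, core and mode carries an equal share of the 22.9 Pb/s, and it ignores error-correction and other coding overhead, so the implied symbol rate is a net lower bound and not the modulation rate used in the experiment.

```python
import math

# Figures quoted in the thread.
TOTAL_BPS = 22.9e15      # 22.9 Pb/s through one fiber
WAVELENGTHS = 750        # wavelength division multiplexing channels
CORES = 38               # cores in the fiber
MODES = 3                # modes per core
QAM_ORDER = 256          # 256 QAM
POLARIZATIONS = 2        # dual polarization

# Every (wavelength, core, mode) combination is an independent channel.
channels = WAVELENGTHS * CORES * MODES

# Even split of the total over all channels (a simplifying assumption).
per_channel_gbps = TOTAL_BPS / channels / 1e9

# 256 QAM carries log2(256) = 8 bits per symbol, doubled by dual polarization.
bits_per_symbol = math.log2(QAM_ORDER) * POLARIZATIONS

# Net symbol rate needed per channel, ignoring coding overhead.
implied_gbaud = per_channel_gbps / bits_per_symbol

print(f"parallel channels:    {channels}")
print(f"per-channel rate:     {per_channel_gbps:.0f} Gb/s")
print(f"bits per symbol:      {bits_per_symbol:.0f}")
print(f"implied net baud:     {implied_gbaud:.1f} GBd")
```

That works out to 85,500 parallel channels at roughly 268 Gb/s each, which is why the record is about parallelism and not about any single channel being exotic.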

Yeah… mhmm… right. I know some of these words!

For perspective, at work for our production network through Switzerland, we use at most 16 QAM with dual-polarization, and at most 88 channels (except we never utilize more than maybe 10). With just normal single mode single core fibers. This paper has just everything blown up in all directions of cool.

Yes, but the fiber has become an issue. They’re doing QAM signaling in fiber now.

optical fiber speed record

Isn’t that simply the speed of light, always? ;-)

Nope, if we are talking about the actual speed of the signal optical fiber is relatively slow at ~1/3 c, compared to air or copper where it’s almost c. They’re using ‘speed’ meaning bandwidth. A van full of sd cards would have a massive bandwidth, but a very slow actual speed

Actually it’s about 2/3 c, the refractive index of normal telco fibers (G.652 and G.655) is around 1.47
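The 2/3 c figure follows directly from v = c / n. A minimal sketch: the 1.47 index is the one quoted above, while the hollow core index of 1.0003 (roughly that of air) and the 45 km distance (borrowed from the euNetworks deployment mentioned elsewhere in the thread) are assumptions for illustration only.

```python
C_VACUUM_M_PER_S = 299_792_458  # speed of light in vacuum


def velocity_fraction(refractive_index: float) -> float:
    """Signal speed in the medium as a fraction of c."""
    return 1 / refractive_index


def one_way_latency_us(distance_km: float, refractive_index: float) -> float:
    """One-way propagation delay in microseconds over the given distance."""
    speed_m_per_s = C_VACUUM_M_PER_S / refractive_index
    return distance_km * 1000 / speed_m_per_s * 1e6


N_SOLID_CORE = 1.47     # quoted above for G.652 / G.655 fiber
N_HOLLOW_CORE = 1.0003  # assumption: close to air, not a measured value for any product
DISTANCE_KM = 45        # example distance only

solid = one_way_latency_us(DISTANCE_KM, N_SOLID_CORE)
hollow = one_way_latency_us(DISTANCE_KM, N_HOLLOW_CORE)

print(f"speed in solid core: {velocity_fraction(N_SOLID_CORE):.2f} c")
print(f"{DISTANCE_KM} km solid core:  {solid:.1f} us")
print(f"{DISTANCE_KM} km hollow core: {hollow:.1f} us")
print(f"saved one way:       {solid - hollow:.1f} us")
```

With these inputs the solid core comes out at about 0.68 c, and the hollow core saves on the order of 70 microseconds one way over 45 km, which is the kind of margin the high frequency trading crowd cares about.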

Cool information, thanks!

It is pretty confusing that we refer to the volume of data as speed in networks.

We don’t. The measure is bits/s, which is a speed because it’s measured relative to time. 1 TB is a volume/amount, 1TB/s is a speed.