- cross-posted to:

- [email protected]


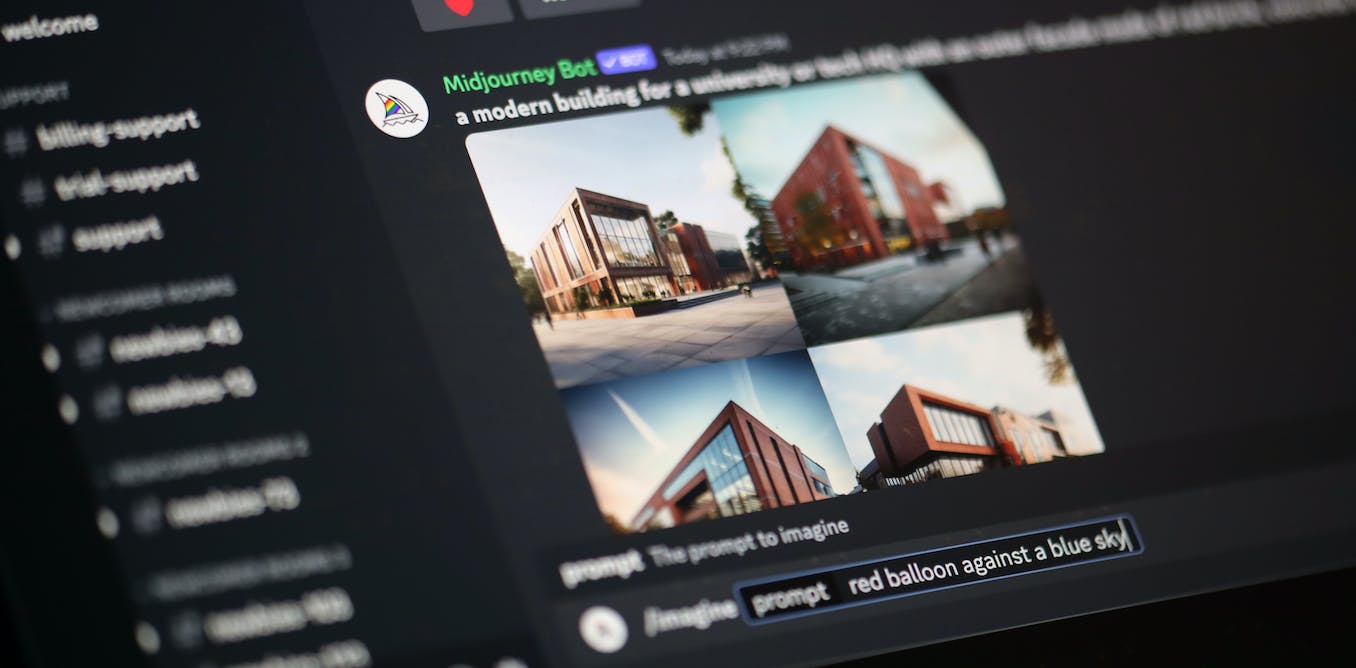

Data poisoning: how artists are sabotaging AI to take revenge on image generators

As AI developers indiscriminately suck up online content to train their models, artists are seeking ways to fight back.

This system runs on the assumption that (A) massive generalized scraping is still required, (B) you maintain the metadata of the original image, and (C) no transformation has occurred to the poisoned picture prior to training (Stable Diffusion is 512x512). Nowhere in the linked paper did they say they had conditioned the poisoned data to conform to the data set. This appears to be a case of fighting the last war.

It is likely a typo, but “last AI war” sounds ominous 😅

Takes image, applies antialiasing and resizes it

Oh, look at that, defeated by the completely normal process of preparing the image for training.
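A toy illustration of why antialiased resizing can wipe out a pixel-level perturbation. It assumes, hypothetically, that the perturbation is a pure +/- checkerboard and that the resize is a plain 2x2 box average. Real Nightshade/Glaze perturbations are not checkerboards and real pipelines usually use bicubic or Lanczos filters, so this only shows the low-pass principle, not that any specific tool is defeated. All function names are made up for the example.

```python
def make_image(h, w):
    """Smooth 'clean' image: a horizontal gradient with values in [0, 1]."""
    return [[x / (w - 1) for x in range(w)] for _ in range(h)]


def add_checkerboard(img, eps):
    """Hypothetical high-frequency perturbation: +eps / -eps alternating per pixel."""
    return [[v + (eps if (x + y) % 2 == 0 else -eps) for x, v in enumerate(row)]
            for y, row in enumerate(img)]


def box_downscale_2x(img):
    """Halve each dimension by averaging non-overlapping 2x2 blocks (a crude antialiasing resize)."""
    h, w = len(img), len(img[0])
    return [[(img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4
             for x in range(0, w - 1, 2)]
            for y in range(0, h - 1, 2)]


def max_abs_diff(a, b):
    """Largest per-pixel difference between two same-sized images."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


clean = make_image(8, 8)
poisoned = add_checkerboard(clean, eps=0.03)

# Before resizing, every pixel differs by eps.
print(max_abs_diff(clean, poisoned))
# After the 2x2 average, each block holds two +eps and two -eps pixels, so the
# perturbation cancels (up to floating-point rounding).
print(max_abs_diff(box_downscale_2x(clean), box_downscale_2x(poisoned)))
```

A perturbation concentrated at lower spatial frequencies would survive this filter better, which is presumably why real attacks are more elaborate than a checkerboard.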

Unfortunately for them, there are a lot of jobs dedicated to cleaning data, so I’m not sure this would even be effective. Plus, there’s an overwhelming amount of data that isn’t “poisoned,” so it would just get drowned out even if it were never caught.

Imagine if writers did the same things by writing gibberish.

At some point, it becomes pretty easy to devalue that content and create other systems to filter it.

if writers did the same things by writing gibberish.

Aka, “X”

I mean, isn’t that eventually going to happen? Isn’t AI eventually going to be trained on AI-generated datasets, so that small issues start to propagate exponentially?

I just assume we have a clean pre-AI dataset and a messy, gross post-AI dataset… If it keeps learning from the latter, it will just get worse and worse, no?

Not really. It’s like with humans. Without the occasional reality check it gets weird, but what people choose to upload is a reality check.

The pre-AI web was far from pristine, no matter how you define that. AI may improve matters by increasing the average quality.


Nightshade and Glaze never worked. It’s a scam lol

Shhhhh.

Let them keep doing the modern equivalent of “I do not consent for my MySpace profile to be used for anything” disclaimers.

It keeps them busy on meaningless crap that isn’t actually doing anything but makes them feel better.

Artists and writers should be entitled to compensation for using their works to train these models, just like any other commercial use would. But, you know, strict, brutal free-market capitalism for us, not for the mega corps who are using it because “AI”.

Let’s see how long it takes before someone figures out how to poison it so it returns NSFW images

You can create NSFW ai images already though?

Or did you mean that when poisoned data is used, an NSFW image is created instead of the expected image?

Definitely the last one!

Companies would stumble all over themselves to figure out how to get it to stop doing that before going live. Source: they already are. See Bing’s image generator appending “ethnically ambiguous” to every prompt it receives.

It would be a Herculean, if not impossible, effort on the artists’ part, only to watch the corpos scramble for two weeks, max.

When will you people learn that you cannot fight AI by trying to poison it? There is nothing you can do that horny weebs haven’t already done.

It can only target open source, so it wouldn’t bother corpos at all. The people behind this object to not everything being owned and controlled. That’s the whole point.

The Nightshade poisoning attack claims that it can corrupt a Stable Diffusion prompt with fewer than 100 samples. Probably not to NSFW level. How easy it is to manufacture those 100 samples is not mentioned in the abstract.

yeah the operative word in that sentence is “claims”

I’d love nothing more than to be wrong, but after seeing how quickly Glaze got defeated (not only did it make the images nauseating for a human to look at despite claiming to be invisible, not even 48 hours after the official launch there was a neural network trained to reverse its effects automatically with like 95% accuracy), suffice to say my hopes aren’t high.

You seem to have more knowledge on this than me, I just read the article 🙂

This doesn’t actually work. It doesn’t even require the ingestion pipeline to do anything special to avoid it.

Let’s say you draw cartoon pictures of cats.

And your friend draws pointillist images of cats.

If you and your friend don’t coordinate, it’s possible you’ll bias your cat images to look like dogs in the data but your friend will bias their images to look like horses.

Now each of your biasing efforts becomes noise rather than signal.

Then you need to consider if you are also biasing ‘cartoon’ and ‘pointillism’ attributes as well, and need to coordinate with the majority of other people making cartoon or pointillist images.

When you consider the number of different attributes that need to be biased for a given image, and the compounding number of coordinations that would need to happen at scale to be effective, this is just a nonsense initiative. It was an interesting research paper under lab conditions, but this is the equivalent of naturopaths taking up a mouse-model or in vitro cancer cure as if it’s going to work in humans.
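A rough simulation of the "noise, not signal" argument, under heavy simplifying assumptions: each poisoned image is modeled as a unit-length push on a concept in a hypothetical 64-dimensional feature space, and the model is assumed to learn roughly the average push. If everyone pushes the same way, the average stays at full strength; if each artist picks an independent random target, the average shrinks toward zero at about 1/sqrt(n). Unpoisoned images are modeled as zero push, which covers the "drowned out" point made earlier in the thread. This is not how Nightshade actually computes its perturbations; the names and numbers are illustrative only.

```python
import math
import random


def random_unit_vector(dim, rng):
    """Direction drawn uniformly on the unit sphere (normalized Gaussian sample)."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def mean_vector(vectors):
    """Component-wise average of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(col) / count for col in zip(*vectors)]


def length(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(x * x for x in v))


rng = random.Random(0)
dim, n_artists = 64, 1000

# Coordinated: every artist pushes "cat" toward the same hypothetical "dog" direction.
dog = random_unit_vector(dim, rng)
coordinated = [dog] * n_artists

# Uncoordinated: each artist picks their own target (dog, horse, ...), modeled as random directions.
uncoordinated = [random_unit_vector(dim, rng) for _ in range(n_artists)]

# Dilution: unpoisoned images contribute no push at all (here 90% of the data).
clean = [[0.0] * dim for _ in range(9 * n_artists)]

print(length(mean_vector(coordinated)))          # close to 1.0
print(length(mean_vector(uncoordinated)))        # on the order of 1/sqrt(1000)
print(length(mean_vector(coordinated + clean)))  # close to 0.1, the poisoned share
```

Even in the best case for the attacker (perfect coordination), the effect scales with the poisoned share of the data; without coordination it also decays with the number of independent contributors.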

So it sounds like they are altering the image data to get this to work: the image still looks the same, just the data is different. So, couldn’t the AI companies take screenshots of the image to get around this?

Not even that, they can run the training dataset through a bulk image processor to undo it, because the way these things work makes them trivial to reverse. Anybody at home could undo this with GIMP and a second or two.

In other words, this is snake oil.

The general term for this is adversarial input, and we’ve seen published reports about it since 2011, when it was considered a threat that CSAM could be overlaid with secondary images so it wasn’t recognized by Google image filters or CSAM image trackers. If Apple had gone through with its plan to scan private iCloud accounts for CSAM, we might have seen this development.

So far (AFAIK) we haven’t seen adversarial overlays on CSAM, though in China the technique is used to deter tracking by facial recognition. Human rights activists / mischief-makers overlay images on social media so that the pics fail to match security footage.

The thing is, like an invisible watermark, these processes are easy to detect (and reverse) once users are aware they’re a thing. So if a generative AI project is aware that some images may be poisoned, it’s just a matter of adding a detection and removal process to the pathway from candidate image to training database.

Similarly, once enough people start poisoning their social media images, the data scrapers will start scanning for and removing overlays even before the datasets are sold to law enforcement and commercial interests.

Feeding garbage to the garbage. How fitting.

Fighting the uphill battle to irrelevance …

Man, whenever I start getting tired of the number of Tankies on Lemmy, the Linux users and the decent AI takes rejuvenate me. The rest of the internet has jumped full throttle onto the AI hate train.

The “AI hate train” is people who dislike being replaced by machines, forcing us further into the capitalist machine rather than enabling anyone to have a better life

No disagreement, but it’s like hating water because the capitalist machine used to run water mills. It’s a tool; what we hate is the system and the players working to entrench themselves and it. Should we be concerned about the people affected? Yes, of course, we always should have been, even before it was the “creative class” and white collar workers at risk. We should have been concerned when it was blue collar workers being automated or replaced by workers in areas with repressive regimes. We should have been concerned when it was service workers being increasingly turned into replaceable cogs.

We should do something, but people are tilting at windmills instead of the systems that oppress people. We should be pushing for these things to be public goods (open source like Stability is aiming for, distributed and small models like Petals.dev and TinyML). We should be pushing for unions to prevent the further separation of workers from the fruits of their labor (look at the Writers Guild’s demands during their strike). We should be trying to only deal with worker and community cooperatives so that innovations benefit workers and the community instead of being used against them. And much more! It’s a lot, but it’s why I get mad about people wasting their time being mad that AI tools exist and raging against them instead of actually doing things to improve the root issues.

The “AI hate train” runs on fear and skips stops for reason, headed for a fictional destination.

Not saying that there aren’t people like that, but this ain’t it. This tool specifically targets open source. The intention is to ruin things that aren’t owned and controlled by someone. A big part of AI hate is hyper-capitalist like that, though they know better than saying it openly.

People hoping for a payout get more done than people just being worried or frustrated. So it’s hardly a surprise that they get most of the attention.

Thing is, it’s capitalism that’s our enemy, not the tech that is freeing us up from labour. It’s not the tech that’s the problem, it’s our society, and it fucking sucks that I’m just as poor as the rest of you, but because I finally have a tool that lets me satisfactorily access my creativity, I’m being villainized by the art community, even though the tech I am using is open source and no capitalist is profiting off of me.

Data poisoning isn’t limited to just AI stuff and you should be doing it at every opportunity.

if it would work lol

Just don’t put your art out in public if you don’t want someone/something to learn from it. The clinging to relevance and this pompous self-importance is so cringe. So replacing blue collar work is OK, but some shitty drawings somehow have higher ethical value?

“Just don’t make a living with your art if you aren’t okay with AI venture capitalists using it to train their plagiarism machines without getting permission from you or compensating you in any way!”

If y’all hate artists so much then only interact with AI content and see how much you enjoy it. 🤷♂️

using it to train their plagiarism machines

That’s simply not how AI works, if you look inside the models after training, you will not see a shred of the original training data. Just a bunch of numbers and weights.

| Just a bunch of numbers and weights

I agree with your sentiment, but it’s not just that the data is encoded as a model; it’s that the encoding is extremely lossy. Compression, encoding, digital photography, etc. are all just turning pictures into different numbers to be processed by some math machine. It’s the fact that a huge amount of information is actually lost during training, intentionally, that makes a huge difference. If it were just compression, it would be a game-changing piece of tech for other reasons. YouTube would be using it today, but it is not good at keeping the original data from the training.

Rant not really for you, but in case someone else nitpicks in the future :)

If the individual images are so unimportant then it won’t be a problem to only train it on images you have the rights to.

They do have the rights because this falls under fair use. It doesn’t matter if a picture is copyrighted as long as the outcome is transformative.

I’m sure you know something the Valve lawyers don’t.

It has literally nothing to do with plagiarism.

Every artist has looked at other art for inspiration. It’s the most common thing in the world. Literally what you do in art school.

It’s not an artist any more than a xerox machine is. It hasn’t gone to art school. It doesn’t have thoughts, ideas, or the ability to create. It can only take and reuse what has already been created.

The ideas are what the prompts and fine-tuning are for. If you think it’s literally copying an existing piece of art, you just lack understanding, because that’s not how it works at all.

It has nothing to do with AI venture capitalists. Also not every profession is entitled to income, some are fine to remain as primarily hobbies.

AI art is replacing corporate art, which is not something we should be worried about. Fewer people working on that drivel is a net good for humanity. If we can redirect billions of hours wasted on designing ads toward real, meaningful contributions, we add billions of extra hours to our actual productivity. That is good.

The ratio of using AI to replace ad art:fraud/plagiarism has to be somewhere around 1:1000.

“Actual productivity” is a nonsense term when it comes to art. Why is this less “meaningful” than this?

Without checking the source, can you even tell which one is art for an ad and which isn’t?

I would assume the first to be an ad, because most of the depicted people look happy

I’m not sure what your point is here. The majority of art is drivel. Most art is produced for marketing. Literally. If that can be automated away, what are we losing here? McDonald’s logos? Not everything needs to be a career.

What a shitty shitty shitty take

Nah. I’m literally being proven right in real time. You can set a reminder or something :)

Not sure how you can be “right” to generally just shit on the concept of art and think it’s better replaced by ai.

Also not every profession is entitled to income

Yes it is. Otherwise it is not a profession. People go to school for years to become professional artists. They are absolutely entitled to income.

But hey, you want your murals painted by robots and your wall art printed out, have fun. I’m not interested in your brave new world.

The robots are far better at giving me what I want than humans ever were, so yeah, I unironically am stoked for robot wall art and murals

I’m literally a professional artist lol

So you think you’re not entitled to income from your work? That doesn’t sound like something a professional would say. “I’m obsolete, don’t pay me.”

Nah, I understand what’s going on. AI is not replacing real artists. It’s replacing sweatshops. And even when it eventually replaces most of the art grunt work, we’ll find something more interesting to do, like curating the art, mixing, matching, adding extra meta layers and so on.

This closed-minded protectionism is just silly. Not only is it unsustainable, because you will never win, it’s also incredibly desperate. No real artist would cry and whine here when given this superpower.

Also pay is not everything in life. Maybe think about that for a second when you discuss art

Pay is not everything in life, but it does buy things like paint and canvases.

And I really have to question a self-proclaimed professional artist saying, again, that artists do not deserve to be paid for their work.

The idea that you would actually object to replacing labor with automation, but think replacing art with automation is fine, is genuinely baffling.

Except the “art” ai is replacing is labor. This snobby ridiculous bullshit that some corporate drawings are somehow more important than other things is super cringe.

Right, if you post publicly, expect it to be used publicly

Yeah, no. There’s a difference between posting your work for someone to enjoy, and posting it to be used in a commercial enterprise with no recompense to you.

Wait until you find out how human artists learn.

They learn completely differently from an AI model, considering an AI model cannot learn

Prove it.

And you don’t see how those two things are different?

And you don’t see how those two things are similar?

How are you going to stop that lol, it’s ridiculous. Would you stop a corporate suit from viewing your painting because they might learn how to make a similar one? It makes absolutely zero sense, and I can’t believe delulus online are failing to comprehend such a simple concept as “computers being able to learn”.

Ah yes, just because lockpickers can enter a house suddenly everyone’s allowed to break and enter. 🙄

What a terrible analogy for learning 🙄

It’s not learning

It is. You should try it sometimes.

You’re not learning, that much is obvious.

Computers can’t learn. I’m really tired of seeing this idea paraded around.

You’re clearly showing your ignorance here. Computers do not learn, they create statistical models based on input data.

A human seeing a piece of art and being inspired isn’t comparable to a machine reducing that to 1’s and 0’s and then adjusting weights in a table somewhere. It does not “understand” the concept, nor did it “learn” about a new piece of art.

Enforcement is simple. Any output from a model trained on material that they don’t have copyright for is a violation of copyright against every artist whose art was used illegally to train the model. If the copyright holders of all the training data are compensated and have opted in to their work being used for training, then, and only then, would the output of the model be usable.

they create statistical models based on input data.

Any output from a model trained on material that they don’t have copyright for is a violation of copyright

There’s no copyright violation. You said it yourself: any output is just the result of a statistical model, and the original art would be under fair use as a derivative work (if it falls under copyright at all).

Considering most models can spit out training data, that’s not a true statement. Training data may not be explicitly saved, but it can be retrieved from these models.

Existing copyright law can’t be applied here because it doesn’t cover something like this.

It 100% should be a copyright infringement for every image generated using the stolen work of others.

You can get it to spit out something very close, maybe even exact, depending on how much of your art was used in the training (because that would make your style influence the weights and the model more).

But that’s no different than me tracing your art or taking samples of your art to someone else and paying them to make an exact copy, in that case that specific output is a copyright violation. Just because it can do that, doesn’t mean every output is suddenly a copyright violation.

It’s literally in the name. Machine learning. Ignorance is not an excuse.

That’s just one of the dumbest things I’ve heard.

Naming has nothing to do with how the tech actually works. Ignorance isn’t an excuse. Neither is stupidity

And yet you wield both!

Are you actually suggesting that if I post a drawing of a dog, Disney should be allowed to use it in a movie and not compensate me?

Everyone should be assumed to be able to look at it, learn from it, and add your style to their artistic toolbox. That’s an intrinsic property of all art. When you put it on display, don’t be surprised or outraged when people or AIs look at it.

AI does not learn and transform something like a human does. I have no problem with human artists taking inspiration, I do have a problem with art being reduced to a soulless generation that requires stealing real artists work to create something that isn’t original.

- you don’t know how humans learn and transform something
- regardless, it does learn and transform something

AI does not learn and transform something like a human does.

But they do learn. How human-like that learning may be isn’t relevant. A parrot learns to talk differently than a human does too, but African greys can still hold a conversation. Likewise, when an AI learns how to make art by studying what others have made, they may not do it in exactly the same way a human does it, but the products of the process are their own creations just as much as the creations of human artists that parrot other human artists’ styles and techniques.

Ofc not, that’s way different, that’s beyond public use.

If I browse to your Instagram, look at some of your art, record some numbers about it, observe your style and then leave, that’s perfectly fine, right? If I then took my numbers and observations from your art and from everybody else’s that I looked at, and merged them together to make my own style, that would also be fine, right? Well, that’s AI; that’s all it does, on a simple level.

But they are still profiting off of it. Dall-E doesn’t make images out of the kindness of OpenAI’s heart. They’re a for-profit company. That really doesn’t make it different from Disney, does it?

Sure, Dall-E has a profit motive, but then what about all the open source models that are trained on the same or similar data and artworks?

You’ve strayed very far from:

if you post publicly, expect it to be used publicly

What is the difference between Dall-E scraping the art and an open source model doing it other than Dall-E making money at it? It’s still using it publicly.

I didn’t really stray far. You brought up that Dall-E has a profit motive and I acknowledged that yeah, that was true, but there are also open source models that don’t.