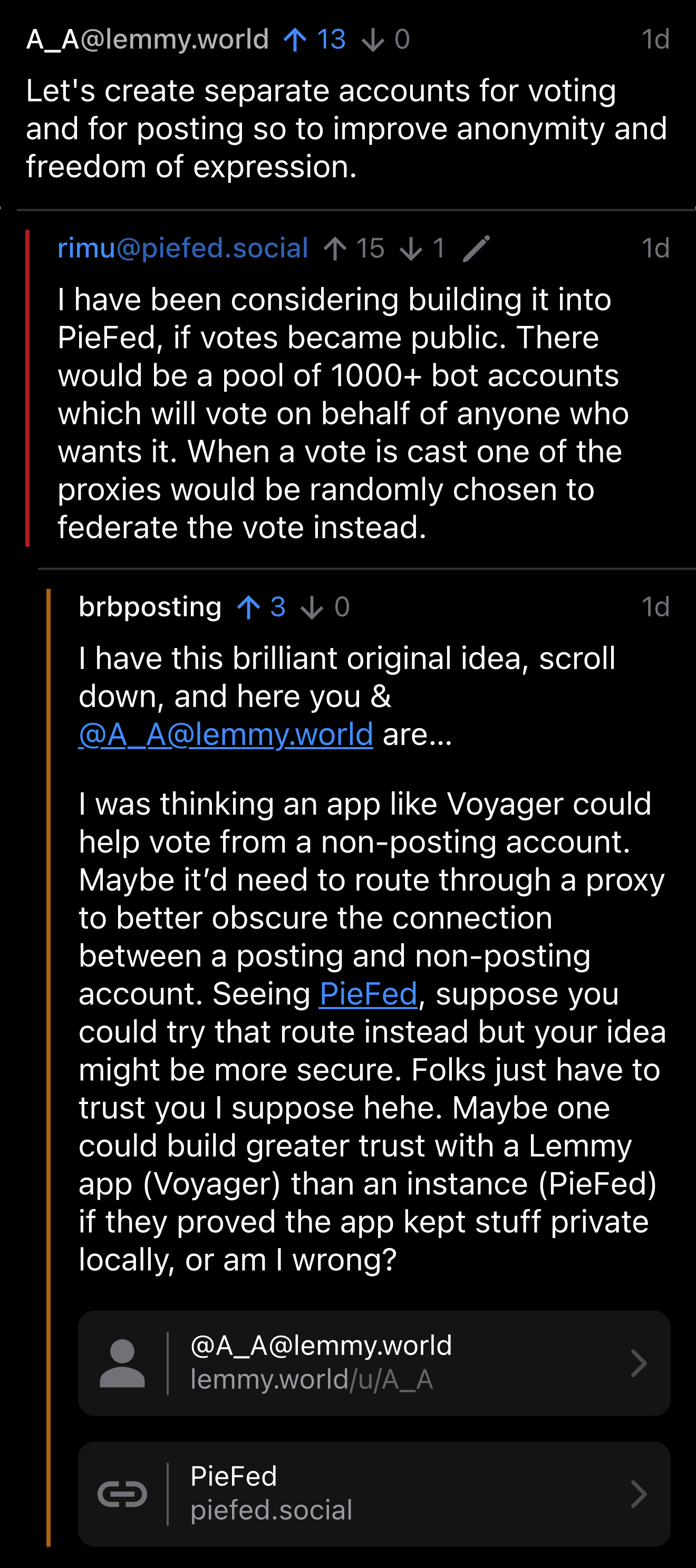

We had a really interesting discussion yesterday about voting on Lemmy/PieFed/Mbin: whether votes should be private, whether they are already public and to what degree, and whether another way was possible. There was a widely held belief that votes should be private, yet it was repeatedly pointed out that a quick visit to an Mbin instance was enough to see all the upvotes and that Lemmy admins already have a quick and easy UI for upvotes and downvotes (with predictable results). Some thought that using ActivityPub automatically means any privacy is impossible (spoiler: it doesn’t).

As a response, I’m trying this out: PieFed accounts now have two profiles within them - one used for posting content and another (with no name, profile photo or bio, etc) for voting. PieFed federates content using the main profile most of the time but when sending votes to Mbin and Lemmy it uses the anonymous profile. The anonymous profile cannot be associated with its controlling account by anyone other than your PieFed instance admin(s). There is one and only one anonymous profile per account so it will still be possible to analyze voting patterns for abuse or manipulation.

ActivityPub geeks: the anonymous profile is a separate Actor with a different url. The Activity for the vote has its “actor” field set to the anonymous Actor url instead of the main Actor. PieFed provides all the usual url endpoints, WebFinger, etc for both actors but only provides user-provided PII for the main one.
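A minimal sketch of what such a vote Activity could look like, written in Python since PieFed is a Python project. The `build_vote_activity` helper, the example URLs and the `/activities/...` id scheme are hypothetical and not taken from PieFed's code; `Like` and `Dislike` are the Activity types Lemmy uses for upvotes and downvotes.

```python
import json
import uuid

AS_CONTEXT = "https://www.w3.org/ns/activitystreams"


def build_vote_activity(main_actor, anon_actor, object_url, upvote=True, vote_privately=True):
    """Build a vote Activity; only the "actor" field changes for private votes."""
    actor = anon_actor if vote_privately else main_actor
    return {
        "@context": AS_CONTEXT,
        # Hypothetical id scheme: any unique URL on the sending instance would do.
        "id": f"{actor}/activities/{uuid.uuid4()}",
        "type": "Like" if upvote else "Dislike",
        "actor": actor,
        "object": object_url,
    }


# Example URLs are made up for illustration.
vote = build_vote_activity(
    main_actor="https://piefed.example/u/alice",
    anon_actor="https://piefed.example/u/k3j9x2qp",
    object_url="https://lemmy.example/comment/123",
    upvote=False,
)
print(json.dumps(vote, indent=2))
```

The receiving instance sees an ordinary vote from an ordinary Actor; nothing in the payload links it to the main profile.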

That’s all it is. Pretty simple, really.

To enable the anonymous profile, go to https://piefed.social/user/settings and tick the ‘Vote privately’ checkbox. If you make a new account now it will have this ticked already.

This will be a bit controversial, for some. I’ll be listening to your feedback and here to answer any questions. Remember this is just an experiment which could be removed if it turns out to make things worse rather than better. I’ve done my best to think through the implications and side-effects but there could be things I missed. Let’s see how it goes.

Cool solution. It’s great to have multiple projects in the fediverse that can experiment with different features/formats.

For those who are concerned about possible downsides, I think it’s important to understand that

- PieFed has a small userbase

- Rimu is an active admin, so if you are attempting to combat brigading or other bad behavior and this makes it more difficult, just send them a DM and they will be happy to help out

This is a good environment to test this feature because Rimu can keep a close watch over everything. We can’t become paralyzed by the hypothetical ways that bad actors might abuse new features or systems. The only way forward is through trial and error, and the fact that PieFed exists makes that process significantly faster and less disruptive.

This is an attempt to add more privacy to the fediverse. If the consequences turn out for the worse, then we can either try something else, or live with the lack of privacy. Either way, we’ll be better off than having never tried anything at all.

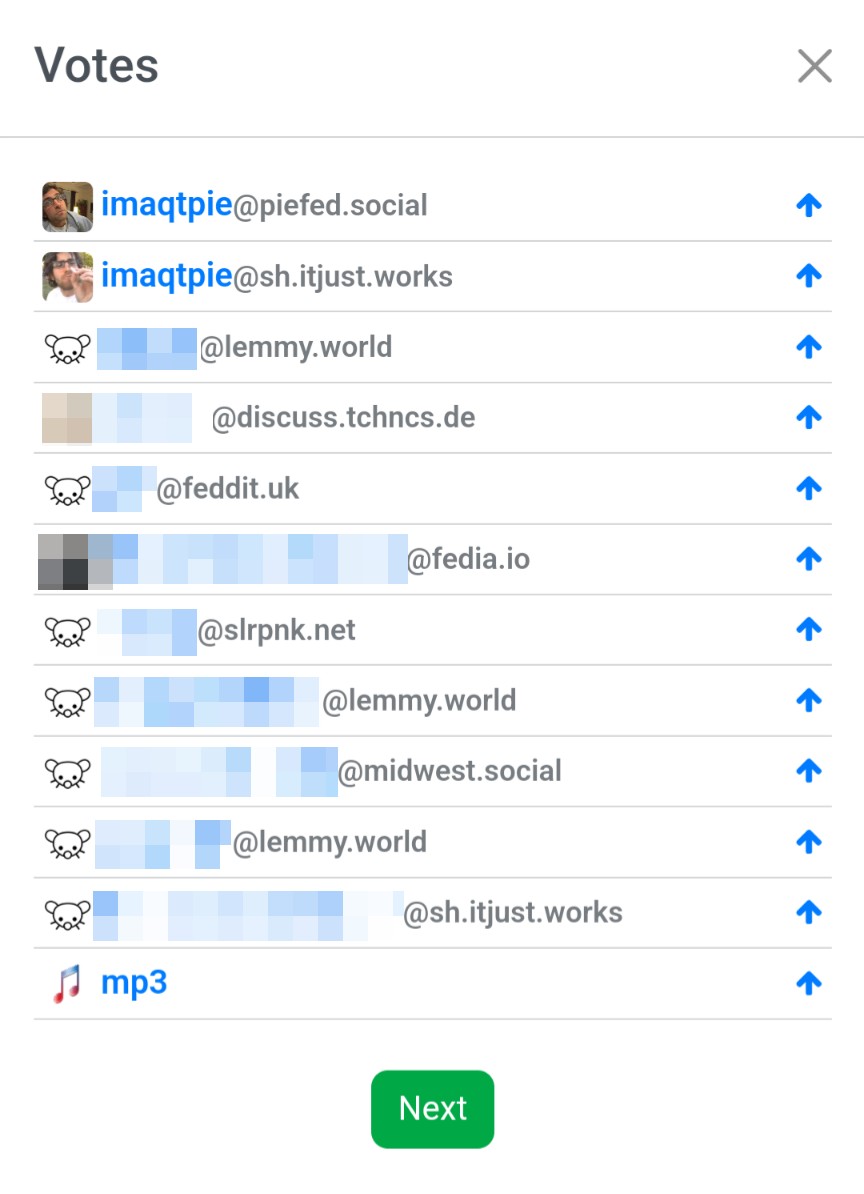

Just upvoted myself but nobody else knows 🤫

Edit: Actually I forgot to toggle the setting before voting on my own comment, so admins will see my @[email protected] account upvoted the parent comment. Worth noting that it needs to be manually enabled.

Then I turned the setting on and voted on a bunch of other comments in this post. My anonymized voting account appears as @[email protected], admins should be able to see it by checking the votes in this thread.

Point being, you can still track serial downvoters and harassment just as easily. But now you will need to take an extra step and message the instance admin (Rimu) and ask that they either reveal the identity of the linked profile or deal with it themselves. And that’s a good thing, imho.

> Point being, you can still track serial downvoters and harassment just as easily. But now you will need to take an extra step and message the instance admin (Rimu) and ask that they either reveal the identity of the linked profile or deal with it themselves. And that’s a good thing, imho.

This puts the privacy shield in the hands of a user’s instance admin. I like that approach, but I’m sure others will disagree.

This is more or less how it worked on Reddit. The admins handled vote spam or abuse, there was absolutely no expectation for moderators to have that information because the admins were dealing with the abuse cases. Moderators only concerned themselves with content and comments, the voting was the heart of how the whole thing works, and therefore only admins could see and affect them. Least privilege, basically.

I think a side effect of this, though, is that it increases the responsibility on admins to only federate with instances that have active and cooperative admins. It increases their responsibilities and demands active monitoring, which isn’t a bad thing, but I worry about how the instances that federate openly by default will continue to operate.

If you have to trust the admins, how do you handle new admins, or increasingly absent ones? What if their standards for what constitutes “harassment” don’t match yours? Does the whole instance get defederated? What if it’s a large instance, where communities will be cut off?

I don’t ask any of this as a way to put down this effort because I very, very much want to see this change, but there’s gonna be hurdles that have to be overcome.

Ultimately I think the best solution would need assistance from the devs but in lieu of that, we have to make do.

You wouldn’t dare!

You don’t even need to message an admin. You can just ban the agent doing the voting.

Hey, Lemmy admin here. If I ban an anonymous account, does the account it’s tethered to also get banned?

No but perhaps it should!

PieFed lacks an API, making it an unattractive tool for scripting bots with. I don’t think you’ll see any PieFed-based attacks anytime soon.

What about PieFed-based shitty humans?

PieFed tracks the percentage of downvotes vs upvotes (calling it “Attitude” in the code and admin UI), making it easy to spot people who downvote excessively and easy to write functionality that deals with them. Perhaps anonymous voting should only be available to accounts with a normal attitude (within a reasonable tolerance).
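A rough sketch of how such a gate might look. This is only an illustration of the suggestion above, not existing PieFed behavior: the function name `may_vote_privately`, the default `tolerance` and the example numbers are all assumptions, and attitude is treated here as a fraction between -1 and 1.

```python
def may_vote_privately(user_attitude, global_average, tolerance=0.25):
    """Allow private voting unless the account sits far below the global average."""
    if user_attitude is None:
        # No votes cast yet, so there is nothing to judge the account on.
        return True
    return user_attitude >= global_average - tolerance


# Hypothetical numbers: a global average attitude of 0.85.
print(may_vote_privately(0.82, 0.85))   # slightly below average
print(may_vote_privately(-0.40, 0.85))  # far below average
```

Where to put the tolerance is a policy decision for each admin; the code only shows that the check itself is a one-line comparison once attitude is tracked.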

Wow your documentation is so much better than ours.

That’s nice of you to say. I’ve tried to focus well on certain areas that seem important but I really admire the breadth of https://join-lemmy.org/docs/ which I could never hope to cover.

Do you have a link? The Piefed docs page is empty for me.

Ah fuck! I mistook the piefed docs for the pixelfed docs.

Yes, but the navigation icon at the top right of the pages leads to these:

- https://join.piefed.social/docs/piefed-mobile/

- https://join.piefed.social/docs/developers/

- https://join.piefed.social/docs/admin-guide/

- https://join.piefed.social/docs/installation/

https://join.piefed.social/2024/06/22/piefed-features-for-growing-healthy-communities/

I swear there was documentation there.

I was wondering what attitude was, but I never got around to checking it out in the documentation. I was wondering why PieFed insisted my attitude wasn’t 100%. Makes sense now - I guess it just isn’t!

(maybe a clickable question mark next to the attitude score explaining briefly what it is could be useful at some point)

So no app?

Kind of but technically, no. Please see https://join.piefed.social/docs/piefed-mobile/

Do you really think it would matter to a malicious botter if they have a documented API or simply look at the requests the browser makes?

If the pseudo account is banned for its vote choices, does that really address the issue of vote-banning?

Do you ban based on voting behaviour?

If the same account is voting in the same direction on every single post and comment in an entire community in a matter of seconds while contributing neither posts nor comments? Yes, vote manipulation.

If one user is following another around, down voting their content across a wide range of topics? Yes, targeted harassment.
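The first pattern described above (many same-direction votes in one community within seconds) is the kind of thing code can flag regardless of whether the voting actor is anonymous. A small sketch under stated assumptions: the function name, the `(timestamp_seconds, direction)` tuple format and both thresholds are hypothetical, and it does not check whether the account also posts or comments.

```python
def looks_like_vote_burst(votes, min_votes=20, max_seconds=30):
    """votes: (timestamp_seconds, direction) pairs for one actor in one community.

    direction is +1 for an upvote and -1 for a downvote.
    Returns True if min_votes consecutive votes share a direction and fit in max_seconds.
    """
    ordered = sorted(votes)
    for start in range(len(ordered) - min_votes + 1):
        window = ordered[start:start + min_votes]
        span = window[-1][0] - window[0][0]
        directions = {direction for _, direction in window}
        if span <= max_seconds and len(directions) == 1:
            return True
    return False


burst = [(1000 + i, -1) for i in range(20)]                  # 20 downvotes in 19 seconds
slow = [(1000 + i * 60, -1) for i in range(20)]              # one downvote per minute
mixed = [(1000 + i, 1 if i % 2 else -1) for i in range(20)]  # fast but alternating
print(looks_like_vote_burst(burst), looks_like_vote_burst(slow), looks_like_vote_burst(mixed))
```

Because each account has only one anonymous voting actor, a check like this works the same on the anonymous actor as it would on a named one.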

Would banning the voting half of the pseudonymous account not mitigate the immediate issue? You could then ask their instance admin to look up and ban the associated commenting account later.

Well, doesn’t that fly in the face of federated autonomy and privacy?

On one end, if it’s my instance and I want to ban a user, I want the whole fucking user banned – not just remove their ability to vote anonymously. If one of my communities or users is being attacked, it’s my responsibility to react. If I can’t remove the whole problem with a ban, then I have to remove the whole problem with a de-federation. (A thing I fundamentally don’t want to do.)

On the other, if some other admin says, “one of your users is being problematic, please tell me who they are,” I’m going to tell that other admin to fuck right off because I just implemented a feature that made their votes anonymous. I’m not about to out my users to some rando because they’re raining downvotes on [email protected].

It’s a philosophical difference of opinion.

> On one end, if it’s my instance and I want to ban a user, I want the whole fucking user banned – not just remove their ability to vote anonymously.

I mean, is that truly the case? If a user only engages in vote manipulation, but otherwise they have insightful comments/posts, is it really that big of a deal that you will ban only their option to vote?

I think you’re conflating my two separate concerns. One’s automated vote manipulation. The other is targeted harassment.

Looks like it’s kinda hard to spin up a piefed bot. Not impossible, but it’s a bitch without an API.

If I have an insightful contributor who’s going out of their way and outside of their normal communities to be a dick to another user, maybe they’re not so insightful after all. Or they’ve got a great reason!

Either way, I want to be able to point to their behavior - without the extra step of having to de-anonymize their activity - and tell them to chill the fuck out or get the fuck out. Out means out. Totally and forever.

> Looks like it’s kinda hard to spin up a piefed bot. Not impossible, but it’s a bitch without an API.

What you would actually want to do if you want to bot is take one of the existing apps and modify it to make spamming easy.

> Either way, I want to be able to point to their behavior - without the extra step of having to de-anonymize their activity - and tell them to chill the fuck out or get the fuck out. Out means out. Totally and forever.

I can see why you would want that, but my question is: is that such a big deal compared to people being harassed for their voting? I don’t think user privacy should be violated - especially en masse / by default - just because of some (in my opinion fairly minor) moderation concerns.

And if they are a dick overall, then you will figure it out anyway, ban their “main” account and that will prevent them from voting, too (unless the instance is malicious, but then a malicious instance can do much more harm in general).

But if the only bad behavior is voting and you can ban that agent then you’ve solved the core issue. The utility is to remove the bad behavior, no?

No, the utility is to remove bad users.

To prevent them from engaging in bad behavior.

Sure, but by the same token, mods are just as capable of manipulation and targeted harassment when they can curate the voting and react based on votes.

On reddit, votes are only visible to the admins, and the admins would take care of this type of thing when they saw it (or it tripped some kind of automated something or other). But they still had the foresight not to let moderators or users see those votes.

Complete anonymity across the board won’t work but there definitely needs to be something better than it is now.

> mods are just as capable of manipulation and targeted harassment when they can curate the voting and react based on votes

I’m not sure what you’re trying to say.

I’m speaking as an admin, not as a mod. I own the servers. I have direct access to the databases. When law enforcement comes a’knockin’, it’s my ass that gets arrested. I have total control over my instances and can completely sever them from the fediverse if I feel it necessary. Mods are mall cops that can lock posts and deal with problem users one at a time.

> On reddit, votes are only visible to the admins, and the admins would take care of this type of thing when they saw it (or it tripped some kind of automated something or other)

There are no built in automations. Decoupling votes from the users that cast them interferes with my ability to “take care of this type of thing.”

Yeah, I see that and it does concern me now that it has been brought up.

However. In the last 6 months of being active in the ‘Lemmy.world defense hq’ matrix room where we coordinate admin actions against bad people, vote manipulation has come up once or twice. The other 99% of the time it’s posts that are spam, racist or transphobic. The vote manipulation we found was detected using some scripts and spreadsheets, not by looking at the admin UI. After all, using code is the only way to scan through millions of records.

Downvote abuse/harassment coming from PieFed will be countered by monitoring “attitude” and I have robust tools for that. I can tell you with complete confidence that not one PieFed user downvotes more than they upvote. I can provide 12 other accounts on Lemmy instances that do, tho. Lemmy’s lack of a similar admin tool is unfortunate but not something I can do anything about.

What I’ve done with developing this feature is taken advantage of a weakness of ActivityPub - anyone can make accounts and have them do stuff. Even though I’ve done it in a very controlled and limited way and released all the code for it, having this exposed feels pretty uncomfortable. There were many many people droning on about “votes must be public because they need to come from an account” blah blah and that secure safe illusion has been ripped away now. That sucks, but we were going to have to grapple with it eventually one way or another.

Anyway. I’m not wedded to this or motivated by a fixed ideology (e.g. privacy über alles) so removing this is an option. It didn’t even take that long to code, I spent more time explaining it than coding it.

I think a ban based on those criteria should apply to main acct but I’m not sure how it’s implemented.

Is that really harassment considering Lemmy votes have no real consequences besides feels?

It’s against the CoC of programming.dev and we have issued warnings to abusers before. Last warning given for that was 13 days ago and was spotted by a normal user.

I think you forgot to say what is against the CoC. It’s implied though.

Vote manipulation

This is quite a smart solution, good job !

You’re a hero for making this happen in… 24 hours? 48?

The issue won’t go away, we’ll see how well everyone else deals with it, but this is a super strong argument for your system / server.

(Advertise it. Advertise it HARD. “piefed, we have private votes”.)

Dude this is genius

I am interested to see how it plays out but the idea of the instance admin being able to pierce the veil and investigate things that seem suspect (and being responsible for their instance not housing a ton of spam accounts just as now) seems like a perfect balance at first reading

Edit: Hahaha now I know Rimu’s alter ego because he upvoted me. Gotcha!

It wasn’t me, haha

While not a perfect solution, this seems very smart. It’s a great mitigation tactic to try to keep users’ privacy intact.

Seems to me there’s still routes to deanonymization:

- Pull posts that a user has posted or commented in

- Do an analysis of all actors in these posts. The poster’s voting actor will be over represented (if they act like I assume most users do. I upvote people I reply to etc)

- if the results aren’t immediately obvious, statistical analysis might reveal your target.

Piefed is smaller than lemmy, right? So if only one targeted posting account is voting somewhat consistently in posts where few piefed users vote/post/view, you got your guy.
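To make the limitation concrete, the counting step described above can be sketched in a few lines. This is an illustration only: the function name, the `votes_by_post` structure and the actor names are made up, and a real analysis would also need to account for how popular each post is.

```python
from collections import Counter


def rank_voting_actors(votes_by_post, target_posts):
    """Count, per voting actor, how many of the target's posts they voted in."""
    counts = Counter()
    for post_id in target_posts:
        # Use a set so an actor counts once per post, however many votes they cast there.
        counts.update(set(votes_by_post.get(post_id, [])))
    return counts.most_common()


# Hypothetical data: which anonymous actors voted in which posts.
votes_by_post = {
    "post1": ["anon_a", "anon_b", "anon_c"],
    "post2": ["anon_a", "anon_d"],
    "post3": ["anon_a", "anon_b"],
    "post4": ["anon_e"],  # the target did not take part here
}
ranking = rank_voting_actors(votes_by_post, target_posts=["post1", "post2", "post3"])
print(ranking[0])  # ('anon_a', 3)
```

The actor that shows up in every post the target took part in stands out immediately, which is why a stable one-to-one mapping between an account and its voting actor can only ever raise the cost of identification, not rule it out.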

Obviously this is way harder than just viewing votes. Not sure who would go to the trouble. But a deanonymization attack is still possible. Perhaps rotate the ids of the voting accounts periodically?

It will never be foolproof for users coming from smaller instances, even with changing IDs. If you see a downvote coming from PieFed.social you already have it narrowed down to not too many users, and the rest you can probably infer based on who contributes to a given discussion.

Still, I think it’s enough to be effective most of the time.

Yea, I agree. It’s good enough. Sorry, I didn’t mean to sound like it was a bad solution, it’s just not perfect and people ought to be aware of limitations.

I used a small instance in my example so the problem was easier to understand, but a motivated person could target someone on a large instance, too, so long as that person tended to vote in the posts they commented on.

Just for example (and I feel like I should mention, I have no bad feelings towards this guy), Flying Squid on lemmy.world posts all over the place, even on topics with few upvotes. If you pull all his posts, and all votes left in those posts from all users, I bet you could find one voter who stands out from the crowd. You just need to find the guy following him everywhere: himself.

I mean, if he tends to leave votes in topics he comments on, which I assume he does.

It would have to be a very targeted attack and that’s much better than the system lemmy uses right now. I’m remembering the mass tagger on Reddit, I thought that add on was pretty toxic sometimes.

Also, it just occurred to me, on Lemmy, when you post you start with one vote, your own. I can even remove this vote (and I’ll do it and start this post off with score 0). I wonder how this vote is handled internally? That would be an immediate flaw in this attempt to protect people’s privacy.

Yeah, I think your point is absolutely well made. And it’s a good reason why, even if features like this are implemented widely, we shouldn’t boast too much about voting being anonymous. It’s just too difficult or impossible to make it bulletproof.

I don’t think the automatic upvotes to your own posts count as real upvotes. At least they don’t federate, so they shouldn’t pose too much of a problem. I think they’re just there to keep people from trying to upvote their own content.

It could be mitigated further by having a different Actor per community you engage in, but that is definitely a bigger change in how voting works currently, and might have issues detecting vote brigading.

Not familiar with how piefed handles it specifically but aren’t posts/comments self-upvoted by default?

You could probably figure it out pretty easily just by looking at a user’s posts, no?

(This is unless piefed makes it so the main actor up votes their own posts, and the anonymous actor upvotes others’ posts, but then it would still be possible to do analysis on others’ comments to get a pretty accurate guess)

Nice, @[email protected], we feeling heard? 😉

Great trial! Will see if you end up feeling a need to iterate sometime later!

@[email protected] did a great job on my simple idea and this is very nice to know. He did not implement his other idea of having a pool of available robots, each of which would vote once per vote cast by a user … Also, I thought it might be implemented in the frontend, but he implemented it in the backend.

i did not say before but i was also thinking about complex mechanisms involving some kind of coin that would represent voting power that could be spent when voting or accumulated maybe with some loss of value with time … but then i read about the many research papers that were published about such things … in conclusion i believe we will see many other iterations of such social media.

thanks for the feedback 😌

I use people upvoting bigoted and transphobic content to help locate other bigoted and transphobic accounts so I can instance ban them before they post hate in to our communities.

This takes away a tool that can help protect vulnerable communities, whilst doing nothing to protect them.

It’s a step backwards

> whilst doing nothing to protect them

Well it also takes away a tool that harassers can use for their harassing of individuals, right? This does highlight the often-raised issue that Lemmy needs better/more moderation tools though.

If public voting data becomes a thing across the threadiverse, as some lemmy people want.

Which is why I think the appropriate balance is private votes visible to admins/mods.

Admins only. Letting mods see it just invites them to share it on a discord channel or some shit. The point is the number of people that can actually see the votes needs to be very small and trusted, and preferably tied to an internal standard for when those things need to be acted upon.

The inherent issue is public votes allow countless methods of interpreting that information, which can be acted on with impunity by bad actors of all kinds, from outside and within. Either by harassment or undue bans. It’s especially bad for the instances that fuck with vote counts. Both are problems.

I can see this argument, at least in general. As for community mods, I feel like it’d be generally fruitful and useful for them to be and feel empowered to create their own spaces. While I totally hear your argument about the size of the “mod” layer being too large to be trustworthy, I feel like some other mitigating mechanisms might be helpful. Maybe the idea of a “senior” mod, of which any community can only have one? Maybe “earning” seniority through being on the platform for a long time or something, not sure. But generally, I think enabling mods to moderate effectively is a generally good idea.

It actually adds a tool for harassers, in that targeted harassment can’t be tied back to a harasser without the cooperation of their instance admin.

In reality, I think a better answer might be to anonymize the username and publicize the votes.

Hmm, yes.

PieFed tracks the percentage of downvotes vs upvotes (calling it “Attitude” in the code and admin UI), making it easy to spot people like this and easy to write functionality that deals with them. Perhaps anonymous voting should only be available to accounts with a normal attitude (within a reasonable tolerance).

> PieFed tracks the percentage of downvotes vs upvotes (calling it “Attitude” in the code and admin UI)

That’s cool. I wonder what my attitude is and I wonder how accurate the score is, if our federations don’t overlap super well. What happens if I have a ton of interactions on an instance that yours is completely unaware of?

(I think “Attitude” is a perfect word, because it’s perceptive. Like, “you say they’re great but all I see them do is get drunk and complain about how every Pokemon after Mewtwo isn’t ‘legit’,” sort of thing.)

I’ve intentionally subscribed to every active community I can find (so I can populate a comprehensive topics hierarchy) making piefed.social get a fairly complete picture. Your attitude is only 3% below the global average, nowhere near the point where I’d take notice.

Feels to me that being able to link what people like/dislike to their comments and username is much more dangerous than just being able to downvote all their comments.

And I’d hope that in this new suggestion an admin would still be able to ban the user even if they only knew the anonymous/voter ID, though that’s probably an interesting question for OP.

I’m going to have to come up with set criteria for when to de-anonymize, aren’t I? Dammit.

In the meantime, get in touch if you spot any bigot upvotes coming from PieFed.social and we’ll sort something out.

The problem is, it’s more than just the upvote. I don’t ban people for a single upvote, even on something bigoted, because it could be a misclick. What I normally do is have a look at the profiles of people who upvote dogwhistle transphobia, stuff that many cis admins wouldn’t always recognise. And those upvotes point me at people’s profiles, and if their profile is full of dog whistles, then they get pre-emptively instance banned.

Ahh, right, got it.

Let’s keep an eye on this. I am hopeful that with PieFed being unusually strong on moderation in other respects that we don’t harbor many people like that for long.

This is great

So you can still ban the voting agent. Worst case scenario you have to wait for a single rule breaking comment to ban the user. That seems like a small price to pay for a massive privacy enhancement.

I don’t think you do. Admins can just ban the voting agent for bad voting behavior and the user for bad posting behavior. All of this conflict is imagined.

Yea, which is why I think the obvious solution to the whole vote visibility question is to have private votes that are visible to admins and mods for moderation purposes. It seems like the right balance.

It will be difficult to get the devs of Lemmy, Mbin, Sublinks, FutureProject, SomeOtherProject, etc to all agree to show and hide according to similar criteria. Different projects will make different decisions based on their values and priorities.

…and it still doesn’t solve the issue that literally anyone can run their own instance and just capture the data.

The OP discusses exactly a solution to anyone setting up an instance to capture the data, because the user’s home instance federates their votes anonymously.

There may be flaws in it, but that’s exactly what it aims to solve.

Plus, if you know your votes are public, maybe it’ll incentivise some people to skip upvoting that kind of content. People use anonymity to say and promote absolutely vile things that they would never dare say or support openly otherwise.

The problem with this approach is trust. It works for the users, but not admins. If I run a PieFed instance with this on, how can lemmy.world, for example, trust my tiny instance to be playing by the rules? I went over more details in this other comment.

Sure, right now admins can contact you, for your instance. But you can’t really do that with dozens or hundreds of instances. There’s a ton of instances whose users we tolerate, but would you trust the admin with anonymous votes? Be in constant contact with a dozen instance admins on a daily basis?

It’s a good attempt though. Maybe we’re all pessimistic and it will work just fine!

I can only respond in general terms because you didn’t name any specific problems.

Firstly, remember that each piefed account only has one alt account and it’s always the same alt account doing the votes with the same gibberish user name. If the person is always downvoting or always voting the same as another person you’ll see those patterns in their alt and the alt can be banned. It’s an open source project so the mechanics of it cannot be kept secret and they can be verified by anyone with intermediate Python knowledge.

Regardless, at any kind of decent scale we’re going to have to use code to detect bots and bad actors. Relying on admins to eyeball individual posts activity and manually compare them isn’t going to scale at all, regardless whether the user names are easy to read or not.

> Firstly, remember that each piefed account only has one alt account and it’s always the same alt account doing the votes with the same gibberish user name. It’s an open source project so the mechanics of it cannot be kept secret and they can be verified by anyone with intermediate Python knowledge.

That implies trust in the person that operates the instance. It’s not a problem for piefed.social, because we can trust you. It will work for your instance. But can you trust other people’s PieFed instances? It’s open-source, I could just install it on my server, change the code to make me 2-3 alt accounts instead. Pick a random instance from lemmy.world’s instance list, would you blindly trust them to not fudge votes?

The availability of the source code doesn’t help much because you can’t prove that it’s the exact code that’s running with no modifications, and marking people running modified code as suspicious out of the box would be unfair and against open-source culture.

I also see some deanonymization exploits too: people commonly vote+comment, so with some time, you can do correlation attacks and narrow down the accounts. So to prevent that, you’d have to remove the users mapping 1:1 to a gibberish alt by at least letting the user rotate them on demand, or rotate them on a schedule, and now we can’t correlate votes to patterns anymore. And everyone’s database endlessly fills up with generated alt accounts (that you can’t delete).

> If the person is always downvoting or always voting the same as another person you’ll see those patterns in their alt and the alt can be banned.

Sure, but you lose some visibility into who the user is. Seeing the comments is useful to get a better grasp of who they are. Maybe they’re just a serial fact checker and downvoting misinformation and posting links to reputable sources. It can also help identify if there’s other activity beside just votes, large amounts of votes are less suspicious if you see the person’s also been engaging with comments all day.

And then you circle back to, do you trust the instance admin to investigate or even respond to your messages? How is it gonna go when a big, politically aligned instance is accused of botting and the admin denies the claims but the evidence suggests it’s likely? What do we do with Threads or even a hypothetical Twitter going fediverse, with Elon still as the boss? Or Truth Social?

The bigger the instance, the easier it is to sneak a few votes in. With millions of user accounts, you can borrow a couple hundred of your long inactive users’ alts easily and it’s essentially undetectable.

I’m sorry for the pessimism but I’ve come to expect the worst from people. Anything that can be exploited, will be exploited. I do wish this problem to be solved, and it’s great that some people like you go ahead and at least try to make it work. I’m not trying to discourage anyone from experimenting with that, but I do think those what-ifs are important to discuss before everyone implements it and then oops we have a big problem.

The way things are, we don’t have to put any trust in an instance admin. It might as well not be there, it’s just a gateway and file host. But we can independently investigate accounts and ban them individually, without having to resort to banning whole instances, even if the admins are a bit sketchy. Because of the inherent transparency of the protocol.

Yes. You’re going to have to trust someone, eventually. People can modify the Lemmy source code, too. Well, I can’t because Rust looks like hieroglyphics to me but you get the idea.

I’d rather this than have to trust Lemmy admins not to abuse their access to voting data - https://lemm.ee/comment/13768482

You can even question if the compiled version running on an instance is the same as the version posted to GitHub. There’s no way to even check what’s running on the server you don’t have access to.

Trust is necessary at some level if you’re going to participate on any hosted or federated service as you pointed out.

This is literally already the Lemmy trust model. I can easily just spin up my own instance and send out fake pub actions to brigade. The method detecting and resolving this is no different.

It will be extremely obvious if you see 300 user agents voting but the instance only has 100 active users.
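That sanity check can be sketched as a comparison between distinct voting actors seen from an instance and the number of active users it claims to have. Everything here is an assumption for illustration: the function name, the data structures and the `max_actors_per_user` allowance (set to 2 because a user who toggles private voting can legitimately appear as both a main and an anonymous actor).

```python
def suspicious_instances(voting_actors_by_instance, active_users_by_instance, max_actors_per_user=2):
    """Flag instances with more distinct voting actors than their active users could explain."""
    flagged = {}
    for instance, actors in voting_actors_by_instance.items():
        distinct = len(set(actors))
        active = active_users_by_instance.get(instance, 0)
        if distinct > active * max_actors_per_user:
            flagged[instance] = (distinct, active)
    return flagged


# Hypothetical observations from the receiving instance's point of view.
voting = {
    "tiny.example": [f"actor{i}" for i in range(300)],
    "big.example": ["a", "b", "a"],
}
active = {"tiny.example": 100, "big.example": 5000}
print(suspicious_instances(voting, active))  # {'tiny.example': (300, 100)}
```

The active user count still comes from the instance itself, so this only catches careless inflation; it does not remove the need to trust the remote admin at some level.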

Awesome! This is the exact stopgap implementation I was arguing for, and I’m surprised how many people kept insisting it was impossible. You should try and get this integrated into mainline Lemmy asap. Definitely joining piefed in the meantime though.

Very interesting development, I’ll be curious to see how it ends up working out.

You keep delivering, thank you so much!

Look mom, I’m famous!

Why do you downvote all the stuff anyways?

PieFed shows us that he has an “attitude” of -40%, which I guess means that of 200 catloaf votes 140 will point downwards. So I guess at least it’s nothing personal, he or she is just an active downvoter of things. I guess we all enjoy spending our time differently.
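For anyone who wants to check the arithmetic: the numbers above work out if “attitude” is (upvotes - downvotes) / (upvotes + downvotes). That formula is an assumption that happens to match the guess in this comment, not something confirmed from the PieFed source, and the function names below are made up for the example.

```python
def attitude(upvotes, downvotes):
    """Assumed attitude formula: net votes as a fraction of all votes cast."""
    total = upvotes + downvotes
    if total == 0:
        return None  # no votes cast, so no attitude to report
    return (upvotes - downvotes) / total


def split_votes(total_votes, attitude_score):
    """Invert the assumed formula: return (upvotes, downvotes) for a given total."""
    downvotes = round(total_votes * (1 - attitude_score) / 2)
    return total_votes - downvotes, downvotes


if __name__ == "__main__":
    print(split_votes(200, -0.40))  # (60, 140)
    print(attitude(60, 140))        # -0.4
```

So under that assumption, 200 votes at -40% means 60 up and 140 down.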

A cool potential feature would be weighted downvotes - giving downvotes from users with higher attitude scores (in PieFed terms) greater significance. But I’m derailing.

I’ve always wanted to ask such a person what their deal is. I mean they could be miserable, or one of the people who always complain about everything. Or it’s supposed to be some form of trolling that no one gets… Maybe I shouldn’t ask because it’s not gonna be a healthy discussion… And I don’t care if that happens in an argument. But I really wonder why someone downvotes something like an innocent computer question. Or some comment with correct and uncontroversial advice. Or other people during a healthy conversation. It doesn’t happen often to me, but I had all of that happen. And maybe thoughts like this lead to the current situation. And some people think about exposing such people and some think it should be protected.

And I think weighting the votes is a realistic idea. We could also not count votes of people with bad attitude at all.
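To make the weighting idea a bit more concrete, here is a minimal sketch in Python. None of this exists in PieFed or Lemmy as far as this thread knows: the linear mapping from attitude to weight, the `min_weight` floor and the `ignore_below` cutoff are all invented parameters, and attitude is assumed to be a number between -1 and 1.

```python
def downvote_weight(attitude_score, min_weight=0.25, ignore_below=None):
    """Map an attitude in [-1, 1] to a downvote weight in [min_weight, 1].

    ignore_below: if set, downvotes from voters whose attitude is below
    this cutoff count for nothing (hypothetical option).
    """
    if ignore_below is not None and attitude_score < ignore_below:
        return 0.0
    # Linear scale: attitude -1 gives min_weight, attitude +1 gives 1.0.
    return min_weight + (1.0 - min_weight) * (attitude_score + 1.0) / 2.0


def weighted_score(upvotes, downvoter_attitudes, **weight_options):
    """Score = plain upvote count minus the sum of weighted downvotes."""
    penalty = sum(downvote_weight(a, **weight_options) for a in downvoter_attitudes)
    return upvotes - penalty


if __name__ == "__main__":
    # Ten upvotes, three downvotes from voters with different attitudes.
    attitudes = [-0.40, -0.09, 0.42]
    print(weighted_score(10, attitudes))
    print(weighted_score(10, attitudes, ignore_below=-0.25))
```

With a scheme like this a habitual downvoter still has some influence unless the cutoff is used, which seems like the main design choice to argue about.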

Then again, if there’s a method to it and logic behind it, maybe these active downvoters are doing everybody a favour by screening content and downvoting things they consider to be of little value?

I don’t know. It would be interesting to hear their motivation for sure.

I’ve always wanted to ask such a person what their deal is.

I can’t answer for other people but I’m probably in the “low attitude” group, since my older account is at -9% and the current one at +42%. And at least for me it’s the result of two factors.

One of them is that old Reddit habits die hard. In Reddit I used to have uBlock Origin hiding the voting buttons from the platform, as a way to avoid contributing to it altogether except in ways that subjectively benefitted me, such as commenting (as I’m verbose, I feel good writing). The exception to the above was typically things so stupid/reddit-like/idiotic that I couldn’t help but downvote.

Another is that my “core” values are rather different from what most people in social networks value. As such, a lot of posts/comments are from my PoV overrated (that get downvoted) or underrated (that get upvoted). And due to sorting algorithms I’m seeing high score comments more often, so this yields a higher amount of downvotes.

It’s strange to see one of my posts being used as a reference. All I was trying to do was share something cool.

I do agree though. When up/downvotes (especially downvotes) are fully public, it leads to trolls getting angry and lashing out at individuals in a semi-public way. And if you can see ALL of that individual’s voting patterns, then we get people strategically making tools to go after people that vote certain ways. There’s a reason anonymous voting is a thing outside of the internet as well.

If this goes live in lemmy.world I will be looking at other places to post/interact with. Love lemmy (and contributed to the codebase as a dev) but I can’t be bothered with trolls.

It’s vice versa. In the good old days there was a saying: “don’t feed the troll”. Just block him. Downvoting is just a cheap solution for people who cannot justify their argument. Btw, I love to read downvoted comments which are by default ‘hidden’. Most of them are trash but sometimes it’s a valid point, just not a very popular one.

I’m not sure what you are suggesting. Are you suggesting never using downvotes?

Yes, exactly my thoughts on this. Downvoting is only a measure of crowd censorship based on opinion popularity. If you see some trolls, just block them, but don’t hide their posts from others who may think differently about that person’s views.

Downvotes are part of the whole curation aspect of the site, and it’s a valid part of the democratic system. For all the whining about being “censored” because you got downvoted, there’s countless cases where downvotes influence the sorting algorithm positively.

Garbage shouldn’t sit on the same level as fluff comments no one bothered to vote on.

Millions of flies cannot be mistaken. A democratic mob cannot be mistaken. Mobs have never lynched anybody. How ignorant you are in your ego with your “whining” argument.

So why be on lemmy then? I really don’t agree with showing votes.

I think it’s better to present a valid point against somebody’s statement than to straight-up downvote without giving a reason, “just because I don’t like him”. I think it would create a positive discussion environment in lemmy. We are here after all to exchange ideas on lemmy. Aren’t we?

While engaging in discussion can be beneficial, it’s important to recognize that not everyone is comfortable/interested in debating every point they disagree with. Downvoting allows users to express their disagreement without feeling pressured to engage in a back-and-forth that might not be constructive.

Additionally, some statements may not merit a detailed response, especially if they are inflammatory, misleading, or irrelevant. Encouraging only counter-arguments could lead to an environment where people feel obligated to justify every opinion, which stifles participation rather than promoting a positive discussion.

Is it possible for an instance to send out false vote data that can’t be verified? Lemmy doesn’t seem like a plausible target for it at the moment (and I don’t pretend to know how this works beyond a conceptual level) but I can imagine a bad actor at some point seeking to manipulate voting.

I guess that can happen now anyway as the bad actor can just create their own instance with as many fake accounts as they like. Ultimately it’s still on other instance admins to block the dodgy ones either way.

Yes, a fake instance can spam votes over federation. But usually it’s pretty obvious and easy to block.