Lemmy when discussing health care: Karl Marx

Lemmy when discussing creative works: Ayn Rand

Because I don’t make art to sell, I’d love to train an AI on my pics or songs and then see what it can make when given cool prompts :)

But I’m far from the competitive capitalism scene, so I view such an activity with a sense of wonder rather than as anything to do with losing paid work.

When I was making an Android game I wanted to make art, so I made an AI art generator on Perchance. OP would hate it most of all, since a large part of it is combining different artists’ styles. I personally love being able to combine my 5 favorite artists and see what my prompts turn into with their styles combined.

I recently realized that prompting with the artist Hannah Yata results in cool, trippy pics. I then went to her site, and yeah, her pics really are like that. She’s one of maybe 8 artists I’ve recently found a special connection to that I would not have known about otherwise.

So yeah, AI art may be bad for struggling professional artists, but for people who are not big-money game studios yet, AI art basically makes it possible to legally have non-stock-image art in projects. I can 100% say AI art empowers me to have visuals where I could not have before, unless I used stock (gross) images or had the starting wealth to pay artists. So if you focus on artists losing, also focus on the poor but smart kid in some impoverished place who is now that much more empowered to make something on their phone and legitimately escape poverty.

There was a wealth barrier to visual art; now there isn’t.

Entrenched struggling professional artists cry. People who needed art but weren’t wealthy enough to pay for it win.

When anyone can fabricate drugs at home, drug companies will also cry. People who weren’t wealthy enough to pay for them win.

Same thing.

Poor artists.

But when you’re the one no longer paywalled it’s a different story.

You’re doing the corkboard thing in the post. This requires a lot of specific details and assumptions and benefit of the doubt, none of which can be applied to AI generation writ large.

I’m glad your ends are not nefarious. I’m glad you found a new artist you like. But you have to understand that you are not the norm.

Plagiarism isn’t the problem. This society that makes living so hard that you need to snatch every crumb, that’s the problem.

Great artists have been stealing and sampling since forever. It really isn’t a big deal unless you’re broke.

The way some people defend AI generated images reminds me of the way some people defend the act of tracing other people’s art without the artist’s permission and uploading it while claiming they made it.

Some of that AI art is pretty awesome actually.

It’s not ‘art’ ffs. It’s an image. Sure, it may look nice, but it has 0 meaning, thought, or emotion behind it. This is just another scheme by the rich to milk us some more, as it promotes the idea that art is just a pretty image people pay to look at (consoooom). That’s NOT what art is. Art is made by a living being, go pick up a pencil.

Art theft is the antithesis of awesome.

What if an artist makes awesome art from pieces of other people’s art? Is that awesome or not?

It is. It’s playful and meaningful and awesome. It’s my favourite kind of art. It’s what the biggest artists do. Art works like memes. When you don’t let people derive from each other, you suffocate art.

10 hours of jingling keys to be amazed by on YouTube dot com

I don’t understand what you’re saying.

If you think that images created by a generative model are amazing, you might also be interested in 10 hours of keys jingling.

That might be interesting actually. I wonder what you would feel like after listening to 10 hours of keys jingling.

Where do I get the jingling keys?

Ah, an insult. How novel.

As someone who is largely around the art community, admiring and sharing their work, the fact that I could confuse AI-generated images and thus falsely share or save them has been a huge anxiety of mine ever since 2022.

One easy way to check is to look for JPEG artifacts that don’t make any sense. A lot of the systems were trained on images stored as JPEGs, so the output will have absurd amounts of JPEG artifacting that shows up in ways that make no sense for something that actually went through JPEG compression, such as multiple grids of artifacts that don’t line up, or grids of wildly different scales.
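A rough sketch of the grid check described above, assuming a grayscale image given as a list of rows of pixel values. Baseline JPEG compresses in 8×8 DCT blocks aligned to the image origin (chroma subsampling can make the effective grid 16 px), so in a genuinely compressed image the strongest horizontal jumps tend to fall on one consistent column offset. The function names and the synthetic test image are hypothetical, and this is a crude heuristic, not a reliable AI detector: cropping or resizing a real JPEG also shifts or blurs the grid.

```python
def boundary_strength(img, period=8):
    """For each column offset k in [0, period), return the mean absolute
    difference between columns x-1 and x over all x with x % period == k."""
    sums = [0.0] * period
    counts = [0] * period
    for row in img:
        for x in range(1, len(row)):
            k = x % period
            sums[k] += abs(row[x] - row[x - 1])
            counts[k] += 1
    return [s / c if c else 0.0 for s, c in zip(sums, counts)]


def grid_offset(img, period=8):
    """Return the column offset where block edges are strongest."""
    strengths = boundary_strength(img, period)
    return max(range(period), key=lambda k: strengths[k])


def synthetic_blocks(width, height, shift, period=8):
    """Hypothetical test image: flat blocks `period` px wide whose
    edges sit at columns x where (x - shift) % period == 0."""
    return [[((x - shift) // period) * 10 for x in range(width)]
            for _ in range(height)]


print(grid_offset(synthetic_blocks(64, 4, 0)))  # 0
print(grid_offset(synthetic_blocks(64, 4, 3)))  # 3
```

Running `grid_offset` separately on different regions of one image and getting different offsets is the kind of "grids that don’t line up" inconsistency the comment is pointing at.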

Confuse AI with what?

How do you “falsely share or save”? I think every time I shared or saved something it worked. I could be wrong.

(I’m in art too. Or was. I’m sorta changing these days.)

I’m really bad at noticing small details. Luckily 99% of AI artists use the same art style (with more or less Pixar influence for humans) so I can still spot AI imagery from a mile away

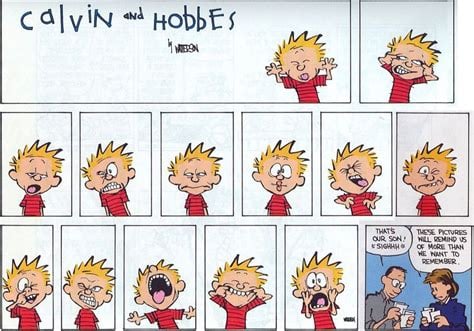

And the face is always one of these.

All of these faces make physical sense, while AI art often doesn’t.

Or you only notice the obvious ones and are oblivious to all the ones you have not recognized

I’ve had moments where they admitted to generating the images in their bio, yet even with that knowledge I could not tell. I reckon this is much more of an issue in the anime art scene, where there are more varied art styles to steal and replicate…

I only consume garbage slop when it’s manmade. A song with 57 kajillion views is real art. A movie with Dwayne Johnson is real art. Only rich people should be able to subject everyone to their limited imagination. Now that regular people can create slop my delicate capitalist machines that shit out content for me to consume are being disrupted. I’m too lazy and dumb to form personal connections with other humans so these fake ass systems are the only way I can get content. And you just can’t tell if it’s human anymore, it’s so sad.

This is an interesting take honestly. A lot of art is made without much care or creativity. That isn’t a bad thing. So why should AI “art” be considered inherently bad?

AI plagiarism wouldn’t be a problem if it weren’t for intellectual property and capitalism. Ironically, the status quo of AI art being public domain is absolutely based, as the fruits of our stolen labor belong to us. The communists and anarchists should totally make nonprofit AI art that nobody is allowed to own. Reclaiming AI would be awesome!

Unfortunately, tech bros want to enslave all artists along with the rest of the workers, so they’ll rewrite copyright law to turn AI into their exclusive property. It’ll be an exception with no justification besides “greed=good”

It’s random slop shat out by a machine. Art requires a living, breathing human with thoughts, emotions, and experiences, otherwise it’s just a pile of shit.

AI is a tool. The product can be random slop if you give it sloppy instructions, or someone can use it to realize a great artistic idea they would not be able to make real otherwise. The pictures don’t just generate themselves, you know? It’s living people who tell the machine what’s on their minds. If your mind is creative, the results can be good.

It’s only immoral, not inherently of lower quality. Aesthetics and ethics aren’t about what actually is, but about what should be. Even if an AI and a person produce the same image, the AI isn’t a living, breathing human. AI art isn’t slop because of its content, but because of the economic context. That’s a far better reason to hate it than its mistakes and shortcomings.

AIs take away attribution as well as copyright. The original authors don’t get any credit for their creativity and hard work. That is an entirely separate thing from ownership and property.

It is not at all OK for an AI to take a work that is in the public domain, erase the author’s identity, and then reproduce it for people, claiming it as its own.

AI can do much more than “reproduce”.

Is one of those things giving attribution? If I ask for a picture of Mount Fuji in the style of a woodblock print, can the AI tell me what its inspirations were?

it can tell you its inspiration about as well as Photoshop’s content-aware fill can, because it’s sort of the same tech, just turned up to 11. but it depends.

if a lot of the training data is tagged with the name of the artist, and you use the artist’s name to get that style, and the output looks made by that artist, you would be fairly sure who to attribute. if not, you would have to do a mathematical analysis of the model. that’s because it’s not actually associating text with images, the text part is separate from the image part and they only communicate through a sort of coordinate system. one part sees text, the other sees shapes.
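A toy sketch of the "coordinate system" idea, assuming a shared vector space where text and images both map to points and closeness is measured by cosine similarity (CLIP-style contrastive models are one well-known example of this setup). The three-dimensional vectors and labels below are made up for illustration; real models learn hundreds of dimensions from data. Note what it can and cannot do: it tells you which prompt direction an output sits near, not which training images shaped it, which is why real attribution would need the deeper analysis mentioned above.

```python
import math


def cosine(a, b):
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical coordinates a text encoder might assign to style prompts.
text_space = {
    "woodblock print": [0.9, 0.1, 0.0],
    "oil painting": [0.1, 0.9, 0.1],
    "pixel art": [0.0, 0.2, 0.9],
}


def closest_prompt(image_vec, space):
    """Return the text label whose vector is most similar to image_vec."""
    return max(space, key=lambda label: cosine(space[label], image_vec))


print(closest_prompt([0.8, 0.2, 0.1], text_space))  # woodblock print
```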

also, the size of the training dataset compared to the size of the finished model means that there is less than one bit stored per full image. the fact that some models can reproduce input images almost exactly is basically luck, because none of the original image is in there. it just pulls together everything it knows to build something that already exists.
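The bits-per-image claim is plain division: model size in bits over the number of training images. A minimal sketch, with the caveat that the result depends entirely on the real checkpoint size and dataset count, which the comment doesn’t give, and that it is only an average capacity figure that says nothing about how the weights are actually used. The numbers in the usage line are a hypothetical break-even case, not figures for any real model.

```python
def bits_per_training_image(model_bytes, num_images):
    """Average model capacity per training image, in bits (8 bits per byte)."""
    return model_bytes * 8 / num_images


# Hypothetical break-even: a 1 GB model needs 8 billion training images
# before the average drops to one bit per image.
print(bits_per_training_image(10**9, 8 * 10**9))  # 1.0
```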

Even in a hypothetical utopia, the thought of a sea of slop drowning the creative world makes my skin crawl. Imagine putting your heart and soul into something only to watch some machine liquify it into an ugly paste in a nanosecond, then it goes on to do the same thing a million times in a row. It’s hard enough to get noticed in this world, and now every passion project has to compete with the diseased inbred freak clones of other passion projects? It makes me feel so goddamn angry that some asshole felt the need to invent such a thing, and for what? What problem does it solve? Why do you need to use up a city’s worth of water to make a six-fingered Sailor Moon?

I generally agree (especially with the current critique of using up water/power just for one image)

But I can’t get behind “this tool will make people who don’t use it feel bad”. The same arguments were levied against Photoshop and now it’s a tool in the arsenal. The same arguments were levied against the camera. And I could see the same argument against the printing press (save those poor monks doing calligraphy)

The goal of “everything shall be AI” is fucked and clearly wrong. That doesn’t mean there isn’t any use for it. People who wanna crank out slop will give up when there’s no money in it and it doesn’t grant them attention.

And I say this as someone who despises how every website has an AI chatbot popping up when I visit their site and every search engine is offloading actually visiting and reading pages to AI summaries

This is where I’m coming from. Generative AI is pretty cool and useful, but it has severe limitations that most people don’t comprehend. Machine learning can automate countless time consuming tasks. This is especially true in the entertainment industry, where it’s just another tool for production to use.

What businesses fail to understand is that it cannot perform deductive tasks without inevitably making errors. It can only give probable outputs, not outputs that must be correct based on the input. It goes against the very assumptions we make about computer logic, as it doesn’t work on deductive reasoning.
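A deliberately simplified sketch of the deductive vs. probabilistic difference. The `add` function always returns the one correct answer; the sampler turns scores into probabilities with a softmax (a common decoding approach) and picks in proportion to them, so a less likely and wrong answer can still come out. The candidates and scores are hypothetical, and real language models sample over tokens, not whole answers.

```python
import math
import random


def add(a, b):
    # Deductive: the same inputs always give the one correct answer.
    return a + b


def softmax(scores, temperature=1.0):
    """Turn raw scores into probabilities. temperature must be > 0;
    lower values sharpen the distribution toward the top score."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_answer(candidates, scores, rng, temperature=1.0):
    # Probabilistic: picks a candidate in proportion to its probability.
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


candidates = ["4", "5", "22"]
scores = [3.0, 1.0, 0.5]  # hypothetical model scores for "2 + 2 = ?"
rng = random.Random(0)
print(add(2, 2))  # 4
print([sample_answer(candidates, scores, rng) for _ in range(10)])  # usually "4", not always
```

Lowering the temperature makes "4" more likely but never turns sampling into a guarantee, which is the gap the comment describes.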

Generative AI works by emulating biological intelligence, taking principles of neuroscience to solve problems quickly and efficiently. However, this gives AI similar weaknesses to our own minds, imagining things and baking in bias. It can never give the accurate summaries Google hopes it can, as it will only ever tell us what it thinks we want to hear. They keep misusing it in ways that either waste everyone’s time, or do serious harm.

I’m sorry, but if your argument is “AI is doomed because current LLMs are only good at fuzzy, probabilistic outcomes”, then you do not understand current AI or computer science or why computer scientists are impressed by modern AI.

Discrete, concrete logic is what computers have always been good at. That is easy. What has been difficult is finding a way for computers to address fuzzy, pattern-matching, probabilistic problems. The fact that neural networks are good at those is precisely what has computer scientists excited about AI.

I’m not saying it’s doomed! I literally said that it’s cool and useful. It’s a revolutionary technology in many respects, but not for everything. It cannot replace the things computers have always been good at, but business people don’t seem to realize that. They assume that it can fix anything, not understanding that it will only make certain things worse. The trade-off is counterproductive for tasks where you need consistent indexing.

For instance, Google’s search AI turns primary sources into secondary or tertiary sources by trying to cut corners. I have zero trust in anything it tries to tell me, while all the problems it had before AI have continued to worsen. They could’ve used machine learning to better understand search queries, or diversify results to compensate for vagueness in language, or to fucking combat SEO, but they instead clog up the results with even more bullshit! It’s a war against curiosity at this point! 😫

Eh. Without the economic incentive, we wouldn’t be getting a sea of slop. The energy concerns are very real though.

You sound like my grandparents complaining about techno musicians sampling music instead of playing it themselves.

Good art can be created with any medium. You view AI as replacing art, future musicians will understand it and use it to create art.

Yep this was inevitable.

The sad thing is there is currently a vibrant open source scene around generative AI. There is a strong media campaign against it, meant to manipulate the general population into clamoring for stronger copyright laws.

This won’t lead to these tools disappearing, it will just force them behind pricey and censored subscription models while open source options wither and die.

They do indeed want to enslave us, and will do it with the help of people like OP.

IP, like every part of capitalism, has been totally turned against the artists it claimed to protect. If they want it to only be a chain that binds us, we need to break it. They had their chance to make it work for workers, and they squashed it. If we can’t buy into the system, we have every reason to oppose it.

On a large scale, this will come in the form of “crime,” not revolutionary action. With no social contract binding anyone voluntarily, people will do what they must to serve their own interests. Any criminal activity that weakens the system more than the people must be supported wholeheartedly. Smuggling and theft from the wealthy (true Robin Hood marks) are worthy of support. Vengeance from those scarred by the system is more justice than state justice. Revolution isn’t what the fat cats need to fear.

I need someone to train with. You or anyone else in WV?

Western Victoria?

Nah I’m in the US.

We name a lot of shit after the people who used to live here, instead of a fucking monarch.

WV used to live near you? Who’s that?

How do you continue to be so awesome and wise? Teach me your ways.

EleutherAI

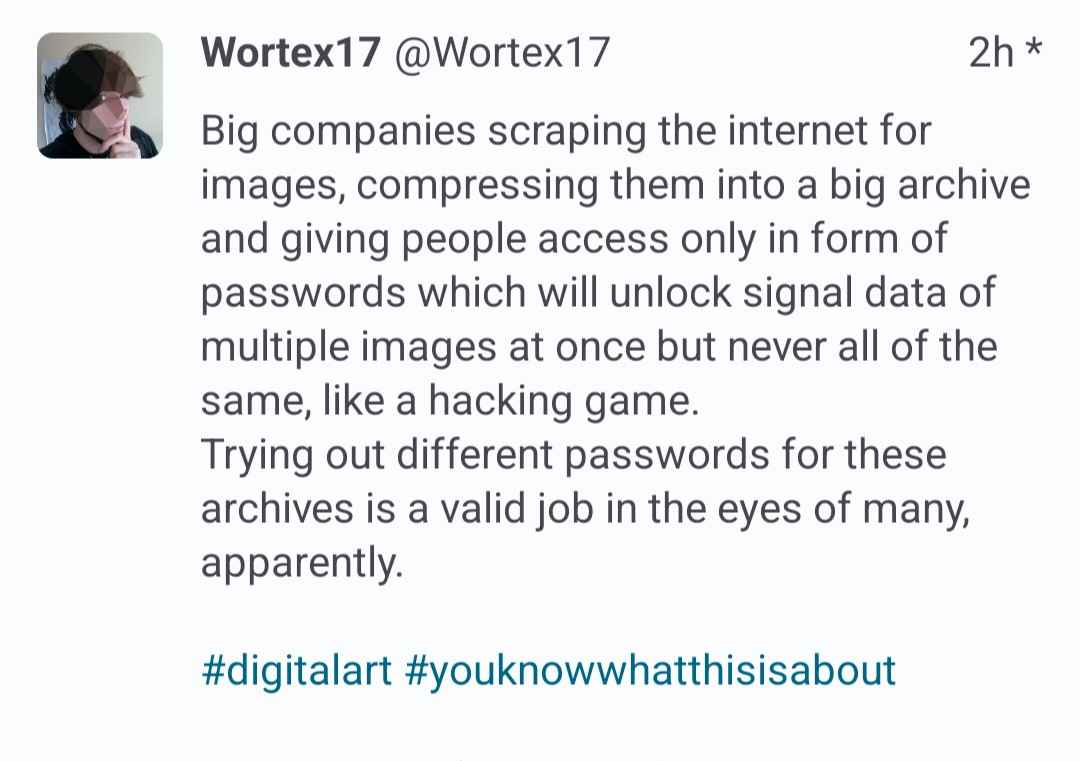

If you look at it from this perspective, it sounds way more obvious. I like this PoV.

It’s not just compressing images in an archive. The AI model is trained off the data, but it doesn’t contain the data.

That is one hell of a garden path sentence

Tech bro: I don’t know you stranger. But here is the source code of my lifelong project, have fun and do whatever you want with it

Etsy Artist: NO, you cannot have the raw files of your wedding pictures, are you insane? THOSE ARE MINE AND ONLY MINE! I want to be paid any time you vaguely look in the direction of anything I’ve done, FOREVER!

But you are telling me the former is the greedy bad guy and the latter is the light of the revolution or something.

I’ll go all in:

Yes, art has always been derivative. One artist inspires the other, borrows from the other, reacts to the other. That’s the way it works. The copyright laws we have now are pushing all life out of art in the name of making money.

I’m inclined to say that TechBros are usually not the ones who give their work away for free*, and they really care more about profits than anything.

* There are a multitude of ways to provide something while making sure it’s useless. For AI models, that usually takes the form of providing the source code but not the training data or architecture, so that you’d need to do most of the work again. A lot of them don’t even do that.

Please note, this comment is off-topic for the OP’s post and is only about your idealistic view of TechBros.

Tech bro: I don’t know you stranger. But here is the source code of my lifelong project, have fun and do whatever you want with it

Hello Spez. Oh hi Mark, is this guy talking about you?

Yeah, you need to be the right kind of neural network before you’re allowed to learn from other artists.

Unironically yes. Art is a part of the human condition. If you think that’s something that should be automated, then you don’t understand why art has value. Doubly so considering you feel keen on dehumanizing the people who make it. Humans have hopes and dreams. Computers don’t.

Art can have value to both its creator and its viewer. Present-day AIs have no self-awareness and cannot derive any value of that sort from the art they create, but that art’s human viewers can still derive value from it. Humans already derive value from viewing beautiful things (sunsets, flowers, etc.) which have no self-aware creator (unless you’re religious).

With that said, the topic here is plagiarism, with the implication (if I understand you correctly) that an AI cannot create anything truly original because it does not experience the human condition. I don’t think that’s the case, but even if it is then “truly original” is still a very high standard that most human art does not meet. If I paint a ballerina in the style of Degas, I have created something with little artistic worth but that doesn’t imply that I have plagiarized Degas. Why should an AI be held to a higher standard than that?

Because it’s not a human and possesses no self-awareness. Humans take inspiration, machines copy. When people tell stories, they have to think about what they’re doing and why. Everything in a work of fiction is intentionally put there by the author. Computer programs do what they are programmed to do, which in this case is copy shit other people made. That’s what it’s designed to do. You’re speaking about the technology as if it were anything more than that, as if it were a person who was capable of knowing the difference. It doesn’t know the meaning of terms like “homage” or “adaptation”. It does not think about what it spits out at all. Its sole function is to do what you ask of it, and it does that using data stolen from other people. That’s not even getting into the whole spyware thing tech bros keep trying to normalize.

You cannot be both pro-art and pro-“AI”. Full fucking stop.

To create an AI image there must be a human being with an idea. The human being wants to create a Degas style ballerina. The idea was created by their organic brain. Then they take some tool and make the idea come true. The tool can be a brush with paint or AI.

Art is about how it was made, not about the emotions it elicits from the viewer.

I’ll add that the way art is made is craft, not art. Art is the idea behind the craft. AI skips the crafty part, not the art part.

Why not both?

Because it’s gatekeeping. Making a blanket statement that a piece can’t be considered art because of certain tools used in its making goes against the whole principle, imo.

My comment above is sarcastic btw, I’m not sure if it came off that way. I’m mocking his “you can’t be pro ai and pro art” bit and his whole rant in general. I find it completely asinine when people try to define art to suit their purposes and draw lines between what is or isn’t.

Ah, the artist’s favorite pastime, drawing arbitrary lines.

It could rule tho. It just needs more development. We didn’t put men on the moon in a day.

It could give prettier results but that doesn’t solve the ethical issues (and even for the prettier part I can see there being fundamental limits)

Raising ethical issues in this culture is like handing out speeding tickets at a racetrack.

Also, we’ve married technology. We couldn’t stop its progress even if we wanted to.

It’s a computer program that turns the hard work of artists and utterly ridiculous amounts of water into samey, uncanny abominations. To compare that to the moon landing? I don’t even have words for that.

To compare that to the moon landing?

I don’t think they’re comparing it to the moon landing. If I say “Rome wasn’t built in a day” I’m not talking about Rome.

Then say that instead. That’s a common saying most people are aware of. I’ve seen Silicon Valley types promise all kinds of shit before, so this just fits right in.

Removed by mod

AI stuff is banned in this community.

AI generated art doesn’t meet the definition of plagiarism though?

plagiarize: to steal and pass off (the ideas or words of another) as one’s own; use (another’s production) without crediting the source.

Since almost no one actually consented to having their images used as training data for generative art, and since it never credits the training data that was referenced to train the nodes used for any given generation, it is using another person’s production without crediting the source, and thus is textbook plagiarism.

Every living artist uses other people’s art as training data without their consent. That’s the way art works, and it’s ok. Please, let’s not decide that every artist has to pay for every piece of art they ever laid eyes on before they’re allowed to create art themselves.

So does that mean that any artist which has viewed another piece of art and learned from it, and used that knowledge in their own works, has therefore committed plagiarism by not asking for permission or crediting every work they’ve ever seen?

I’m an author and one of the most common pieces of advice for authors is to read more. Reading other authors’ works teaches a lot about word choice, character development, world building, etc. How is that any different from an AI model learning from art pieces to make its own?

plagiarize: to steal and pass off (the ideas or words of another) as one’s own; use (another’s production) without crediting the source.

Since almost no one actually consented to having their images used as training data for generative art, and since it never credits the training data that was referenced to train the nodes used for any given generation, it is using another person’s production without crediting the source, and thus is textbook plagiarism.

AI systems like generative art models are trained on large datasets to recognize patterns, styles, and structures, but the output they create does not directly copy or reproduce the original data. Instead, the AI generates new works by synthesizing learned features. This is more akin to how a human artist might create something inspired by various influences. If the generated image does not directly replicate any specific piece of the training data, it cannot be considered “using another’s production without crediting the source.”

Also AI platforms like Midjourney do not “reference” specific works in a way that can be credited. The training process distills millions of examples into mathematical representations, not a library of individual artworks. Crediting every source is not only infeasible and impractical, it is also not analogous to failing to attribute a specific inspiration or idea, which is a cornerstone of plagiarism.

Plagiarism is defined in academic settings very precisely. Getting ideas and structure from others rarely meets the standard. Why? Because we do this all the time. Also, plagiarism is 100% legal, because of course it is! Imitation is often a good thing.

Get over it already.

Honestly, I get it. Getting mad about generative AI technology existing gives the same vibes as the people who were mad about Napster existing. Like… sorry friend, cat is out of the bag. We have to learn how to live with it now.

Is that cat out of the bag? The original Napster was much easier than piracy today IMO.

Maybe when it isn’t an active threat to me having food.

Once I stop seeing this garbage daily, sure~

The buzz over AI art and especially AI writing? Sure did! Lots of snake oil there, not so interesting.