- cross-posted to:

- videos

- jpegxl

- [email protected]

TL;DW: JPEG is getting long in the tooth, which prompted the creation of JPEG XL, a fairly future-proof new compression standard that can compress images to the same file size as regular JPEG or smaller, at massively higher quality.

However, JPEG XL support was removed from Chrome and other Chromium-based browsers in favor of AVIF, a standalone image format derived from the AV1 video codec. AVIF is decidedly not future-proof: it has some hard-coded limitations and lacks some very nice-to-have features that JPEG XL offers, such as progressive image loading and lower hardware requirements. As a result, JPEG XL adoption will be severely hamstrung by Google’s decision, which is ultimately pretty lame.

This is why Google keeps getting caught up in monopoly lawsuits.

Modern Google is becoming the Microsoft of the 90s.

And they’ll make eleventy bajillion dollars in the meantime, plenty of money to pay their inevitable punitive “fines.”

Hell, old MS’s penalty was giving away free licenses in markets it never had a grip on, so its “lock ’em in!” model meant the “penalty” benefited them!

Which is funny and sad, because Microsoft is also the Microsoft of the 90s.

Microsoft is still like this.

I tried JPEG XL and it didn’t even make my files extra large. It actually made them SMALLER.

False advertising.

I think you took the wrong enlargement pill.

Just set the pills to wumbo.

Jpeg XL isn’t backwards compatible with existing JPEG renderers. If it was, it’d be a winner. We already have PNG and JPG and now we’ve got people using the annoying webP. Adding another format that requires new decoder support isn’t going to help.

“the annoying webp” AFAIK has the same problem as JPEG XL: apps just didn’t implement it.

It is supported in browsers, which is good, but not in third party apps. AVIF or whatever is going to have the same problem.

Jpeg XL isn’t backwards compatible with existing JPEG renderers. If it was, it’d be a winner.

According to the video, and this article, JPEG XL is backwards compatible with JPEG.

But I’m not sure if that’s all that necessary. JPEG XL was designed to be a full, long term replacement to JPEG. Old JPEG’s compression is very lossy, while JPEG XL, with the same amount of computational power, speed, and size, outclasses it entirely. PNG is lossless, and thus is not comparable since the file size is so much larger.

JPEG XL, at least from what I’m seeing, does appear to be the best full replacement for JPEG (and it’s not like they can’t co-exist).

It’s only backwards compatible in that it can re-encode existing jpeg content into the newer format without any image loss. Existing browsers and apps can’t render jpegXL without adding a new decoder.
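To make that distinction concrete: many decoders identify a file by its leading signature (“magic”) bytes, and a JPEG XL file, including one losslessly transcoded from a JPEG, does not start with the JPEG signature, so a decoder that predates JPEG XL just sees an unrecognized file. A minimal Python sketch, assuming the published signatures noted in the comments; `sniff_image_format` and `legacy_can_decode` are hypothetical helpers, not any library’s API, and real sniffers check more than this.

```python
def sniff_image_format(data: bytes) -> str:
    """Guess an image format from its leading signature bytes."""
    if data.startswith(b"\xff\xd8\xff"):  # JPEG SOI marker
        return "jpeg"
    if data.startswith(b"\x89PNG\r\n\x1a\n"):  # 8-byte PNG signature
        return "png"
    if data[:6] in (b"GIF87a", b"GIF89a"):
        return "gif"
    if data[:4] == b"RIFF" and data[8:12] == b"WEBP":
        return "webp"
    # JPEG XL: a bare codestream starts with FF 0A; the container format
    # starts with a 12-byte "JXL " signature box.
    if data.startswith(b"\xff\x0a"):
        return "jxl"
    if data.startswith(b"\x00\x00\x00\x0cJXL \r\n\x87\n"):
        return "jxl"
    # AVIF: ISOBMFF "ftyp" box with an AVIF major brand (a full parser
    # would also inspect the compatible-brands list).
    if data[4:8] == b"ftyp" and data[8:12] in (b"avif", b"avis"):
        return "avif"
    return "unknown"


# Formats a hypothetical decoder written before JPEG XL existed would know.
LEGACY_DECODABLE = {"jpeg", "png", "gif"}


def legacy_can_decode(data: bytes) -> bool:
    """True if the hypothetical legacy decoder recognizes the file."""
    return sniff_image_format(data) in LEGACY_DECODABLE
```

In this model the new format can ingest old JPEG data (backwards compatible), but `legacy_can_decode` returns `False` for any JXL file, which is the missing forwards compatibility on the old-decoder side.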

Existing browsers and apps can’t render jpegXL without adding a new decoder.

Why is that a negative?

Legacy client support. Old devices running old browser code can’t support a new format without software updates, and that’s not always possible. Decoding jxl on a 15-year-old device that’s not upgradable isn’t good UX. Sure, you can probably work around that on many of them with JavaScript decoding, but it’ll be slow and processor-intensive. Imagine decoding jxl on a low-power ARM device or something like a Celeron from the early 2010s and you’ll get the idea: it will not be anywhere near as fast as good old jpeg.

But how is that different from any other new format? Wasn’t WebP the same?

Google rammed webp through because it saved them money on bandwidth (and time during page loading) and because they controlled the standard. They’re doing the same thing with jpeg now that they control jpegli. Jpegli directly lifts the majority of features from jpegxl and google controls that standard.

That’s a good argument, and as a fan of permacomputing and reducing e-waste, I must admit I’m fairly swayed by it.

However, are you sure JPEG XL decode/encode is more computationally heavy than JPEG to where it would struggle on older hardware? This measurement seems to show that it’s quite comparable to standard JPEG, unless I’m misunderstanding something (and I very well might be).

That wouldn’t help the people stuck on an outdated browser (older, unsupported phones?), but for those who can change their OS, like owners of older PCs, a modern Linux distro with an updated browser would still allow that old hardware to decode JPEG XL images fairly well, I would hope.

Optimized jpegxl decoding can be as fast as jpeg, but only if the browser supports the format natively. If you’re trying to bolt jxl decoding onto a legacy browser, your options become JavaScript and WASM decoding. WASM can be as fast, but browsers released before roughly 2017 don’t support it and need to use JavaScript to do the job. Decoding jxl in JavaScript is, let’s just say, not fast, and it’s not guaranteed to work on legacy browsers and older machines. Additionally, any of these bolt-on mechanisms require sending the decoder package on page load, so unless you’re able to load it from the user’s cache, you pay the bandwidth/time price of downloading and initializing the decoder code before images even start to render on the page. Ultimately, bolting on support for the new format just isn’t worth the cost of implementation in many cases, so sites usually implement a fallback to the older format as well.
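One common way to do that fallback without shipping a decoder is server-side content negotiation: serve the JXL variant only to clients that list `image/jxl` in their `Accept` request header, and plain JPEG to everyone else. A minimal Python sketch, assuming every image is stored in both formats; `choose_image_variant` and the file suffixes are hypothetical, and q-values (including `q=0` exclusions) are ignored for simplicity.

```python
def choose_image_variant(accept_header: str) -> tuple[str, str]:
    """Pick which stored variant of an image to serve.

    Returns (content_type, file_suffix). Assumes each image exists as both
    a hypothetical .jxl file and a .jpg fallback.
    """
    # Parse e.g. "image/avif,image/webp,*/*;q=0.8" into bare media types.
    offered = {
        part.split(";")[0].strip().lower()
        for part in accept_header.split(",")
        if part.strip()
    }
    if "image/jxl" in offered:
        return "image/jxl", ".jxl"
    # Every image-capable client can be assumed to handle baseline JPEG.
    return "image/jpeg", ".jpg"
```

A server doing this should also send `Vary: Accept`, so shared caches don’t hand a cached JXL response to a client that can’t decode it.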

WebP succeeded because Google rammed the format through, and they did that because they controlled the standard. You’ll see the same thing happen with the jpegli format next: it lifts the majority of its feature set from jpegxl, and Google controls the standard.

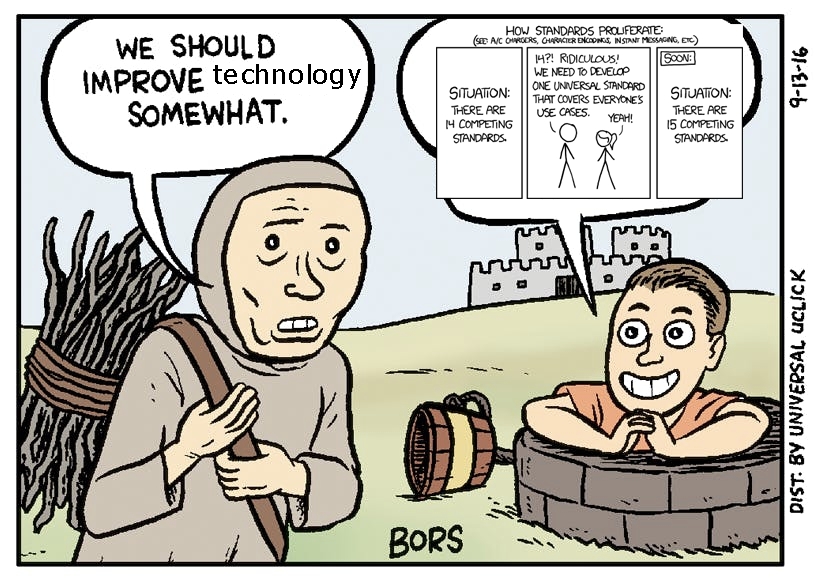

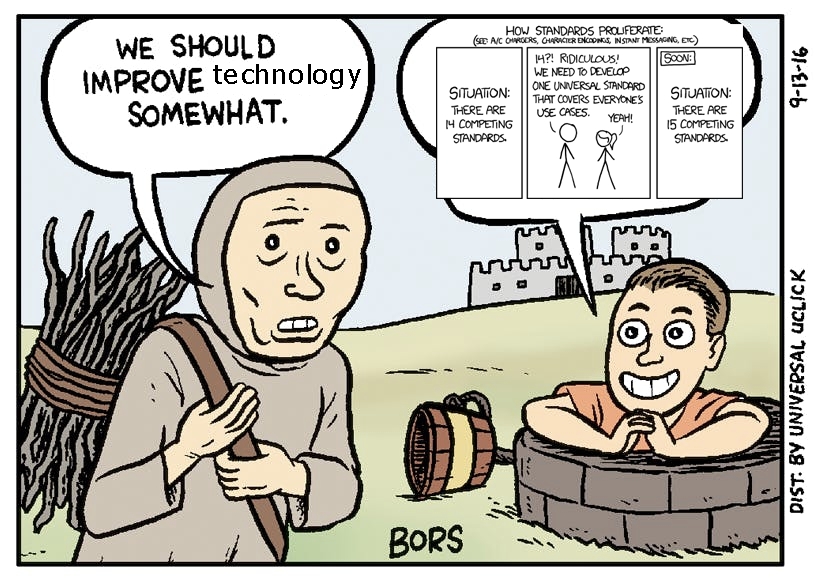

The video actually references that comic at the end.

But I don’t see how that applies in your example, since both JPEG and JPEG XL existing in parallel doesn’t really have any downsides, it’d just be nice to have the newer option available. The thrust of the video is that Google is kneecapping JPEG XL in favor of their own format, which is not backwards compatible with JPEG in any capacity. So we’re getting a brand new format either way, but a monopoly is forcing a worse format.

The other thing is, disk space and internet speeds just keep getting cheaper so… Why change just for disk space?

Adding more decoders means more overhead in code size, project dependencies, maintenance, and developer bandwidth, and a higher potential for security vulnerabilities.

The alternative is to never have anything better, which is not realistic.

Yes, it means more code, but that’s an inevitability. We already have lots of legacy stuff, like, say, floppy disk drivers.

A balance has to be struck. The alternative isn’t not getting anything better, it’s being sure the benefits are worth the costs. The comment was “Why is [adding another decoder] a negative?” There is a cost to it, and while most people don’t think about this stuff, someone does.

The floppy code was destined to be removed from Linux because no one wanted to maintain it and it had such a small user base. Fortunately, I think some people stepped up to look after it, but its removal could have made preserving old software significantly harder.

If image formats get abandoned, browsers are going to face hard decisions as to whether to drop support. There has to be some push-back to over-proliferation of formats or we could be in a worse position than now, where there are only two or three viable browser alternatives that can keep up with the churn of web technologies.

I mean, the comic is even in the OP. The whole point is that AVIF is already out there, like it or not. I’m not happy about Google setting the standards, but that has to be supported. Does JPEG XL cross the line where it’s really worth adding in addition to AVIF? It’s easy to say yes when you’re not the one supporting it.

They’re confusing backwards and forwards compatible. The new file format is backwards compatible but the old renderers are not forward compatible with the new format.

JPEG XL in lossless mode actually gives around 50% smaller file sizes than PNG.

Oh damn, even better than the estimates I found.

My understanding is that webp isn’t actually all that bad from a technical perspective, it was just annoying because it started getting used widely on the web before all the various tools caught up and implemented support for it.

It’s certainly not bad. It’s just not quite as good.

I just wish more software would support webp files. I remember Reddit converting every image to webp to save on space and bandwidth (smart, imo) but not allowing you to directly upload webp files in posts because it wasn’t a supported file format.

If webp was just more standardized, I’d love to use it more. It would certainly save me a ton of storage space.

So… your solution is to stick with extremely dated and objectively bad file formats? You using Windows 95?

What’s wrong with PNG?

For what it is? Nothing.

Compared to something like JPEG XL? It is hands down worse in virtually all metrics.

I think this might sound like a weird thing to say, but technical superiority isn’t enough to make a convincing argument for adoption. There are plenty of things that are undeniably superior but yet the case for adoption is weak, mostly because (but not solely because) it would be difficult to adopt.

As an example, the French Republican Calendar (and the reformed calendar with 13 months) are both evidently superior to the Gregorian Calendar in terms of regularity but there is no case to argue for their adoption when the Gregorian calendar works well enough.

Another example: metric time. It was proposed as part of the metric system around the same time the system was gaining ground, and 100 seconds in a minute and 100 minutes in an hour definitely makes more sense than 60, but it would be ridiculous to say that we should devote resources to switching to it.

Final example—arithmetic in a dozenal (base-twelve) system is undeniably better than in decimal, but it would definitely not be worth the hassle to switch.

For similar reasons, I don’t find the case for JPEG XL compelling. Yes, it’s better in every metric, but when the difference comes down to a measly one or two megabytes compared to PNG and WEBP, most people really just don’t care enough. That isn’t to say that I think it’s worthless, and I do think there are valid use cases, but I doubt it will unseat PNG on the Internet.

I’m not under the impression it would unseat PNG anytime soon, but “we have a current standard” isn’t a good argument against it. As images get higher and higher quality, it’s going to increase the total size of images. And we are going to hit a point where it matters.

This sounds so much like the misquoted “640K ought to be enough for anybody” that I honestly can’t take it seriously. There’s a reason new algorithms, formats and hardware are developed and released, because they improve upon the previous and generally improve things.

My argument is not “we have a current standard”, it’s “people don’t give enough of a shit to change”.

You’re thinking in terms of the individual user with a handful of files.

When you look at it from a server point of view with tens of terabytes of images, or as a data center, the picture is very different.

Shaving 5 or 10% off of files is a huge deal. And that’s not even taking into account the huge leap in quality.

jpeg xl lossless is around 50% smaller than pngs on average, which is a huge difference

https://siipo.la/blog/whats-the-best-lossless-image-format-comparing-png-webp-avif-and-jpeg-xl

So they added WebP and AVIF, which aren’t that much better than old JPEG, especially with the modern JPEG encoder Jpegli. But JPEG XL is out of the question.

Those examples all have a good reason that does not apply here. Browsers already support multiple formats and added a few in the last decade.

I use arch btw

Compared to something like JPEG XL? It is hands down worse in virtually all metrics.

Only thing I can think of is that PNG is inherently lossless. Whereas JPEG XL can be lossless or lossy.

I haven’t dug into the test data or methodology myself but I read a discussion thread recently (on Reddit - /r/jpegxl/comments/l9ta2u/how_does_lossless_jpegxl_compared_to_png) - across a 200+ image test suite, the lossless compression of PNG generates files that are 162% the size of those losslessly compressed with JPEG XL.

However, I also know that some tools compress PNG poorly, and there’s no certainty that those weren’t used.
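The thread quotes the lossless gap in different forms (PNG at 162% of JPEG XL’s size in that test suite, “roughly 35%” and “around 50%” smaller in other comments), and converting “X% the size” into “Y% smaller” is easy to get backwards. A small Python helper, hypothetical and for illustration only, shows that 162% corresponds to roughly 38% smaller, while “50% smaller” would mean PNG files at 200% of the JXL size. It says nothing about which test set is more representative.

```python
def savings_from_ratio(png_pct_of_jxl: float) -> float:
    """Turn "PNG files are X% the size of JPEG XL files" into the
    fraction by which the JPEG XL files are smaller than the PNGs."""
    return 1 - 100 / png_pct_of_jxl


for pct in (162, 200):
    print(f"PNG at {pct}% of JXL -> JXL is {savings_from_ratio(pct):.1%} smaller")
# PNG at 162% of JXL -> JXL is 38.3% smaller
# PNG at 200% of JXL -> JXL is 50.0% smaller
```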

It has a higher bit depth at orders of magnitude less file size. Admittedly it has a smaller max dimension, though the max for PNG is (I believe) purely theoretical.

So what you’re saying is that I should save everything as a BMP every time. Why compress images when I can be the anchor that holds us in place?

By us, I guess I mean the loading bar.

Compared to something like JPEG XL? [PNG] is hands down worse in virtually all metrics.

Until we circle back to “Jpeg XL isn’t backwards compatible with existing JPEG renderers. If it was, it’d be a winner.”

APNG, as an example, is backwards compatible with PNG.

If JPEG-XL rendered a tiny fallback JPEG (think quality 0 or even more compression) in browsers that don’t support JPEG-XL, then sites could use it without having to include a fallback option themselves.
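Since JPEG XL files carry no embedded JPEG fallback, sites that serve JXL today typically list the fallback themselves, for example with an HTML `<picture>` element: the browser uses the first `<source>` whose `type` it supports and otherwise falls back to the `<img>`, and browsers that predate `<picture>` ignore it and render the `<img>` directly. A minimal Python sketch that builds such markup; `picture_with_fallback` and the file naming are hypothetical.

```python
from html import escape


def picture_with_fallback(stem: str, alt: str) -> str:
    """Build a <picture> element offering JPEG XL with a JPEG fallback.

    Assumes hypothetical files named <stem>.jxl and <stem>.jpg exist.
    """
    s = escape(stem, quote=True)
    return (
        "<picture>"
        f'<source srcset="{s}.jxl" type="image/jxl">'
        f'<img src="{s}.jpg" alt="{escape(alt, quote=True)}">'
        "</picture>"
    )


print(picture_with_fallback("cat", "A cat"))
```

This is the “include a fallback option themselves” cost the comment describes: two stored files per image plus the extra markup.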

Why are you using PNG when it’s not backwards compatible with gif? They don’t even render a small low quality gif when a browser which doesn’t support it tries to load it.

Are you seriously asking why a commonly supported 27 year old format doesn’t need a fallback, but a 2 year old format does?

Honest question, does JPEG XL support lossless compression? If so, then it’s probably objectively better than PNG. My understanding with JPEG is that there was no way to actually have lossless compression, it always compressed the image at least a little.

JPEG XL supports lossless compression with a roughly 35% reduction in file size compared to PNG.

Lovely! 🤌

That’s why all my files are in TGA.

Forgive my ignorance, but isn’t this like complaining that a PlayStation 2 can’t play PS5 games?

It’s a different culture between PCs and consoles. Consoles are standardized computers - they all have the same* hardware. Game developers can be confident in what functionality their games have access to, and so use the best they can.

PCs in comparison are wildly different from user to user due to being modular: you can pick from many parts to create a computer. As such, devs tend to focus on what most PCs can do and make them optionally better if the PC has access to supporting hardware (e.g. RTX ray-tracing cores).

Besides, video games are drastically complex in comparison to static images 😛

All the cool kids use .HEIF anyway

I use jpeg 2000

You can’t add new and better stuff while staying compatible with the old stuff. Especially not when your goal is compact files (or you’d just embed the old format).

Isn’t that the same as other newer formats though?

There’s always something new, and if the new thing is better, adding/switching to it is the better move.

Or am I missing something about the other formats like webp?

You have to offer something compelling for everyone. Just coming out with yet another new standard™ isn’t enough. As pointed out earlier, we already have:

- jpeg

- Png

- Webp

- HEIC

What’s the point of adding another encoder/decoder to the table when PNG and JPEG are still “good enough”?

PNG and JPEG aren’t good enough, to be honest. If you run a content heavy site, you can see something like a 30-70% decrease in bandwidth usage by using WebP.

Look, it’s all actually about re-encumbering image file formats back into corporate-controlled patented formats. If we would collectively just spend time, money, and development resources expanding and improving the PNG and GIF formats, which are no longer patent encumbered, we’d all live happily ever after.

JPEG-XL is in no way patent encumbered. Neither is AVIF. I don’t know what you’re talking about.

https://encode.su/threads/3863-RANS-Microsoft-wins-data-encoding-patent

https://www.theregister.com/2022/02/17/microsoft_ans_patent/

https://avifstudio.com/blogs/faq/avif-patents/

https://news.ycombinator.com/item?id=26910515

https://aomedia.org/press releases/the-alliance-for-open-media-statement/

If AVIF was not patent encumbered, AOMedia would not need to have a Patent License to allow open source use.

A majority of the most recent standards are effectively cabal-esque private groups of corporations that hold patents on the underlying technology, license those patents among each other as part of the standards org, and throw a license bone towards open source. That can all be undone by the patent holders at their whim.

There’s no need to create a standard format that’s patent encumbered, especially if they don’t ever intend to monetize that patent. It’s all about maintaining control of intellectual property, and especially about who is allowed to profit from the standards, and when.

Royalty-free blanket patent licensing is compatible with Free Software and should be considered the same as being unpatented, even if it’s conditioned on a grant of reciprocity. It’s only when patent holders start demanding money (or worse, withholding licenses altogether) that it becomes a problem.

It’s royalty-free and has an open-source implementation; what more could you want?

No patent encumbrance. That was the entire point.

Clawing control of patent infected media standards is far more important for a healthy open internet built on open standards that is not subject to the whims and controls of capital investment groups eating up companies to exert control of the entire technology standards pipeline.

Why was it not included? AVIF creator influence bias. It’s a good story.

Google’s handling of jxl makes a lot more sense after the jpegli announcement. It’s apparent now that they declined to support jxl in favor of cloning many of jxl’s features in a format they control.

Why wasn’t PNG enough to replace jpeg?

PNG is a lossless format, and hence results in fairly large file sizes compared to lossy formats, so they’re solving different issues.

JPEG XL is capable of being either lossy or lossless, so it sort of replaces both JPEG and PNG.

not enough elitists

And JPEG2000 is what’s used in Digital Cinema Package (DCP) - that’s the file format used to distribute feature films. That’s not going away soon.

Does jpegxl work on firefox?

Only in Nightly and not by default (you need to enable it).


Without jpeg compression artifacts how the hell are we supposed to know which memes are fresh and which memes are vintage???

I still think it’s bullshit that 20-year-old photos now look the same as 20-second-old photos. Young people out there with baby pictures that look like they were taken yesterday.

We need a file format that degrades into black and white over time.

The tradition has normally been to just have newer image formats and image-generation hardware and software that are more capable or higher fidelity so that the old stuff starts to look old in comparison to the new stuff.

What should be done is that every time a new format comes out all images in existence are re-encoded in that format. Hopefully that will cause artifacts, clearing everything up in terms of image age.

Slight tangent, but I’ve recently been pulling old home videos off of MiniDV tapes, and I’ve found that the ffmpeg dv1 decoder can correct several tape issues when re-encoding from dv1 to essentially any modern codec. So I’ve got like 3GB video files that look incredibly poor, but then I re-encode them into h264 files that look better than the original. It’s baffling how well that works.

Wait, that’s illegal

lols

Could probably pull that off with meta information to determine the age of the photo.

lol nice one. It’s shocking how far we’ve come in quality.

Pretty much sums it up. JPEGXL could’ve been the standard by now if Google would stop kneecapping it in favor of its own tech, now we’re stuck in an awkward position where neither of them are getting as much traction because nobody can decide on which to focus on.

Also, while Safari does support AVIF, there are some features it doesn’t support like moving images, so we have to wait on that too… AVIF isn’t bad, but it doesn’t matter if it takes another 5+ years to get global support for a new image format…

People are quick to blame Google for the slow uptake of JPEG XL, but I don’t think that can be the whole story. Lots of other vendors, including non-commercial free software projects, have also been slow to support it. GIMP, for example, still only supports it via a plugin.

But if it’s not just a matter of Google being assholes, what’s the actual issue with Jpeg XL uptake? No clue, does anyone know?

GIMP supports JPEG XL natively in 3.0 development versions. If I remember correctly GIMP 2.10 was released before JPEG-XL was ready, so I think that’s the reason. They could have added support in smaller update though, which was the case with AVIF.

Lots of other vendors, including non-commercial free software projects, have also been slow to support it.

checks

It doesn’t look like the Lemmy Web UI supports JPEG XL uploads, for one.

Imgur doesn’t let me upload it either; I have to use general file hosts.

The issue with jpegxl is that in reality jpeg is fine for 99% of images on the internet.

If you need lossless, you can have PNG.

“But JPEG XL can save 0.18 MB in compression!” Shut up, nerd, everyone has broadband, it doesn’t matter.

What a dumb comment.

All of that adds up when you have thousands or tens of thousands of images. Or even when you’re just loading a very media-heavy website.

The compression used by JPEG-XL is very, very good. As is the decoding/encoding performance, both in single core and in multi-core applications.

It’s royalty-free. Supports animation. Supports transparency. Supports layers. Supports HDR. Supports a bit depth of up to 32 bits per channel compared to, what, 8?

JPEG-XL is what we should be striving for.


shut up nerd

He said, on Lemmy. On the Technology community. On a submission about image formats.

If nerdiness, or discussion about image formats or other tech bothers you, why are you even here?

Moving on from that…

There’s storage improvements. There’s server side considerations for storage, processing, and energy efficiency. There’s poor mobile data connections to contend with.

There’s better compression (I’m guessing you don’t like artefacts all over images, or other oddities stemming from bad compression?)

There’s still HDR support. There’s still the support for animations. There’s still support for transparency. There’s still support for layers.

Imagine being upset about the prospect of there being a vastly better image standard. Are you that desperate to be contrarian? Are you that desperate for attention?

You are totally right, AND he’s making a valid point with his sarcastic joke of “shut up, nerd!”

“Nobody cares” means companies don’t want to spend money to incorporate it if there’s no demand from consumers.

Most consumers have no idea what a jpeg even is.

It won’t be until Apple or someone brands it as an iPeg and claims you have a smol pp if your device doesn’t have it that folks will notice.

I’m reminded of telling folks about SHOUTcast and nobody cared. Then Apple came out with podcasts and everyone was suddenly excited about 8-year-old streaming tech.

Yet for some reason, browsers started supporting other formats like WebP, even though even fewer consumers wanted them. This makes complete sense when looking at it from the perspective “the companies try to save money and increase market share without caring about the consumer”. How do you explain it from yours?

Excellent point on the webp.

I’m guessing that, it being Google’s baby, they integrated it into Chromium.

He said, on Lemmy. On the Technology community. On a submission about image formats.

I know my audience.

I’m not upset there’s a new better stronger faster harder standard, I’m just telling you why nobody cares about your jpeg2000 v2

Whatever you say. After all, you must be right. You’re a contrarian on the internet. You’re quirky and different. You’re not like the other girls.

That 0.18 MB accumulates quickly on the server’s side if you have 10,000 people trying to access that image at the same time. And there are millions, if not billions, of images on the net. Just because we have the resources doesn’t mean we should squander them… that’s how you end up with chat apps taking multiple gigabytes of RAM.
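The arithmetic behind that, as a hedged Python sketch: the 0.18 MB and 10,000-request figures come from the comments above, and the one-million-request line is an illustrative extra. It assumes every request downloads the full file with no browser or CDN caching, so it is an upper bound for a single image, and it uses decimal units (1 GB = 1000 MB). `saved_transfer_gb` is a hypothetical name.

```python
def saved_transfer_gb(saved_mb_per_image: float, requests: int) -> float:
    """Data saved, in decimal GB, if every request downloads the smaller file.

    Assumes no caching, so this is an upper bound for one image.
    """
    # Round to hide floating-point noise such as 1.7999999999999998.
    return round(saved_mb_per_image * requests / 1000, 3)


print(saved_transfer_gb(0.18, 10_000))     # 1.8
print(saved_transfer_gb(0.18, 1_000_000))  # 180.0
```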

“I’m very small minded and am not important or smart enough to have ever worked on a large-scale project in my life, but I will assume my lack of experience has earned me a sense of authority” -Redisdead

Meanwhile, AVIF saves about 2/3 on my manga downloads (usually JPG): 10 GB down to 3 GB. Btw, most comic book apps support AVIF.

10 whole GB of storage? I understand now why you need such an ultimate compression technology, this is an insurmountable amount of data in these harrowing times where you can buy a flash card the size of a fingernail that can hold that amount about 25 times.

That was an example; it’s about a 100-chapter manga. Stop being a jerk.

Check how large your photos library is on your computer. Now wouldn’t it be nice if it was 40% smaller?

I have several TBs of storage. I don’t remember the last time I paid attention to it.

I don’t even use jpeg for it. I have all the raw pics from my DSLR and lossless PNGs for stuff I edited.

It’s quite literally a non issue. Storage is cheap af.

It’s competing with WebP, and it helps prevent JPG artifacts when downloaded multiple times.

prevent jpg artifacts when downloaded multiple times

That’s not how downloading works

Slightly higher in this thread you spout off complaining about pedantry, and here you are, being even more pedantic?

If you download and upload repeatedly, you potentially lose some data each time, which is how we got jpeg memes.

that happens when the sites you upload it to re-encode the image
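A toy model of that point, not of JPEG itself: treat lossy encoding as rounding each sample to a multiple of a quantization step (real JPEG quantizes DCT coefficients, which this ignores). Re-encoding with identical settings changes nothing further, but a re-encode with different settings, standing in for a site that recompresses uploads at its own quality level, can push values further from the original. All names and numbers are illustrative assumptions.

```python
def quantize(samples: list[int], step: int) -> list[int]:
    """Toy lossy encode+decode: snap each sample to a multiple of step."""
    return [round(x / step) * step for x in samples]


def reencode_chain(samples: list[int], steps: list[int]) -> list[int]:
    """Apply one toy re-encode per hypothetical upload site."""
    for step in steps:
        samples = quantize(samples, step)
    return samples


def max_error(a: list[int], b: list[int]) -> int:
    return max(abs(x - y) for x, y in zip(a, b))


original = [0, 13, 27, 42, 58, 71, 86, 99]
same = reencode_chain(original, [10, 10, 10])  # same settings every time
mixed = reencode_chain(original, [10, 7])      # second site uses other settings
print(max_error(original, same))   # 4
print(max_error(original, mixed))  # 6
```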

Nobody remember JPEG2000 ?!?

“In the year two thouuusaaaaaannd, in the year two thouuusaaaaaannd”

Jpeg2000 was patent encumbered. They waived the patents but that wasn’t guaranteed going forward.

Yeah but it wasn’t free, right?

AnD tHaTs A bAd ThInG

😒

Wasn’t there a licensing issue with JPEG XL for using some sort of Microsoft algorithm?

No, there aren’t any licensing issues with JPEG-XL.

Then it’s absolutely soul-crushing to see Google abuse its market dominance like that…

Makes sense why AVIF isn’t supported in Windows. Likely a corrupt Microsoft backroom deal with proprietary algorithm makers.

there’s AVIF and AV1 extensions by Microsoft itself on their store afaik…

Oh cool, I didn’t even know that. Thanks for the heads up. I wonder why the codec isn’t installed by default, though.

bring back bmp and tiff cowards

Oof, BMP. I remember those days…

PCX or nothin’

I liked TARGA, personally…

My game engine has TARGA as an option, and will be super important once I finish my own data package format using a dictionary-based zstd algorithm.

I’ll just revert to .IFF

There were 14 competing standards.

There are now 13 competing standards.

And that’s fine by me.

as a .png elitist i see this as a good thing.

As an EXR elitist I deeply resent Google’s blatant sabotage of JXL.

(And also laugh at the PNG elitists, as is custom.)

WHY IS NO ONE STANDING UP FOR GIF?!

I don’t know, because it sucks and has zero benefits over PNG?

Probably the least relevant benefit of APNG over GIF: Unlike GIF, I can even pronounce APNG with a soft G and not feel gross about it. (Like I’m betraying the peanut butter brand and my entire moral framework at the same time, y’know?)

DON’T USE A SOFT G ON EITHER, YOU MANIAC

I mean, I’m just pronouncing the letters aloud: A-P-N-“Gee.”

Especially after animated pngs were developed but nobody wanted to support those so we’re stuck with gifs that are actually mp4s or webms.

Strictly-speaking, last time I took a serious look at this, which was quite some years back, it was possible to make very small GIFs that were smaller than very small PNGs.

That used to be more significant back when “web bugs” – one-pixel, transparent images – were a popular mechanism to try to track users. I don’t know if that’s still a popular tactic these days.

It is, they are called canvas now. I recommend Canvas Blocker addon (doesn’t block them but falsifies them).

Is chrome modular enough to make it feasible for Edge and other Chrome based browsers to add support for jpegxl themselves?

TLDR how is that bad?

There should be a TL;DW in the comments here.