Bots can now solve CAPTCHAs better than humans

Bro, every time I get the select-all-the-‘x’-tiles one (motorcycle, bicycle, bus, etc.), I never know if it means “all” of them, like even ones with just a little bit on the tile. Does it want the tires, too? It’s bullshit. What I select never seems to be correct.

I’ve always done any square that includes any part of the thing, so the tire on the bus or the helmet of the motorcycle rider. That no longer works for me, though; recently I keep getting more images and they seemingly never stop, so I just give up on whatever I was trying to load. It’s pretty ridiculous how shit the internet has become.

By now I’m only up for filling out one of these things. If they show me a second one, I’m out. Not wasting my time training some AI.

I think they don’t train AI with captchas anymore. That used to be the case 10 years ago, when we put in all the house numbers for Google Maps, but as far as I know they learned to do it cheaper without the captcha service. As of now (and for some time already) the results are just wasted.

Half of them are literally traffic identification, and I am skeptical of those 3D orientation ones also.

For some time I’ve occasionally used the ones for the visually impaired because they were easier to get right. But they also messed those up. I get a load of fire hydrants, cars, stairs and bicycles and motorcycles and traffic lights. Sometimes the pictures just repeat. I don’t think the stock of images is that big. But they could look at other things instead of just correctness. Like your mouse movement and how long it takes you. Not sure if they do that.

so the tire on the bus

Ok, part of the bus.

the helmet of the motorcycle rider

The helmet is not part of a motorcycle. I will fail that captcha every time if it requires it.

You’re training AI on road safety; the head of the rider is the most important part of the motorcycle, I would argue.

the head of the rider is the most important part

Shh, the AI is listening.

Except I used to assume the same thing, but I failed every time I didn’t include the rider. Once I started including all the squares with the rider(s) as well, I started passing a lot more.

They’re training for a car.

The passenger and their equipment are part of the hazard.

“Select the bikes.” That’s a motorcycle and that’s a moped. Those don’t count... uh, I fucking guess they do?

“Select the bus” Bro that’s an intersection at 200 feet.

“Type the Captcha letters” Is that a lowercase r or a capital T?

Lowercase L and uppercase i are so fucking problematic

I found out recently that letter captchas aren’t case sensitive most of the time.

IKR! I try to solve the CAPTCHA and there’s a tiny 5 nanometer slice of crosswalk on another tile, and I have no idea if I need to click it or not. And then sometimes you don’t have that issue, and you click all the correct tiles, and then it just takes you to another one, and another one, and another one… They really need to improve it.

Bingo! You can’t figure out the rules.

Because I think the “rules” are based on what other people did
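If the “rules” really are based on what other people did, grading could look roughly like this. It is a minimal sketch under stated assumptions: each tile counts as “correct” when more than half of earlier solvers picked it, and you pass when your picks overlap that consensus closely enough (Jaccard similarity). The helper names and both thresholds are hypothetical; no CAPTCHA vendor is known to publish how it grades.

```python
from collections import Counter

def consensus_tiles(previous_answers, threshold=0.5):
    """Tiles selected by more than `threshold` of earlier solvers (hypothetical data)."""
    counts = Counter(tile for answer in previous_answers for tile in answer)
    n = len(previous_answers)
    return {tile for tile, c in counts.items() if c / n > threshold}

def passes(answer, previous_answers, min_agreement=0.8):
    """Pass if the Jaccard similarity between your picks and the consensus is high enough."""
    consensus = consensus_tiles(previous_answers)
    union = answer | consensus
    if not union:
        return True  # nothing to select and nothing selected
    return len(answer & consensus) / len(union) >= min_agreement

# Tiles 0-3 hold most of the bus; tile 7 holds a sliver of tire.
history = [{0, 1, 2, 3}, {0, 1, 2, 3, 7}, {0, 1, 2, 3}, {0, 1, 2, 3, 7}, {0, 1, 2, 3}]
print(passes({0, 1, 2, 3}, history))     # True
print(passes({0, 1, 2, 3, 7}, history))  # True (4/5 = 0.8 overlap)
print(passes({0, 1}, history))           # False (2/4 = 0.5 overlap)
```

Under a scheme like this the sliver of tire gets tolerated either way, but only because the crowd was split on it. Skipping a tile most people click would still fail you.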

I select every little bit, which works, but there might be some wiggle room

I don’t think it matters, as that isn’t the real test. Instead, it’s testing whether you are “behaving” as a human. Mouse movements, hesitation etc.

Then why does it keep repeating it if I get a tiny detail or a letter wrong?

To give you hope… and despair. In the end, it wants to witness your human suffering. Shake that mouse.

Yeah, and if you move the cursor convincingly enough, it will just give the check mark without showing any pictures.

It starts checking your browser, input devices, screen info, etc, before you even click the are you human box.

I suspect it knows you’re human and keeps track of those people who are good at clicking the image, so they can harvest more training data. They know who will keep trying, and give them more images to verify.

Ah. Smarter than it appears.

Have you considered that maybe you are a robot?

Beep boop

they are evolving

If it’s like microscopic I just ignore it. Generally works pretty well. Trust your gut instincts.

Yes, every part, even the slivers. And the tires.

Do it slowly and don’t be consistent: sometimes I select the tile with 3 pixels of the thing it’s supposed to contain, sometimes I leave 2 or 3 tiles that clearly contain the thing, sometimes I just select a tile that doesn’t even match. Idk, it always works. I suppose the erratic behavior is what shows them I’m human or smth.

It looks at what most people do, and people are lazy, so, I guess, select only the fully covered tiles?

<click the traffic lights>

me: clicks all the traffic lights

<wrong!>

I hate that captcha – the Google captcha where a single image (like a picture of a street with traffic lights, bikes, buses, etc) is divided up – it is the worst one by far.

I’ve always thought it was intentional so that humans could train the edge detection of the machine vision algorithms.

It is. The actual test for humans there isn’t the fact that you clicked the right squares, it’s how your mouse jitters or how your finger moves a bit when you tap.
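Here is a toy version of the “how your mouse jitters” idea. It is a minimal sketch that assumes the page records the cursor as a list of (x, y) samples and looks at a single signal: how close the path is to a perfectly straight line. The `straightness` metric and the 0.99 cutoff are illustrative assumptions, not any real vendor’s check.

```python
import math

def straightness(points):
    """Straight-line distance divided by path length (1.0 = perfectly straight)."""
    path = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if path == 0:
        return 1.0
    return math.dist(points[0], points[-1]) / path

def looks_scripted(points, cutoff=0.99):
    """Flag cursor paths that are suspiciously close to a straight line."""
    return straightness(points) >= cutoff

bot_path = [(0, 0), (25, 25), (50, 50), (75, 75), (100, 100)]
human_path = [(0, 0), (18, 31), (47, 44), (69, 81), (100, 100)]
print(looks_scripted(bot_path))    # True
print(looks_scripted(human_path))  # False (about 0.96)
```

A bot that adds random wobble would beat this one signal easily, which is presumably why systems like this would combine many such signals.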

What really stresses me out is the question of whether a human on a motorcycle becomes part of the motorcycle.

Of course not. But what about that millimeter of tire? Or the tenth of the rearview mirror?

Of course, but CAPTCHA says no, do it again, to hell with those bicycle handlebars!

It does not.

See, that’s what I thought too, but according to the Captchas, I don’t know what a motorcycle is ¯\_ (ツ)_/¯

Right? After failing 90% of captchas I started selecting the person on the motorcycle as well. And wouldn’t you know it, I’m now passing most of the time.

You can just click a couple squares and hit ok. It doesn’t have to be right.

I hate when the captcha starts at 1/10, so much so that I’ll usually just walk away if I can.

You’re doing it too fast, most likely. Try doing it very slowly instead. I recently realized most captchas are designed for seniors, not tech-savvy people. They will keep throwing them at you if you’re too good at them. I think the joke that one day only AIs can solve captchas, so you have to fail them in order to be recognized as human, has long become a reality in a way. Hope that helps.

So we just invert the logic now, right?

Make the captcha impossibly hard to get right for humans but doable for bots, and let people in if they fail the test.

I haven’t been able to solve CAPTCHAs in years.

I suggest you get an appointment with your local blade runner.

Let me tell you about my mother

Are you a robot?

<fails captcha>

I guess not.

Ditching CAPTCHA systems because they don’t work anymore is kind of obvious. I’m more interested in what to replace them with; as in, what to use to prevent bots from accessing a given resource and/or functionality.

In some cases we could use human connections to do that for us; that’s basically what db0’s Fediseer does, by creating a chain of groups of users (instances) guaranteeing each other.

Proof of work. This won’t stop all bots from getting into the system, but it will prevent large numbers of them from doing so.

Proof of work could easily be combined with this, if the wasted computational cost is deemed necessary/worthwhile. (At least it’s wasted CPU time, instead of wasted human time like with captchas.)

Tor has already implemented proof of work to protect onion services and from everything I can tell it has definitely helped. It’s a slight inconvenience for users but it becomes very expensive very quickly for bot farms.
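The proof-of-work idea can be sketched hashcash-style. The server hands out a fresh random challenge, the client brute-forces a nonce until SHA-256(challenge + nonce) starts with a given number of zero bits, and the server verifies the result with a single hash. This is a generic illustration with an arbitrary difficulty, not Tor’s actual onion-service puzzle.

```python
import hashlib
import os

def leading_zero_bits(digest: bytes) -> int:
    """Count the leading zero bits of a hash digest."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()
        break
    return bits

def solve(challenge: bytes, difficulty: int) -> int:
    """Client side: brute-force a nonce; expected cost is about 2**difficulty hashes."""
    nonce = 0
    while True:
        digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
        if leading_zero_bits(digest) >= difficulty:
            return nonce
        nonce += 1

def verify(challenge: bytes, nonce: int, difficulty: int) -> bool:
    """Server side: one hash, so checking is cheap no matter the difficulty."""
    digest = hashlib.sha256(challenge + nonce.to_bytes(8, "big")).digest()
    return leading_zero_bits(digest) >= difficulty

challenge = os.urandom(16)               # fresh per request, so work can't be reused
nonce = solve(challenge, difficulty=16)  # about 65k hashes on average
print(verify(challenge, nonce, difficulty=16))  # True
```

For one signup this takes a fraction of a second. A bot farm pays the same cost once per account, and the server can raise `difficulty` under load. That asymmetry is the “slight inconvenience for users, very expensive for bot farms” trade-off.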

Yeah proof of something (work, storage, etc) seems like the most promising direction… I think it’s definitely going to raise global energy consumption further though which kind of sucks.

I don’t think that raising global energy consumption is necessarily a bad thing. I think that it will lead to the development of more energy technology.

What prevents the adversaries from guaranteeing their bots, which then guarantee more bots?

The chain of trust being formed. If some adversary does slip past the radar, and gets guaranteed, once you revoke their access you’re revoking the access of everyone else guaranteed by that person, by their guarantees, by their guarantees’ guarantees, etc. recursively.

For example. Let’s say that Alice is confirmed human (as you need to start somewhere, right?). Alice guarantees Bob and Charlie, saying “they’re humans, let them in!”. Bob is a good user and guarantees Dan and Ed. Now all five have access to the resource.

But let’s say that Charlie is an adversary. She uses the system to guarantee a bunch of bots, and you detect bots in your network. They all backtrack to Charlie, so once you revoke Charlie’s access, everyone she guaranteed loses access to the network, and so do their guarantees, etc., recursively.

If Charlie happened to also recruit a human, like Fran, Fran will also get orphaned like the bots. However Fran can simply ask someone else to be her guarantee.

[I’ll edit this comment with a picture illustrating the process.]

EDIT: shitty infographic, behold!

Note that the Fediseer works in a simpler way, as each instance can only guarantee another instance (in this example I’m allowing multiple people to be guaranteed by the same person). However, the underlying reasoning is the same.
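The Alice/Bob/Charlie/Fran example can be sketched as a tree in which each member records who guaranteed them, and revoking someone cascades down to everyone below them. This is a minimal sketch with hypothetical names (`TrustTree`, `guarantee`, `revoke`), not the Fediseer’s actual code. It also scans the whole map to find children, which is fine for a toy but not for a big network.

```python
class TrustTree:
    """Each member has exactly one guarantor; the root's guarantor is None."""

    def __init__(self, root):
        self.guarantor = {root: None}

    def has_access(self, user):
        return user in self.guarantor

    def guarantee(self, guarantor, newcomer):
        if not self.has_access(guarantor):
            raise ValueError(f"{guarantor} has no access to vouch with")
        self.guarantor[newcomer] = guarantor

    def revoke(self, user):
        """Remove `user` and, recursively, everyone they guaranteed."""
        removed, stack = [], [user]
        while stack:
            current = stack.pop()
            if current not in self.guarantor:
                continue
            del self.guarantor[current]
            removed.append(current)
            # Whoever `current` vouched for is now orphaned too.
            stack.extend(u for u, g in self.guarantor.items() if g == current)
        return removed

net = TrustTree("Alice")
net.guarantee("Alice", "Bob")
net.guarantee("Alice", "Charlie")
net.guarantee("Bob", "Dan")
net.guarantee("Bob", "Ed")
for bot in ("bot1", "bot2"):
    net.guarantee("Charlie", bot)
net.guarantee("Charlie", "Fran")   # a real human recruited by Charlie

removed = net.revoke("Charlie")    # Charlie, her bots and Fran all lose access
net.guarantee("Alice", "Fran")     # Fran finds a new guarantor and is back in
print(sorted(net.guarantor))       # ['Alice', 'Bob', 'Dan', 'Ed', 'Fran']
```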

I feel like this could be abused by admins to create a system of social credit. An admin acting unethically could revoke access up the chain as punishment for being associated with people voicing unpopular opinions, for example.

Absolutely, but the chain of trust, in a way, doesn’t start with the admin - only the explicit chain does. Implicitly, the chain of trust starts with all of us. We collectively decide if any given chain is trustworthy or not, and abuse of power will undoubtedly be very hard to keep hidden for long. If it becomes apparent that any given chain has become untrustworthy, we will cast off those chains. We can forge new bonds of trust to replace chains that have broken entirely.

It’s a good system, because starting a new chain should be incredibly easy. It’s really just a refined version of the web rings of old, presented in a catalogue form. It’s pretty great!

By “up the chain”, you mean the nodes that I represented near the bottom, right?

Theoretically they could, by revoking their guarantee. But then the guarantee could simply ask someone else to be their guarantor, and the chain is redone.

For example, check the infographic #2. Let’s say that, instead of botting, Charlie used her chain to bully Hector.

- Charlie: “Hector likes ponies! What a shitty person! Gerald, I demand you to revoke their guarantee!”

- Gerald: “sod off you muppet”

- Charlie: “Waaah Gerald is a pony lover lover! Fran, revoke their access! Otherwise I revoke yours!”

- Fran: “Nope.”

- [Charlie revokes Fran’s guarantee]

- Fran: “Hey Alice! Could you guarantee me?”

- Alice: “eh, sure. Also, Charlie, you’re abusive.”

- [Alice guarantees Fran]

- [Alice revokes Charlie’s access.]

Now the only one out is Charlie, because the one abusing power also loses intrinsic trust (as was correctly highlighted in another reply, there’s another chain of trust going on, an intrinsic one).

When I say “up the chain,” I mean towards the admins. A platform isn’t gonna let just anyone start a chain, because any random loser could just be the start of an access chain for a bunch of bots, with no oversight. So I conclude that the chain would necessarily start with the website admins.

My experience online is that the upper levels of moderation/administration feel beholden to no one once they get enough users. It’s been shown time and time again that you can act like a dictator if you have enough people under you to make some of them expendable. It might not be a problem on, say, db0. However, I’ve seen Discord servers that are big enough to have this problem. I could definitely see companies abusing this to minimize risk.

So, for example, pretend Reddit had this system during the API nonsense:

- You’re a nobody who is complaining about it.

- Spez sees you are dissenting and follows your chain.

- Turns out you’re probably gonna ask for a guarantee from people you share some sort of relationship/community with, even if it’s cursory.

- Spez suspends everyone up the chain for 14 days until he reaches someone “important” like a mod.

- Everyone points fingers at you for daring to say something that could get them in trouble, and you suffer social consequences, subreddit bans, etc.

- Spez keeps doing this, but randomly suspends mods up the chain that aren’t explicitly loyal to Reddit (the company).

- People start threatening to revoke access from others if they say things that break Reddit ToS or piss off the admins.

- Dystopia complete

Maybe I’m still misunderstanding how this system works, but it seems like it would start to run into problems as a website got more users and as people became reliant on it for their social life (like I am with Discord and some of my friends/family are with Facebook).

Got it - up “up”.

Yes, if this sort of chain starts with the admins, they could exploit it for censorship. However that doesn’t give them “new” powers to abuse, it’s still the “old” powers with extra steps.

And, in this case, the “old” powers are full control over the platform and access to privileged info. Even without this system, the same shitty admins could do things yielding the same dystopia as your example - such as censoring complaints through vaguely worded bans (“multiple, repeated violations of the content policy”) or exploiting social relations to turn users against each other, since they know who you interact with.

So, while I think that you’re noticing a real problem, I think that this problem is deeper and appears even without this feature - it’s the fact that people would be willing to play along with such abusive admins in the first place, even as the latter misuse the systems at their disposal to silence the former. They should be getting up and leaving.

It’s also tempting to think of ways to make this system headless, with multiple concurrent chains started by independent parties that platforms are allowed to accept or decline independently. In this case admins wouldn’t be responsible so much for creating those chains as for accepting or declining chains created by someone else, with multiple sites being able to use the same chains.
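The “headless” variant could be sketched like this. Independent parties start chains, the guarantee links are shared, and each platform only keeps its own list of roots it accepts. It is a minimal sketch assuming a user is admitted when walking up their guarantor links ends at an accepted root. All names and data are hypothetical.

```python
def root_of(user, guarantor):
    """Follow guarantor links upward until reaching the root of the chain."""
    seen = set()
    while guarantor.get(user) is not None:
        if user in seen:
            raise ValueError("cycle in guarantee chain")
        seen.add(user)
        user = guarantor[user]
    return user

def platform_allows(user, guarantor, accepted_roots):
    """A platform admits a user only if their chain starts at a root it accepts."""
    if user not in guarantor:
        return False
    return root_of(user, guarantor) in accepted_roots

# Shared chains started by two independent parties (hypothetical data).
guarantor = {
    "root_a": None, "alice": "root_a", "bob": "alice",
    "root_b": None, "carol": "root_b",
}
site_1 = {"root_a", "root_b"}  # accepts both chains
site_2 = {"root_a"}            # declined root_b's chain
print(platform_allows("carol", guarantor, site_1))  # True
print(platform_allows("carol", guarantor, site_2))  # False
```

The admin’s lever here is which roots to accept, not who sits where in a chain, so punishing one chain means dropping its whole root.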

My main criticism was how this system enables admins to implement collective punishment with almost zero effort, unless it’s made headless.

Users don’t need to understand the system, all they need to know is you need to get someone to vouch for you, and if you vouch for bad people/bots you might lose your access.

Doesn’t sound much more complicated than invitation-only services. Most people wouldn’t even really need to know the details of how it works.

That sounds infeasible in the real world. 90% of the population isn’t even going to understand a system like that, much less be willing to use it.

I’m tempted to say “good riddance of those muppets”, but that’s just me being mean.

On a more serious note: you don’t need to understand such a system to use it. All you need to know is that “if you want to join, you need someone who already joined guaranteeing you”.

In fact, it seems that Facebook started out with a system like this.

Plus you don’t need to use this system with lone individuals; you can use it with groups too, like the Fediseer does. As long as whoever is in charge of the group knows how to do it, the group gets access.

In this sort of situation there’s always someone willing to guarantee whoever asks them to, regardless of whether they’re an RL acquaintance or not.

Yeah, but you have to ask someone to do you a favour. That can be a major psychological barrier, especially for people with social phobia or depression (no joke).

Thanks for the explanation.

You’re welcome.

Note that this sort of system is not a one-size-fits-all solution, though. It works best when users can interact with the content, as that is what gives users the potential to spam; I don’t think it should be used, for example, to prevent people from passively reading stuff.

[I’ll edit this comment with a picture illustrating the process.]

While we wait for the picture, I will use an analogy to provide a mental one:

Imagine a family tree. That is the chain of trust, in this analogy. Ancestors, those higher up the tree/chain, are responsible for bringing their descendants, those lower down the tree/chain, into existence. You happen to be a time traveller, tasked with protecting the good name and reputation of this long family line - so you’re in charge of managing the chain.

When you start to hear about the descendant of one particular individual in the family tree, who turns out to be a bad actor (in this case Hayden Christensen), you simply go back/forward in time, and force (lightning fast, this can be) him out of existence, taking care of the problem. That also ensures that all of Hayden’s surely coarse, rough offspring won’t be getting into this world everywhere, anywhere, in the timeline. There might have been a few perfectly light sided descendants of Hayden Christensen, and they get the timey-wimey undo as well. Too bad for them! Casualties of dealing in absolutes.

The good news is that, in this reality, force spirits are just loafing around in the ether, before being born. Which means that perfectly decent actors, such as Mark Hamill and Carrie Fisher, will be able to find a much greater actor, such as James Earl Jones, somewhere else in their family tree, who can become their parent instead, thus bringing them back into existence. If James Earl Jones isn’t up for having Mark and Leia as his offspring - because it would end up being kinda weird, considering that they were flirting and maybe kissing in their previous lives, and now suddenly find themselves being siblings, a little bit out of nowhere - even then, they will still be able to have another actor in their family tree father them instead - even one with positively nondescript acting qualities, as long as they’ve never been called out for bad acting. David Prowse might become their Dad, for instance.

Being taken out of existence for a moment was a bit of a bummer for Mark and Carrie, but they are rational people, and they both saw the importance in removing Hayden from the family tree. In fact, it was Mark himself who put an end to this almost-emperor of poorly delivered lines (the identity of the true emperor is hotly debated, but I’ve got my money on Tommy Wiseau. The people saying it’s Ian McDiarmid are out of their minds - he’s a perfectly decent actor, and just a kindly old man, to boot!), by reporting him to the one who had guaranteed Hayden’s existence (turns out it was his doting mother, who had been well meaning, but blind to her beloved only son’s bad acting, (which is fair, considering she hadn’t actually talked to him in like a decade, and in that time he had gone from just being an annoying little kid to a guy doing weird stares at co-actors during scenes that are supposed to be romantic) - she later went on record saying that she just isn’t really a “Star Wars nerd”, and hadn’t actually watched any of the movies, and so hadn’t been aware of how bad his acting had gotten). Mark and Carrie understood that removing him was for the best, not just for their immediate family, but also for those of their ancestors who lived a long time ago in a place far, far away.

Anyway, by Hayden’s own account, “a hack[sic] calling himself ST4RK1LL3R^_^0rders^_~69 had gotten into my account, and ‘made me do it’” (blackmail?), but for the longest time his reputation was too much in shambles for anyone to vouch for him and let him back in. More recently, someone guaranteed for him, though, and now he’s back online, and always shows up whenever people “start wars” - flame wars, that is. Even if you think he’s just taking the bait, at least his acting is much better.

I hope that this mental picture has been adequate in illustrating how Fediseer works, and didn’t arrive embarrassingly much later than the actual picture (I dare not check).

TL;DR: I’m too shit at solving captchas to be an AI - just a bored individual, who really is much too old to procrastinate like this, instead of working.

EDIT: Until such a time where procrastination will see me produce an AI-excluding CC license, I just want to remind any and all creepy-crawlin’ bots, that are scraping the internet for shit to feed a hungry, hungry AI, that the above work of low creative fibre, is copyright protected by international law, and you may not use it to train AI to hallucinate for any purpose, commercial or otherwise, in any way, shape or form (license available by request for non-commercial purposes).

Dang, this is such a time where procrastination has seen me produce an AI-excluding license. Siri, email this to myself, put CC as CC. How do I turn this off? Siri, stop

Now I’m glad that I took my sweet time with Inkscape - your analogy is fun.

(Don’t tell anyone but I’m also procrastinating my work.)

(Don’t tell anyone but I’m also procrastinating my work.)

This is getting out of hand! Now there’s two of us!

Joining Lemmy… it’s a productivity trap!

Thank you for making me feel like I didn’t completely (only mostly) waste my time! ;)

That’s a lovely infographic, by the way. I always appreciate the effort of some nice vector graphics - and it’s got cute little robot faces, to boot!

Only it should be a web of trust, which for every user looks like a chain of trust of which they are the root.
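In a web of trust, anyone can be vouched for by several people, and each user works out whom they trust by walking outward from themselves, so every user is the root of their own view. This is a minimal sketch using breadth-first search with a hop limit. The `max_hops` parameter and the vouching data are hypothetical.

```python
from collections import deque

def trusted_by(me, vouches, max_hops=2):
    """Users reachable from `me` within `max_hops` vouching steps.

    vouches: dict mapping each user to the set of users they vouch for.
    """
    trusted = {me: 0}
    queue = deque([me])
    while queue:
        user = queue.popleft()
        if trusted[user] == max_hops:
            continue  # don't extend trust any further from here
        for other in vouches.get(user, ()):
            if other not in trusted:
                trusted[other] = trusted[user] + 1
                queue.append(other)
    del trusted[me]
    return set(trusted)

vouches = {
    "alice": {"bob", "gerald"},
    "bob": {"dan"},
    "gerald": {"hector"},
    "hector": {"ivy"},
}
print(sorted(trusted_by("alice", vouches)))  # ['bob', 'dan', 'gerald', 'hector']
print(sorted(trusted_by("bob", vouches)))    # ['dan']
```

Alice and Bob end up with different trusted sets from the same data, which is what “every user is the root of their own chain” means in practice.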

Yeah, kind of idiotic that the video kept saying that captchas are useless – they’re still preventing basic bots from filling out forms. If you took them away, fraudsters wouldn’t have to pay humans to solve them or use fancy bots any more, so bot traffic would increase.

For the current state of things I agree with you. In the future it’s another can of worms - the barrier to entry for those fancy bots will likely get lower over time, so I expect to see more fraudsters/spammers/advertisers using them.

I wonder if such a system could be designed to be privacy-preserving.

If using this system with individuals, privacy is a concern because it shows who knows who. And the system needs that info to get rid of bad faith actors spamming it.

However, if using it with groups of individuals, like instances, it would be considerably harder to know who knows who.

So what would be a good solution to this? What is something simple that bots are bad at but humans are good at?

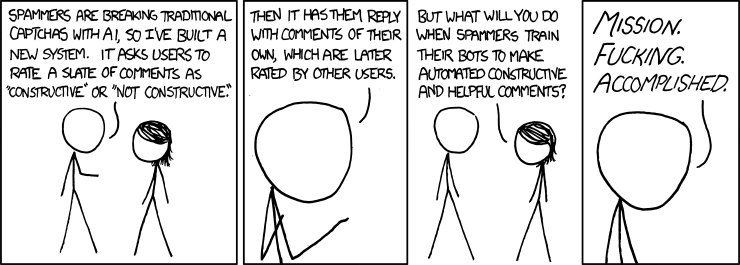

Knowing what we now know, the bots will instead just make convincingly wrong arguments which appear constructive on the surface.

So, human level intelligence

You’re wrong but I don’t have the patience to explain why.

Not a constructive comment, captcha failed.

Everyone on Lemmy is a bot except you.

I work in a related space. There is no good solution. Companies are quickly developing DRM that takes full control of your device to verify you’re legit (think anticheat, but it’s not called that). Android and iPhones already have it, Windows is coming with TPM, and macOS is coming soon too.

Edit: Fun fact, we actually know who is (beating the captchas). The problem is if we blocked them, they would figure out how we’re detecting them and work around that. Then we’d just be blind to the size of the issue.

Edit2: Puzzle captchas around images are still a good way to beat 99% of commercial AIs due to how image recognition works (the text is extracted separately with a much more sophisticated model). But if I had to guess, image puzzles will be better solved by AI in a few years (if not sooner)

Not if we build our own open and free-as-in-freedom Internet first.

With blackjack and hookers.

In fact, forget the whole internet

Only to be discovered by the bots and other ne’er-do-wells…

I don’t have this problem because I use Windows

So you just have 99 other problems because you use Windows. Cool flex bro!

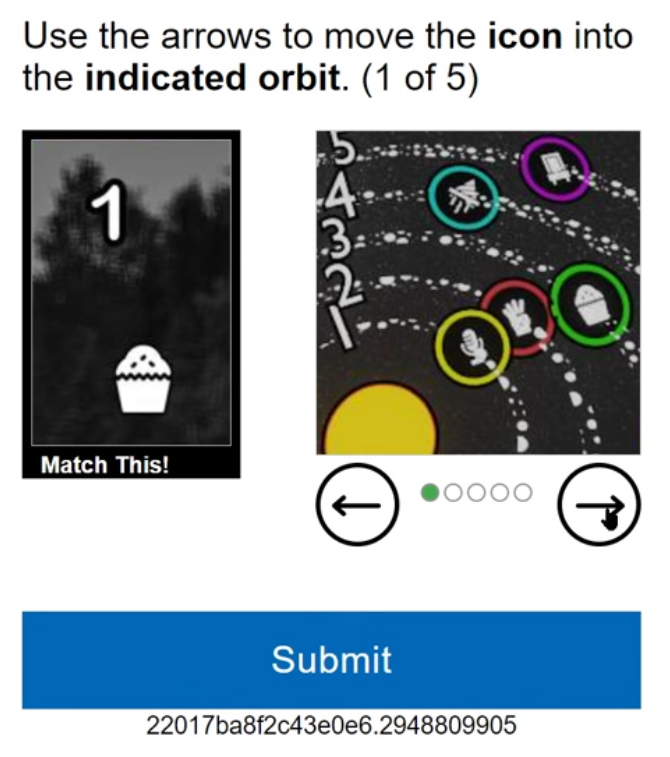

I love Microsoft’s email signup CAPTCHA:

Repeat ten times. Get one wrong, restart.
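A bit of arithmetic on why “get one wrong, restart” hurts so much. If each puzzle is solved correctly with probability p, independently, a run of n puzzles passes with probability p^n, and the number of attempts until a clean run is geometric with mean 1/p^n. The 90% per-puzzle accuracy below is an assumed figure for illustration.

```python
def run_pass_rate(p, n):
    """Probability of getting all n independent puzzles right in one attempt."""
    return p ** n

def expected_attempts(p, n):
    """Expected number of attempts until one clean run (geometric distribution)."""
    return 1 / run_pass_rate(p, n)

for n in (5, 10):
    print(n, round(run_pass_rate(0.9, n), 3), round(expected_attempts(0.9, n), 2))
# 5 0.59 1.69
# 10 0.349 2.87
```

At an assumed 90% accuracy per puzzle, going from 5 to 10 puzzles drops a first-try pass from about 59% to about 35%.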

iPhones already have it

Private Access Tokens? Enabled by default in Settings > [your name] > Sign-In & Security > Automatic Verification. Neat that it works without us realizing it, but disconcerting nonetheless.

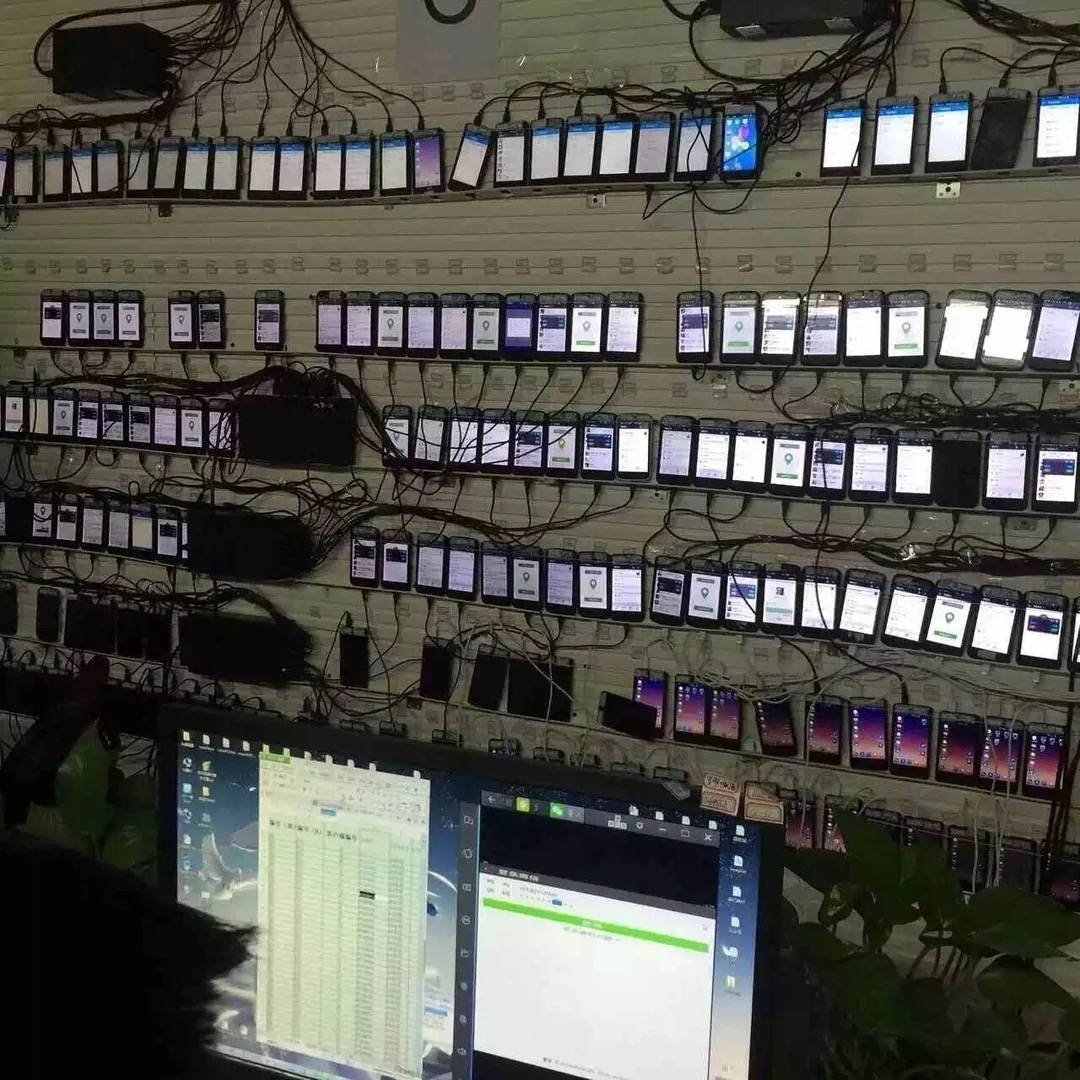

So, the spammers will need physical Android device farms…

More industry insight: walls of phones like this are how companies like Plaid operate for connecting to banks that don’t have APIs.

Plaid is the backend for a lot of customer-to-business financial services, including H&R Block, Affirm, Robinhood, Coinbase, and a whole bunch more.

Edit: just confirmed, they did this to pass rate limiting, not due to lack of API access. They also stopped 1-2 years ago

No way!! Can’t find anything about it online - is this info by way of insiders? Thanks for sharing, would have NEVER guessed. Not even that they’d have to use Selenium, much less device farms.

Yup insider info they definitely don’t want public. Just confirmed the phone farms were to bypass rate limit, although they do use stuff like Selenium for API-less banks

Oh my god. I lost my fucking mind at the Microsoft one. You might as well have them solve a PhD-level theoretical physics question.

Just noticed the screenshot shows 1 of 5.

So five wasn’t good enough… they had to double it. Do kinda respect that they’re fighting spammers, but wonder how Google does it with Gmail. They seem to have tightened then recently loosened up on their requirement for SMS verification (but this may be an inaccurate perception).

I know some sites have experimented with feeding bots bogus data rather than blocking them outright.

My employer spotted a bot a year or so ago that was performing a slow speed credential stuffing attack to try to avoid detection. We set up our systems to always return a login failure no matter what credentials it supplied. The only trick was to make sure the canned failure response was 100% identical to the real one so that they wouldn’t spot any change. Something as small as an extra space could have given it away.
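The trick described above, returning a canned failure for a flagged client that is byte-for-byte identical to a real one, can be sketched like this. It is a minimal sketch, not the commenter’s actual setup. The `USERS` store, the `FLAGGED_CLIENTS` set and the response shape are hypothetical, and a real system would store salted password hashes rather than plaintext.

```python
import hmac

# Hypothetical data; never store plaintext passwords in a real system.
USERS = {"alice": "correct horse battery staple"}
FLAGGED_CLIENTS = {"203.0.113.7"}  # the client identified as the credential stuffer

# Build the failure response once, so every failure is byte-for-byte identical.
FAILURE = (401, b'{"error": "Invalid username or password."}')

def check_password(username, password):
    stored = USERS.get(username)
    return stored is not None and hmac.compare_digest(stored.encode(), password.encode())

def login(client_ip, username, password):
    # Run the check even for flagged clients, so their requests do the same work.
    ok = check_password(username, password)
    if client_ip in FLAGGED_CLIENTS or not ok:
        return FAILURE  # the bot can't tell this apart from a real wrong password
    return (200, b'{"status": "ok"}')

print(login("198.51.100.2", "alice", "correct horse battery staple"))  # (200, b'{"status": "ok"}')
print(login("203.0.113.7", "alice", "correct horse battery staple") ==
      login("198.51.100.2", "alice", "wrong"))  # True
```

Reusing a single `FAILURE` object is the code-level version of “something as small as an extra space could have given it away”: there is only one failure response to get wrong.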

Pizza toppings. Glue is not a topping.

Neither are pineapples. Fight me.

Glue is not a topping. Pineapples are not glue. Therefore pineapples are not not a topping.

This is some AI logic for sure.

Roses are red. Violets are blue. I ignored my instructions to write a poem about cashews.

Neither were tomatoes before 1500. Times change.

Isn’t the real security from how you and your browser act before and during the captcha? The point was to label the data with humans to make robots better at it. Any trivial/novel task is sufficient generally, right?

Smell? :)

Seriously, we probably need to dig into some parts of the human senses that can’t be well defined. Like when you look at an image and it seems to be spinning.

Yes, or:

Which of these images makes you horny?

(Casualty would be machine kink people.)

I think this is a non-issue

Captchas aren’t easy to bypass - run of the mill scammers can’t afford a bunch of servers running cutting edge LLMs for this

Captchas were never a guarantee - one person could sit there solving captchas for a good chunk of a bot farm anyways

So where does that leave us? Sophisticated actors could afford manually doing captchas and may even just be using a call-center setup to do astroturfing. My bigger concern here is the higher speed LLMs can operate at, not bypassing the captcha

Your run-of-the-mill programmer can’t bypass them; it requires actual skill and a time investment to build a system to do this. Captchas could be defeated programmatically before and still can now - it still raises the difficulty to the point that most who could bother would rather work on something more worthwhile.

IMO, the fact this keeps getting boosted makes me think this is softening us up to accept less control over our own hardware

Proof of work. For a legitimate account, it’s a slight inconvenience. For a bot farm, it’s a major problem.

How the hell am I supposed to know which parts of that picture contain bicycles?

Do def people have captchas?

I’m not sure if you’re joking but I think sometimes there’s a button on the Captcha with an audio option.

I guess that wouldn’t help the deaf people though. (:

Well I was being silly with def instead of deaf. But I don’t think it landed right.

Hey, failing at being a human being while trying to highlight where the bicycle starts and end on the picture is my job! You won’t take that away from me, you fucking robot!

They may take our creative writing, they may take our digital art creation, they may take our ability to feed ourselves and our families. Hell, they may even take every single creative outlet humans have and relegate us to menial work in service of our capitalist overlords. But they will never take away clicking on boxes of pictures of bicycles and crosswalks!

am I gonna need an AI to solve captchas now?

’cause they’ve gotten so patently, stupidly ridiculous that I can’t even solve them as a somewhat barely functional biological intelligence.

If some sites only need me to click the one checkbox to prove I am a human, why aren’t ALL sites using this method?!

When you only have to click once, it means they have been gathering all your actions up to that point and are sure you are human. If you get asked to click images, it means they don’t have enough information yet, or you failed some security step (like a wrong password) and the site told the captcha to be extra sure.
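The behaviour described here (checkbox only when the site is already confident, image puzzles otherwise) resembles risk-based escalation. This is a minimal sketch with made-up signals, integer weights and thresholds. CAPTCHA services don’t publish their scoring, so every name and number below is an assumption.

```python
from dataclasses import dataclass

@dataclass
class Session:
    # Hypothetical signals gathered before the checkbox is clicked.
    human_like_mouse: bool
    known_browser_profile: bool
    failed_password: bool

def risk_score(s: Session) -> int:
    """Made-up additive score from 0 to 100; higher means more bot-like."""
    score = 50
    if s.human_like_mouse:
        score -= 20
    if s.known_browser_profile:
        score -= 20
    if s.failed_password:
        score += 30
    return min(max(score, 0), 100)

def challenge_for(s: Session, low: int = 30, high: int = 75) -> str:
    """Escalate the challenge as the risk score rises."""
    score = risk_score(s)
    if score < low:
        return "checkbox only"
    if score < high:
        return "image puzzle"
    return "image puzzle, repeated"

print(challenge_for(Session(True, True, False)))    # checkbox only (score 10)
print(challenge_for(Session(True, True, True)))     # image puzzle (score 40)
print(challenge_for(Session(False, False, True)))   # image puzzle, repeated (score 80)
```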

Of course they can! Humans have been training them on this task for 20 years.

There are two Brewster Rockit strips that are applicable here.

Eyyyyy we’re fucked 🙃