Kinda already happening with usbc

USB-C uses the DisplayPort protocol in many cases.

Yeah but in the context of physical connector that doesn’t matter, you can really run almost all protocols over almost any connector if you want to (no bitrate guarantees)

The year is 2045. My grandson runs up to me with a handful of black cords

“Poppy, you know computers, right? I need to connect my Jongo 64k display, but it has CFONG-K6 port, and my Pimble box only has a Holoweave port, have you got an adapter?”

Sadly I sift through the strange cords without a shred of recognition. Truly my time on this mortal coil is coming to a wrap

Don’t worry, maybe they misjudged the size of the asteroid and 2032 is it.

Cool, I won’t have to update my date formats.

Honestly, that’d be kinda sick. Imagine seeing the wall of fire, coming to cleanse the Earth once again. Hopefully the next ones to inherit it don’t fuck it up as bad as we did.

Remember DVI?

Hell, remember dot-matrix printer terminals?

VGA and DVI honestly were both killed off way too soon. Both will perfectly drive a 1080p 60fps display and honestly the only reason to use HDMI or Displayport with such a display is if that matches your graphics output

The biggest shame is that DVI didn’t take off with its dual monitor features or USB features. Seriously there was a DVI Dual Link with USB spec so you could legitimately use a single cable with screws to prevent accidental disconnects to connect your computer to all of your office peripherals, but instead we had to wait for Thunderbolt to recreate those features but worse and more likely to drop out

DVI – sure, but if you think 1080p over VGA looks perfect you should probably get your eyes checked.

I wouldn’t be surprised if it varies by monitor, but I’ve encountered plenty of monitors professionally where I could not tell the difference between the VGA and HDMI inputs. I can absolutely tell when a user has one of the cheap adapters in the mix, though, and I generally make a point of getting those adapters out of production as much as possible: not only do they noticeably fuck with the signal (most users don’t care, but I can see it at least), they also tend to fail and create unnecessary service calls.

We are still using VGA on current gen installations for the primary display on the most expensive patient monitoring system products in the world. If there’s a second display it gets displayport. 1080p is still the max resolution.

My dude, VGA is ANALOG it absolutely cannot run a LCD and make it look right, it is for CRTs

The little people inside the LCD monitor can absolutely turn the electricity into numbers. I’ve seen them do it.

This is incorrect. Source: do it all the time for work.

Remember? I still use it for my second monitor. My first interaction with DVI was also on that monitor, probably 10-15 years ago at this point. Going from VGA to DVI-D made everything much clearer and sharper. I keep using this setup because the monitor has a great stand and doesn’t take up much space with its 4:3 aspect ratio. 1280x1024 is honestly fine for having voice chat, Spotify, or some documentation open.

Hell yeah. My secondary monitor is a 1080p120 shitty TN panel from 2011. I remember the original stand had a big “3D” logo because remember those NVIDIA shutter glasses?

Connecting it is a big sturdy DVI-D cable that, come to think of it, is older than my child, my cars, and any of my pets.

Remember it? I work on PCs with DVI connected monitors every day.

Hell, I still use VGA for my work computer. I have the display port connected to the gaming laptop, and VGA connected to the work CPU. (My monitors are old, and I don’t care)

> My monitors are old, and I don’t care

Sung to the tune of Jimmy crack corn.

My monitor is 16 years old (1080p and that’s enough for me), I can use dvi or HDMI. The HDMI input is not great when using a computer with that specific model.

So I’ve been using DVI for 16 years.

I ran DVI for quite a while until my friend’s BenQ was weirdly green over HDMI and no amount of monitor menu would fix it. So we traded cords and I never went back to DVI. I ran DisplayPort for a while when I got my 2080ti, but for some reason the proprietary Nvidia drivers (I think around v540) on Linux would cause weird diagonal lines across my monitor while on certain colors/windows.

However, the previous version drivers didn’t do this, so I downgraded the driver on Pop!_OS which was easy because it keeps both the newest and previous drivers on hand. I distrohopped to a distro that didn’t have an easy way to rollback drivers, so my friend suggested HDMI and it worked.

I do miss my HDMI to DVI though. I was weirdly attached to that cord, but it’d probably just sit in my big box of computer parts that I may need… someday. I still have my 10+ VGA cords though!

Yeah, it was a weird system. Just like today’s USB-C, it could support different things.

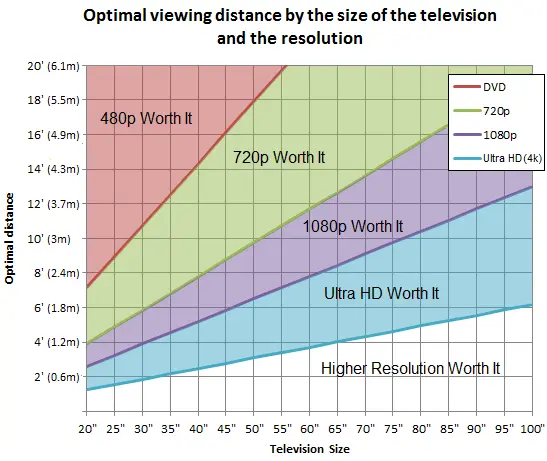

I mean, it could… but if you run the math on a 4k vs an 8k monitor, you’ll find that for most common monitor and tv sizes, and the distance you’re sitting from them…

It basically doesn’t actually make any literally perceptible difference.

Human eyes have … the equivalent of a maximum resolution, a maximum angular resolution.

You’d have to have literally superhuman vision to be able to notice a difference in almost all space scenarios that don’t involve you owning a penthouse or mansion, it really only makes sense if you literally have a TV the size of an entire wall of a studio apartment, or use it for like a Tokyo / Times Square style giant building wall advertisement, or completely replace projection theatres with gigantic active screens.

This doesn’t have 8k on it, but basically, buying an 8k monitor that you use at a desk is literally completely pointless unless your face is less than a foot away from it, and it only makes sense for like a TV in a living room if said TV is … like … 15+ feet wide, 7+ feet tall.
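For anyone who wants to run the math themselves, here is a minimal sketch. It assumes a 16:9 panel and the commonly cited acuity limit of about 1 arcminute per pixel for 20/20 vision; the function names are made up for illustration, and real eyes and real content will vary.

```python
import math

# Assumption: roughly 1 arcminute is the smallest detail a 20/20 eye resolves.
ARCMINUTE_RAD = math.radians(1 / 60)


def screen_width_in(diagonal_in, aspect=(16, 9)):
    """Width of a flat panel in inches, given its diagonal and aspect ratio."""
    w, h = aspect
    return diagonal_in * w / math.hypot(w, h)


def max_resolving_distance_in(diagonal_in, horizontal_pixels, aspect=(16, 9)):
    """Distance (inches) beyond which one pixel subtends less than 1 arcminute."""
    pixel_pitch = screen_width_in(diagonal_in, aspect) / horizontal_pixels
    return pixel_pitch / math.tan(ARCMINUTE_RAD)


for name, pixels in (("4K", 3840), ("8K", 7680)):
    distance = max_resolving_distance_in(27, pixels)
    print(f"27-inch {name}: single pixels stop being resolvable beyond ~{distance:.1f} in")
```

Under those assumptions a 27-inch 4K panel stops gaining visible detail at roughly 21 inches, and an 8K panel of the same size only pays off inside about 10.5 inches, which lines up with the "less than a foot" estimate.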

Yes. This. Resolution is already high enough for everything, except maybe wearables (i.e. VR goggles).

HDMI 2.1 can already handle 8k 10-bit color at 60Hz and 2.2 can do 240Hz.

Commercial digital cinema projectors aren’t even 8K. And movies look sharper than ever. Only 70mm IMAX looks better and that’s the equivalent of 8K to 12K, but IMAX screens (the real ones, not digital LieMAX) are gigantic.

Also screen technology has advanced faster than the rest of the pipeline. Making a movie in a full 8K pipeline would be way too expensive. It’s only since recent years that studios upgraded to a complete 4K pipeline from camera to final rendered out image including every step in between. And that’s mostly because Netflix forced studios to do so.

True native non upscaled 8K content won’t be here for a long while.

This is the most “um acktually” of um actualities but…

For the Apollo 11 documentary that uses only 1969 audio/video, they built a custom scanner to digitally scan the 70mm film, with the intention that the originals will never need to be touched again, at least in their lifetime, and it’s ~16k resolution.

Granted I don’t think you can get that version anywhere? But it exists.

Super cool doc by the way. Really surreal seeing footage that old at modern film quality. The documentary about the documentary is also really interesting.

Yo dawg, I heard you like documentaries.

👈😎👈

The one about Peter Jackson making They Shall Not Grow Old is also neat.

Apparently he collects WWI artillery and they used his private collection for the sound recording.

While it is pretty subtle, and colour depth and frame rate are easily way more important, I can easily tell the difference between an 8k and a 4k computer monitor from usual seating position. I mean it’s definitely not enough of a difference for me to bother upgrading my 2k monitor 😂, but it’s there. Maybe I have above average vision though, dunno though: I’ve never done an eye test.

Well, i have +1.2 with glasses (which is a lot) and i do not see a difference between FHD (1920) and 4k on a 15" laptop. What i did notice though, background was a space image, the stars got flatter while switching to FHD. My guess is, the Windows driver of Nvidia tweaks Gamma & light, to encourage buying 4k devices, because they needed external GPU back then. The colleague later reported that he switched to FHD, because the laptop got too hot 😅. Well, that was 5 years ago.

30 inch laptop? Is that a typo or does somebody actually make one that big?

We’re bringing back briefcase computers!

Right, brainfart. I didn’t measure it but it was largeish but not giant. Let’s say 15".

Interesting: in that sense 4k would have slightly better brightness gradation: if you average the brightness of 4 pixels there’s a lot more different levels of brightness that can be represented by 4 pixels vs 1, which might explain the difference perceived even when you can’t see the individual pixels.

The maximum and minimum brightness would still be the same though, so wouldn’t really help with the contrast ratio, or black levels, which are the most important metrics in terms of image quality imo.
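A quick way to check the "more levels from 4 pixels" idea is to count the distinct averages directly. This is only a sketch: it assumes 8 bits per pixel and a plain arithmetic mean, and it ignores gamma and how the eye actually blends neighbouring pixels. The function name is hypothetical.

```python
def averaged_levels(pixels_per_group, bit_depth=8):
    """Count distinct mean brightness values a group of pixels can produce."""
    max_value = 2 ** bit_depth - 1
    sums = {0}
    for _ in range(pixels_per_group):
        # Every reachable sum can be extended by any single-pixel value.
        sums = {s + v for s in sums for v in range(max_value + 1)}
    # Each distinct sum corresponds to exactly one distinct average.
    return len(sums)


print(averaged_levels(1))  # one pixel on its own
print(averaged_levels(4))  # a 2x2 block averaged together
```

With these assumptions one pixel gives 256 levels and a 2x2 block gives 1021, while the darkest and brightest values (0 and 255) stay exactly where they were.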

That graph is fascinating, thank you!

I don’t think it’s correct though.

The graph suggests that you should be looking at a 65-inch screen from a distance of 120 cm for resolutions above 4k to be worth it. I interpret that as the distance at which the screen-door effect becomes visible, so this seems awfully close actually.

A 65-inch screen has a width of 144 cm, which gives you a 100 degree angle of vision from the left edge to the right edge of the screen. Divide the approximate horizontal resolution of 4000 pixels by 100 and you get an angular pixel density of 40 PPD (Pixels Per Degree).

However for the pixel gaps to become too small to be seen or in other words for the screen-door effect to disappear, you need an angular pixel density of 60 PPD. That means you can sit at a more reasonable distance of 220 cm in front of a 65-inch screen for resolutions above 4k to be worth it.

This is still too close for comfort though, given that the resulting horizontal angle of vision is 66 degrees. The THX cinema standard recommends a horizontal viewing angle of 40 degrees.

So multiply 40 degrees by 60 pixels per degree to get a horizontal resolution of 2400 pixels. That means the perfect resolution for TVs is actually QHD.

There’s obviously truth to there being diminishing returns, but this chart is garbage.
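For anyone checking these figures, here is a small sketch of the viewing-angle and PPD arithmetic. It assumes a flat 16:9 screen viewed head-on from its centre and uses the standard formula angle = 2·atan(width / (2·distance)); PPD is averaged over the whole width, as in the division above. Because half the width goes into the arctangent, the angles it prints come out smaller than the ones quoted above (about 62 degrees at 120 cm), so treat it as a cross-check rather than a restatement.

```python
import math


def screen_width_cm(diagonal_in, aspect=(16, 9)):
    """Width of a flat panel in centimetres from its diagonal in inches."""
    w, h = aspect
    return diagonal_in * 2.54 * w / math.hypot(w, h)


def horizontal_fov_deg(width_cm, distance_cm):
    """Horizontal angle the screen subtends for a viewer centred in front of it."""
    return math.degrees(2 * math.atan(width_cm / 2 / distance_cm))


def average_ppd(horizontal_pixels, fov_deg):
    """Pixels per degree, averaged over the full horizontal field of view."""
    return horizontal_pixels / fov_deg


width = screen_width_cm(65)
for distance in (120, 220):
    fov = horizontal_fov_deg(width, distance)
    print(f"{distance} cm: {fov:.1f} deg, {average_ppd(3840, fov):.0f} PPD at 4K")

# The last step of the argument: pixels needed for a 40 degree field at 60 PPD.
print(40 * 60)
```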

> That means the perfect resolution for TVs is actually QHD.

And yet QHD would be comparatively awful for modern content. 720p scales nicely to QHD, but 1080p does not. I suspect that’s why 4K has been the winner on the TV front, it scales beautifully with both 1080p (4:1) and 720p (9:1) content.
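The scaling point is easy to verify: a source scales cleanly when the panel height is a whole multiple of the source height. A minimal sketch, with hypothetical helper names and the usual line counts (720, 1080, 1440, 2160) taken as given:

```python
from fractions import Fraction


def scale_factor(source_height, panel_height):
    """Linear scale factor needed to fill the panel, as an exact fraction."""
    return Fraction(panel_height, source_height)


def scales_cleanly(source_height, panel_height):
    """True when every source pixel maps onto a whole block of panel pixels."""
    return scale_factor(source_height, panel_height).denominator == 1


for panel_name, panel in (("QHD", 1440), ("4K", 2160)):
    for source in (720, 1080):
        factor = scale_factor(source, panel)
        kind = "integer" if scales_cleanly(source, panel) else "fractional"
        print(f"{source}p on {panel_name}: x{factor} per axis ({kind})")
```

A per-axis factor of 2 means 4 panel pixels per source pixel and a factor of 3 means 9, which is where the 4:1 and 9:1 figures come from; 1080p on QHD needs 4/3, so pixels have to be interpolated.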

Btw, https://en.m.wikipedia.org/wiki/Angular_resolution

And a 4k TV would already have to be, what, 2 m diagonal at the usual viewing distance of 3 m+.

doesn’t this suggest that my 27" monitor I sit a foot away from should just be 480p? that seems a little noticeable to me

I think you got this wrong. If you sit 0.3 m away, more than 4k is worth it.

you’re right lol. at first glance I thought the y axis was in inches not feet. makes sense now lol

I think you’re misreading ’ as "

If it’s 27" and 1’ away that puts it into the highest resolution category

indeed! im a computer science student who can’t read a graph, that’s tough haha

DVI is the Gen X of video connectors

Where do my boys Component and S-Video end up?

in my box of cables, for one!

Me: eagerly digs through a box of old AV cables to see if he has a GameCube component cable

Me only finding SVideo: ☹️

The good news is that you can get third party ones now. The better news is that you can get fully digital hdmi adapters that output over hdmi with no analog conversion in the middle.

S-Video is second from the left.

What did those poor cables do to you to get the Liam Neeson treatment?

They were sitting in a drawer doing nothing.

Now they bring joy to people again.

Ah, so they are for BDSM?

They are impact toys, yes.

Avast ye timbers, themses some fine ropes ya got thar

Always be appreciatin’ a ship hand that can tie good knots.

What’s that one on the right? Kinda looks like svideo, but I can’t tell from that angle.

They’re control connectors from an American DJ CD player+mixer set.

Sploosh

G-go on…

The third one is modeled after a working cattle whip I saw in South America (that one was leather ofc).

That’s actually really cool

With all the rest of the A/V cables, not the computer ones

I remember finding 10 foot combo Toslink S-video cables at Radio Shack for like $5. I bought 3 of them and ripped the S-Video off because the Toslink cable was all I was after. I still think it’s better than most other cables for audio.

HDMI ARC is technically better now

Yeah, ARC is pretty convenient, I use it as well.

My monitors only have DVI and VGA inputs. I’ve had to get adapters to use them with more modern equipment.

I’ve got no reason to replace them. They’re perfectly fine monitors. I’ve had them for over a decade, and I still like them a lot.

nobody noticed dark side of the moon cover on the background

It’s a woke poster. /s

VGA was just analog, it wasn’t because the resolutions supported weren’t HD.

100% right. I know it can handle 1920x1080 @ 60hz and it can handle up to 2048x1536

I remember my buddy getting a whole bunch of viewsonic CRTs from his dad who worked at a professional animation studio. They could do up to 2048×1536 and they looked amazing, but were heavy as fuck for lan parties lol. I loved that monitor though, when i finally ‘upgraded’ to an lcd screen it felt like a downgrade in a lot of ways except desk real estate.

ViewSonics were the BEST

Trinitron was better because it was flat.

Sony just used to make good quality electronics, in general.

…ish

It can handle way more than that can’t it? It’s analogue, I remember being able to set my crt to 120hz way back in the day.

I’ve seen some videos on YouTube of people overclocking their CRTs so definitely that must be a thing. And yes it is 100% analog.

I should get a new CRT.

Dude, I’ve been thinking about that too and they’re getting rare or difficult to find, at least in the USA. I found one at work with the Windows 2000 Login Screen burned in…

The meaning of high res wasn’t changed, though. Also, VGA was able to output Full HD as well.

My old 19" CRT monitors could do QXGA [2048x1536] (or maybe even QSXGA [2560x2048], though I think I skipped that setting because it made the text too small in an era before DPI-independent GUIs) through their VGA connections, which is more than “Full HD.”

When I switched to flat-panel displays close to two decades ago it was a downgrade in resolution, which I only made up for less than a year ago when I finally upgraded my 1080p LCDs to 1440p ones.

But no sound, that was one of the advantages of hdmi over vga

Wait, how is losing my independent speakers and having an audio setup not instantly compatible with my CD player, VCR, and my Victrola an upgrade?

You aren’t losing that unless you throw away all your cords. For most cases, fewer cords for the same result is good.

Why would you lose that? It has the ability to carry sound but it doesn’t need to.

Your classic VGA setup will probably be connected to a CRT monitor, which among other things has zero lag, and therefore running your sound separately to your audio setup, which also has zero lag, will be fine. Audio and video are in sync.

HDMI cables will almost certainly be connected to a flatscreen of some kind. Monitors tend to have fairly low lag, but flatscreen TVs can be crazy. Some of them have “game” mode (or similar) but as for the rest, they might have half-a-second or more of image processing before actually displaying anything. Running sound separately will have a noticeable disconnect between audio and video; drives me crazy although some people don’t notice it. You would connect your audio setup to the TV rather than directly to source to correct this.

Now, the fact that a lot of cheap TVs only have a 3.5mm headphone jack to “send on the sound” is annoying to me, too. A lot of people just don’t care about how things sound and therefore it’s not a commercial priority. Optical digital audio output would be ideal, in that cheap audio circuitry inside the television won’t degrade the sound being passed over HDMI and you can use your own choice of DAC, but they can be both expensive and add a bit of lag as well.

Lossless transmission over longer distances

It already happened to them multiple times that’s why we are on HDMI 2.2 which can go up to:

7680 × 4320 @ 60FPS

HDMI 1.0 could only reach:

1920 x 1080 @ 60FPS

The only reason it still works is because they keep changing the specifications.

And I think I can confidently say we will never need more than 8K since we are reaching the physical limits of the human eye or at the very least it will never be considered low resolution.
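To put rough numbers on that jump, here is a sketch of the uncompressed data rate for each mode. It counts active pixels only, so blanking intervals and link encoding overhead are ignored, and the per-version link rates in the table are the commonly quoted maximums, included here as assumptions rather than spec quotations.

```python
def raw_video_gbps(width, height, fps, bits_per_channel=8, channels=3):
    """Uncompressed active-pixel data rate in gigabits per second."""
    return width * height * fps * bits_per_channel * channels / 1e9


# Assumption: commonly quoted maximum link rates in Gbit/s.
LINK_RATE_GBPS = {"HDMI 1.0": 4.95, "HDMI 2.1": 48.0, "HDMI 2.2": 96.0}

modes = {
    "1920x1080 @ 60, 8-bit": raw_video_gbps(1920, 1080, 60),
    "7680x4320 @ 60, 8-bit": raw_video_gbps(7680, 4320, 60),
    "7680x4320 @ 60, 10-bit": raw_video_gbps(7680, 4320, 60, bits_per_channel=10),
}

for mode, gbps in modes.items():
    # Versions whose quoted link rate exceeds the raw requirement.
    fits = [name for name, rate in LINK_RATE_GBPS.items() if rate > gbps]
    print(f"{mode}: ~{gbps:.1f} Gbit/s raw, under the link rate of: {', '.join(fits)}")
```

By this estimate 1080p60 needs about 3 Gbit/s and 8K60 at 10-bit needs about 60 Gbit/s, a factor of twenty, which is why the same connector has needed several spec revisions (or compression and chroma subsampling) to keep up.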

I can see more than 8k being useful but not really for home use. Except possibly different form factors like VR, not sure on that one either though.

Na, when you go any higher in commercial use you just use multiple cables. HDMI 2.2 can only reach 10ft as it is; if you lower the bandwidth it can go farther. I could see an optical video standard for commercial use though.

Maybe, but I could see thunderbolt replacing HDMI and display port over time. It can carry video, audio, data, and power simultaneously, and has more bandwidth for additional information like HDR or 3D.

USB can do that as well, using Displayport alt mode. And it doesn’t need Intel’s fees.

Also, 3D. LOL

Same cable, same port, I don’t really care what it’s called if the capabilities are the same.

3D is important for stuff like VR, there’s a reason it’s the predominant cable for headsets.

It’s REALLY important for adoption. An open standard is far more likely to be used.

But can it spy on users for marketing purposes? That’s the real question.

/s

Yes? Just add a usb rubber ducky

VGA should have released VGA2.1 that is what all the cool display cables do

Then a month later VGA 2.1B. After that VGA 3.0 Fullspeed and 3.0 Highspeed, to make things “less confusing.”

Names are useful, I use fast Ethernet when connecting a server because it’s fast.

I don’t think you’ll need much more than 8k 144hz. Your eyes just can’t see that much. Maybe the connector will eventually get smaller, such as USBC?

USB-C is already a plug standard for display port over thunderbolt. Apple monitors use this to daisy chain monitors together.

But it’s DP implemented within USB, vs an actual DP. There’s a latency there which might matter for online realtime gaming

The USB-C is just the connector. The cables are thunderbolt. Thunderbolt 3 and 4 are several times the speed of USB 3.2. No latency issue.

Yeah but there are still signal processing steps required to tell both ends of the connector which pins to use to implement the DisplayPort protocol.

- DP cable? Connection is direct from GPU → Screen.

- DP through USB? Connection has to go through the USB bus on the machine → Screen.

Let’s hope that bus is dedicated, and not overloaded with other USB tasks!

> Let’s hope that bus is dedicated

It is. That’s always been one of the key features of thunderbolt. There’s no negotiation or latency involved after you plug it in. It just works.

Right, the pins just automatically know which to use without any negotiation from the USB bus. Come on.

Edit: Your reaction to this friendly debate is, for lack of a better word, hilarious. The fact that I’ve been upvoting your responses out of mutual respect, and that you’ve been clearly downvoting mine speaks volumes.

Just because you don’t know how any of this works, don’t lash out at me in anger.

Blocked

640k of RAM should be enough for anybody

You think that’s a clever analogy but it’s not even close.

Well, I don’t know much about the resolving power and maximum refresh rate of human vision but I’m guessing that the monitor they described is close to the limit.

The analogy refers to someone who has their thinking constrained to the current situation. They didn’t imagine that computers would become resource-intensive multimedia machines, just as this person suggests the cable wouldn’t be asked to carry more data than would be necessary for the 8k monitor.

I can imagine a scenario with dual high resolution screens, cameras and location tracking data passing through a single cable for something like a future VR headset. This may end up needing quite a bit more data throughput than the single monitor–and that isn’t even thinking outside the box. That’s still the current use case.

Do you have a crystal ball over there? I still think it’s a clever analogy.

Well then maybe you should read the comment you replied to again? They did not talk about how much data the cable would need. They even hypothesized that the cable format might even change. The meme talks about defining hd and they commented that 8k would be enough. Human eyes will not magically get more resolving so yeah, your analogy is still bad.

I do disagree on the Hz though. It would indeed be nice if we got 8k@360hz at some point in the future but that’s not resolution so I’ll let it slide.

Fair enough.

Just be sure to keep lubricated while you permit all that sliding.

I can see a clear difference between 144hz and 240hz, so even that part isn’t right

And I haven’t used an 8K monitor, but I’m confident I could see a difference as well

Yeah, frequency might go a bit higher. But I doubt many people could tell the difference between 8k and 16k.

I still use VGA

laughs in bnc

Supposedly 0-4 GHz passband and can carry 500 V. No idea what that translates to in terms of resolution/framerate, only that it’s A Lot. Biggest downside is that it’s analog.

Edit: for comparison, iirc my CRT monitor runs somewhere around 20~30 kHz for a max of 1280x1024@75hz. I may be comparing apples to oranges here (I’m still learning about analog connectors, how analog video works, etc), buuuuut that suggests that a BNC connector running at its highest rated output would potentially be able to run some fairly large displays.
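For a rough idea of what an analog mode asks of the cable, the two numbers to look at are the line rate (how many scanlines per second) and the pixel clock. A sketch, assuming a total raster of 1688 x 1066 including blanking for 1280x1024@75 (a commonly listed timing for that mode, used here as an assumption and not read from any particular monitor):

```python
def crt_timing(h_total, v_total, refresh_hz):
    """Return (line rate in Hz, pixel clock in Hz) for an analog video mode.

    h_total and v_total are the full raster size including blanking,
    not just the visible resolution.
    """
    line_rate_hz = v_total * refresh_hz
    pixel_clock_hz = h_total * line_rate_hz
    return line_rate_hz, pixel_clock_hz


# Assumed totals for 1280x1024 @ 75 Hz: 1688 x 1066 including blanking.
line_rate, pixel_clock = crt_timing(1688, 1066, 75)
print(f"line rate   ~{line_rate / 1e3:.1f} kHz")
print(f"pixel clock ~{pixel_clock / 1e6:.0f} MHz")
```

With those assumed totals the line rate lands near 80 kHz and the pixel clock near 135 MHz. The pixel clock is the figure to weigh against a connector's passband, and it sits far below a multi-GHz rating.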

Nah, not only analog.

SDI - with 24G SDI being the latest standard, able to carry up to 24 Gbps - is alive and well.

I hope HDMI dies off in favour of DisplayPort. We need fewer proprietary standards in the world.